I'm trying to implement deferred rendering. I've been drawing diffuse, depth, and normals into `_renderTargetList[0]`, `_renderTargetList[1]`, and `_renderTargetList[2]`.

This is the render code:

```cpp
void Renderer::renderLight()
{
    // Save the currently bound render target and depth-stencil view so they can be restored afterwards.
    // OMGetRenderTargets AddRefs the views it returns, so they are released at the end.
    ID3D11RenderTargetView *pRenderTargetView = nullptr;
    ID3D11DepthStencilView *pDepthStencilView = nullptr;
    g_pGraphicDevice->getContext()->OMGetRenderTargets(1, &pRenderTargetView, &pDepthStencilView);

    //-------------------------------------------------------------------------------------------------------------------------------------------------------------

    g_pGraphicDevice->getContext()->ClearRenderTargetView(_lightTarget->getRenderTargetView(), reinterpret_cast<const float *>(&Colors::Black));
    g_pGraphicDevice->getContext()->ClearDepthStencilView(pDepthStencilView, D3D11_CLEAR_DEPTH | D3D11_CLEAR_STENCIL, 1.f, 0u);

    // Render the light pass into _lightTarget, with no depth-stencil view bound.
    ID3D11RenderTargetView *pLightRenderTargetView = _lightTarget->getRenderTargetView();
    g_pGraphicDevice->getContext()->OMSetRenderTargets(1u, &pLightRenderTargetView, nullptr);
    g_pGraphicDevice->getContext()->PSSetShader(g_pShaderManager->getPixelShader(TEXT("PointLightShader.cso")), nullptr, 0);

    // Fetch the G-buffer depth and normal SRVs used by the light shader.
    ID3D11ShaderResourceView *pDepth = _renderTargetList[1]->getShaderResouceView();
    ID3D11ShaderResourceView *pNormal = _renderTargetList[2]->getShaderResouceView();

    for (auto iter = _lightComponentList.begin(); iter != _lightComponentList.end(); ++iter)
        (*iter)->Render();

    // Restore the original targets, then drop the references added by OMGetRenderTargets.
    g_pGraphicDevice->getContext()->OMSetRenderTargets(1, &pRenderTargetView, pDepthStencilView);
    if (pRenderTargetView) pRenderTargetView->Release();
    if (pDepthStencilView) pDepthStencilView->Release();
}
```

Now I want to draw a point light, so I made `_lightTarget` and `PointLightShader`.
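`renderLight()` fetches `pDepth` and `pNormal`, but the call that binds them is not in the posted code (it may happen inside `(*iter)->Render()`). Shader resource views reach the shader by slot, not by name, so a minimal sketch of binding both G-buffer textures to fixed slots might look like the following. The slot numbers, `kDepthSlot`/`kNormalSlot`, and `setGBufferInputs` are hypothetical; `Context` is meant to be `ID3D11DeviceContext` and `SRV` to be `ID3D11ShaderResourceView`, and the function is a template only so the sketch compiles without `d3d11.h`.

```cpp
#include <cassert>

// Hypothetical slot convention: it must match register(t0) / register(t1)
// in the light pixel shader.
constexpr unsigned kDepthSlot = 0u;
constexpr unsigned kNormalSlot = 1u;
static_assert(kNormalSlot == kDepthSlot + 1u,
              "depth and normals are bound as one contiguous range");

// Binds the G-buffer depth and normal views to the pixel shader in one call.
// Context is intended to be ID3D11DeviceContext and SRV to be
// ID3D11ShaderResourceView; the template only keeps this sketch free of d3d11.h.
template <typename Context, typename SRV>
void setGBufferInputs(Context* ctx, SRV* depth, SRV* normal)
{
    SRV* views[2] = { depth, normal }; // views[0] -> kDepthSlot, views[1] -> kNormalSlot
    ctx->PSSetShaderResources(kDepthSlot, 2u, views);
}
```

In `renderLight()` this would be called as `setGBufferInputs(g_pGraphicDevice->getContext(), pDepth, pNormal);` before the light loop, assuming `getContext()` returns an `ID3D11DeviceContext*`. Calling it again with null views afterwards (for example `setGBufferInputs<ID3D11DeviceContext, ID3D11ShaderResourceView>(ctx, nullptr, nullptr)`) unbinds the G-buffer, because D3D11 does not let the same resource be bound as a render target and as a shader input at the same time (the runtime unbinds the conflicting view and the debug layer warns).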

When I draw the point light, it doesn't work correctly. While debugging, I found that only `g_Depth` is being sampled: even though I set both the depth and normal textures and sample each of them with the same UV, the value I read from `g_Normals` is actually the depth texture.

This is PointShader.hlsl:

Texture2D g_Depth;

Texture2D g_Normals;

SamplerState g_Sampler;

struct PixelIn

{

float4 pos : SV_POSITION;

float2 uv : TEXCOORD0;

float3 normal : NORMAL0;

float3 tangent : NORMAL1;

float3 binormal : NORMAL2;

};

cbuffer CBuffer

{

float4 g_lightPosition;

float4 g_lightColor;

row_major float4x4 g_inverseCameraViewMatrix;

row_major float4x4 g_inverseProjectiveMatrix;

};

float4 main(PixelIn pIn) : SV_TARGET

{

//PixelOut pOut;

// uv (0 <= x, y <= 1) to proj (-1 <= x, y <= 1)

//float4 projectPosition = float4(0.f, 0.f, 0.f, 0.f);

//projectPosition.x = ((2.f * pIn.uv.x) - 1.f);

//projectPosition.y = ((-2.f * pIn.uv.y) + 1.f);

//projectPosition.z = (1.f - g_Depth.Sample(g_Sampler, pIn.uv).r);

//projectPosition.w = 1.f;

// not important!

...

float4 depth = g_Depth.Sample(g_Sampler, pIn.uv);

float4 normal = g_Normal.Sample(g_Sampler, pIn.uv);

return normal; // sampled from g_Depth ???

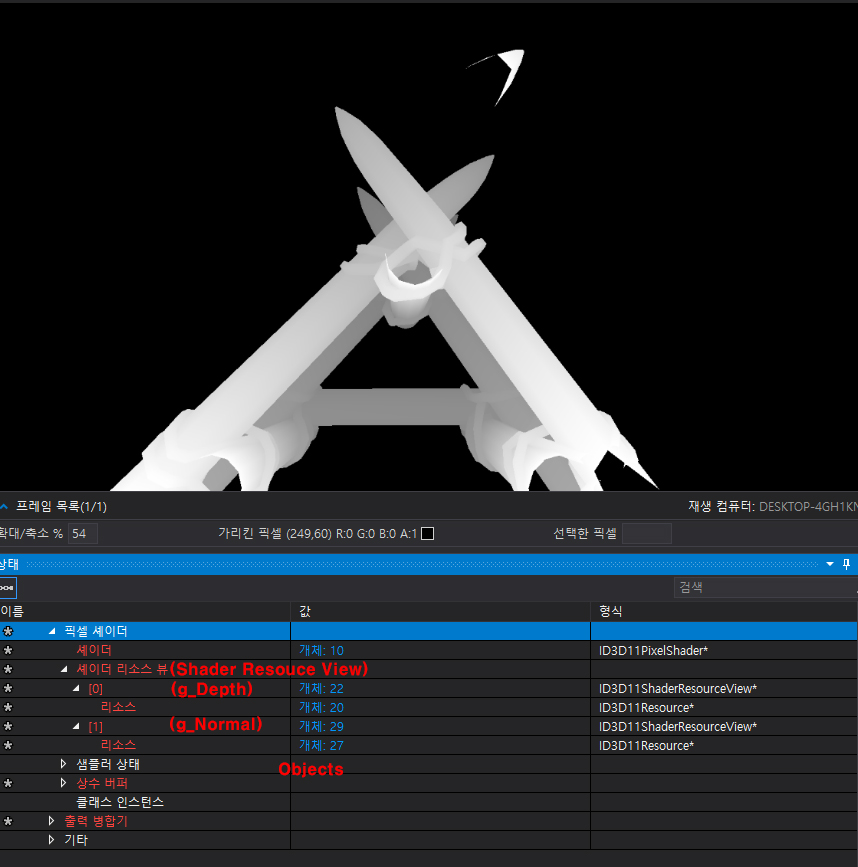

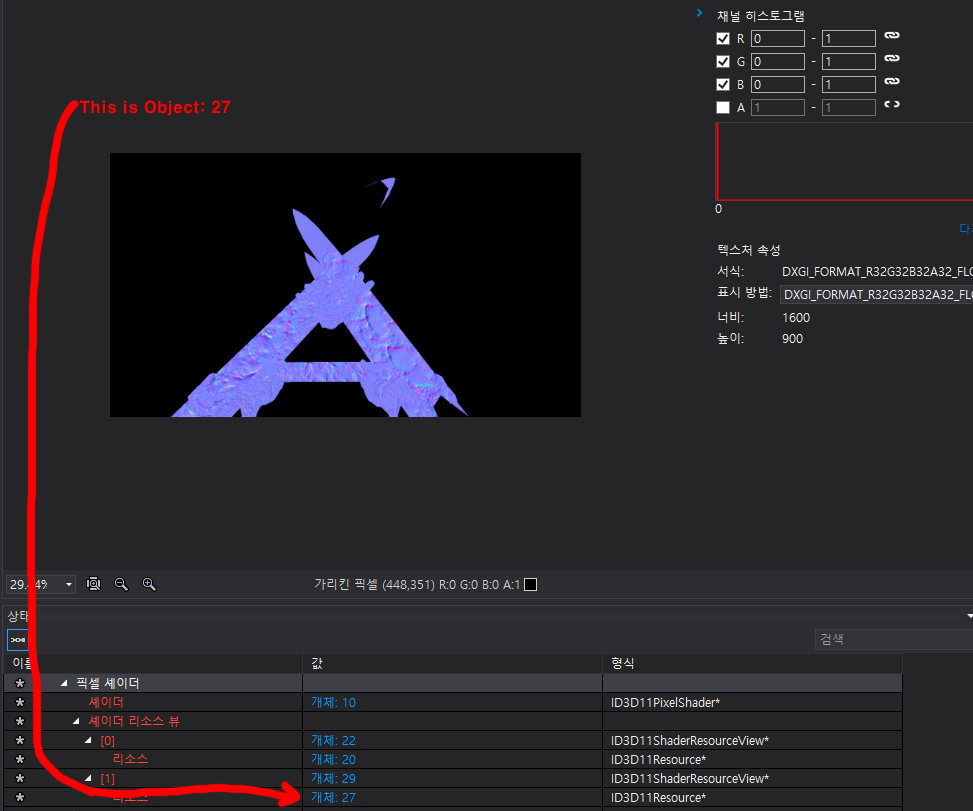

}this is result
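The commented-out lines in `main` map the pixel's UV to projection space (x = 2u - 1, y = 1 - 2v), presumably before multiplying by `g_inverseProjectiveMatrix`. As a CPU-side sanity check of that math, here is a minimal C++ sketch under stated assumptions: row vectors multiplied as `v * M` (matching `row_major` matrices used with `mul(v, M)`), a left-handed perspective matrix laid out like `XMMatrixPerspectiveFovLH`, and a depth value that is already the standard post-projection z (the shader's `1.f - depth` suggests a reversed convention, which would need adjusting). `uvToNdc`, `inversePerspectiveLH`, and `ndcToView` are hypothetical helpers.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>; // used with row vectors: v * M

// Row vector times matrix, like HLSL mul(v, M) with row_major matrices.
Vec4 mulRow(const Vec4& v, const Mat4& m)
{
    Vec4 r{0.f, 0.f, 0.f, 0.f};
    for (int j = 0; j < 4; ++j)
        for (int i = 0; i < 4; ++i)
            r[j] += v[i] * m[i][j];
    return r;
}

// Same mapping as the commented-out shader code: u in [0,1] -> x in [-1,1],
// v in [0,1] -> y in [1,-1] (texture v grows downward, NDC y grows upward).
Vec4 uvToNdc(float u, float v, float ndcDepth)
{
    return Vec4{2.f * u - 1.f, -2.f * v + 1.f, ndcDepth, 1.f};
}

// Analytic inverse of a left-handed perspective matrix with xScale, yScale on
// the diagonal, zf/(zf-zn) and 1 in the third row, -zn*zf/(zf-zn) in the fourth.
Mat4 inversePerspectiveLH(float xScale, float yScale, float zn, float zf)
{
    const float A = zf / (zf - zn);
    const float B = -zn * zf / (zf - zn);
    return Mat4{{
        {1.f / xScale, 0.f, 0.f, 0.f},
        {0.f, 1.f / yScale, 0.f, 0.f},
        {0.f, 0.f, 0.f, 1.f / B},
        {0.f, 0.f, 1.f, -A / B},
    }};
}

// Reconstruct a view-space position: multiply by the inverse projection,
// then divide by w to leave homogeneous coordinates.
Vec4 ndcToView(const Vec4& ndc, const Mat4& invProj)
{
    const Vec4 p = mulRow(ndc, invProj);
    return Vec4{p[0] / p[3], p[1] / p[3], p[2] / p[3], 1.f};
}
```

For example, with `xScale = yScale = 1`, `zn = 1`, `zf = 101`, the view-space point (0.5, -0.25, 2) lands at UV (0.625, 0.5625) with projected depth 0.505, and `ndcToView(uvToNdc(0.625f, 0.5625f, 0.505f), inversePerspectiveLH(1.f, 1.f, 1.f, 101.f))` recovers it. Multiplying the result by the inverse view matrix would then take it to world space.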

I used the graphics debugging tools and checked that the resources are bound correctly (object 27).
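One possible explanation, which is an assumption the post does not confirm: textures are matched to the shader by register slot, not by name. When `g_Depth` and `g_Normals` have no explicit `register(tN)`, the compiler places them itself, and because `depth` never contributes to the returned value, that sample and `g_Depth` itself can be optimized away, leaving `g_Normals` in `t0`, which is presumably the slot the depth texture is bound to. The C++ below is a deliberately simplified, hypothetical model of that placement, not the real compiler algorithm; `TextureDecl` and `assignSlots` are made-up names.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// Simplified, hypothetical model of t-register placement. It encodes only two
// assumptions: an explicit register(tN) pins a texture to tN, and a texture
// without one is dropped if nothing sampled from it reaches SV_TARGET;
// surviving implicit textures take the lowest free slots in declaration order.
struct TextureDecl {
    std::string name;
    bool reachesOutput;                   // does any sample of it affect the returned color?
    std::optional<unsigned> explicitSlot; // set when declared with register(tN)
};

constexpr std::size_t kSrvSlotCount = 128; // D3D11 exposes 128 SRV slots per stage

// Returns the t-register index of each declaration; -1 means an implicitly
// placed texture was optimized away and occupies no slot.
std::vector<int> assignSlots(const std::vector<TextureDecl>& decls)
{
    std::vector<int> slots(decls.size(), -1);
    std::vector<bool> taken(kSrvSlotCount, false);

    // Explicit registers are fixed first.
    for (std::size_t i = 0; i < decls.size(); ++i) {
        if (decls[i].explicitSlot) {
            slots[i] = static_cast<int>(*decls[i].explicitSlot);
            taken.at(*decls[i].explicitSlot) = true;
        }
    }

    // Remaining textures that survive optimization fill the lowest free slots.
    std::size_t next = 0;
    for (std::size_t i = 0; i < decls.size(); ++i) {
        if (decls[i].explicitSlot || !decls[i].reachesOutput)
            continue;
        while (taken.at(next))
            ++next;
        slots[i] = static_cast<int>(next);
        taken.at(next) = true;
    }
    return slots;
}
```

Under this model the posted shader gives `g_Depth` no slot and `g_Normals` slot 0, while declaring `Texture2D g_Depth : register(t0);` and `Texture2D g_Normals : register(t1);` keeps `g_Normals` in `t1` whether or not `g_Depth` is used, so it always reads whatever the application binds to slot 1.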

Can anybody explain what is happening here?