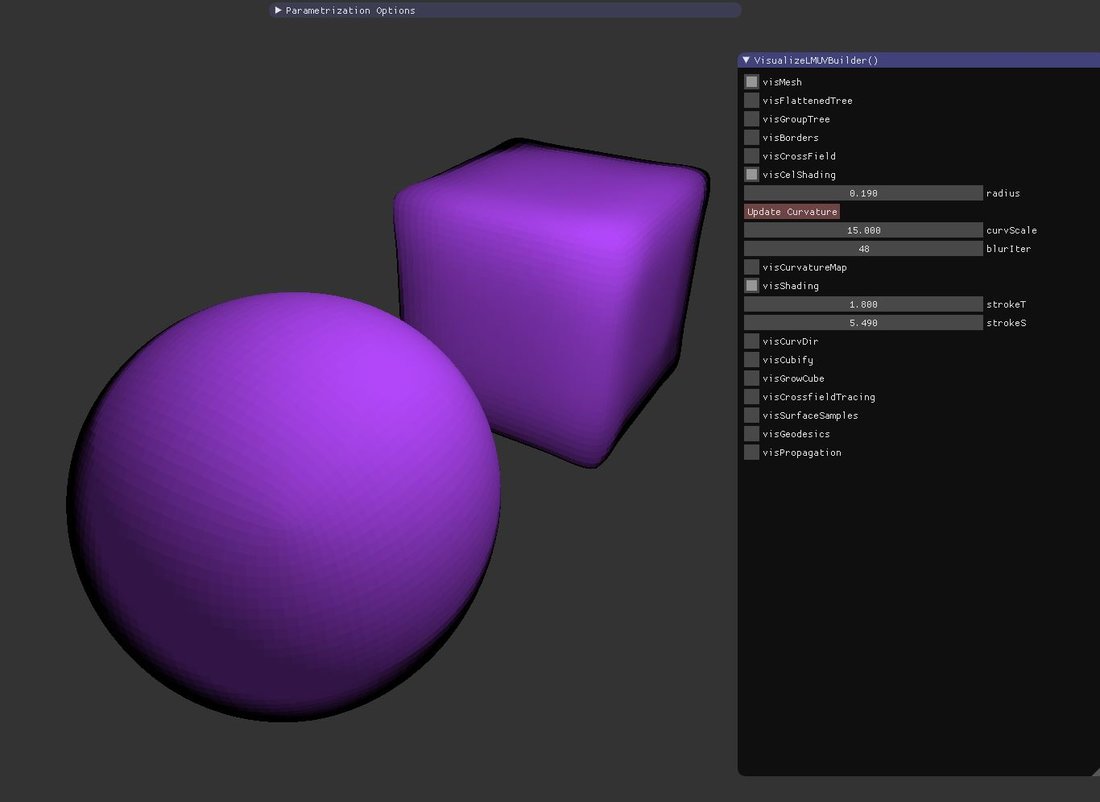

... got the idea to blur the normals, so flat and round shapes don't end up looking that different.
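`mesh.BlurVertexMap` isn't shown here. The following is a minimal sketch of one common way to blur per-vertex normals, assuming a plain one-ring average over an adjacency list. `Vec3`, `neighbours`, `blurOnce` and `blurNormals` are hypothetical names, not the mesh class used in the snippet. Each pass averages a vertex with its edge neighbours, repeated passes widen the smoothing, and the result is renormalized because averaging shortens the vectors.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal 3-component vector, standing in for the snippet's `vec` type (assumption).
struct Vec3 {
    float x, y, z;
};

static Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }

static Vec3 normalize(Vec3 a) {
    float len = std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z);
    return len > 0.0f ? scale(a, 1.0f / len) : a;
}

// One smoothing pass: each normal becomes the average of itself and its
// one-ring neighbours. `neighbours[v]` lists the vertices sharing an edge
// with v (hypothetical adjacency layout).
static std::vector<Vec3> blurOnce(const std::vector<Vec3>& normals,
                                  const std::vector<std::vector<int>>& neighbours) {
    std::vector<Vec3> out(normals.size());
    for (std::size_t v = 0; v < normals.size(); ++v) {
        Vec3 sum = normals[v];
        for (int n : neighbours[v]) sum = add(sum, normals[n]);
        out[v] = scale(sum, 1.0f / float(neighbours[v].size() + 1));
    }
    return out;
}

// Runs `passes` smoothing passes, then renormalizes every normal.
std::vector<Vec3> blurNormals(std::vector<Vec3> normals,
                              const std::vector<std::vector<int>>& neighbours,
                              int passes) {
    for (int i = 0; i < passes; ++i) normals = blurOnce(normals, neighbours);
    for (Vec3& n : normals) n = normalize(n);
    return normals;
}
```

Note that the snippet's loop calls `BlurVertexMap` twice per `blurIter`, so its default of 10 corresponds to about 20 passes in this sketch, if each call is one pass.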

Also, previously I set the eye position manually, which made the stroke on the sphere even wider.

It looks much better now, and there's no more popping under camera movement. But needing a second normal channel hurts, unless you'd accept that smooth look for everything.

Edit: The blurred normals do the whole trick now. The curvature map might not be necessary anymore.

```cpp
static bool visCelShading = false; ImGui::Checkbox("visCelShading", &visCelShading);
if (visCelShading)
{
    static float radius = 0.19f;
    ImGui::DragFloat("radius", &radius, 0.01f);

    // Per-vertex curvature; rebuilt on the first frame or when the button is pressed.
    static std::vector<vec> vertexCurvatureDirectionsBoth;
    static std::vector<float> vertexConeAngles;
    if (ImGui::Button("Update Curvature") || vertexConeAngles.empty())
    {
        mesh.BuildVertexCurvatureDirections (
            &vertexCurvatureDirectionsBoth, 0, 0,
            &vertexConeAngles, 0, 0,
            0, radius, mesh.mVertexNormals, mesh.mPolyNormals);
    }

    // Scalar curvature map: absolute cone angle, scaled.
    static float curvScale = 15.0f;
    ImGui::DragFloat("curvScale", &curvScale, 0.01f);
    std::vector<float> vertexMap;
    vertexMap.resize(mesh.mVertices.size());
    for (int i=0; i<mesh.mVertices.size(); i++)
    {
        //vertexMap[i] = vertexCurvatureDirectionsBoth[i].Length() * curvScale;
        vertexMap[i] = fabs(vertexConeAngles[i]) * curvScale;
    }

    // Note: blurredVertexMap is computed but not used; the poly map below
    // is built from the unblurred vertexMap.
    std::vector<float> blurredVertexMap;
    mesh.BlurVertexMap (blurredVertexMap, vertexMap);
    std::vector<float> polyCurvatureMap;
    mesh.VertexMapToPolyMap (polyCurvatureMap, vertexMap);

    // Blur the vertex normals; each iteration runs two passes (1 -> 2 -> 1).
    static int blurIter = 10;
    ImGui::DragInt("blurIter", &blurIter, 0.01f);
    std::vector<vec> blurredVertexNormals1 = mesh.mVertexNormals;
    std::vector<vec> blurredVertexNormals2;
    for (int i=0; i<blurIter; i++)
    {
        mesh.BlurVertexMap (blurredVertexNormals2, blurredVertexNormals1);
        mesh.BlurVertexMap (blurredVertexNormals1, blurredVertexNormals2);
    }

    // Transfer to polygons and renormalize (averaging shortens the vectors).
    std::vector<vec> smoothPolyNormals;
    mesh.VertexMapToPolyMap (smoothPolyNormals, blurredVertexNormals1);
    for (int i=0; i<smoothPolyNormals.size(); i++) smoothPolyNormals[i].Normalize();

    static bool visCurvatureMap = false; ImGui::Checkbox("visCurvatureMap", &visCurvatureMap);
    if (visCurvatureMap)
    {
        for (int i=0; i<mesh.mPolys.size(); i++)
        {
            float c = polyCurvatureMap[i];
            float col[3] = {c,c,c}; VisPolyFilled (mesh, i, col);
        }
    }

    static bool visShading = true; ImGui::Checkbox("visShading", &visShading);
    if (visShading)
    {
        vec lightPos (4,5,-3);
        // The manual eye position is disabled; eyePos must be defined outside
        // this snippet (presumably the camera position).
        //static vec eyePos (8,4,-2);
        //ImGui::SliderFloat3 ("eyePos", (float*)&eyePos, -10,10);

        static float strokeT = 0.2f; // stroke threshold (offset)
        ImGui::DragFloat("strokeT", &strokeT, 0.01f);
        static float strokeS = 2.0f; // stroke scale
        ImGui::DragFloat("strokeS", &strokeS, 0.01f);
        RenderPoint (eyePos, 1,1,1);

        for (int i=0; i<mesh.mPolys.size(); i++)
        {
            vec pos = mesh.mPolyCenters[i];
            //vec normal = mesh.mPolyNormals[i];
            vec normal = smoothPolyNormals[i];

            // Diffuse + ambient lighting.
            vec LightD = vec(pos - lightPos).Unit();
            float NdotL = max (0.0f, normal.Dot(LightD));
            float ambient = 0.4f;
            float rec = NdotL + ambient;

            // Stroke: darken where NdotE is small (near the silhouette), weighted by curvature.
            float strokefactor = max (0.0f, min (1.0f, polyCurvatureMap[i]));
            vec eyeD = vec(pos - eyePos).Unit();
            float NdotE = max (0.0f, normal.Dot(eyeD));
            NdotE = (NdotE*0.98f + NdotL + 0.02f); // wider stroke in shadow
            float tangF = 1.0f - sqrt(NdotE);
            float darken = max (0.0f, min (1.0f, tangF*strokeS - strokeT)) * strokefactor;

            vec diff (0.5f, 0.2f, 0.7f);
            diff *= (1.0f-darken);
            vec lit = diff * rec;
            VisPolyFilled (mesh, i, &lit[0]);
        }
    }
}
```
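For experimenting outside the editor, the per-polygon shading in the loop can be pulled out into a self-contained function. This is a sketch that mirrors the snippet's formula (same constants, same `pos - lightPos` / `pos - eyePos` direction convention), with a plain `Vec3` standing in for `vec`. `celShade`, `StrokeParams` and the split into `lightNormal` / `strokeNormal` are hypothetical additions meant to illustrate what a second normal channel would allow. Which normal's NdotL widens the stroke in shadow is a design choice; the sketch uses the lighting normal.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; }; // stand-in for the snippet's `vec` (assumption)

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 unit(Vec3 a) {
    float len = std::sqrt(dot(a, a));
    return len > 0.0f ? Vec3{a.x / len, a.y / len, a.z / len} : a;
}
static float clamp01(float v) { return std::min(1.0f, std::max(0.0f, v)); }

// Slider defaults from the snippet (hypothetical grouping).
struct StrokeParams {
    float strokeS = 2.0f;  // stroke scale
    float strokeT = 0.2f;  // stroke threshold (offset)
    float ambient = 0.4f;
};

// Returns the lit colour of one polygon. `lightNormal` drives diffuse lighting,
// `strokeNormal` drives the silhouette stroke; passing the same normal for both
// reproduces the snippet's single-channel behaviour.
Vec3 celShade(Vec3 pos, Vec3 lightNormal, Vec3 strokeNormal,
              Vec3 lightPos, Vec3 eyePos, float strokeFactor,
              const StrokeParams& p) {
    // Same convention as the snippet: directions point from light / eye to the surface.
    Vec3 lightD = unit(sub(pos, lightPos));
    Vec3 eyeD = unit(sub(pos, eyePos));
    float ndotl = std::max(0.0f, dot(lightNormal, lightD));
    float ndote = std::max(0.0f, dot(strokeNormal, eyeD));
    ndote = ndote * 0.98f + ndotl + 0.02f;  // raising NdotE in light gives a wider stroke in shadow
    float tangF = 1.0f - std::sqrt(ndote);  // large where NdotE is small
    float darken = clamp01(tangF * p.strokeS - p.strokeT) * clamp01(strokeFactor);
    float k = (1.0f - darken) * (ndotl + p.ambient);
    return {0.5f * k, 0.2f * k, 0.7f * k};  // base colour (0.5, 0.2, 0.7) from the snippet
}
```

To match the snippet, pass `smoothPolyNormals[i]` as both normals and `polyCurvatureMap[i]` as `strokeFactor` (it is clamped inside). Passing the faceted `mesh.mPolyNormals[i]` as `lightNormal` instead keeps sharp lighting while the stroke still follows the blurred normals, and `strokeFactor = 1` drops the curvature weighting entirely.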