Written by Erik Murphy-Chutorian, Founder and CEO of 8th Wall.

This story was previously published on The Next Web.

This year will be the year that we see the first applications designed for an AR-powered, mobile camera-first world. I’ve heard many discussions about how to accelerate AR development and adoption, but will everyone’s panacea be the cloud? While I believe cloud services will be essential to AR’s success and play a big role in its evolution, contrary to popular belief, cloud isn’t the gating factor. In my view, the roadblocks to AR adoption are around design, reach and instructing people how to rely on what AR can do.

Let’s take a step back and look at how interfaces have evolved. Computers have gained the ability to see and hear, and these virtual senses will usher in a new era of natural user interfaces. Touchscreens will soon be on the way out, in the same way that the keyboard and mouse are now. I see the writing on the wall.

We snap photos instead of texting, ask Alexa for our news and weather and can e-shop by seeing how products fit in our homes. As environmental understanding becomes more sophisticated on our phones, I believe user interfaces will start to interact with that environment. However, the cell phone is an accidental user interface for AR, and as such, we have to bridge the form factor and experience with something that is familiar to how people already use their phones.

Not everyone will use the term ‘AR’ to describe what is going on, but I trust that 2018 will be the year consumers experience AR, and it’s going to happen on their mobile phones. The best part of mobile AR? There’s nothing to strap on your face: just hold up a phone and open an app. Much more will need to happen before we give up our touchscreens. Contrary to previous discussion in the industry, though, a lack of specialized cloud services is not what’s holding back the transition to these camera-centric AR apps.

The big hurdle to overcome is getting acclimated to designing with the perspective of AR as the first medium, as opposed to a secondary or add-on channel or feature. AR-first design will be key to creating successful everyday apps for new, natural types of user interaction.

How did we get to mobile AR?

The hype around mobile AR began in September 2017 when Apple launched ARKit, now one of a handful of new software libraries that allow mobile developers to add augmented reality features to standard phone apps. These libraries offer virtual sensors that provide information about the environment and precisely how a phone is moving through it.

For mobile developers, it means an opportunity to be first to design and build new intuitive user experiences that can disrupt how we interact with our phones. In the same way that desktop websites were redesigned for a mobile-first world, we will soon see camera-enabled physical interactions become the norm for many types of apps, including ecommerce, communication, enterprise, and gaming.

Where are the killer apps?

People use their phones for email, news, communication, entertainment, shopping, navigation, gaming, and photography. Mobile AR isn’t going to change that. More likely, many of the killer AR apps will be the very same apps we already use today, after they have been redesigned for AR. Companies that are slow to embrace this technology will be ripe for disruption. It’s happening already.

Snapchat was first into the AR space and redefined how a younger generation communicates. Facebook and Google followed suit, and now Amazon, IKEA, Wayfair, and others are dipping their toes into the pool of AR. Niantic recently acquired a new AR start-up too. Can we hope to see the physical world merge with the Wizarding world? What startups will innovate where the incumbents are slow to change? Will 2018 bring us a successor to maps, email, or photos?

The AR Cloud is not the missing piece

Modern apps rely on internet connectivity, big data, and location to round out their functionality. AR apps are no different in this respect, and in the same way we use Waze and Yelp to provide local, crowdsourced information about our environment, we will continue to do so when these apps are rebuilt for AR-first design.

In today’s tech-speak, the ‘AR Cloud’ is a set of backend services built to support AR features like persistence and multiplayer. These services consist of distributed databases, search engines, and algorithms for computer vision and machine learning. Most are well-scoped engineering projects and their success will be in their speed of delivery and quality of execution.

Technology behemoths and AR startups are competing to build these cloud services, with some of the heavily invested players going so far as to say “Apple’s ARKit is almost useless without the AR Cloud.” The reality is quite the opposite. A single, well-designed AR mobile app can succeed immediately, but AR Cloud solutions can’t gain traction until enough top mobile apps are designed for AR. Ensuring that happens quickly is critical to their success.

AR is limited today by a lack of design principles

We need to think about how to design for mobile AR, and specifically how to design mobile apps for this new camera-first world. How do we break away from swipes, 2D menus and the like, now that we can precisely track and annotate real objects in the world? AR technology has created an entirely new set of options for how we can interact with our phones, and from these options we need to design AR interactions.

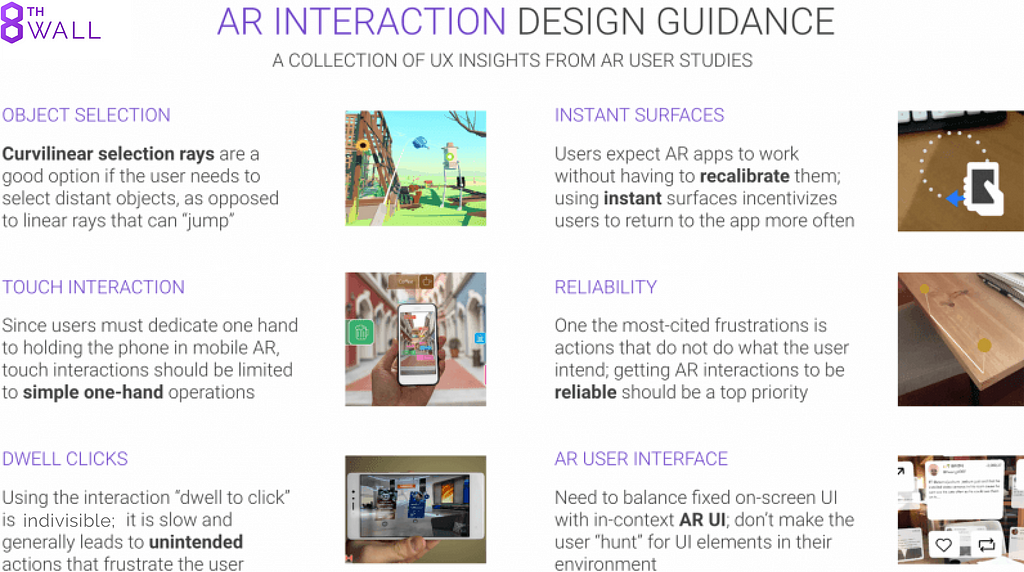

To better understand how we should think about AR-first design, my team and I recently conducted an AR User Study to learn about people’s experiences with the first crop of mobile AR apps. This resulted in the following AR interaction guidelines, which are by no means an exhaustive list:

- Prefer curvilinear selection for pointing and grabbing. By using a gentle arc instead of a straight line, people can select distant objects without their cursor jumping as it gets nearer to the horizon.

- Keep AR touch interactions simple. Limit gestures to simple one-hand operations, since one hand is dedicated to holding and moving the phone.

- Avoid dwell clicking (hovering on a selected object for a period of time), as this selection mechanism is slow and generally leads to unintended actions.

- Initialize virtual objects immediately. People expect AR apps to work seamlessly, and the surface calibration step found in many ARKit apps is an interaction that breaks the flow of the application.

- Ensure reliability. Virtual objects should appear in consistent locations, and being able to accurately select, move and tether objects is important if these interactions are provided.

- AR apps need to balance fixed on-screen UI with in-context AR UI. Users shouldn’t need to “hunt” for UI elements in their environment.
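
As a rough illustration of the curvilinear selection guideline above, the sketch below compares where a straight pointing ray and a gently drooping arc land on the floor as the phone tilts toward the horizon. It is a minimal sketch and not necessarily the method used in the user study: the ballistic arc model, the function names, and the `speed` and `gravity` tuning values are all assumptions chosen for illustration.

```python
import math


def straight_ray_hit(height, pitch_deg):
    """Floor distance (m) where a straight ray meets the ground plane.

    `height` is the phone's height above the floor in meters and
    `pitch_deg` is how far the phone points below the horizon.
    Returns None when the ray never reaches the floor.
    """
    pitch = math.radians(pitch_deg)
    if pitch <= 0:
        return None
    return height / math.tan(pitch)


def arc_hit(height, pitch_deg, speed=6.0, gravity=9.8):
    """Floor distance (m) where a ballistic arc lands.

    The arc leaves the phone at `speed` along the pointing direction
    and droops under `gravity`. Both values are hypothetical tuning
    knobs, not measured constants.
    """
    pitch = math.radians(pitch_deg)
    vx = speed * math.cos(pitch)
    vy = -speed * math.sin(pitch)  # positive pitch points downward
    # Positive root of: height + vy * t - 0.5 * gravity * t**2 = 0
    t = (vy + math.sqrt(vy * vy + 2.0 * gravity * height)) / gravity
    return vx * t


if __name__ == "__main__":
    phone_height = 1.4  # assumed hand height in meters
    for pitch_deg in (10, 5, 2, 1, 0):
        ray = straight_ray_hit(phone_height, pitch_deg)
        arc = arc_hit(phone_height, pitch_deg)
        ray_text = "no hit" if ray is None else f"{ray:.2f} m"
        print(f"pitch {pitch_deg:>2} deg: ray {ray_text}, arc {arc:.2f} m")
```

Under these assumptions, tilting a phone held at 1.4 m from 2° to 1° below the horizon moves the straight-ray hit point by roughly 40 m, while the arc’s landing point moves by well under 10 cm. That is the cursor jump the arc is meant to avoid.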

Before we can capitalize on cloud features for AR, we need to determine how to implement these and other guidelines into a uniform set of user interactions that are natural and fluid.

Looking forward to the year of mobile AR

I feel strongly that 2018 will be the year that we see the first applications designed for an AR-powered, camera-first world. I believe cloud services will be essential to this success and play a big role in its evolution, but my view is that other challenges remain around design, reach and instructing people how to rely on this new technology.

In the true tradition of mobile technology, it won’t take long before new startups and tech behemoths defy what everyone once thought was or was not possible in this space. It’s not an AR Cloud or a killer new use case that will make AR successful. My take is that AR-first design, where we prioritize the AR experience over traditional 2D interfaces, will be the key to unlocking mobile AR. The first developers to build these apps will have a strong first-mover advantage on the next generation of applications, communication platforms, and games.

Design is the only thing holding mobile AR back was originally published in 8th Wall on Medium.