We recently launched 8th Wall Web, the first solution of its kind for augmented reality (AR) in the mobile browser. As the 8th Wall Director of Engineering, I wanted to share with you why I’m so proud of the team, and why I’m really excited about what we accomplished.

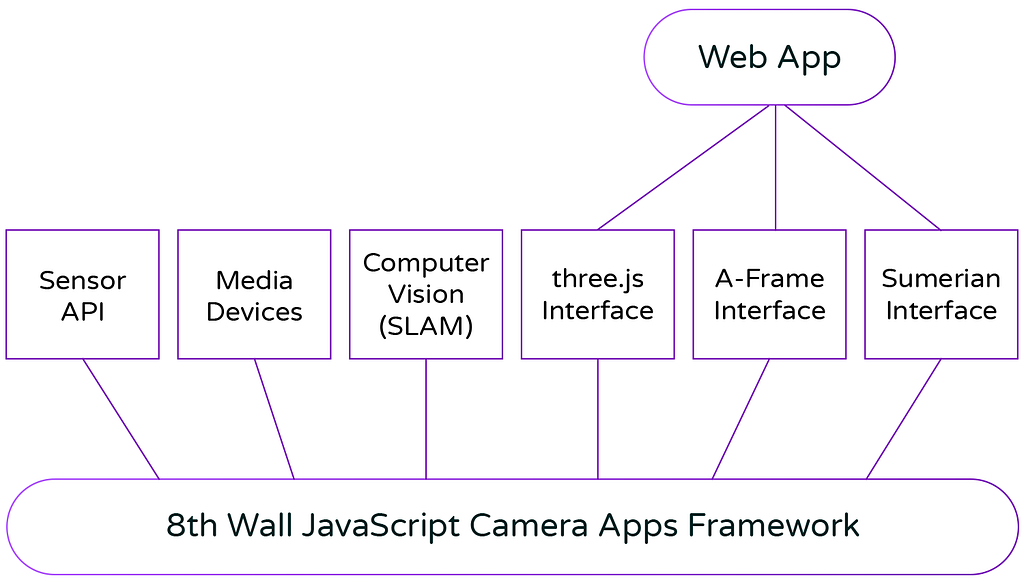

8th Wall Web makes interactive AR for the web possible through three main components: 8th Wall’s SLAM engine, a high throughput camera application framework, and bindings with common web 3D engine frameworks. 8th Wall Web is built entirely on standard web technologies such as the JS MediaDevices API, Sensor API, WebGL and WebAssembly.

AR for everyone

At 8th Wall, we believe augmented reality is for everyone. We’re on a mission to make immersive AR content accessible to all, regardless of what device you’re using.

We launched 8th Wall XR to grow the audience of AR apps. 8th Wall XR taps the full power of native AR frameworks when available, and expands beyond the 100 device models that ARKit and ARCore support today. AR apps powered by 8th Wall reach users on over 3,400 different types of phones and tablets. Our data shows this increases an app’s reach tenfold on Android.

AR is an incredible new capability that will alter the way we interact with devices, with enormous room for growth. By optimizing viral flows, top-performing AR apps have the potential to reach an audience size 10,000 times larger than today.

One reason for today’s limited virality is AR inoculation: if only 1 out of 10 users can experience AR apps, every fan of an app needs to share it with ten times as many friends in order for the app to go viral. We developed 8th Wall XR to lower the barrier to virality and help creators maximize the reach and success of their AR apps. We do this by making AR work for everyone.
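The arithmetic behind this can be made explicit with a simple viral-growth model. The symbols below are introduced here for illustration and are not taken from 8th Wall's own analysis: $n$ is the number of friends each fan shares with, $p$ is the fraction of those friends whose device can run the AR experience, and $c$ is the fraction of capable recipients who become fans themselves.

$$K = n \cdot p \cdot c, \qquad K > 1 \iff n > \frac{1}{p \, c}$$

An app spreads virally only when each fan produces more than one new fan ($K > 1$). With $p = 1/10$, the required $n$ is ten times larger than with $p = 1$, which is the tenfold sharing penalty described above.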

Another reason for limited virality in AR is app-installation friction. Many users will click on web links shared by their friends, but only a few will install an app. In a given month, most smartphone users will install zero apps, according to a 2017 report by comScore MobiLens.

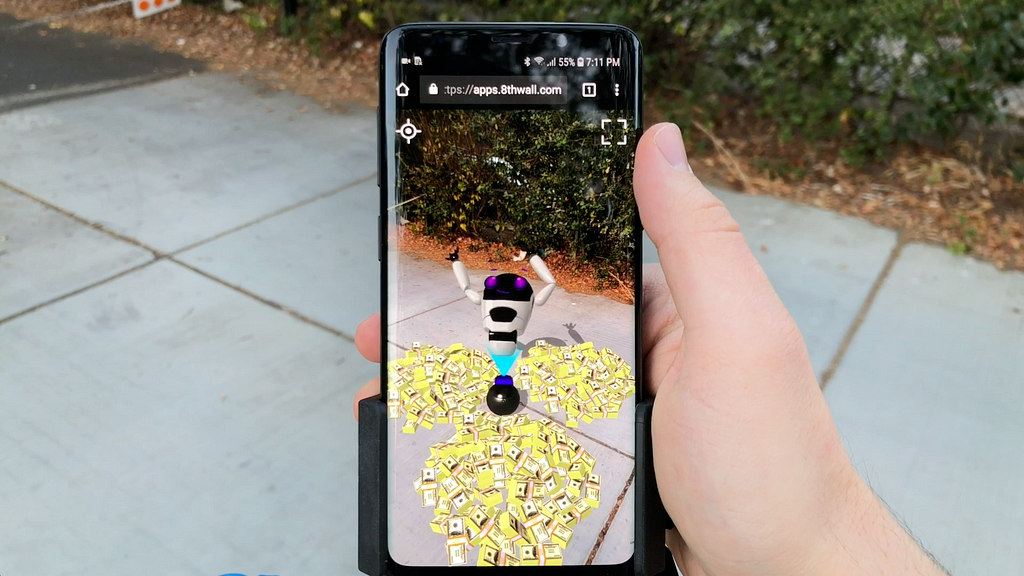

The web is a friction-free way for users to share and engage with content. 8th Wall’s browser-based AR demo, JINI, was used by 7 times as many people on its launch day as our first promotional native app. By bringing 8th Wall XR to the web for everyone, we believe we can help creators maximize their reach to new audiences with AR.

AR for the web

8th Wall SLAM is a 6DoF markerless tracking system with feature point extraction, sensor fusion, robust estimation, triangulation, mapping, bundle adjustment and relocalization. 8th Wall SLAM is loaded as a browser script, just like any other web software.

To create content for AR apps, we leverage 3D frameworks like WebGL, three.js, A-Frame, or Amazon Sumerian. Depending on the rendering system, 8th Wall Camera apps can run hosted in an external JavaScript animation run loop, in parallel with another JavaScript run loop, or in their own JavaScript run loop.

The 8th Wall JS Camera apps framework orchestrates camera capture, computer vision processing, rendering, and display.

Connecting the SLAM and app layers is the 8th Wall JavaScript Camera apps framework. This orchestrates camera capture, computer vision processing, rendering, and display. We designed it as a platform that can accommodate many types of AI-driven camera apps. For example, the 8th Wall Camera framework could drive apps that use face processing, hand detection, target image tracking, or object recognition.

What we achieved

Before we started building 8th Wall Web, we thought it would be impossible to achieve performant SLAM tracking using web-native technologies, but we also believed it would be the best way to help AR reach more users. What followed was the most daunting, technically challenging, and exciting experience of my career.

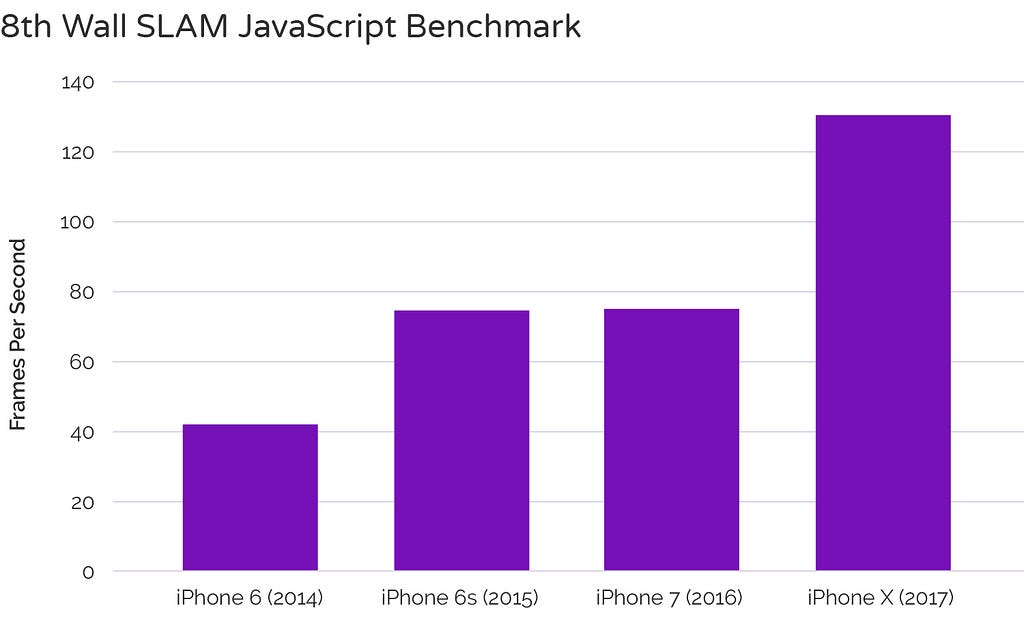

We started with our custom-built, highly optimized mobile SLAM system. Over the course of the 8th Wall Web project, we exceeded what we thought was possible, further improving its performance in JavaScript by over 5 times, so that even on some phones released four years ago, we are able to achieve a 30 FPS runtime.

JavaScript benchmark performance of the 8th Wall SLAM JavaScript engine on iPhone 6, 6s, 7, and X by the date they were released.

I’m incredibly proud of the 8th Wall team and what we achieved, and I wanted to share some of our experiences that made it possible.

How we did it

Build, measure, test, benchmark, repeat

The 8th Wall XR for Unity package is produced from code written in C++, Objective-C, Java and C#. It is built to run on multiple ARM and x86 architectures across Android and iOS, as well as within the Unity Editor on macOS and Windows. To manage this complexity, we created a highly customized set of crosstools and Starlark extensions for the Bazel build system.

To adapt our Unity build for the web, we added further Bazel functionality to transpile C++ code to asm.js and WebAssembly, and to produce transpiled, minified, uglified JavaScript targets with NPM and C++ dependencies. We also created custom Bazel rules for cross-platform JavaScript tests. These allowed us to run our existing C++ unit tests and micro benchmarks in Node, Safari and Chrome, both on desktop and on mobile phones directly from the command line.

8th Wall SLAM is instrumented from the ground up with custom efficient profiling code that measures the speed of all subsystems. This leverages C++ scoping rules to generate call traces on every frame processed by our computer vision engine. These are then distilled into compact statistics that can provide feedback on performance of fine-grained sections of code across runs and devices.
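A minimal sketch of scope-based profiling in C++, assuming nothing about 8th Wall's actual implementation. The names `Profiler`, `ScopedTimer`, `SectionStats`, and `processFrame` are hypothetical. A timer object starts the clock in its constructor and reports the elapsed time in its destructor, so leaving a scope automatically records one sample for that section, and the profiler keeps only compact per-section statistics.

```cpp
#include <chrono>
#include <cstdint>
#include <map>
#include <string>
#include <utility>

// Compact per-section statistics (hypothetical layout).
struct SectionStats {
  std::uint64_t calls = 0;
  std::uint64_t totalMicros = 0;
  std::uint64_t maxMicros = 0;
};

// Accumulates timing samples keyed by section name.
class Profiler {
 public:
  void record(const std::string &section, std::uint64_t micros) {
    SectionStats &s = stats_[section];
    s.calls += 1;
    s.totalMicros += micros;
    if (micros > s.maxMicros) s.maxMicros = micros;
  }
  const std::map<std::string, SectionStats> &stats() const { return stats_; }

 private:
  std::map<std::string, SectionStats> stats_;
};

// Starts timing on construction and records the sample on destruction,
// so the C++ scoping rules decide where each measurement begins and ends.
class ScopedTimer {
 public:
  ScopedTimer(Profiler &profiler, std::string section)
      : profiler_(profiler),
        section_(std::move(section)),
        start_(std::chrono::steady_clock::now()) {}

  ~ScopedTimer() {
    const auto elapsed = std::chrono::steady_clock::now() - start_;
    const auto micros =
        std::chrono::duration_cast<std::chrono::microseconds>(elapsed).count();
    profiler_.record(section_, static_cast<std::uint64_t>(micros));
  }

  ScopedTimer(const ScopedTimer &) = delete;
  ScopedTimer &operator=(const ScopedTimer &) = delete;

 private:
  Profiler &profiler_;
  std::string section_;
  std::chrono::steady_clock::time_point start_;
};

// Stand-in for a per-frame pipeline; the arithmetic is placeholder work.
inline int processFrame(Profiler &profiler, int frameValue) {
  ScopedTimer frameTimer(profiler, "frame");
  int features = 0;
  {
    ScopedTimer t(profiler, "frame/detect");
    features = frameValue * 2;
  }
  {
    ScopedTimer t(profiler, "frame/track");
    features += 1;
  }
  return features;
}
```

Nested scopes give a simple call trace through the section names, and the accumulated `SectionStats` can be compared across runs and devices.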

To optimize for the web, we created a JavaScript benchmark suite that served as an end-to-end performance and quality integration test. A key challenge for the benchmark suite was loading large datasets quickly to promote rapid iteration and evaluation. To achieve rapid benchmarking, we built a custom JavaScript file system to overcome browser cache, database, and memory limitations.

In addition to evaluating changes at development time, we developed infrastructure to run our benchmark suite every hour on test devices and to log results to a server. This allowed us to quickly identify the source of performance or quality regressions.
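One simple way to flag a regression in a series of logged benchmark results is to compare each run against the median of the runs just before it. The sketch below assumes one frame-time measurement per hourly run. The function names, the window size, and the tolerance are illustrative choices, not details of 8th Wall's infrastructure.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Median of a non-empty sample. Takes a copy so the caller's data keeps its order.
inline double median(std::vector<double> v) {
  std::sort(v.begin(), v.end());
  const std::size_t n = v.size();
  return n % 2 == 1 ? v[n / 2] : 0.5 * (v[n / 2 - 1] + v[n / 2]);
}

// Returns the index of the first run whose frame time exceeds the median of
// the preceding `window` runs by more than `tolerance` (0.1 means 10%).
// Returns -1 if no run does, or if `window` is zero.
inline int firstRegression(const std::vector<double> &frameMillis,
                           std::size_t window, double tolerance) {
  if (window == 0) return -1;
  for (std::size_t i = window; i < frameMillis.size(); ++i) {
    const auto first = frameMillis.begin() + static_cast<std::ptrdiff_t>(i - window);
    const auto last = frameMillis.begin() + static_cast<std::ptrdiff_t>(i);
    const std::vector<double> baseline(first, last);
    if (frameMillis[i] > median(baseline) * (1.0 + tolerance)) {
      return static_cast<int>(i);
    }
  }
  return -1;
}
```

Because each run maps to a known point in time, the returned index narrows the search to the changes that landed just before that run.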

Optimize, optimize, optimize

Once we had visibility into the performance and quality of our code, we began searching for areas where optimization was likely to have an impact. Some of these were amenable to classic optimization of algorithms and data structures. For example, we developed a new custom sorting algorithm for choosing the subset of feature points to process for mapping. Some optimizations were known and reported in the computer vision literature, e.g. customizing cost function derivatives for bundle adjustment. Other optimizations were novel. For example, we were able to achieve a 10x retrieval speedup for locality-sensitive hashes compared to standard libraries by optimizing for our specific operation domain.
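The custom sorting algorithm itself is not described here, so the sketch below swaps in a standard-library technique to show why subset selection is a good optimization target: when only the strongest `k` feature points are needed, a partial selection with `std::nth_element` avoids the cost of fully sorting every point. The `FeaturePoint` layout and the `selectStrongest` function are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical feature point: image position plus a detector response score.
struct FeaturePoint {
  float x;
  float y;
  float score;
};

// Returns the k highest-scoring points, in no particular order.
// std::nth_element partitions in linear time on average, whereas a full
// sort would spend O(n log n) ordering points that are then discarded.
inline std::vector<FeaturePoint> selectStrongest(std::vector<FeaturePoint> points,
                                                 std::size_t k) {
  if (k >= points.size()) return points;
  std::nth_element(
      points.begin(), points.begin() + static_cast<std::ptrdiff_t>(k), points.end(),
      [](const FeaturePoint &a, const FeaturePoint &b) { return a.score > b.score; });
  points.resize(k);
  return points;
}
```

A domain-specific algorithm can go further than this, for example by exploiting a bounded score range, but the principle of doing only as much ordering as the consumer needs is the same.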

We also optimized sections of C++ code to perform well specifically in transpiled JavaScript. For example, we found that the JavaScript execution was particularly slow for sections of C++ code that read from one buffer while writing to another. Rewriting core algorithms to minimize this load-store pattern helped some sections of code significantly.

Two versions of the same code. The second runs 15% slower in compiled C++, but runs 35% faster when transpiled to JavaScript:
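The listing those figures refer to is not reproduced in this text. As a purely hypothetical illustration of the kind of rewrite described above, the sketch below shows a small smoothing filter written two ways: one reads from an input buffer while writing to a separate output buffer, and the other works in place, carrying the previous value in a local variable. The function names are invented for this example, and the 15% and 35% measurements above do not apply to it.

```cpp
#include <cstddef>
#include <vector>

// Version A: loads from `in` while storing to a second buffer, `out`.
inline void smoothTwoBuffers(const std::vector<int> &in, std::vector<int> &out) {
  out.resize(in.size());
  for (std::size_t i = 0; i < in.size(); ++i) {
    const int left = i > 0 ? in[i - 1] : in[i];
    const int right = i + 1 < in.size() ? in[i + 1] : in[i];
    out[i] = (left + 2 * in[i] + right) / 4;
  }
}

// Version B: same result, computed in place. The original value of the
// left neighbour is carried in a local variable, so no second buffer is
// touched inside the loop.
inline void smoothInPlace(std::vector<int> &data) {
  if (data.empty()) return;
  int prev = data[0];  // edge case: the first element is its own left neighbour
  for (std::size_t i = 0; i < data.size(); ++i) {
    const int cur = data[i];
    const int right = i + 1 < data.size() ? data[i + 1] : cur;
    data[i] = (prev + 2 * cur + right) / 4;
    prev = cur;  // keep the unsmoothed value for the next iteration
  }
}
```

Whether a rewrite like this helps depends on the compiler and the JavaScript engine, so it needs to be measured on the target rather than assumed.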

Another finding was that calling C++ methods from inner loops caused many temporary variable allocations triggering frequent JS garbage collection events. Inlining a few critical methods in C++ led to a substantial reduction in JavaScript memory management overhead.

Rearchitect for the web

In native C++ code, our SLAM engine uses C++11 threads, atomics, conditions and memory barriers to utilize multiple CPU cores available on modern mobile devices to speed up processing. In JavaScript, only one thread is available to the web application. This property forced us to fundamentally rethink how the overall flow of a camera application should be architected.

We moved as much processing as possible from the CPU to WebGL in order to maximize parallel computation within the constraints of JavaScript. One example was feature point detection. This came with an unexpected benefit: when feature point extraction runs on the CPU, it must follow certain algorithmic constraints in order to run efficiently. On the GPU, different performance characteristics allowed us to improve the robustness and quality of feature detection in ways that would have been prohibitive before.

In the browser, all computer vision, game logic, and rendering must be done within a single animation frame. We found browsers were more effective at making parallel use of the GPU in certain parts of this cycle. This forced us to modularize code into blocks that could be easily rearranged to run in different portions of a single animation frame to maximize efficiency.

It was important to us that 8th Wall Web runs well on as many devices as possible. To keep performance high on older device classes, we built algorithms that detect performance hiccups and dynamically restructure the processing pipeline to spread CPU load among multiple animation frames.
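A minimal sketch of the load-spreading idea, under stated assumptions: each pipeline stage has an estimated cost, a "hiccup" is a frame that took longer than a fixed budget, and after a hiccup the stages are greedily packed into consecutive animation frames so that no frame's estimated cost exceeds the budget. The stage names, costs, and the functions `planFrames` and `planNextFrames` are hypothetical and do not describe the actual 8th Wall scheduler.

```cpp
#include <cstddef>
#include <limits>
#include <string>
#include <vector>

// A pipeline stage with an estimated cost in milliseconds (hypothetical).
struct Stage {
  std::string name;
  double costMillis;
};

// Greedily packs stages, in order, into frames whose total estimated cost
// stays within `budgetMillis`. A stage that alone exceeds the budget still
// gets a frame to itself.
inline std::vector<std::vector<std::string>> planFrames(
    const std::vector<Stage> &stages, double budgetMillis) {
  std::vector<std::vector<std::string>> frames;
  double used = 0.0;
  for (const Stage &stage : stages) {
    if (frames.empty() || used + stage.costMillis > budgetMillis) {
      frames.emplace_back();  // start a new animation frame
      used = 0.0;
    }
    frames.back().push_back(stage.name);
    used += stage.costMillis;
  }
  return frames;
}

// If the last frame stayed within budget, run every stage in one frame.
// Otherwise treat it as a hiccup and spread the stages across frames.
inline std::vector<std::vector<std::string>> planNextFrames(
    const std::vector<Stage> &stages, double lastFrameMillis, double budgetMillis) {
  const bool hiccup = lastFrameMillis > budgetMillis;
  const double limit = hiccup ? budgetMillis : std::numeric_limits<double>::infinity();
  return planFrames(stages, limit);
}
```

A real pipeline would also need to respect data dependencies between stages and decide which results may be one frame stale. This sketch only shows the budgeting step.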

What’s next

These are only a few of the changes that allowed us to bring AR technology to the web. Over the course of this project, we improved the speed of our SLAM engine by 5 times while also improving tracking quality, but we’re not done yet. We’re hard at work to integrate these same great updates into 8th Wall XR for native apps, as we continue to improve the way people experience AR across devices.

We are committed to our goal of bringing AR to everyone. We look forward to pushing the boundaries of what’s possible, and to helping creators bring their unique voices and amazing experiences to wider audiences than ever.

How We Engineered AR for the Mobile Browser with 8th Wall Web was originally published in 8th Wall on Medium.