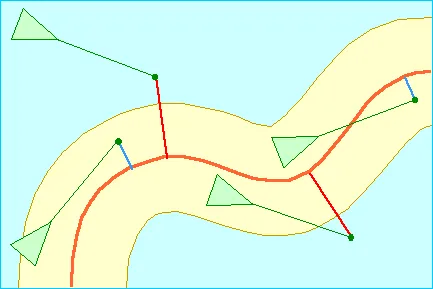

Steering behaviors are used to maneuver AI agents in a 3D environment. With these behaviors, agents can react better to changes in their environment.

While the navigation mesh algorithm is ideal for planning a path from one point to another, it does not handle dynamic objects such as other agents. This is where steering behaviors can help.

What are steering behaviors?

Steering behaviors are an amalgam of different behaviors that are used to organically manage the movement of an AI agent.

For example, obstacle avoidance, pursuit and group cohesion are all steering behaviors.

Steering behaviors are usually applied in a 2D plane: this is sufficient in most cases, and easier to implement and understand. (However, I can think of some use cases that require the behaviors to work in 3D, such as games where agents fly.)

One of the most important steering behaviors is the seeking behavior. We also added the arriving behavior to make the agent's movement a whole lot more organic.

Steering behaviors are described in this paper.

What is the seeking behavior?

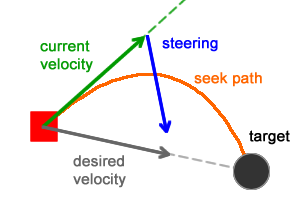

The seeking behavior is the idea that an AI agent "seeks" to reach a certain desired velocity (a vector), typically one that points toward its target.

To begin, we need two things:

- The agent's current velocity (a vector)

- A desired velocity (also a vector)

First, we need the velocity that would take our agent to a desired point. It is obtained by subtracting the agent's current position from the target position:

\(\overrightarrow{d} = (x_{t},y_{t},z_{t}) - (x_{a},y_{a},z_{a})\)

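Here, a represents our agent, t our target, and d the desired velocity. Since this difference grows with the distance to the target, the code below normalizes it and scales it to the agent's maximum speed instead.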
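A minimal, self-contained sketch of this step follows, including the flattening onto the XZ plane (steering in 2D) and the scaling to `maxSpeed` done in the original code. `Vec3` is a hypothetical stand-in for the engine's `Vector3f`, and `Seek.desiredVelocity` is an illustrative helper, not part of the original implementation.

```java
// Hypothetical minimal vector type standing in for the engine's Vector3f.
final class Vec3 {
    final float x, y, z;

    Vec3(float x, float y, float z) { this.x = x; this.y = y; this.z = z; }

    Vec3 subtract(Vec3 o) { return new Vec3(x - o.x, y - o.y, z - o.z); }

    Vec3 mult(float s) { return new Vec3(x * s, y * s, z * s); }

    float length() { return (float) Math.sqrt(x * x + y * y + z * z); }

    // Returns a unit vector, or the zero vector unchanged (avoids dividing by 0).
    Vec3 normalize() {
        float len = length();
        return len == 0f ? this : mult(1f / len);
    }
}

final class Seek {
    // Desired velocity d: from the agent position a toward the target t,
    // flattened onto the XZ plane and scaled so that |d| == maxSpeed.
    static Vec3 desiredVelocity(Vec3 agent, Vec3 target, float maxSpeed) {
        Vec3 toTarget = target.subtract(agent);            // t - a
        Vec3 flat = new Vec3(toTarget.x, 0f, toTarget.z);  // zero Y before normalizing
        return flat.normalize().mult(maxSpeed);
    }
}
```

For an agent at the origin and a target at (3, 5, 4) with `maxSpeed = 10`, the flattened difference is (3, 0, 4), so d is approximately (6, 0, 8), whose length is exactly `maxSpeed` regardless of how far away the target is.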

Second, we need the agent's current velocity, which most game engines already provide.

Next, we compute the difference between the desired velocity and the agent's current velocity. Adding this vector to the current velocity gives exactly the desired velocity. We call it the "steering velocity".

\(\overrightarrow{s} = \overrightarrow{d} - \overrightarrow{c}\)

Here, s is our steering velocity, c is the agent's current velocity and d is the desired velocity.
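A small worked example, with illustrative numbers that are not taken from the original implementation: if the agent currently moves along x and the desired velocity points toward the target,

\(\overrightarrow{c} = (2, 0, 0),\quad \overrightarrow{d} = (6, 0, 8) \;\Rightarrow\; \overrightarrow{s} = \overrightarrow{d} - \overrightarrow{c} = (4, 0, 8),\quad \overrightarrow{c} + \overrightarrow{s} = (6, 0, 8) = \overrightarrow{d}\)

Adding the raw steering velocity would therefore reach the desired velocity in a single step; limiting its length is what spreads the turn over several frames.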

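After that, we truncate the steering velocity so that its length does not exceed a maximum value called the "steering force". This caps how much the agent's velocity can change in a single frame.

Finally, we add the steering velocity to the agent's current velocity. In the code below, the steering velocity is also divided by the agent's mass, and the resulting velocity is truncated to the agent's maximum speed.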
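The truncate-and-add step can be sketched as a single update function. `truncate` is a hypothetical stand-in for the original `MathExt.truncateVectorLocal` (whose implementation is not shown), assumed here to scale a vector down to a maximum length while keeping its direction; `steeringForce`, `mass` and `maxSpeed` play the roles of `m_steeringForce`, `m_mass` and `maxSpeed` in the original code.

```java
// Hypothetical minimal vector type standing in for the engine's Vector3f.
final class Vec3 {
    final float x, y, z;

    Vec3(float x, float y, float z) { this.x = x; this.y = y; this.z = z; }

    Vec3 add(Vec3 o) { return new Vec3(x + o.x, y + o.y, z + o.z); }

    Vec3 subtract(Vec3 o) { return new Vec3(x - o.x, y - o.y, z - o.z); }

    Vec3 mult(float s) { return new Vec3(x * s, y * s, z * s); }

    float length() { return (float) Math.sqrt(x * x + y * y + z * z); }
}

final class SeekStep {
    // Scales v down so its length is at most maxLength; shorter vectors are returned unchanged.
    static Vec3 truncate(Vec3 v, float maxLength) {
        float len = v.length();
        return len > maxLength ? v.mult(maxLength / len) : v;
    }

    // One seek update: c' = truncate(c + truncate(d - c, steeringForce) / mass, maxSpeed)
    static Vec3 step(Vec3 current, Vec3 desired, float steeringForce, float mass, float maxSpeed) {
        Vec3 steering = truncate(desired.subtract(current), steeringForce);
        return truncate(current.add(steering.mult(1f / mass)), maxSpeed);
    }
}
```

With a steering force of 2, an agent at rest that wants to move at 10 units along x gains only 2 units of velocity in the first frame, and only 1 unit if its mass is 2.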

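```java
// MathExt.truncateVectorLocal(vector, maxLength) truncates a vector to a given maximum length
Vector3f currentDirection = aiAgentMovementControl.getWalkDirection();

// Desired velocity: toward the target on the XZ plane, scaled to maxSpeed.
// Y is zeroed before normalizing so that the result really has a length of maxSpeed.
Vector3f wantedDirection = targetPosition.subtract(aiAgent.getWorldTranslation())
        .setY(0).normalizeLocal().multLocal(maxSpeed);

// We steer to our wanted direction: s = d - c, capped at the steering force
Vector3f steeringVector = MathExt.truncateVectorLocal(
        wantedDirection.subtract(currentDirection), m_steeringForce);
```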

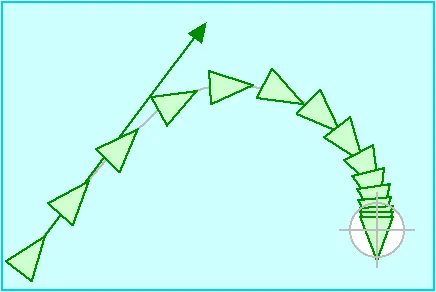

```java
// New velocity: c + s / mass, capped at maxSpeed
Vector3f newCurrentDirection = MathExt.truncateVectorLocal(
        currentDirection.addLocal(steeringVector.divideLocal(m_mass)), maxSpeed);
```

This computation is repeated every frame, so the steering velocity becomes weaker and weaker as the agent's current velocity approaches the desired one, creating a kind of interpolation curve.
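To see this frame-by-frame effect, the sketch below repeats the same update and records the length of the steering velocity at each frame. It redefines the hypothetical `Vec3` and `truncate` helpers so the block stands alone, and it assumes a fixed desired velocity. While the gap between the desired and current velocities exceeds the steering force, the steering stays at the cap; once the gap is smaller, each frame closes a fixed fraction of it (half of it with a mass of 2), which produces the interpolation-like curve.

```java
// Hypothetical minimal vector type standing in for the engine's Vector3f.
final class Vec3 {
    final float x, y, z;

    Vec3(float x, float y, float z) { this.x = x; this.y = y; this.z = z; }

    Vec3 add(Vec3 o) { return new Vec3(x + o.x, y + o.y, z + o.z); }

    Vec3 subtract(Vec3 o) { return new Vec3(x - o.x, y - o.y, z - o.z); }

    Vec3 mult(float s) { return new Vec3(x * s, y * s, z * s); }

    float length() { return (float) Math.sqrt(x * x + y * y + z * z); }
}

final class SeekSimulation {
    // Scales v down so its length is at most maxLength.
    static Vec3 truncate(Vec3 v, float maxLength) {
        float len = v.length();
        return len > maxLength ? v.mult(maxLength / len) : v;
    }

    // Runs the seek update for the given number of frames (fixed desired velocity)
    // and returns the length of the steering velocity applied at each frame.
    static float[] steeringMagnitudes(Vec3 start, Vec3 desired, float steeringForce,
                                      float mass, float maxSpeed, int frames) {
        float[] magnitudes = new float[frames];
        Vec3 current = start;
        for (int i = 0; i < frames; i++) {
            Vec3 steering = truncate(desired.subtract(current), steeringForce);
            magnitudes[i] = steering.length();
            current = truncate(current.add(steering.mult(1f / mass)), maxSpeed);
        }
        return magnitudes;
    }
}
```

For example, `steeringMagnitudes(new Vec3(0f, 0f, 0f), new Vec3(5f, 0f, 0f), 1f, 2f, 5f, 30)` returns about 1 for the first nine frames, then roughly 0.5, 0.25, 0.125, and so on.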

What is the arriving behavior?

The arriving behavior is the idea that an AI agent that "arrives" near its destination gradually slows down until it reaches it.

We already have the list of waypoints, returned by the navigation mesh algorithm, that the agent must pass through to reach its destination. Once it has passed the second-to-last waypoint, we activate the arriving behavior.
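The activation rule can be expressed as a small predicate. It assumes, hypothetically, that the agent tracks the index of the waypoint it is heading to (`nextWaypointIndex`) in the path returned by the navigation mesh; having passed the second-to-last waypoint then simply means that this index points at the last one.

```java
final class ArrivalTrigger {
    // True once the agent has passed the second-to-last waypoint, i.e. when
    // the waypoint it is heading to is the last one (the destination).
    // nextWaypointIndex is a hypothetical index into the navmesh path.
    static boolean isArriving(int nextWaypointIndex, int waypointCount) {
        return waypointCount > 0 && nextWaypointIndex >= waypointCount - 1;
    }
}
```

Using `>=` rather than `==` keeps the behavior active even if the index is advanced past the end once the destination is reached.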

While the behavior is active, we compute the distance between the agent's current position and the destination, and adjust its maximum speed accordingly.

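```java
// This is the initial maxSpeed
float maxSpeed = aiAgentMovementControl.getMoveSpeed();

// At this point, nextWaypoint is the last waypoint (the destination)
float distance = aiAgent.getWorldTranslation().distance(nextWaypoint.getCenter());

// Scale the speed linearly with the remaining distance...
float rampedSpeed = aiAgentMovementControl.getMoveSpeed() * (distance / slowingDistanceThreshold);

// ...but never exceed the normal movement speed
float clippedSpeed = Math.min(rampedSpeed, aiAgentMovementControl.getMoveSpeed());

// This is our new maxSpeed
maxSpeed = clippedSpeed;
```

Outside `slowingDistanceThreshold`, the agent keeps its normal speed; inside it, its maximum speed decreases linearly with the remaining distance, so the agent slows down until it reaches its destination.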
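The same speed ramp, pulled out into a pure function so it can be checked in isolation, is sketched below with a one-dimensional simulation. `arrivalMaxSpeed` mirrors the computation above; `remainingDistance` is a hypothetical helper that assumes the agent always moves straight toward the destination at that maximum speed, with a fixed time step `dt`. Inside the threshold, each frame removes the fraction `moveSpeed * dt / slowingDistanceThreshold` of the remaining distance, so in this idealized model the agent approaches the destination geometrically rather than landing exactly on it.

```java
final class Arrival {
    // Maximum speed while arriving: the normal moveSpeed beyond the threshold,
    // then decreasing linearly with the remaining distance down to 0.
    static float arrivalMaxSpeed(float distance, float moveSpeed, float slowingDistanceThreshold) {
        float rampedSpeed = moveSpeed * (distance / slowingDistanceThreshold);
        return Math.min(rampedSpeed, moveSpeed);
    }

    // Hypothetical 1D simulation: the agent moves straight toward the destination
    // at its current maximum speed; returns the distance left after the given frames.
    static float remainingDistance(float startDistance, float moveSpeed,
                                   float slowingDistanceThreshold, float dt, int frames) {
        float distance = startDistance;
        for (int i = 0; i < frames; i++) {
            float speed = arrivalMaxSpeed(distance, moveSpeed, slowingDistanceThreshold);
            distance -= Math.min(speed * dt, distance); // never overshoot the destination
        }
        return distance;
    }
}
```

With `moveSpeed = 4`, `slowingDistanceThreshold = 2` and `dt = 0.1`, the agent covers 0.4 units per frame until it is 2 units away, after which the remaining distance shrinks by 20% each frame.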

The future?

As I'm writing this, we've chosen to implement the steering behaviors individually and only as needed, since we have no empirical evidence that we'll need all of them. Therefore, we have only implemented the seeking and arriving behaviors, postponing the rest indefinitely.

So, when (or if) we need them, we'll already have a solid, stable foundation to build upon.