Hello, I have recently started learning a bit about shader programming. I am now working on a screen space reflections shader for URP 17 in Unity 6, which is used in a renderer feature. I have a basic working version that is fairly stable, and the reflections stay visible from a reasonable distance.

Below is a link to my current shader.

urp-enhanced/Assets/Shaders/RendererFeatures/TrueScreenSpaceReflections.shader at main · CoolCreasu/urp-enhanced

What I am working on right now is optimizing it. I found a blog post describing a method for screen space reflections: Casual Effects: Screen Space Ray Tracing, by Morgan McGuire.

My maths isn't great, but as far as I understand it, the general idea of his method is to calculate as much as possible beforehand. For example, the start and end positions on screen are used to calculate the delta step for the xy screen position. I think there is also a DDA algorithm involved, but for now I am only focusing on the calculations in screen space.
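A rough sketch of the "delta step from the screen-space endpoints" idea, under my own assumptions rather than McGuire's exact code: once the ray's start and end points are projected to uv space (called `P0` and `P1` here), the number of steps can be chosen so that each step moves roughly a fixed number of pixels along the longer screen axis, which is the core of a DDA line walk. `ComputeStepCount`, `screenSize`, `stridePixels` and `maxSteps` are hypothetical names; `screenSize` could for example come from `_ScreenParams.xy`.

```hlsl
// Hypothetical helper (not part of URP): choose a step count so that each
// step advances roughly `stridePixels` pixels along the major screen axis.
// P0 and P1 are the ray's start and end points in 0..1 uv space.
int ComputeStepCount(float2 P0, float2 P1, float2 screenSize, float stridePixels, int maxSteps)
{
    // Length of the projected ray in pixels along each axis.
    float2 deltaPixels = abs(P1 - P0) * screenSize;

    // DDA-style: the longer (major) axis decides how many steps are needed.
    float majorLength = max(deltaPixels.x, deltaPixels.y);

    // At least one step, at most maxSteps.
    return (int)clamp(ceil(majorLength / stridePixels), 1.0, (float)maxSteps);
}
```

With a step count in hand, the per-step uv increment is simply `(P1 - P0) / stepCount`, so nothing about the screen position has to be recomputed from clip space inside the loop.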

The code below is what currently works for me:

```hlsl
// March along the reflected ray by interpolating the clip-space endpoints.
[loop]
for (float t = tOffset; t < 1.0 && steps < MAX_STEPS; t += tStep, steps += 1)
{
    float4 pos = lerp(sPosCS, ePosCS, t);
    // Linear eye depth of the current point on the ray.
    float testDepth = LinearEyeDepth(pos.z / pos.w, _ZBufferParams);
    // Perspective divide, then NDC xy (-1..1) to uv (0..1) with y flipped.
    float2 uv = (((pos.xy / pos.w) * float2(1, -1)) + 1) * 0.5;

#if UNITY_REVERSED_Z
    float sceneDepth = LinearEyeDepth(SampleSceneDepth(uv), _ZBufferParams);
#else
    float sceneDepth = LinearEyeDepth(lerp(UNITY_NEAR_CLIP_VALUE, 1.0, SampleSceneDepth(uv)), _ZBufferParams);
#endif

    // Hit: the ray point is behind the depth buffer, so sample the scene color there.
    if (sceneDepth < testDepth)
    {
        return SAMPLE_TEXTURE2D(_BlitTexture, sampler_BlitTexture, uv);
    }
}
```
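One observation that may help with the precomputation, assuming a perspective camera and a platform where `pos.z / pos.w` is the same depth value that `LinearEyeDepth` expects: the projection matrix puts the view-space depth into clip-space `w`, so `LinearEyeDepth(pos.z / pos.w, _ZBufferParams)` should come out equal to `pos.w` (up to precision). Because `pos` is a linear interpolation of `sPosCS` and `ePosCS` in `t`, the test depth is already linear in `t`; only the screen position `pos.xy / pos.w` is not. A sketch of one march step with that substitution (`MarchStep` is a hypothetical name, not tested against the linked shader):

```hlsl
// Hypothetical helper: depth and uv for one march step at clip-space parameter t.
// Assumes a perspective projection, where clip-space w equals linear eye depth.
void MarchStep(float4 sPosCS, float4 ePosCS, float t, out float testDepth, out float2 uv)
{
    float4 pos = lerp(sPosCS, ePosCS, t);

    // Replaces LinearEyeDepth(pos.z / pos.w, _ZBufferParams): w is linear in t.
    testDepth = pos.w;

    // The uv still needs the per-step divide, because xy / w is not linear in t.
    uv = (((pos.xy / pos.w) * float2(1, -1)) + 1) * 0.5;
}
```

This removes the `LinearEyeDepth` call per step but keeps the divide; depth and uv stay consistent because both come from the same interpolated `pos`.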

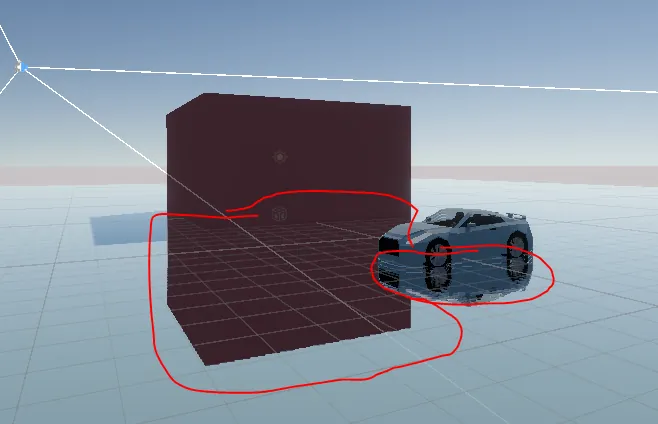

Below is an image of that working code:

However, what I want to achieve is something like the following, where I can precompute z and then just use t to lerp in between:

```hlsl
float startDepth = LinearEyeDepth(sPosCS.z / sPosCS.w, _ZBufferParams); // precompute z
float endDepth = LinearEyeDepth(ePosCS.z / ePosCS.w, _ZBufferParams); // precompute z
float2 P0 = ((sPosCS.xy / sPosCS.w * float2(1, -1)) + 1) * 0.5;
float2 P1 = ((ePosCS.xy / ePosCS.w * float2(1, -1)) + 1) * 0.5;

// inside the loop:
float testDepth = lerp(startDepth, endDepth, t);
float2 uv = lerp(P0, P1, t); // not correct: linear depth seems not to match with this uv interpolation.
```
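For reference, this is the standard perspective-correct interpolation rule, which looks like it applies here: when the uv is lerped linearly between `P0` and `P1`, the quantity that varies linearly with it is the reciprocal of the eye depth, not the eye depth itself. As far as I understand McGuire's article, this is also why it steps `k = 1/w` rather than depth. A minimal sketch, with a hypothetical function name, assuming `startDepth` and `endDepth` are positive linear eye depths of the two endpoints:

```hlsl
// Hypothetical helper: uv and test depth at screen-space parameter s.
// s is NOT the clip-space parameter t of the working loop.
void ScreenSpaceStep(float2 P0, float2 P1, float startDepth, float endDepth, float s,
                     out float2 uv, out float testDepth)
{
    // Linear in screen space.
    uv = lerp(P0, P1, s);

    // 1/depth is linear in screen space; depth itself is not.
    float invDepth = lerp(1.0 / startDepth, 1.0 / endDepth, s);
    testDepth = 1.0 / invDepth;
}
```

In a real loop the two reciprocals `1.0 / startDepth` and `1.0 / endDepth` would be computed once before the loop, leaving a single division per step.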

However, if I use the z calculated above, I still need to use the interpolated pos.xy / pos.w each step. I also tried calculating the start and end uv beforehand, but then my reflections seem to show up only when I look steeply down at the plane that reflects the other objects.

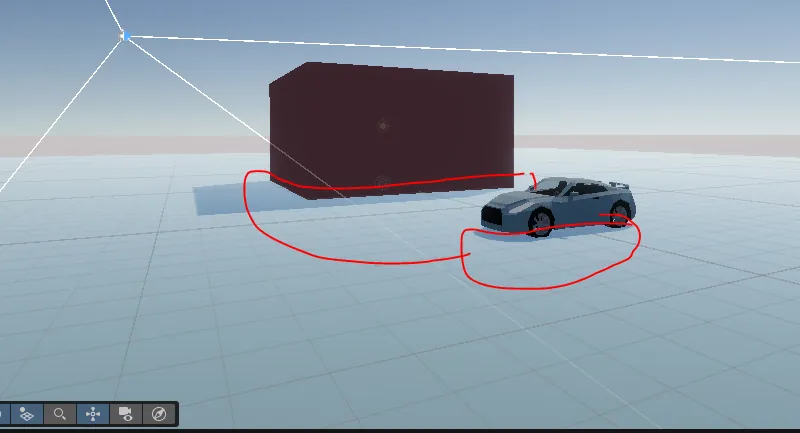

Below is an image when i try to precalculate the values:

And an image of the same perspective as the image above if i try to use precalculated z and uv positions linearly interpolated with t

I am sorry if my explanation isn't clear, but I hope someone can help me figure out how to properly calculate the values beforehand, so that I only have to use lerp, or maybe even calculate a delta for z and uv and add that delta each step.
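A sketch of the "add a delta each step" version, combining the precomputed uv endpoints `P0`/`P1` with a constant step in reciprocal depth. The function name, `stepCount` and `jitter` (playing the role of `tOffset`, as a fraction of one step) are my own assumptions. It relies on the same declarations as the working loop (`SampleSceneDepth`, `LinearEyeDepth`, `_ZBufferParams`, `_BlitTexture`, `sampler_BlitTexture`), and only the reversed-Z path is shown.

```hlsl
// Hypothetical helper: march from P0 to P1 in uv space using constant increments.
// Assumes a perspective camera, positive linear eye depths, and reversed Z.
// Returns the scene color at the hit, or zero if nothing was hit.
half4 MarchScreenSpace(float2 P0, float2 P1, float startDepth, float endDepth,
                       int stepCount, float jitter)
{
    // Everything that does not change per step is computed once.
    float invSteps = 1.0 / stepCount;
    float2 uvDelta = (P1 - P0) * invSteps;                                 // constant uv step
    float invDepthDelta = (1.0 / endDepth - 1.0 / startDepth) * invSteps;  // constant 1/depth step

    // Start position, offset by a fraction of one step.
    float2 uv = P0 + uvDelta * jitter;
    float invDepth = 1.0 / startDepth + invDepthDelta * jitter;

    [loop]
    for (int i = 0; i < stepCount; i++)
    {
        float testDepth = 1.0 / invDepth; // perspective-correct depth at this uv
        float sceneDepth = LinearEyeDepth(SampleSceneDepth(uv), _ZBufferParams);

        if (sceneDepth < testDepth)
        {
            return SAMPLE_TEXTURE2D(_BlitTexture, sampler_BlitTexture, uv);
        }

        // Advance both quantities by their precomputed deltas.
        uv += uvDelta;
        invDepth += invDepthDelta;
    }
    return half4(0, 0, 0, 0);
}
```

The tested positions are `P0 + (jitter + i) * uvDelta` for `i = 0 .. stepCount - 1`, so with `jitter` in 0..1 the march never goes past `P1`.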

If I precalculate z but keep using the following for the uv coordinates, my reflections are visible when I look nearly horizontally, so I think something isn't correct:

```hlsl
float2 uv = (((pos.xy / pos.w) * float2(1, -1)) + 1) * 0.5;
```

Maybe the interpolated z isn't actually at the same position as the interpolated uv? If anyone knows how to do this correctly, it would be a great help!
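The relation between the two parameterizations can be written out with the standard perspective-correct interpolation identities. Here $\mathbf{c}_0$, $\mathbf{c}_1$ are the clip-space xy of `sPosCS` and `ePosCS`, $w_0$ = `sPosCS.w`, $w_1$ = `ePosCS.w`, $t$ is the clip-space parameter of the working loop, and a perspective camera is assumed so that $w$ is the linear eye depth:

$$
\begin{aligned}
w(t) &= (1-t)\,w_0 + t\,w_1,\\
\mathbf{p}(t) &= \frac{(1-t)\,\mathbf{c}_0 + t\,\mathbf{c}_1}{w(t)} = (1-s)\,\frac{\mathbf{c}_0}{w_0} + s\,\frac{\mathbf{c}_1}{w_1}, \qquad s = \frac{t\,w_1}{w(t)},\\
\frac{1}{w(t)} &= (1-s)\,\frac{1}{w_0} + s\,\frac{1}{w_1}.
\end{aligned}
$$

So a uv lerped with some parameter $s$ matches the clip-space march only if the depth is recovered as $1 / \mathrm{lerp}(1/w_0, 1/w_1, s)$; lerping the depth itself with that same parameter places depth and uv at different points on the ray. The uv remap `(x * float2(1, -1) + 1) * 0.5` is affine, so it does not change this. Note that $s = t$ only when $w_0 = w_1$, i.e. when the ray keeps a constant depth, which may explain why the precomputed version looks right only at some viewing angles.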
