
Path tracing in Vulkan

taby said:

Instead of generating 3 rays per main() function like I'm doing now (one per colour channel), I would make like 10 or 20 channels. Now, how do I go about converting those 10 or 20 channels back down to 3 channels? Hmm.

What you want to do is spectral rendering. Normally, when you trace a path in your scene, you bounce a ray around and calculate its energy contribution to the pixel, or rather its color to be more precise (often a float3 value holding RGB, representing the energy contribution in each channel). You want an extension that resembles reality more closely: there is no such thing as RGB in reality, only wavelengths.

A quick physics aside to describe what's happening:

Spectral rendering makes no sense unless we also model the physical properties that depend on wavelength.

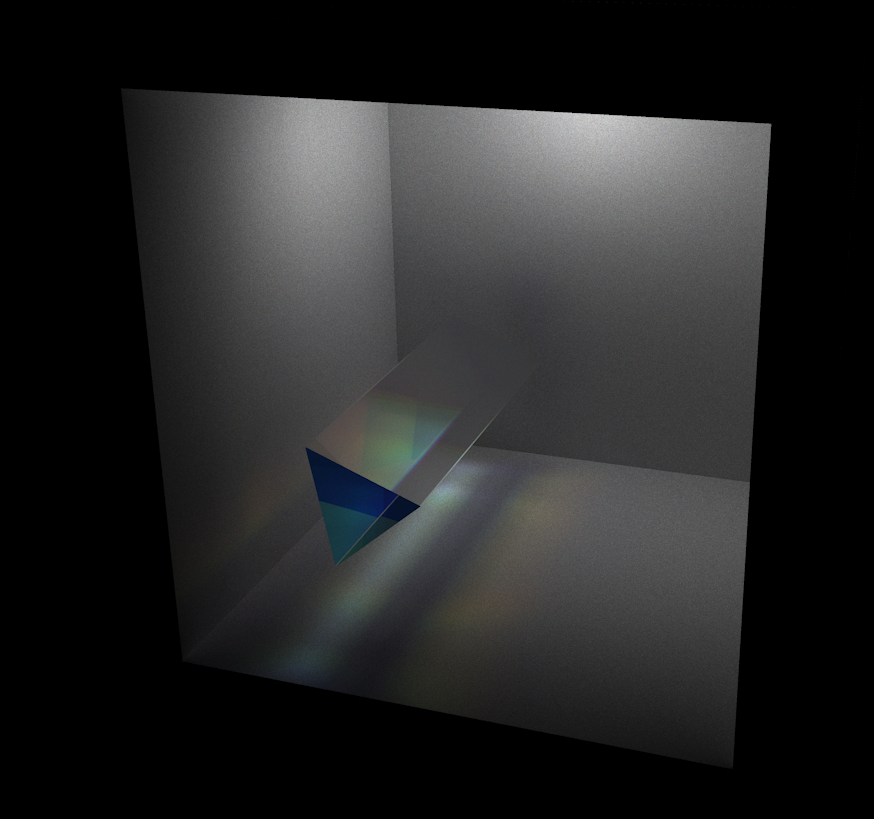

In physics, the index of refraction is usually measured with yellow light of a specific wavelength (the IOR of diamond is therefore given as 2.417 … which is enough for a standard color ray tracer). Now here is the tricky part: if you started measuring it in reality with various lasers (you need a specific wavelength to measure!), you would find that it varies. If you investigated further, you would most likely find that the dispersion of diamond is 0.044, with IOR values of 2.407 for red and 2.451 for blue (towards the two ends of the visible spectrum). In terms of physics this gets complicated quite fast (different wavelengths travel at different speeds through a medium). This relation is approximated empirically by Cauchy's equation.
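To make the Cauchy relation concrete, here is a small Python sketch that fits the two-term form n(λ) = A + B/λ² to the two diamond values quoted above. It assumes those values were measured at the Fraunhofer B (686.7 nm) and G (430.8 nm) lines, which is where gemology usually quotes the 0.044 dispersion figure; the function names are my own.

```python
# Two-term Cauchy equation: n(lambda) = A + B / lambda^2, with lambda in micrometres.

def fit_cauchy(lam1_um, n1, lam2_um, n2):
    """Solve for (A, B) from two (wavelength, IOR) measurements."""
    inv1 = 1.0 / (lam1_um * lam1_um)
    inv2 = 1.0 / (lam2_um * lam2_um)
    b = (n2 - n1) / (inv2 - inv1)
    a = n1 - b * inv1
    return a, b


def cauchy_ior(a, b, lam_um):
    """Evaluate the fitted IOR at a wavelength given in micrometres."""
    return a + b / (lam_um * lam_um)


# ASSUMPTION: 2.407 (red) and 2.451 (blue) are taken at the Fraunhofer B and G lines.
A, B = fit_cauchy(0.6867, 2.407, 0.4308, 2.451)
n_sodium_d = cauchy_ior(A, B, 0.5893)  # yellow reference wavelength, 589.3 nm
```

Evaluated at the sodium D line (589.3 nm), the fit gives roughly 2.417, consistent with the single IOR usually quoted for diamond. The two-term Cauchy form is only an empirical approximation; the Sellmeier equation is the more accurate alternative if you need it.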

You can build a spectral ray tracer that takes this into account. The convergence rate will drop massively (of course), but you can generate effects like dispersion (chromatic aberration), and therefore simulate lens imperfections.
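As a rough illustration of where the dispersion comes from, the sketch below (Python, hypothetical helper names) refracts the same incoming direction with the red and blue diamond IORs quoted above. refract() uses the same formula as GLSL's built-in refract(), except that it returns None on total internal reflection instead of a zero vector. In a spectral tracer, each path would carry its own wavelength and look up its IOR before calling it.

```python
import math


def refract(i, n, eta):
    """Same formula as GLSL refract(): i = unit incident direction, n = unit normal
    facing against i, eta = n_outside / n_inside. Returns None on total internal reflection."""
    d = sum(a * b for a, b in zip(n, i))
    k = 1.0 - eta * eta * (1.0 - d * d)
    if k < 0.0:
        return None
    s = eta * d + math.sqrt(k)
    return tuple(eta * a - s * b for a, b in zip(i, n))


def refracted_angle_deg(ior, incidence_deg):
    """Angle from the normal of a ray entering a medium of the given IOR from air."""
    t = math.radians(incidence_deg)
    i = (math.sin(t), -math.cos(t), 0.0)  # heading down onto the surface
    n = (0.0, 1.0, 0.0)                   # surface normal pointing up
    r = refract(i, n, 1.0 / ior)
    return math.degrees(math.acos(min(1.0, -r[1])))


red = refracted_angle_deg(2.407, 45.0)   # diamond IOR quoted for red
blue = refracted_angle_deg(2.451, 45.0)  # diamond IOR quoted for blue
```

At 45° incidence, red refracts at about 17.08° from the normal and blue at about 16.77°, so blue bends roughly 0.3° more. Repeated over several refractions, that small split is what spreads white light into colors.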

…

In short - what you need are functions to convert RGB to wavelength, and wavelength to RGB.
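For the wavelength to RGB direction, which also covers collapsing 10 or 20 channels back down to 3, a common approach is to weight each channel by the CIE color matching functions, sum to XYZ, and then convert XYZ to linear sRGB with a fixed matrix. The sketch below assumes the channels are point samples at evenly spaced wavelengths, and it uses the multi-lobe Gaussian approximation of the CIE 1931 matching functions by Wyman, Sloan and Shirley (2013) instead of the tabulated data; double-check the coefficients against that paper before relying on them. The channel layout is hypothetical, and the RGB to wavelength direction (spectral upsampling) is harder and not covered here.

```python
import math


def _g(lam, mu, s1, s2):
    """Piecewise Gaussian: width s1 left of the peak mu, s2 right of it."""
    s = s1 if lam < mu else s2
    t = (lam - mu) / s
    return math.exp(-0.5 * t * t)


def cmf_xyz(lam_nm):
    """Approximate CIE 1931 2-degree colour matching functions (x-bar, y-bar, z-bar)."""
    x = (1.056 * _g(lam_nm, 599.8, 37.9, 31.0)
         + 0.362 * _g(lam_nm, 442.0, 16.0, 26.7)
         - 0.065 * _g(lam_nm, 501.1, 20.4, 26.2))
    y = (0.821 * _g(lam_nm, 568.8, 46.9, 40.5)
         + 0.286 * _g(lam_nm, 530.9, 16.3, 31.1))
    z = (1.217 * _g(lam_nm, 437.0, 11.8, 36.0)
         + 0.681 * _g(lam_nm, 459.0, 26.0, 13.8))
    return x, y, z


def spectrum_to_xyz(wavelengths_nm, values):
    """Project N spectral channels onto XYZ, normalised so a flat spectrum of 1.0 gives Y = 1."""
    X = Y = Z = y_sum = 0.0
    for lam, v in zip(wavelengths_nm, values):
        xb, yb, zb = cmf_xyz(lam)
        X += v * xb
        Y += v * yb
        Z += v * zb
        y_sum += yb
    return X / y_sum, Y / y_sum, Z / y_sum


def xyz_to_linear_srgb(X, Y, Z):
    """Standard XYZ -> linear sRGB (D65) matrix; apply the sRGB transfer curve for display."""
    r = 3.2404542 * X - 1.5371385 * Y - 0.4985314 * Z
    g = -0.9692660 * X + 1.8760108 * Y + 0.0415560 * Z
    b = 0.0556434 * X - 0.2040259 * Y + 1.0572252 * Z
    return r, g, b


# Hypothetical layout: 10 channels spread evenly across 400..700 nm.
channels = [400.0 + i * (300.0 / 9) for i in range(10)]
```

Negative components mean the spectrum lies outside the sRGB gamut; clamping them and applying the sRGB transfer curve is the usual last step before display.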

Here is an example of a 2D path tracer showing the effect: 2d spectral ray tracer (shadertoy.com)

…

This is the proper way to handle dispersion rendering. If I'm not mistaken, the PBRT book has a section on this (at least the v2 edition I have does; I can't tell for v3 or v4, but I believe they still cover it).

taby said:

BTW, what is needed for denoising? A normals image and a depth image (as well as the colour image)?

It depends (I know, this is an annoying answer, but it's often quite a bit more complicated than one would imagine).

First, there are filtering techniques such as Gaussian, bilateral, À-Trous, guided and median filters. These blur the image, usually guided by additional feature buffers (in most cases a G-buffer for primary rays): normals, albedo, depth (position) … Some also use reprojected path length, view position or first-bounce data, which can retain more information. You often lose high-frequency information, which can cause major differences from the ground truth in highlights and shadows.
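To show how the feature buffers steer such a filter, here is a minimal Python sketch of one À-Trous pass on a greyscale image. The normal weight max(0, n_p·n_q)^σn follows the SVGF paper; the depth weight is a simplified assumption (SVGF scales the depth difference by the screen-space depth gradient instead), and the parameter values are only illustrative. Running several passes with step = 1, 2, 4, … widens the footprint cheaply.

```python
import math

# 5-tap B3-spline kernel used by the a-trous wavelet filter.
KERNEL = (1 / 16, 1 / 4, 3 / 8, 1 / 4, 1 / 16)


def atrous_pass(color, normal, depth, step, sigma_n=128.0, sigma_z=1.0):
    """One a-trous iteration guided by normal and depth buffers.

    color, depth: 2D lists of floats; normal: 2D list of unit 3-tuples.
    Taps are spaced `step` pixels apart; out-of-bounds taps are skipped.
    """
    h, w = len(color), len(color[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = wsum = 0.0
            for j, ky in enumerate(KERNEL):
                for i, kx in enumerate(KERNEL):
                    qx, qy = x + (i - 2) * step, y + (j - 2) * step
                    if not (0 <= qx < w and 0 <= qy < h):
                        continue
                    # Edge-stopping: down-weight neighbours on a different surface.
                    ndot = sum(a * b for a, b in zip(normal[y][x], normal[qy][qx]))
                    w_n = max(0.0, ndot) ** sigma_n
                    dz = abs(depth[y][x] - depth[qy][qx])
                    w_z = math.exp(-dz / (sigma_z * step + 1e-6))
                    wgt = kx * ky * w_n * w_z
                    acc += wgt * color[qy][qx]
                    wsum += wgt
            out[y][x] = acc / wsum  # centre tap always has weight > 0
    return out
```

Because the weights collapse to zero across a normal discontinuity, the filter smooths noise within a surface while keeping the edge between two surfaces intact.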

Machine learning has become fairly popular; it is used in OptiX, Intel Open Image Denoise (OIDN) and, of course, DLSS. I don't want to go into those too much. I have somewhat mixed feelings about machine learning approaches, especially neural networks, mainly because we have a commercial project (not launched yet, and not related to ray tracing) whose release I have had to push back a few times, with machine learning being one of the reasons. In some cases the results were more than satisfying (especially for inputs similar enough to the training set), but more random inputs cause major mismatches (and if you guessed that users will supply their own data, you guessed right; since it is going to be a paid service, errors at the level we have now are not acceptable). Sigh… Working with those is more demotivating than motivating.

The last group is sampling techniques (which include TAA, spatio-temporal filtering, ReSTIR, etc.). These reuse samples temporally (from previous frames), spatially (from the area around the current sample), or both. Their advantage over the others is that they can be unbiased.
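The simplest form of temporal reuse is blending each new sample into the pixel's reprojected history. The sketch below (Python, hypothetical names, reprojection itself left out) uses a weight of 1/(n+1), which makes it an exact running mean for a static scene; setting alpha_min above zero turns it into an exponential moving average, which reacts faster to change at the cost of some lag and bias. History is dropped when reprojection fails (disocclusion).

```python
def accumulate(history, history_len, sample, reprojection_valid=True, alpha_min=0.0):
    """Blend one new sample into a pixel's reprojected history.

    Returns the updated value and history length.
    """
    if not reprojection_valid:
        history_len = 0  # disocclusion: the old history belongs to another surface
    alpha = max(1.0 / (history_len + 1), alpha_min)
    value = history + alpha * (sample - history)
    return value, history_len + 1


value, n = 0.0, 0
for s in (1.0, 2.0, 3.0, 4.0):
    value, n = accumulate(value, n, s)
```

Feeding the samples 1, 2, 3 and 4 into a fresh pixel yields their mean, 2.5, after four frames.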

Real-world examples: Minecraft RTX uses SVGF (Spatiotemporal Variance-Guided Filtering), and Quake 2 RTX uses A-SVGF (Adaptive Spatiotemporal Variance-Guided Filtering).
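SVGF's key idea is to estimate per-pixel noise from temporally accumulated luminance moments and let that variance control how aggressively neighbours are blended. A minimal Python sketch of those two pieces follows; the names are my own, σl = 4 is the value I recall from the SVGF paper, and the spatial variance fallback for freshly disoccluded pixels is omitted.

```python
import math


def luminance(rgb):
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b  # Rec. 709 weights


def update_moments(m1, m2, rgb, alpha):
    """Temporally accumulate the first and second moments of luminance."""
    lum = luminance(rgb)
    return m1 + alpha * (lum - m1), m2 + alpha * (lum * lum - m2)


def variance_from_moments(m1, m2):
    # Var = E[l^2] - E[l]^2, clamped at zero because of floating-point error.
    return max(0.0, m2 - m1 * m1)


def luminance_weight(l_p, l_q, variance_p, sigma_l=4.0, eps=1e-6):
    """Edge-stopping weight: luminance differences are tolerated where the
    estimated noise is high and rejected where it is low."""
    return math.exp(-abs(l_p - l_q) / (sigma_l * math.sqrt(variance_p) + eps))
```

In a full filter this weight is multiplied with the normal and depth weights for each tap, and the variance itself is filtered and updated on every À-Trous iteration.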

EDIT: For reference - SVGF implementation - https://www.shadertoy.com/view/tlXfRX

My current blog on programming, linux and stuff - http://gameprogrammerdiary.blogspot.com

I added this text into the paper:

The three main visualization algorithms in contemporary graphics programming are rasterization, ray tracing, and path tracing.

The rasterizer literally converts vector graphics (generally, triangles) into raster graphics (pixels).

Generally, a depth buffer is used to decide which fragments to draw and which to discard, depending on their distance to the eye.
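A minimal Python sketch of the per-fragment depth test described above, roughly what the fixed-function test does with VK_COMPARE_OP_LESS and the depth attachment cleared to 1.0. The buffers and function name are hypothetical.

```python
def depth_test_and_write(depth_buffer, color_buffer, x, y, z, color):
    """Less-than depth test for one fragment at pixel (x, y) with depth z in [0, 1].
    The fragment is kept only if it is closer than what is already stored."""
    if z < depth_buffer[y][x]:
        depth_buffer[y][x] = z
        color_buffer[y][x] = color
        return True
    return False


WIDTH, HEIGHT = 4, 3
depth = [[1.0] * WIDTH for _ in range(HEIGHT)]   # cleared to the far plane
color = [[None] * WIDTH for _ in range(HEIGHT)]  # cleared to "nothing drawn"
```

Because the comparison happens per fragment, opaque triangles can be submitted in any order and the closest one still wins (apart from exact depth ties).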

This depth-testing approach does the job, but it is simply not as programmable as a ray tracer or path tracer.

The ray tracer does a similar job, in that it also converts triangles into pixels.

Rather than using a depth buffer, though, one generally uses a bounding volume hierarchy to find the closest eye ray/triangle intersection.
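The core operation of BVH traversal is testing a ray against each node's axis-aligned bounding box. Below is a sketch of the standard slab test in Python (function names are hypothetical). Passing the closest hit found so far as t_max lets traversal skip nodes that lie entirely behind it.

```python
import math


def inverse_direction(d):
    """Per-axis 1/d, using +/-inf for axis-parallel rays so the slab test still works."""
    return tuple(math.copysign(math.inf, c) if c == 0.0 else 1.0 / c for c in d)


def ray_aabb(origin, inv_dir, box_min, box_max, t_max=math.inf):
    """Slab test: return the distance at which the ray enters the box
    (0 if the origin is inside), or None if it misses or the box lies beyond t_max."""
    t_enter, t_exit = 0.0, t_max
    for o, inv, lo, hi in zip(origin, inv_dir, box_min, box_max):
        t_a = (lo - o) * inv
        t_b = (hi - o) * inv
        if t_a > t_b:
            t_a, t_b = t_b, t_a
        t_enter = max(t_enter, t_a)
        t_exit = min(t_exit, t_b)
        if t_enter > t_exit:
            return None
    return t_enter
```

The reciprocal direction is computed once per ray, so each box test costs only a few multiplies and comparisons, which matters because traversal runs it for many nodes per ray.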

The path tracer is identical to the ray tracer, except that it also takes global illumination (light bouncing indirectly between surfaces) into account.
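The extra work a path tracer does at every hit is picking a random bounce direction and weighting it correctly. A minimal Python sketch for a Lambertian (diffuse) surface follows, assuming cosine-weighted hemisphere sampling in a local frame where +Z is the surface normal; the names are hypothetical, and a real tracer would recurse into the scene instead of calling incoming_radiance().

```python
import math
import random


def cosine_sample_hemisphere(u1, u2):
    """Map two uniform numbers in [0, 1) to a unit direction around +Z with pdf cos(theta)/pi."""
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    z = math.sqrt(max(0.0, 1.0 - u1))
    return (r * math.cos(phi), r * math.sin(phi), z)


def diffuse_bounce_estimate(albedo, incoming_radiance, n_samples, rng):
    """Monte Carlo estimate of light reflected by a Lambertian surface.

    With cosine sampling, brdf * cos / pdf = (albedo/pi) * cos / (cos/pi) = albedo,
    so each sample simply contributes albedo * L_in(direction).
    """
    total = 0.0
    for _ in range(n_samples):
        d = cosine_sample_hemisphere(rng.random(), rng.random())
        total += albedo * incoming_radiance(d)
    return total / n_samples
```

Sampling proportionally to the cosine term cancels it out of the estimator, which reduces noise compared with uniform hemisphere sampling.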

The path tracer uses the exact same bounding volume hierarchy setup as the ray tracer.

As we will soon see, caustics occur naturally in the case of the backward path tracer in particular.

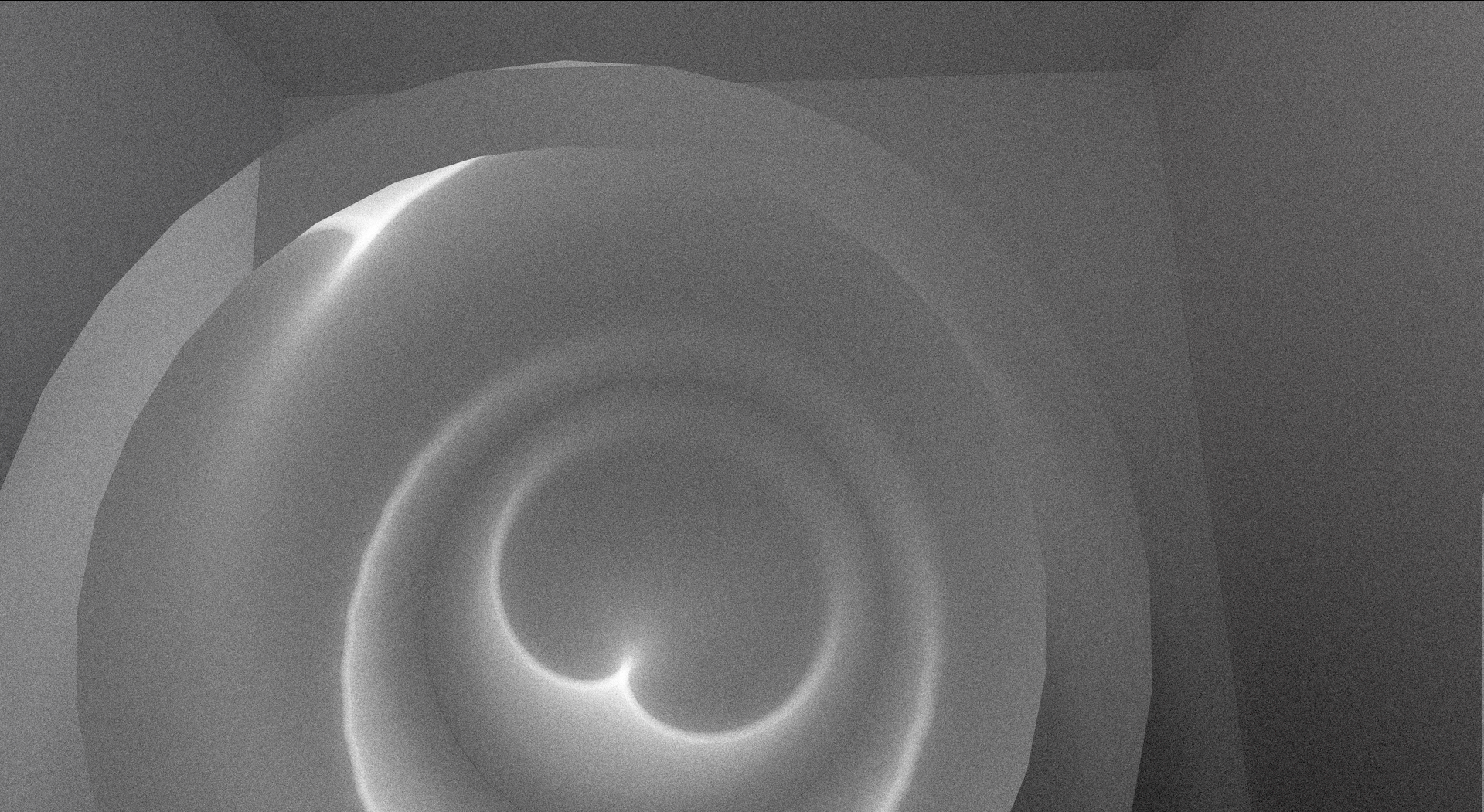

Nice sharp caustics. Like you said, shrinking the light volume causes the caustics to gain sharpness.

So, with the help of someone from the Vulkan Discord server, I implemented 6-channel colour. It's working well, but not fully tested.

Did you do full spectral rendering, or did you just add more colors?


I just added in more colours LOL. Full spectral rendering is beyond my capabilities at this time. I want to implement it bad though!

taby said:

Full spectral rendering is beyond my capabilities at this time. I want to implement it bad though!

You might be interested in this paper on hero wavelength sampling. It boils down to randomly picking a wavelength at each ray intersection and using it to control the outgoing ray (rather than tracing a separate ray for each wavelength), and then compensating the other wavelengths so that you get the correct result at convergence. All wavelengths share the same ray paths, which removes some artifacts. I use this approach for acoustic ray tracing, which requires simulating things (e.g. BRDFs) differently at different audio frequencies.
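As I understand the hero wavelength scheme, the companion wavelengths are derived from the randomly chosen hero by rotating it through the spectral range at equal spacing, so a single random number covers several wavelengths that share the same path. Below is a Python sketch of just that step; the 380 to 780 nm range and the count of four wavelengths are assumptions, and the compensation of the companions described above is not shown.

```python
LAMBDA_MIN, LAMBDA_MAX = 380.0, 780.0  # assumed visible range in nm


def hero_wavelengths(u, count=4, lo=LAMBDA_MIN, hi=LAMBDA_MAX):
    """Map one uniform random number u in [0, 1) to a hero wavelength plus
    count - 1 companions spaced evenly through [lo, hi), wrapping around."""
    span = hi - lo
    hero = lo + u * span
    return [lo + ((hero - lo) + j * span / count) % span for j in range(count)]
```

In a tracer, directional decisions along the path would be made with the first entry (the hero), while the others ride along on the same path and carry their own throughput.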

Hmm. I am wondering if there is just a simple way of doing this using the HSV colour space.