Right. So my approach (gotten with the help of many people) is sound, sort of. Still working on it. 🙂

Thanks for the comments, y'all.

taby said:

OK, one big problem: my code does not specify a light source, not even once.

In naive path tracing that's generally not a big problem (as was mentioned, it doesn't require any light definitions at all, only material emissivity), but the moment you start making improvements for better convergence (explicit sampling, also known as next event estimation, being the most common), you NEED to know your lights. The big question is: what are the lights? And that boils down to how you define your scene.

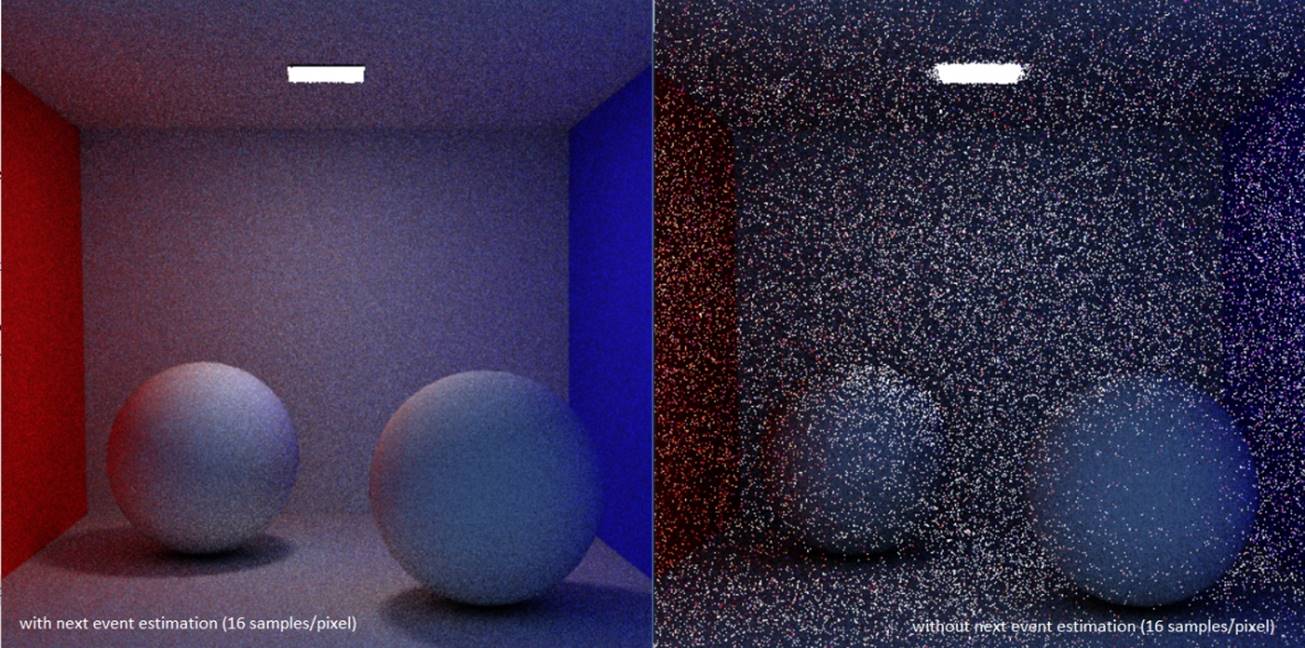

The main difference between naive path tracing and the explicit one is that the explicit one needs just a few samples per pixel to produce an already quite smooth image:

With some improvements, explicit path tracing can therefore produce almost smooth images within just a few samples per pixel (thus you can achieve real-time path tracing), especially for scenes with mainly diffuse surfaces. It does require knowledge about the lights though (as it has to explicitly sample them). The terms “explicit path tracing” and “next event estimation in path tracing” are a bit confusing, but in general interchangeable.
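To make the naive vs. explicit difference concrete, here is a minimal Python sketch. It is not taken from anyone's code in this thread, and every name and constant in it (`naive_sample`, `explicit_sample`, `ALBEDO`, `EMISSION`, `HEIGHT`) is an assumption made up for illustration. It shades a single diffuse floor point lit by a square light directly above it. The naive sampler shoots a cosine-weighted ray and only gets a contribution if the ray happens to hit the light; the explicit sampler picks a point on the light and connects to it.

```python
import math
import random
import statistics

ALBEDO = 0.8      # assumed Lambertian reflectance of the floor point
EMISSION = 10.0   # assumed radiance emitted by the light
HEIGHT = 1.0      # the light sits this far above the shading point


def naive_sample(half, rng):
    """One naive sample: shoot a cosine-weighted ray and hope it hits the light."""
    u1, u2 = rng.random(), rng.random()
    r, phi = math.sqrt(u1), 2.0 * math.pi * u2
    dx, dy, dz = r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1)
    t = HEIGHT / dz  # distance along the ray to the plane of the light
    hit = abs(dx * t) <= half and abs(dy * t) <= half
    # BRDF * cos / pdf = (albedo / pi) * cos / (cos / pi) = albedo
    return ALBEDO * EMISSION if hit else 0.0


def explicit_sample(half, rng):
    """One explicit (NEE) sample: pick a point on the light and connect to it."""
    x, y = rng.uniform(-half, half), rng.uniform(-half, half)
    dist2 = x * x + y * y + HEIGHT * HEIGHT
    cos_surface = HEIGHT / math.sqrt(dist2)
    cos_light = cos_surface   # the light faces straight down, so the angles match
    area = (2.0 * half) ** 2  # the point was chosen with pdf 1 / area
    brdf = ALBEDO / math.pi
    return brdf * EMISSION * cos_surface * cos_light / dist2 * area


def estimate(sampler, half, spp, rng):
    """Average `spp` samples, like one pixel of a render."""
    return sum(sampler(half, rng) for _ in range(spp)) / spp


def pixel_noise(sampler, half, spp, pixels, rng):
    """Standard deviation across many independent pixels of the same point."""
    values = [estimate(sampler, half, spp, rng) for _ in range(pixels)]
    return statistics.pstdev(values)


if __name__ == "__main__":
    rng = random.Random(1)
    for half in (0.5, 0.05):
        print("light half-size", half,
              "naive noise", pixel_noise(naive_sample, half, 16, 500, rng),
              "explicit noise", pixel_noise(explicit_sample, half, 16, 500, rng))
```

Both samplers estimate the same integral, so with enough samples they agree; the difference is only in how noisy a 16-sample pixel is. There is no occlusion test here, which a real renderer would need as a shadow ray in `explicit_sample`.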

taby said:

So, even though I have caustics working

Caustics will work in path tracers by default. The catch is that the caustics work properly and are visible in your test because your light is large enough and you can do a ridiculous amount of samples. If you look at smallpt (https://www.kevinbeason.com/smallpt/), that's a very nice reference path tracer. You can clearly see caustics working there too.

The main problem is the convergence rate for caustics: for naive path tracing, or even the explicit one, it is quite low. You have to throw thousands and thousands of samples at it to get them right for larger lights, and orders of magnitude more when your light is small.
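A rough back-of-the-envelope model of why light size matters so much (an idealization, not a measurement from any renderer in this thread): assume each path that forms the caustic reaches the light with probability $p$ and otherwise contributes nothing. Averaging $N$ such samples per pixel gives a relative noise of

$$\frac{\sigma}{\mu} = \sqrt{\frac{1 - p}{N\,p}}$$

so for small $p$, holding the noise at a fixed level needs $N \propto 1/p$. Since $p$ shrinks roughly with the apparent area of the light, a light with 100 times less area needs on the order of 100 times more samples under this model.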

There are extensions, like bi-directional path tracing, that help convergence a bit, at the cost of algorithm complexity.

My current blog on programming, linux and stuff - http://gameprogrammerdiary.blogspot.com

I don't think that it's quite understood that the caustics functionality does not take a long time to converge, whatever that may mean. Perhaps the method that I used is novel.

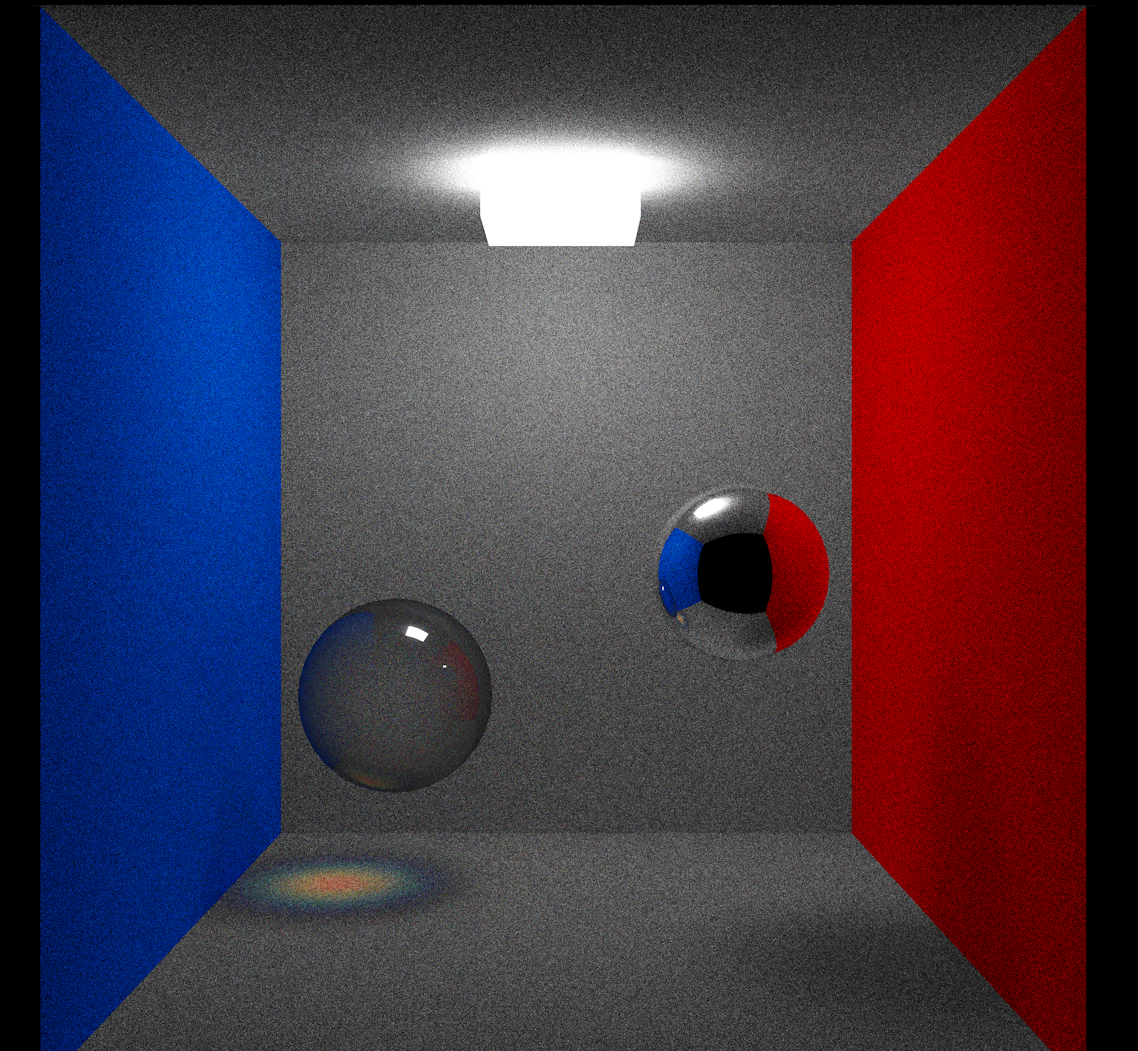

Here is a screenshot with caustics. I used 16 samples per pixel. This small image was downsized from the original 7000x5000 image, using bicubic sampling, to reduce the noise:

You should be very careful about declaring that the results look correct. With global illumination algorithms it's very easy to convince yourself that your result is correct, only to realize later that there is a serious bug.

The reason you may be seeing good convergence with caustics is that you are not evaluating the rendering equation accurately in some way. For instance, if you don't include the cosine term that multiplies the BRDF, contributions from glancing angles will be too strong. This can make images seem less noisy, but in the end the results are still wrong. If you don't divide by the PDF of your sampling in the color calculation, your results will be wrong. If you don't have color bleeding, then there is clearly a serious problem in your implementation. Your code should be structured like the example I posted, particularly in how it accumulates the pixel color. If it's not like that, it's probably wrong.
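A small sanity check along these lines, as a sketch rather than anything from the code under discussion: the irradiance from a uniform sky of radiance 1 is exactly pi, so an estimator can be checked against a known answer. The function name `irradiance` and its two flags are assumptions invented for this illustration; the flags switch off the cosine term and the PDF division to show what each omission does.

```python
import math
import random


def irradiance(n, rng, use_cosine=True, use_pdf=True):
    """Estimate E = integral of L * cos(theta) over the hemisphere, with L = 1.

    Directions are sampled uniformly over the hemisphere. For that sampling,
    cos(theta) is uniform in [0, 1) and the pdf is 1 / (2 * pi).
    """
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(n):
        cos_theta = rng.random()  # uniform hemisphere sampling
        value = 1.0               # incoming radiance L
        if use_cosine:
            value *= cos_theta    # the cosine term of the rendering equation
        if use_pdf:
            value /= pdf          # compensate for how the direction was chosen
        total += value
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    print("correct      :", irradiance(100000, rng), "expected", math.pi)
    print("no cosine    :", irradiance(100000, rng, use_cosine=False))
    print("no pdf       :", irradiance(100000, rng, use_pdf=False))
```

Without the cosine, every sample evaluates to exactly 2 * pi, so the result has no noise at all and is still wrong by a factor of two, which is the "looks less noisy but is incorrect" trap. Without the PDF division the estimate settles near 0.5, which is not the integral either.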

To verify your results you really need to compare to a reference implementation which is known to be correct. It should match perfectly, and if not, then you have a bug somewhere.

You really need to understand how the algorithms work at a deep level in order to get things right. Global illumination is hard. Don't expect it to work correctly by just hacking together some code from random places on the internet, which is the approach you seem to be taking. There are no shortcuts.

You’re right that there are things that need to be done to make the renderer more accurate, and that in order to do so, I will need to learn the pertinent algorithms better. The caustics are pixel-perfect because the algorithm is perfect. All it does is trace a path, based on the wavelength-dependent index of refraction, like it should. As JoeJ stated in an otherwise private email, my direct lighting is not being calculated properly. I hope that, in the end, it’s an easy fix.

https://github.com/sjhalayka/cornell_box_textured/blob/main/raygen.rgen

P.S. I resent the insinuation that the codes that I based mine off of are all pieces of junk. You have to start somewhere.

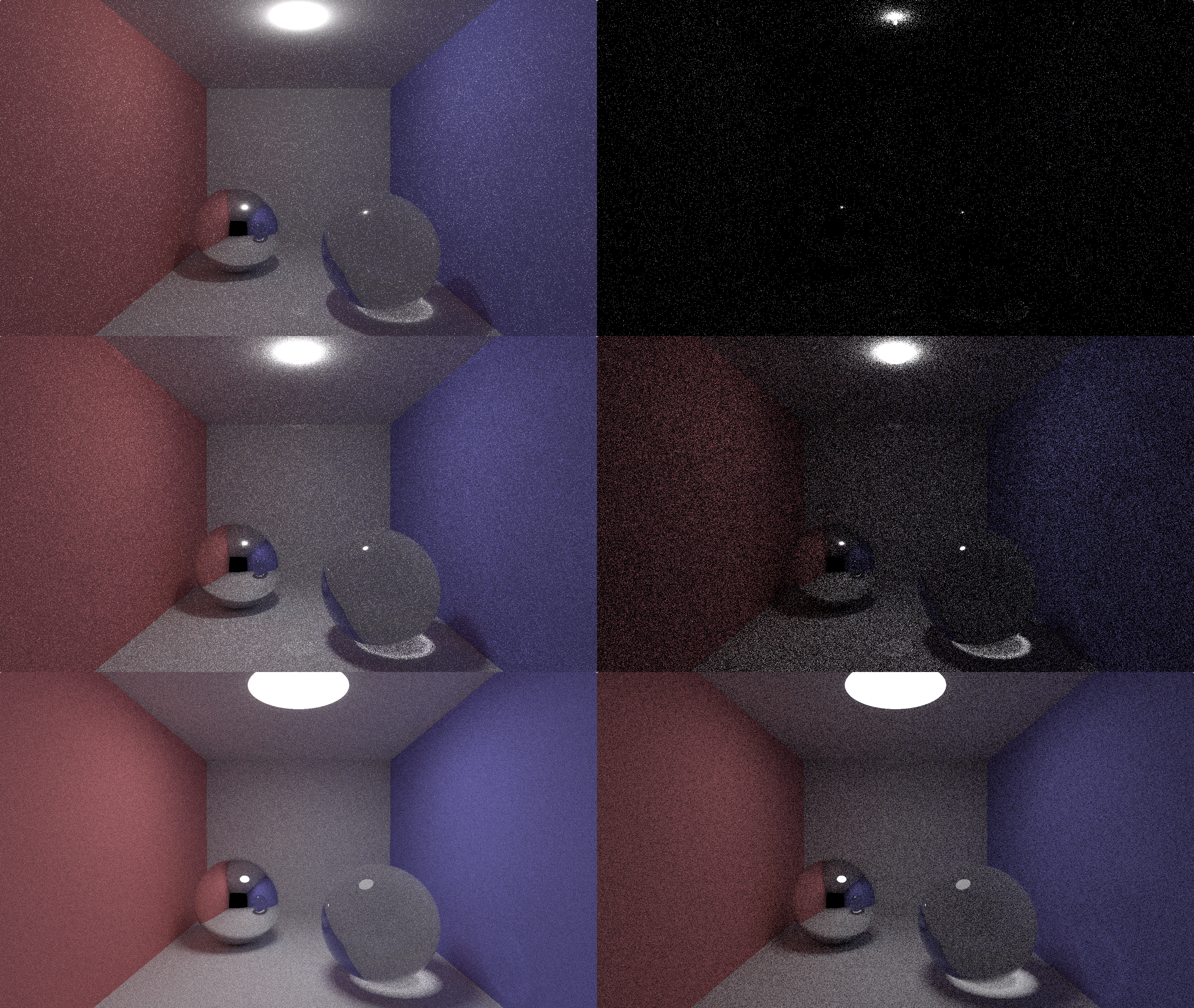

Okay - let me demonstrate. I ran 2 variants (one with naive path tracing, one with explicit (next event estimation) path tracing). The next image is large (beware) - sorry for posting this monstrosity:

So… you should be able to clearly see the impact of 2 things: explicit sampling vs. light size, and caustics noise vs. light size. The bigger the light, the better the caustics. Using naive vs. explicit has a major impact on the scene, but not really that big an impact on the quality of the caustics (unless the light is too small!). The only way you could get better convergence for caustics would be to use bi-directional path tracing (and I'd dare to say Metropolis light transport would be better than the plain Monte Carlo approach, especially for caustics - but that's from experience).

Sadly, I don't have any equivalent/similar path tracer implementations that can be changed as easily as smallpt in Shadertoy and that implement bi-directional path tracing with either Metropolis light transport or Monte Carlo. So I can't quickly provide a comparison to those.

Thank you for the demonstration. I am still hesitant to use a model that specifies a light position. This way any kind of light can be made… bunny-shaped for instance.

In any case, I hope that it's clear that the caustics use one ray path per pixel, which is why it's so fast and accurate. There's no extra sampling/convergence involved, nor is backward/bi-directional path tracing required.

taby said:

This way any kind of light can be made… bunny-shaped for instance.

You can still have explicit lights with arbitrary shape, you just need a function to sample a random point on the light source, like in the pseudocode I posted earlier. There are efficient ways to do this for triangle meshes and other shapes (e.g. spheres).
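One common way to write such a sampling function for a triangle mesh, sketched in Python under my own assumptions (the class name `MeshLight` and its methods are hypothetical, not from the code in this thread): pick a triangle with probability proportional to its area, then pick a uniform point inside it. The resulting density is 1 / total area for every point on the mesh, bunny-shaped or not.

```python
import bisect
import math
import random


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def triangle_area(a, b, c):
    n = _cross(_sub(b, a), _sub(c, a))
    return 0.5 * math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)


class MeshLight:
    """Hypothetical emissive mesh given as a list of (a, b, c) vertex triples."""

    def __init__(self, triangles):
        self.triangles = list(triangles)
        self.cumulative_area = []
        running = 0.0
        for a, b, c in self.triangles:
            running += triangle_area(a, b, c)
            self.cumulative_area.append(running)
        self.total_area = running

    def sample(self, rng):
        """Return (point, pdf) with the point uniform over the mesh surface."""
        # Choose a triangle with probability proportional to its area.
        pick = rng.random() * self.total_area
        index = bisect.bisect_right(self.cumulative_area, pick)
        index = min(index, len(self.triangles) - 1)  # guard against rounding
        a, b, c = self.triangles[index]
        # Uniform point inside the triangle via square-root barycentrics.
        su = math.sqrt(rng.random())
        v = rng.random()
        w_a, w_b, w_c = 1.0 - su, su * (1.0 - v), su * v
        point = tuple(w_a * a[k] + w_b * b[k] + w_c * c[k] for k in range(3))
        return point, 1.0 / self.total_area


if __name__ == "__main__":
    square = [((0, 0, 0), (1, 0, 0), (1, 1, 0)),
              ((0, 0, 0), (1, 1, 0), (0, 1, 0))]
    light = MeshLight(square)
    print(light.sample(random.Random(5)))
```

The returned pdf is with respect to area, so the caller still has to apply the usual cosine and squared-distance factors when connecting the shading point to the sampled point.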