So I have a program that renders a few models and some tessellated terrain, and lets the user navigate through it with the WASD keys (R and F for vertical movement) and a mouselook camera. It also renders two triangles covering the front of the screen, which I'm using as the basis for some post-processing raymarching. In addition, I render a depth buffer to a framebuffer texture from the player's camera, so I know how far each pixel's ray must be cast. For reference, here's the part of my fragment shader that deals with the raymarching:

```glsl
// Convert the [0, 1] depth-buffer value to eye-space distance along the view axis
float LinearizeDepth(in vec2 uv)
{
    float zNear = 0.1;  // TODO: Replace by the zNear of your perspective projection
    float zFar = 250.0; // TODO: Replace by the zFar of your perspective projection
    float depth = texture2D(texture0, uv).x;
    float z_n = 2.0 * depth - 1.0;
    float z_e = 2.0 * zNear * zFar / (zFar + zNear - z_n * (zFar - zNear));
    return z_e;
}

// Signed distance to a unit sphere centred at the origin
float mapSphere(vec3 eyePos)
{
    return length(eyePos) - 1.0;
}

// Minimum distance over a 5x5 grid of unit spheres spaced 10 units apart
float doMap(vec3 eyePos)
{
    float total = 999999.0;
    for (int x = 0; x < 5; x++)
    {
        for (int y = 0; y < 5; y++)
        {
            eyePos += vec3(x * 10, y * 10, 0);
            total = min(total, mapSphere(eyePos));
            eyePos -= vec3(x * 10, y * 10, 0);
        }
    }
    return total;
}
```

Above: Some functions to help set things up.
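Because GLSL can't be stepped through outside the GPU, a CPU-side transcription of these helpers can make them easier to sanity-check. The C++ below is a minimal sketch that mirrors `LinearizeDepth` (taking the raw depth value instead of sampling `texture0`, and assuming the default `glDepthRange(0, 1)`), `mapSphere`, and `doMap`. The `Vec3` struct and the lowercase function names are hypothetical stand-ins, not part of the original shader.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal stand-in for GLSL's vec3 (hypothetical helper type).
struct Vec3 {
    float x, y, z;
};

float length(Vec3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// LinearizeDepth's formula, taking the raw depth-buffer value directly.
// Assumes glDepthRange(0, 1) and a standard OpenGL perspective projection
// built from the same zNear / zFar.
float linearizeDepth(float depth, float zNear, float zFar) {
    assert(zNear > 0.0f && zFar > zNear);
    float z_n = 2.0f * depth - 1.0f;  // window depth [0, 1] -> NDC [-1, 1]
    return 2.0f * zNear * zFar / (zFar + zNear - z_n * (zFar - zNear));
}

// Signed distance to a unit sphere at the origin (same as mapSphere).
float mapSphere(Vec3 p) { return length(p) - 1.0f; }

// Same 5x5 loop as doMap. Adding (10x, 10y, 0) to the sample point
// places the sphere centres at (-10x, -10y, 0).
float doMap(Vec3 p) {
    float total = 999999.0f;
    for (int x = 0; x < 5; ++x) {
        for (int y = 0; y < 5; ++y) {
            Vec3 shifted{p.x + 10.0f * x, p.y + 10.0f * y, p.z};
            total = std::min(total, mapSphere(shifted));
        }
    }
    return total;
}
```

For example, `linearizeDepth(0.0f, 0.1f, 250.0f)` gives back `zNear` and a depth of `1.0f` gives back `zFar` (up to float rounding), and `doMap({0.0f, 0.0f, 5.0f})` is `4.0f`: 5 units from the sphere at the origin minus its radius of 1.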

Below: The part of main that deals with the two triangles applied to the screen.

```glsl
if (skyDrawingSky == 1)
{
    // vert is the 2D position (-1 to 1) of the two screen-covering triangles used for the raymarching shader
    vec2 uv = (vert.xy + 1.0) * 0.5;

    // Get this pixel's depth from the depth buffer rendered to texture0
    float depth = LinearizeDepth(uv);

    vec4 r = normalize(vec4(-vert.x, -vert.y, 1.0, 0.0));
    r = r * camAngleMain;
    // I thought adding a third dimension and then normalizing would be equivalent to a perspective matrix,
    // at which point I just multiply those 'perspective coordinates' by the inverse of the
    // camera view matrix that I use for the polygonal graphics.

    vec3 rayDirection = r.xyz;
    vec3 rayOrigin = -camPositionMain;

    float distance = 0.0;
    float total = 0.0;
    for (int i = 0; i < 64; i++)
    {
        vec3 pos = rayOrigin + rayDirection * distance;
        float value = doMap(pos);
        distance += value;
        if (distance >= depth)
            break;
        else
            total += clamp(1.0 - value, 0.0, 1.0);
    }

    color = vec4(1, 1, 1, total);
    return;
}
```
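The ray setup and marching loop can be transcribed the same way to trace a single pixel on the CPU. This sketch assumes an identity `camAngleMain` (camera looking down +z, as in the shader's `(x, y, 1)` construction) and replaces `doMap` with a hypothetical single-sphere scene, `unitSphere`; `cameraRay` and `march` are illustrative names only.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }
float length(Vec3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

Vec3 normalize(Vec3 v) {
    float len = length(v);
    assert(len > 0.0f);
    return v * (1.0f / len);
}

// Camera-space ray for a screen position vert in [-1, 1]^2, built like the
// shader: normalize(vec3(-vert.x, -vert.y, 1.0)). The camAngleMain rotation
// is omitted, i.e. an identity camera is assumed.
Vec3 cameraRay(float vx, float vy) { return normalize({-vx, -vy, 1.0f}); }

// Hypothetical test scene: one unit sphere at the origin.
float unitSphere(Vec3 p) { return length(p) - 1.0f; }

// The shader's loop: advance by the distance estimate, stop once the distance
// travelled along the ray reaches `depth`, and accumulate clamp(1 - value, 0, 1).
float march(Vec3 origin, Vec3 dir, float depth, float (*map)(Vec3)) {
    float distance = 0.0f;
    float total = 0.0f;
    for (int i = 0; i < 64; ++i) {
        Vec3 pos = origin + dir * distance;
        float value = map(pos);
        distance += value;
        if (distance >= depth)
            break;
        total += std::min(std::max(1.0f - value, 0.0f), 1.0f);
    }
    return total;
}
```

A ray from `(0, 0, -5)` along +z lands exactly on the sphere after one step; every remaining iteration then adds 1 to `total`, so `march` returns 63 with a far `depth`, and 0 when `depth` is 3 because the loop exits before reaching the sphere.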

Edit: changing the raymarching camera GLSL code to:

```glsl
vec4 r = normalize(vec4(vert.x, vert.y, 1.0, 0.0));
r = r * camProjectionMain;
r = r * camAngleMain;
vec3 rayDirection = r.xyz;
vec3 rayOrigin = camPositionMain;
```

doesn't really change anything, but it's easier to read.
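Both matrix lines in the edit multiply with the vector on the left. In GLSL, `v * M` treats `v` as a row vector, so component `i` of the result is `dot(v, M[i])`, where `M[i]` is column `i`; this equals `transpose(M) * v`. For a pure rotation the transpose is the inverse (assuming `camAngleMain` holds only the camera's rotation), but for a perspective projection matrix it is not. With `w = 0`, the `.xyz` of a `vec4 * mat4` product depends only on the upper-left 3x3 block, so the sketch below uses a column-major 3x3 matrix laid out like a GLSL `mat3`; `Mat3`, `mulMatVec`, and `mulVecMat` are hypothetical helpers.

```cpp
#include <cassert>

struct Vec3 {
    float x, y, z;
};

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Column-major 3x3 matrix, laid out like a GLSL mat3: col[i] is column i.
struct Mat3 {
    Vec3 col[3];
};

// GLSL `M * v`: v is a column vector, result = sum of v[i] * column i.
Vec3 mulMatVec(const Mat3& m, Vec3 v) {
    return {m.col[0].x * v.x + m.col[1].x * v.y + m.col[2].x * v.z,
            m.col[0].y * v.x + m.col[1].y * v.y + m.col[2].y * v.z,
            m.col[0].z * v.x + m.col[1].z * v.y + m.col[2].z * v.z};
}

// GLSL `v * M`: v is treated as a row vector, so result[i] = dot(v, column i).
// This equals transpose(M) * v; for a pure rotation that is the inverse rotation.
Vec3 mulVecMat(Vec3 v, const Mat3& m) {
    return {dot(v, m.col[0]), dot(v, m.col[1]), dot(v, m.col[2])};
}
```

With a 90° rotation about z, `mulMatVec` sends `(1, 2, 3)` to `(-2, 1, 3)`, and `mulVecMat` with the same matrix sends it back to `(1, 2, 3)`.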

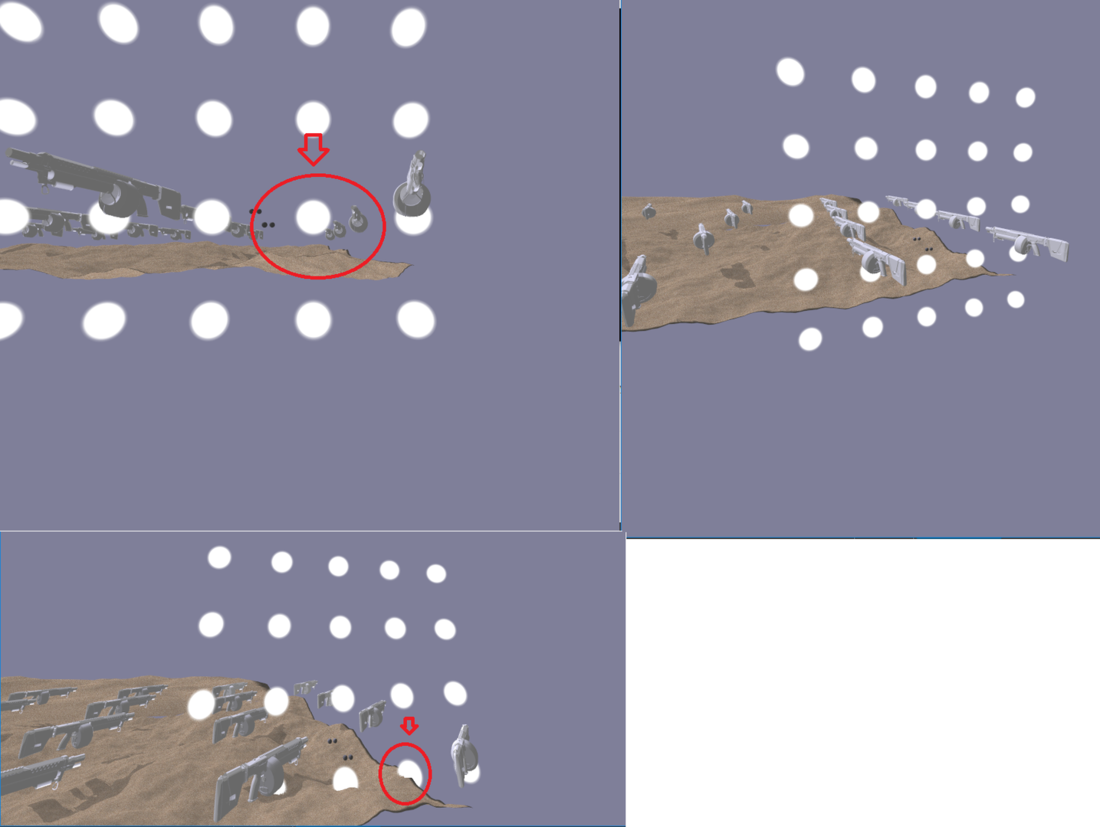

My main problem is that, for some reason, panning the camera vertically distorts objects vertically as they approach the top and bottom of the screen. In the attached image, the white orbs are drawn by the raymarching shader; everything else is tessellated/polygonal geometry. In the first two images, the orbs are clearly above or below the sand-colored heightmap. In the last image, the orbs are slightly out of position, so they now clip through the terrain. I can't figure out why. My camera seems to *almost* work, but not quite.

My aspect ratio is currently a perfect square (1:1), and I multiply my raymarching screen coordinates (2D, from -1 to 1) by the inverse of my camera view matrix, so I don't understand why this happens. Is there anything I'm doing wrong in my implementation?
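Two relations can be checked numerically when comparing raymarched ray directions against rasterized geometry. First, `normalize(vec3(x, y, 1.0))` puts the top and bottom screen edges (`y = ±1`) 45° off the view axis, which matches a symmetric perspective projection with a 90° vertical field of view at a 1:1 aspect ratio, because tan(45°) = 1; for another field of view, x and y are scaled by tan(fovy / 2). Second, `LinearizeDepth` returns depth measured along the view axis, whereas `distance` in the loop is measured along the normalized ray, and the two only coincide at the centre of the screen. The sketch below assumes a symmetric projection and keeps the shader's +z forward axis; `rayForFov` and `rayLengthForEyeDepth` are hypothetical helpers.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return {v.x / len, v.y / len, v.z / len};
}

// Camera-space ray through screen position (x, y) in [-1, 1]^2 for a symmetric
// perspective projection with vertical field of view fovy (radians) and
// aspect = width / height. Keeps the +z forward axis of the shader's
// (x, y, 1) construction; OpenGL eye space normally looks down -z.
Vec3 rayForFov(float x, float y, float fovy, float aspect) {
    float t = std::tan(0.5f * fovy);
    return normalize({x * aspect * t, y * t, 1.0f});
}

// A point at eye-space depth zEye (measured along the view axis, as
// LinearizeDepth returns) lies this far along the normalized ray dir.
float rayLengthForEyeDepth(float zEye, Vec3 dir) {
    assert(dir.z > 0.0f);
    return zEye / dir.z;
}
```

At the top edge of a 90° view, for instance, a point at eye-space depth 10 lies about 14.14 units along the ray (10 × √2).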