In this blog series I write about some modern volume rendering techniques for real-time applications and why I believe their importance will grow in the future.

If you have not read part one of the series please check it out here, it is an introduction to the topic and overview of volume rendering techniques. Check it out if you haven’t already and then go on.

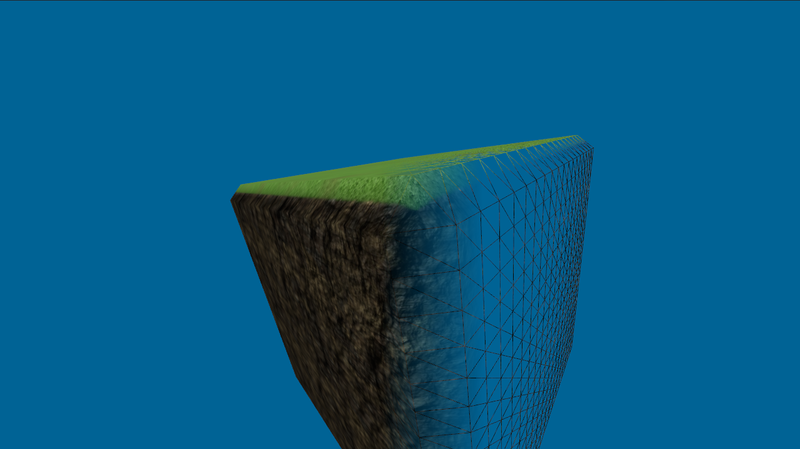

In this second post from our multi-post series on volume rendering for games, I’ll explain the technical basics that most solutions share. Through all the series I’ll concentrate on ‘realistic’, smooth rendering – not the ‘blocky’ one you can see in games like Minecraft.

Types of Techniques

Volume rendering techniques can be divided in two main categories – direct and indirect.

Direct techniques produce a 2D image from the volume representation of the scene. Almost all modern algorithms use some variation of ray-casting and do their calculations on the GPU. You can read more on the subject in the papers/techniques “Efficient Sparse Voxel Octrees” and “Gigavoxels”.

Although direct techniques produce great looking images, they have some drawbacks that hinder their wide usage in games:

- Relatively high per-frame cost. The calculations rely heavily on compute shaders and while modern GPUs have great performance with them, they are still effectively designed to draw triangles.

- Difficulty to mix with other meshes. For some parts of the virtual world we might still want to use regular triangle meshes. The tools developed for editing them are well-known to artists and moving them to a voxel representation may be prohibitively difficult.

- Interop with other systems is difficult. Most physics systems for instance require triangle representations of the meshes.

Indirect techniques on the other hand generate a transitory representation of the mesh. Effectively they create a triangle mesh from the volume. Moving to a more familiar triangle mesh has many benefits.

The polygonization (the transformation from voxels to triangles) can be done only once – on game/level load. After that on every frame the triangle mesh is rendered. GPUs are designed to work well with triangles so we expect better per-frame performance. We also don’t need to do radical changes to our engine or third-party libraries because they probably work with triangles anyway.

In all the posts in this series I’ll talk about indirect volume rendering techniques – both the polygonization process and the way we can effectively use the created mesh and render it fast – even if it’s huge.

What is a Voxel?

A voxel is the building block of our volume surface. The name ‘voxel’ comes from ‘volume element’ and is the 3D counterpart of the more familiar pixel. Every voxel has a position in 3D space and some properties attached to it. Although we can have any property we’d like, all the algorithms we’ll discuss require at least a scalar value that describes the surface. In games we are mostly interested in rendering the surface of an object and not its internals – this gives us some room for optimizations. More technically speaking we want to extract an isosurface from a scalar field (our voxels).

The set of voxels that will generate our mesh is usually parallelepipedal in shape and is called a ‘voxel grid’. If we employ a voxel grid the positions of the voxels in it are implicit.

In every voxel, the scalar we set is usually the value of the distance function at the point in space the voxel is located. The distance function is in the form f(x, y, z) = dxyz where dxyz is the shortest distance from the point x, y, z in space to the surface. If the voxel is “in” the mesh, than the value is negative.

If you imagine a ball as the mesh in our voxel grid, all voxels “in” the ball will have negative values, all voxels outside the ball positive, and all voxels that are exactly on the surface will have a value of 0.

Marching Cubes

The simplest and most widely known polygonization algorithm is called ‘Marching cubes’. There are many techniques that give better results than it, but its simplicity and elegance are still well worth looking at. Marching cubes is also the base of many more advanced algorithms and will give us a frame in which we can more easily compare them.

The main idea is to take 8 voxels at a time that form the eight corners of an imaginary cube. We work with each cube independently from all others and generate triangles in it – hence we “march” on the grid.

To decide what exactly we have to generate, we use just the signs of the voxels on the corners and form one of 256 cases (there are 2^8 possible cases). A precomputed table of those cases tells us which vertices to generate, where and how to combine them in triangles.

The vertices are always generated on the edges of the cube and their exact position is computed by interpolating the values in the voxels on the corners of that edge.

I’ll not go into the details of the implementation – it is pretty simple and widely available on the Internet, but I want to underline some points that are valid for most of the MC-based algorithms.

- The algorithm expects a smooth surface. Vertices are never created inside a cube but always on the edges. If a sharp feature happens to be inside a cube (very likely) then it will be smoothed out. This makes the algorithm good for meshes with more organic forms – like terrain, but unsuitable for surfaces with sharp edges like buildings. To produce a sufficiently sharp feature you’d need a very high resolution voxel grid which is usually unfeasible.

- The algorithm is fast. The very difficult calculation of what triangles should be generated in which case is pre-computed in a table. The operations on each cube itself are very simple.

- The algorithm is easily parallelizable. Each cube is independent of the others and can be calculated in parallel. The algorithm is in the family “embarrassingly parallel”.

After marching all the cubes, the mesh is composed of all the generated triangles.

Marching cubes tends to generate many tiny triangles. This can quickly become a problem if we have large meshes.

If you plan to use it in production, beware that it doesn’t always produce ‘watertight’ meshes – there are configurations that will generate holes. This is pretty unpleasant and is fixed by later algorithms.

In the next series I’ll discuss what are the requirements of a good volume rendering implementation for a game in terms of polygonization speed, rendering performance and I’ll look into ways to achieve them with more advanced techniques.

References

Cyril Crassin, Fabrice Neyret, Sylvain Lefebvre, Elmar Eisemann. 2009.

GigaVoxels : Ray-Guided Streaming for Efficient and Detailed Voxel Rendering.

Samuli Laine, Tero Karras. 2010.

Efficient Sparse Voxel Octrees.

Paul Bourke, 1994,

About the Author(s)

Stoyan Nikolov is co-founder and lead software engineer at Coherent Labs - Next-Gen game UI middleware (www.coherent-labs.com)

I don't want to be the one who always points out something for the hell of it, but isn't the web already filled with general description of algorithms ? Gamedev is visited by advanced users who know how to look for general information, from Gamedev i expect indepth code analysis.

From a voxel tutorial i want to know how i can render zillion of voxels with my gpu, leave shallow tutorials for another site, there are far too many around already.