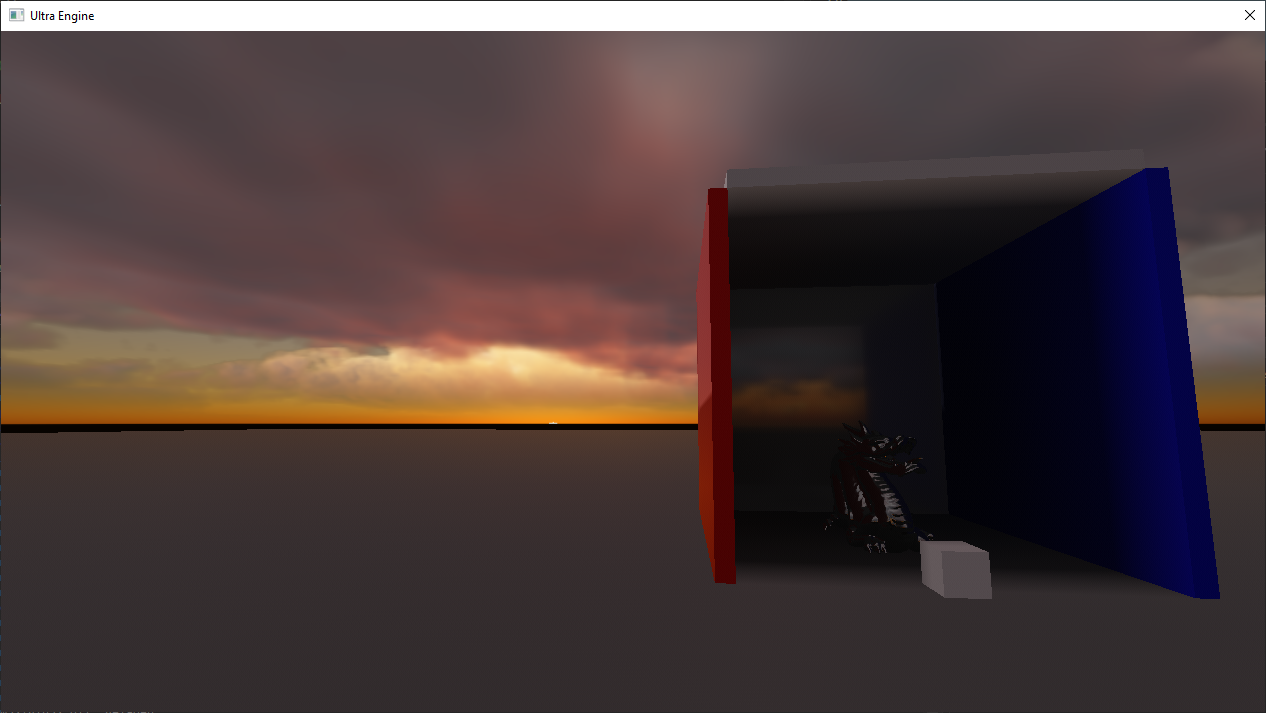

I'm looking for a way to handle indirect lighting of dynamic objects on low-end hardware (integrated graphics and GPUs whose model numbers end in 50). I have used hand-placed environment probe volumes in the past with pretty good results. Each one is a box of arbitrary dimensions, and any object inside the box is lit using a cubemap rendered from the center of that box. The idea is to put one volume in each room, with its edges touching the inside walls, which gives an okay approximation of diffuse and specular reflection.
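A minimal sketch of the per-object lookup described above, assuming axis-aligned boxes. Where boxes overlap or share a wall, it picks the smallest containing box; that tie-break is an assumption, not part of the approach as described. `ProbeVolume`, `cubemap_id` and `select_volume` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ProbeVolume:
    """Hand-placed axis-aligned box with a cubemap captured at its center."""
    min_corner: Vec3
    max_corner: Vec3
    cubemap_id: int  # hypothetical handle to the pre-rendered cubemap

    def contains(self, p: Vec3) -> bool:
        # Inside (or on the surface of) the box on every axis.
        return all(lo <= c <= hi for lo, c, hi in zip(self.min_corner, p, self.max_corner))

    def size(self) -> float:
        # Box volume, used only to break ties between overlapping boxes.
        dx, dy, dz = (hi - lo for lo, hi in zip(self.min_corner, self.max_corner))
        return dx * dy * dz


def select_volume(volumes: Sequence[ProbeVolume], p: Vec3) -> Optional[ProbeVolume]:
    """Return the smallest volume containing p, or None if p is in no volume."""
    inside = [v for v in volumes if v.contains(p)]
    return min(inside, key=lambda v: v.size(), default=None)


# Two adjacent rooms, each with its own captured cubemap.
rooms = [
    ProbeVolume((0, 0, 0), (10, 3, 10), cubemap_id=0),
    ProbeVolume((10, 0, 0), (20, 3, 8), cubemap_id=1),
]
print(select_volume(rooms, (5, 1, 5)).cubemap_id)   # 0
print(select_volume(rooms, (15, 1, 2)).cubemap_id)  # 1
print(select_volume(rooms, (30, 1, 2)))             # None
```

An object outside every box gets `None` here, so a real renderer would presumably need some fallback cubemap for that case.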

I think id's current engine does something similar to this, mixing probe volumes with screen-space reflections. I read a little about it, but they like to describe it in flowery terms that obscure what they are actually doing.

All these papers about irradiance volumes seem to be about rendering a 3D grid of cubemaps, and they don't look practical outside the authors' carefully picked setups. You would need a huge amount of memory for the grid to cover any real distance: a 512x512x512 grid of probes, each storing six colors, would require about 3 GB at 4 bytes per color. I can also imagine all kinds of problems when a wall lies too close to a probe.
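The 3 GB figure can be checked with a quick calculation, assuming 4 bytes per color (e.g. RGBA8); `probe_grid_bytes` is a hypothetical helper, and the per-probe layout is only as described in the text (six colors per probe).

```python
def probe_grid_bytes(nx: int, ny: int, nz: int,
                     colors_per_probe: int = 6,
                     bytes_per_color: int = 4) -> int:
    """Bytes needed for a dense nx*ny*nz probe grid in which every probe
    stores `colors_per_probe` colors of `bytes_per_color` bytes each."""
    return nx * ny * nz * colors_per_probe * bytes_per_color


GIB = 2 ** 30

full = probe_grid_bytes(512, 512, 512)
print(full, full / GIB)      # 3221225472 3.0

# Memory grows with the cube of the resolution: halving it on every axis
# cuts storage by a factor of 8.
half = probe_grid_bytes(256, 256, 256)
print(half / GIB)            # 0.375
```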

If you used fewer probes and made the grid follow the camera around, you would still take a big performance hit, because every time the camera moves by one cell, a whole 128x128 slab of cells has to be updated.
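For a sense of scale, here is a sketch of the bookkeeping, assuming a cubic n x n x n grid centred on the camera and toroidal (wrap-around) addressing, so cells that stay inside the grid keep their storage slot and are not recomputed. The cubic shape, the addressing scheme, and the names `slot` and `cells_to_update` are all assumptions for illustration. With n = 128, a one-cell shift along one axis leaves exactly one 128x128 slab (16,384 cells) to re-light, out of 2,097,152 in the whole grid.

```python
from typing import Tuple

Cell = Tuple[int, int, int]


def slot(n: int, cell: Cell) -> Cell:
    """Storage slot for a world-space cell index under toroidal addressing.
    Python's % always returns a value in [0, n), even for negative indices."""
    return tuple(c % n for c in cell)


def cells_to_update(n: int, shift: Cell) -> int:
    """Cells that must be re-lit when an n*n*n camera-centred grid scrolls by
    `shift` cells. With toroidal addressing, cells that stay inside the grid
    keep their slot and their data; only the newly exposed cells are new."""
    kept = 1
    for s in shift:
        kept *= max(n - abs(s), 0)
    return n ** 3 - kept


print(cells_to_update(128, (1, 0, 0)))   # 16384: one 128x128 slab
print(cells_to_update(128, (1, 1, 0)))   # 32640: two slabs overlapping in 128 cells
print(cells_to_update(128, (0, 0, 0)))   # 0
# Cells 128 apart map to the same slot, so a cell leaving one side of the
# grid is overwritten by the cell entering on the other side.
print(slot(128, (0, 5, -1)), slot(128, (128, 5, 127)))
```

Whether re-lighting 16,384 cells per step is affordable on the target hardware is exactly the open question; the sketch only shows that the cost is one slab per axis crossed, not the whole grid.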

I feel like all these papers are very dishonest about the usefulness of such an approach, unless I am missing something. For low-end hardware, is there something better than the hand-placed volumes I have described? I want to make sure there's no other good solution before I proceed.