Ref: https://devforum.roblox.com/t/cage-mesh-deformer-studio-beta/1196727

Roblox has a new clothing system. You can dress your character in multiple layers of mesh clothing, and the system adjusts the meshes to keep the layering correct, so inner layers no longer peek through outer layers. They call this a “cage mesh deformer”. It's still in beta.

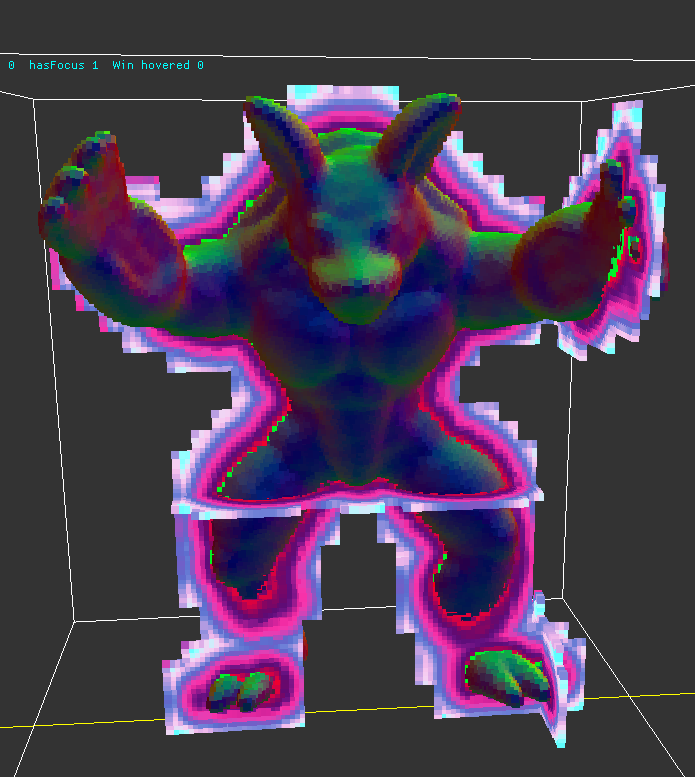

Red outline = Inner Cage

Blue outline = Outer Cage

This is a form of precomputation: the fitting is done once, when the clothes are put on, and the result is a single rigged mesh the GPU can render as usual.

Is this a new concept? Are there any theory papers on this? How do you take a group of clothing mesh layers that fit in pose stance and adjust them so they work as the joints move? Looks like Roblox is automating that, at least partly.

There was, apparently, a LightWave plugin for cage deformers around 2014 (https://www.lightwave3d.com/assets/plugins/entry/cage-deformer), but it didn't catch on. So it's not a totally new idea.

There are a few ways to go about this. Here's one that occurs to me:

- You have a jacket put on over a body. They're both rigged meshes, and in pose stance (standing, arms out, legs spread slightly) everything is layered properly. But if the arm bends, the elbow pokes through the jacket. The goal is to fix that.

- So, suppose we project the vertices of the jacket inward onto the body, where they become additional vertices of the body. We project the vertices of the body outward onto the jacket, where they become additional vertices of the jacket.

- For the jacket, interpolate the bone weights for the new points from the surrounding jacket vertices. Then use the bone weights of the jacket on the corresponding points of the underlying body. Now the body moves with the jacket and keeps the proper distance from the inside of the jacket.

- Use the result as an ordinary rigged mesh.
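The weight-interpolation step above can be sketched in a few lines of Python. This is only an illustration of the idea as I've described it, not Roblox's actual method. It assumes the projection is a straight drop onto the plane of one jacket triangle, and that bone weights are plain name-to-weight dictionaries; the function names and bone names (`barycentric`, `blend_weights`, `upper_arm`, `forearm`) are made up for the example.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
Weights = Dict[str, float]  # bone name -> weight, summing to 1 per vertex


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def barycentric(p: Vec3, a: Vec3, b: Vec3, c: Vec3) -> Tuple[float, float, float]:
    """Barycentric coordinates of p projected onto the plane of triangle abc."""
    v0, v1, v2 = sub(b, a), sub(c, a), sub(p, a)
    d00, d01, d11 = dot(v0, v0), dot(v0, v1), dot(v1, v1)
    d20, d21 = dot(v2, v0), dot(v2, v1)
    denom = d00 * d11 - d01 * d01  # zero only for a degenerate triangle
    v = (d11 * d20 - d01 * d21) / denom
    w = (d00 * d21 - d01 * d20) / denom
    return (1.0 - v - w, v, w)


def blend_weights(bary, wa: Weights, wb: Weights, wc: Weights) -> Weights:
    """Interpolate the three corner weight sets with barycentric coefficients."""
    out: Weights = {}
    for coeff, weights in zip(bary, (wa, wb, wc)):
        for bone, w in weights.items():
            out[bone] = out.get(bone, 0.0) + coeff * w
    return out


# One jacket triangle near the elbow, and one body vertex underneath it.
jacket_tri = ((0.0, 0.0, 1.0), (2.0, 0.0, 1.0), (0.0, 2.0, 1.0))
jacket_weights = (
    {"upper_arm": 1.0},
    {"forearm": 1.0},
    {"upper_arm": 0.5, "forearm": 0.5},
)
body_vertex = (0.5, 0.5, 0.0)

bary = barycentric(body_vertex, *jacket_tri)
body_weights = blend_weights(bary, *jacket_weights)
print(body_weights)  # {'upper_arm': 0.625, 'forearm': 0.375}
```

The body vertex now carries the same blend of bone weights as the spot on the jacket directly over it, so under ordinary skinning the two should move together. A real version would also need to pick which triangle to project onto and clamp points that land outside it.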

If there are multiple layers of clothing, do this from the outside in, so each layer is the “outer cage” of the next layer inward.
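Here is a rough Python sketch of that outside-in ordering. To keep it short it uses a cruder stand-in for the projection step: each inner vertex just copies the weights of the nearest vertex on the layer outside it. The `Layer` representation and the names `nearest_weights` and `fit_layers` are assumptions for illustration only.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Weights = Dict[str, float]
Layer = List[Tuple[Vec3, Weights]]  # one (position, bone weights) pair per vertex


def nearest_weights(p: Vec3, outer: Layer) -> Weights:
    """Copy weights from the closest outer vertex (crude stand-in for projection)."""
    def dist2(q: Vec3) -> float:
        return sum((pi - qi) ** 2 for pi, qi in zip(p, q))

    _, weights = min(outer, key=lambda vw: dist2(vw[0]))
    return dict(weights)


def fit_layers(layers: List[Layer]) -> List[Layer]:
    """layers[0] is the body, layers[-1] is the outermost garment."""
    result = list(layers)
    # Walk from the second-outermost layer inward; each layer takes its
    # weights from the already-processed layer just outside it.
    for i in range(len(result) - 2, -1, -1):
        outer = result[i + 1]
        result[i] = [(pos, nearest_weights(pos, outer)) for pos, _ in result[i]]
    return result


body = [((0.0, 0.0, 0.0), {"forearm": 1.0})]
shirt = [((0.0, 0.0, 1.0), {"forearm": 1.0})]
jacket = [((0.0, 0.0, 2.0), {"upper_arm": 1.0})]

fitted = fit_layers([body, shirt, jacket])
print(fitted[0])
```

One consequence of doing it this way: the outermost garment's rigging ends up driving everything underneath it, and vertex positions are left alone. Whether that looks right for, say, a loose coat over a tight shirt is an open question.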

The idea is to do this automatically, so that users can mix and match clothing and it Just Works.

(Second Life has mix and match clothing from many creators, and poking through is a constant problem, patched by adding alpha texture layers to blank out troublesome inner layers. It doesn't Just Work.)