Guess the links don't work, the forum editor got stuck once more. But they're easy to find. However, I also found his implementation for some DCC plugin, and as mentioned in the paper they do some Taylor series stuff to approximate the form factor, although they also store surfel data, from which we can get this precisely without storing and processing extra coefficients. So my method is simpler and faster.

If you only need one point, the given code is likely fast enough, but if you need many points, like for solid voxelization, this is how to speed it up:

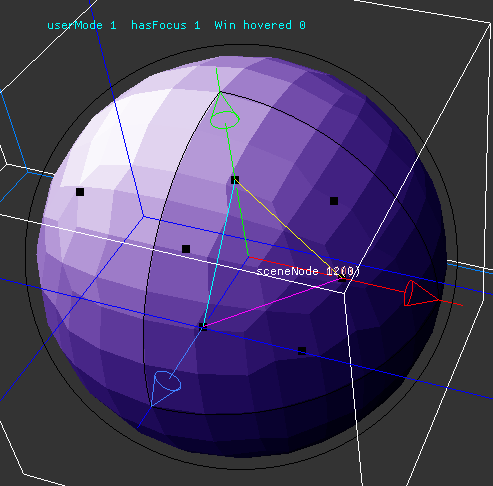

- Make an acceleration structure for the mesh triangles (I use a simple BVH, but an octree etc. would work as well)

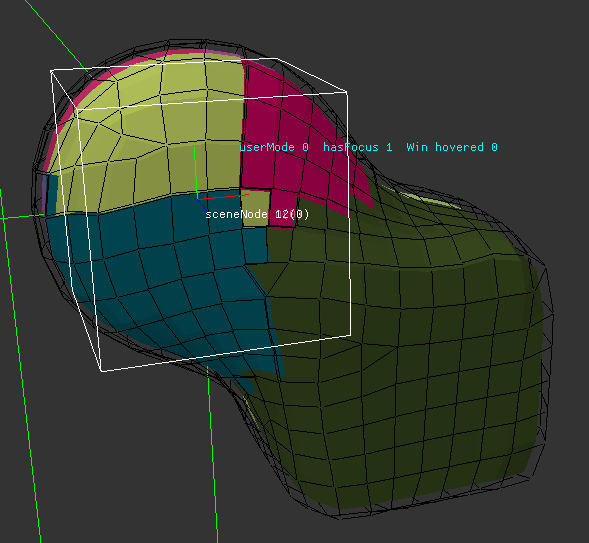

- Calculate a surfel for each triangle (using triangle area and normal)

- Make a hierarchy bottom up: each internal node becomes a surfel as well, by summing up the area and averaging the normal from the surfels of all its child nodes. (Exactly like here: https://developer.nvidia.com/gpugems/gpugems3/part-ii-light-and-shadows/chapter-12-high-quality-ambient-occlusion) EDIT - no, I meant this earlier one: https://developer.nvidia.com/gpugems/gpugems2/part-ii-shading-lighting-and-shadows/chapter-14-dynamic-ambient-occlusion-and

- Finally, when traversing the surface for the query point, for distant surface patches it is good enough to stop at some internal node, as also proposed in Bunnell's article above, to get O(log n). An exact triangle test is only necessary if the query point is very close to the triangle, otherwise surfels are fine. If the geometry resolution is high and uniform (like marching cubes output), the exact triangle test might not be necessary at all.
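A minimal Python sketch of the steps above, not the original implementation. Everything named here (`Node`, `build`, `solid_angle_sum`, `is_inside`, the `opening` factor of 2, the median-split BVH) is a hypothetical choice for illustration. It assumes outward-facing triangle winding, a non-empty triangle list, and that each surfel contributes roughly `area * dot(normal, d) / |d|^3` to the solid angle seen from the query point, so the sum is near 4π inside and near 0 outside. Instead of a separate area and averaged normal, each node stores their product (the summed area-weighted normal). Leaves are always treated as surfels here; the exact triangle test for nearby triangles is left out.

```python
import math

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def mul(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def length(a): return math.sqrt(dot(a, a))
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def centroid(tri):
    return mul(add(add(tri[0], tri[1]), tri[2]), 1.0 / 3.0)

class Node:
    """BVH node that doubles as a surfel (hypothetical layout)."""
    def __init__(self, tri=None, children=()):
        self.children = children
        if tri is not None:
            # Leaf: surfel from triangle area and normal.
            a, b, c = tri
            self.area_vec = mul(cross(sub(b, a), sub(c, a)), 0.5)  # area * unit normal
            self.area = length(self.area_vec)
            self.center = centroid(tri)
            self.radius = max(length(sub(v, self.center)) for v in tri)
        else:
            # Internal node: sum area, sum area-weighted normals, average centers.
            self.area = sum(ch.area for ch in children)
            self.area_vec = (0.0, 0.0, 0.0)
            weighted = (0.0, 0.0, 0.0)
            for ch in children:
                self.area_vec = add(self.area_vec, ch.area_vec)
                weighted = add(weighted, mul(ch.center, ch.area))
            self.center = mul(weighted, 1.0 / max(self.area, 1e-30))
            self.radius = max(length(sub(ch.center, self.center)) + ch.radius
                              for ch in children)

def build(tris):
    """Median split along the longest axis; surfels are merged bottom up."""
    if len(tris) == 1:
        return Node(tri=tris[0])
    cents = [centroid(t) for t in tris]
    extent = [max(c[i] for c in cents) - min(c[i] for c in cents) for i in range(3)]
    axis = extent.index(max(extent))
    order = sorted(tris, key=lambda t: centroid(t)[axis])
    mid = len(order) // 2
    return Node(children=(build(order[:mid]), build(order[mid:])))

def solid_angle_sum(root, p, opening=2.0):
    """Approximate signed solid angle of the whole surface as seen from p."""
    total = 0.0
    stack = [root]
    while stack:
        node = stack.pop()
        d = sub(node.center, p)
        r = length(d)
        if node.children and r < opening * node.radius:
            stack.extend(node.children)  # too close: descend
        else:
            total += dot(node.area_vec, d) / max(r, 1e-12) ** 3  # use the surfel
    return total

def is_inside(root, p):
    return solid_angle_sum(root, p) > 2.0 * math.pi  # halfway between 0 and 4*pi

def cube_triangles():
    """Test mesh: cube spanning [-1, 1]^3 with outward-facing winding."""
    quads = [
        ((-1, -1, 1), (1, -1, 1), (1, 1, 1), (-1, 1, 1)),      # +z
        ((-1, -1, -1), (-1, 1, -1), (1, 1, -1), (1, -1, -1)),  # -z
        ((1, -1, -1), (1, 1, -1), (1, 1, 1), (1, -1, 1)),      # +x
        ((-1, -1, -1), (-1, -1, 1), (-1, 1, 1), (-1, 1, -1)),  # -x
        ((-1, 1, -1), (-1, 1, 1), (1, 1, 1), (1, 1, -1)),      # +y
        ((-1, -1, -1), (1, -1, -1), (1, -1, 1), (-1, -1, 1)),  # -y
    ]
    tris = []
    for a, b, c, d in quads:
        tris += [(a, b, c), (a, c, d)]
    return tris
```

With `root = build(cube_triangles())`, the cube center classifies as inside and a point like `(5, 0, 0)` as outside. On such a coarse mesh the surfel sum near the surface is quite inaccurate, which is where the exact triangle test would come in.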

Notice the speedup vs. the RT approach, where you need to traverse the BVH for each of maybe hundreds of rays. Surface integration is also more robust to issues like holes and imperfections, degenerate cases, non-manifold geometry, etc.

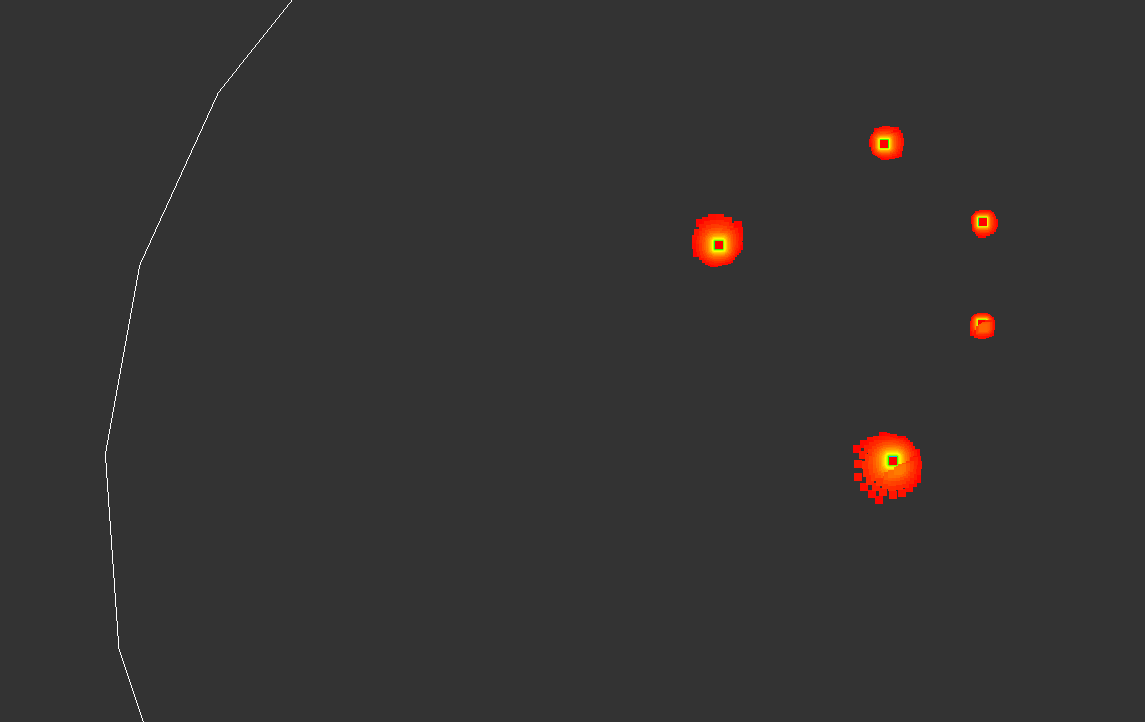

To make it even faster, the traversal stack can be shared across a query volume of points using hierarchical top-down processing, if we have such a volume (not just a single point). But that's complicated, so I'd do this only if you really have to. My final performance is voxelizing 20 models at 2k^3 resolution in 30 minutes IIRC.

Ofc. rasterizing + depth peeling would be much faster still, but this only works for proper manifold input (rarely the case for game models, but usually the case for an extracted iso surface).

Limitations: You must know the surface normal for your input surface, i.e. whether the surface points inwards or outwards.
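To illustrate why the orientation matters, here is a small Python sketch of an exact signed solid angle for one triangle, using the Van Oosterom and Strackee formula. It is an assumption that this matches the exact triangle test used in practice; the function name `triangle_solid_angle` and the sign convention (positive when the triangle normal points away from the query point) are choices of this sketch.

```python
import math

def triangle_solid_angle(p, a, b, c):
    """Signed solid angle of triangle (a, b, c) as seen from point p."""
    A = [a[i] - p[i] for i in range(3)]
    B = [b[i] - p[i] for i in range(3)]
    C = [c[i] - p[i] for i in range(3)]
    dot = lambda u, v: u[0] * v[0] + u[1] * v[1] + u[2] * v[2]
    la, lb, lc = (math.sqrt(dot(v, v)) for v in (A, B, C))
    # Scalar triple product A . (B x C); its sign flips with the winding order.
    triple = (A[0] * (B[1] * C[2] - B[2] * C[1])
              + A[1] * (B[2] * C[0] - B[0] * C[2])
              + A[2] * (B[0] * C[1] - B[1] * C[0]))
    denom = la * lb * lc + dot(A, B) * lc + dot(A, C) * lb + dot(B, C) * la
    return 2.0 * math.atan2(triple, denom)

p = (0.0, 0.0, 0.0)
a, b, c = (-1.0, -1.0, 1.0), (1.0, -1.0, 1.0), (1.0, 1.0, 1.0)
front = triangle_solid_angle(p, a, b, c)    # normal points away from p
flipped = triangle_solid_angle(p, a, c, b)  # same triangle, reversed winding
```

Here `front` is +π/3 (half of one face of a cube seen from its center) and `flipped` is -π/3. A triangle with the wrong winding therefore subtracts from the sum instead of adding to it, which is how inconsistent normals corrupt the inside/outside result.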

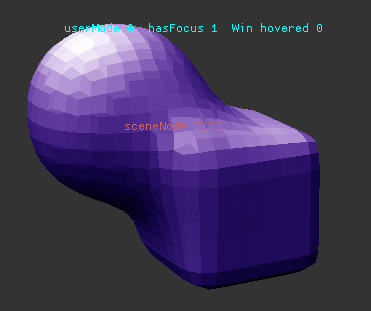

Unfortunately this is not always given in practice. Imagine an architecture model like Sponza. The artist models a flat floor, then puts uncapped cylinders on top to get columns. Now imagine going inside a column. You see back faces from the column, which is fine, but you also see front-facing floor triangles when looking down. So you do not really know if you are inside or outside. In such cases I get bulbs of empty space inside the columns near the floor. I filter them out with some heuristics, but do not remember precisely how.

This problem would also appear with RT or the winding number approach.