To give a bit of context on what I'm trying to achieve and where I seem to be failing:

I'm currently implementing an SSAO approach that requires me to reconstruct the view-space position from the depth buffer.

The depth is encoded into an RGB (24-bit) texture, as I can't access the depth buffer directly.

    float4 ClipPos : COLOR0;

    //vertex
    void main(in input IN, out v2p OUT)
    {
        OUT.Position = mul(gm_Matrices[MATRIX_WORLD_VIEW_PROJECTION], IN.Position);
        OUT.ClipPos = OUT.Position;
    }

    //=============================
    //fragment
    float4 ClipPos : COLOR0;

    float3 ftov(float depth)
    {
        //encodes float into RGB
        float depthVal = depth * (256.0*256.0*256.0 - 1.0) / (256.0*256.0*256.0);
        float4 encode = frac( depthVal * float4(1.0, 256.0, 256.0*256.0, 256.0*256.0*256.0) );
        return encode.xyz - encode.yzw / 256.0 + 1.0/512.0;
    }

    void main(in v2p IN, out p2s OUT)
    {
        OUT.Depth = float4(ftov(IN.ClipPos.z/IN.ClipPos.w), 1.0);
    }

To prepare for depth reconstruction, I invert the projection matrix (and I think this is where the issue lies):

    scr_set_camera_projection(); // set matrix
    obj_controller.projectionMatrix_player = matrix_get(matrix_projection); // get projection matrix
    var pLin = r44_create_from_gmMat(obj_controller.projectionMatrix_player); // convert
    pLin = r44_invert(pLin); // invert
    obj_controller.projectionMatrix_player_inverted = gmMat_create_from_r44(pLin); // convert back to send it to the shader
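One way to sanity-check the inversion step outside the game is to multiply the original matrix by the inverted one and compare the product against identity. The following is a minimal Python sketch of that check, not part of GameMaker or the `r44_*` helpers: `mat_mul` and `is_identity` are hypothetical names, the matrices are assumed to be flat 16-element arrays in row-major order, and the sample values are stand-ins rather than a real projection matrix.

```python
def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as flat, row-major lists of 16 floats."""
    return [
        sum(a[row * 4 + k] * b[k * 4 + col] for k in range(4))
        for row in range(4)
        for col in range(4)
    ]


def is_identity(m, tol=1e-5):
    """Return True if every element of m is within tol of the 4x4 identity."""
    return all(
        abs(m[row * 4 + col] - (1.0 if row == col else 0.0)) <= tol
        for row in range(4)
        for col in range(4)
    )


# Hypothetical stand-in values. In practice, paste the 16 numbers logged from
# projectionMatrix_player and projectionMatrix_player_inverted here.
original = [2.0, 0.0, 0.0, 0.0,
            0.0, 4.0, 0.0, 0.0,
            0.0, 0.0, 8.0, 0.0,
            0.0, 0.0, 0.0, 1.0]
inverted = [0.5, 0.0, 0.0, 0.0,
            0.0, 0.25, 0.0, 0.0,
            0.0, 0.0, 0.125, 0.0,
            0.0, 0.0, 0.0, 1.0]

print(is_identity(mat_mul(original, inverted)))
```

If the product is not close to identity, the conversion or inversion helpers would be the first place to look. If it is, the check still cannot tell whether both matrices are transposed relative to what the shader expects, so the row/column order on the shader side remains a separate thing to verify.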

Now, I noticed that there is an issue with the reconstructed depth component in the shader:

    varying vec2 v_vTexcoord;

    uniform sampler2D gDepth;
    uniform mat4 projection_inv; // inverted projection matrix uploaded from GML

    float vtof(vec3 pack)
    {
        // decodes RGB to float
        float depth = dot(pack, 1.0 / vec3(1.0, 256.0, 256.0*256.0));
        return depth * (256.0*256.0*256.0) / (256.0*256.0*256.0 - 1.0);
    }

    // reconstructs view-space position from depth
    vec3 getViewPos(vec2 texcoord)
    {
        vec4 clipPos;
        clipPos.xy = texcoord.xy * 2.0 - 1.0;
        clipPos.z = vtof(texture2D(gDepth, texcoord).rgb);
        clipPos.w = 1.0;
        vec4 viewP = projection_inv * clipPos;
        viewP = viewP / viewP.w; // perspective divide
        return viewP.xyz;
    }

    void main()
    {
        vec3 fragPos = getViewPos(v_vTexcoord);
        float si = sign(fragPos.z); // get sign from z component
        if (si < 0.0) {
            gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0); // red: negative z
        } else {
            gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0); // green: zero or positive z
        }
    }

So in DirectX, +Z goes towards the screen while -Z goes into the distance (effectively reversed compared to OpenGL).

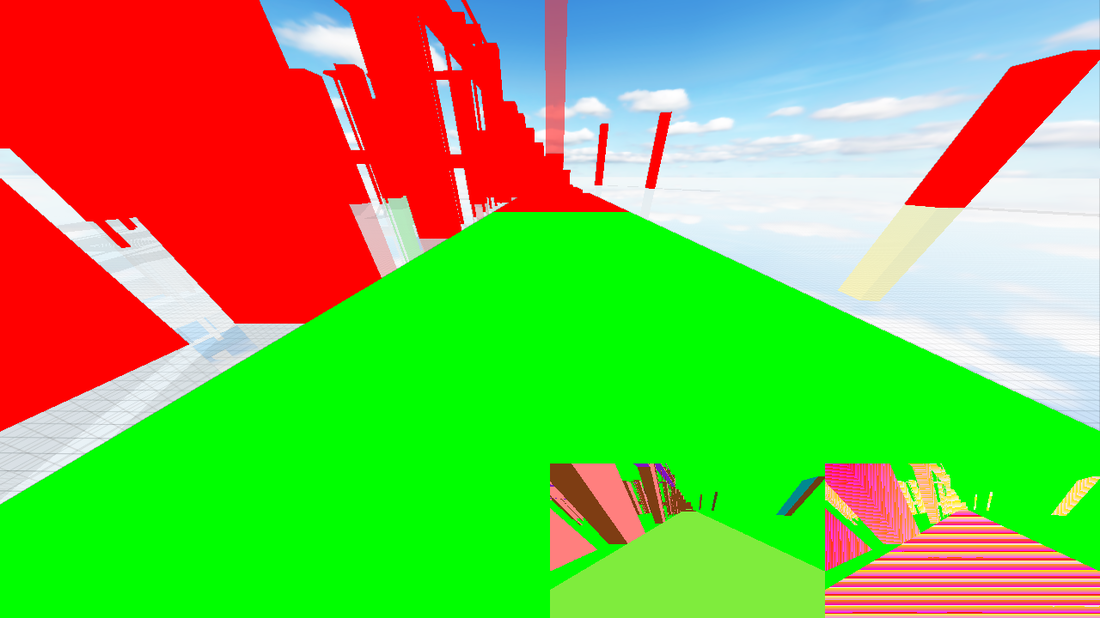

However, after hours of debugging, I simply tried to render the sign of the Z coordinate, and this is the result:

For some reason, the Z coordinate is positive up to a certain distance, which shows as the green coloring in the scene. Beyond that point it becomes red (where Z becomes negative).

From my understanding, it should be all red, as the Z component should be negative starting from the camera's origin.
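To reason about which sign to expect, it can help to look at what the unprojection does to z in isolation. The sketch below is a Python illustration under a stated assumption: the projection is a left-handed, Direct3D-style perspective matrix that maps view-space z in [near, far] to a depth in [0, 1]. Whether the matrix returned by `matrix_get(matrix_projection)` actually follows that convention is not confirmed here, and the function names and the `NEAR`/`FAR` values are hypothetical.

```python
def ndc_depth_lh(z_view, near, far):
    """Depth in [0, 1] for an assumed left-handed, D3D-style perspective projection."""
    return far / (far - near) - (near * far) / ((far - near) * z_view)


def view_z_from_ndc_lh(z_ndc, near, far):
    """Inverse of ndc_depth_lh: the z that the inverse projection plus divide by w yields."""
    return (near * far) / (far - z_ndc * (far - near))


NEAR, FAR = 1.0, 1000.0  # hypothetical clip planes

# Mirror the debug shader: red for negative z, green otherwise.
for z_ndc in (0.0, 0.5, 0.999, 1.002):
    z_view = view_z_from_ndc_lh(z_ndc, NEAR, FAR)
    print(z_ndc, z_view, "red" if z_view < 0.0 else "green")
```

Under this assumption, every depth inside [0, 1] unprojects to a positive view-space z, and the result only turns negative once the input depth is slightly above 1.0. If the projection instead follows a right-handed convention, the expected signs would differ, so this is a reference point for comparison rather than a diagnosis.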

(The encoding/decoding of the RGB values was tested and shouldn't be the culprit.) Does anyone have an idea or a direction as to what the issue could be? I think the conversion process around the inversion call might be the cause, or maybe the reconstruction in the shader is wrong.
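Since the decoded depth feeds directly into the reconstruction, the encode/decode round trip can also be re-checked offline. The following is a minimal Python sketch that ports `ftov` and `vtof` from the shaders above and places a storage step between them. The storage step is an assumption: it models a render target that keeps each channel as an 8-bit normalized value (rounded to the nearest of 256 levels and read back as level / 255). `frac`, `store_unorm8` and `round_trip` are hypothetical helper names.

```python
import math

SCALE = 256.0 ** 3


def frac(x):
    """Fractional part, like HLSL frac()."""
    return x - math.floor(x)


def ftov(depth):
    """Port of the HLSL ftov: packs a depth value into three channels."""
    depth_val = depth * (SCALE - 1.0) / SCALE
    enc = [frac(depth_val * m) for m in (1.0, 256.0, 256.0 ** 2, 256.0 ** 3)]
    return [enc[i] - enc[i + 1] / 256.0 + 1.0 / 512.0 for i in range(3)]


def store_unorm8(channels):
    """Assumption: each channel is stored as 8-bit UNORM and read back as level / 255."""
    return [min(255, max(0, math.floor(c * 255.0 + 0.5))) / 255.0 for c in channels]


def vtof(pack):
    """Port of the GLSL vtof: unpacks three channels into a depth value."""
    depth = pack[0] + pack[1] / 256.0 + pack[2] / (256.0 * 256.0)
    return depth * SCALE / (SCALE - 1.0)


def round_trip(depth):
    """Encode, store in the assumed 8-bit target, then decode."""
    return vtof(store_unorm8(ftov(depth)))


for d in (0.25, 0.5, 0.999):
    print(d, round_trip(d))
```

In this simulation the decoded value comes out roughly 256/255 times the input, so inputs very close to 1.0 decode to slightly more than 1.0. Whether the real render target behaves like the assumed storage step would need to be confirmed in the actual project.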