I am writing a toy software renderer (non real-time) and I can't get shadow mapping to work. I think the problem lies where I attempt to transform positions to light space. When I was debugging the problem, I saw that my code was sometimes getting back 1.0 as a depth value from sampling the shadow map. This is the max depth value and implies that it is sampling a position where there is no geometry in the shadow map. As a first step, I want to check that my theory is correct before posting a bunch of code. For reference here is the scene being rendered with the problem:

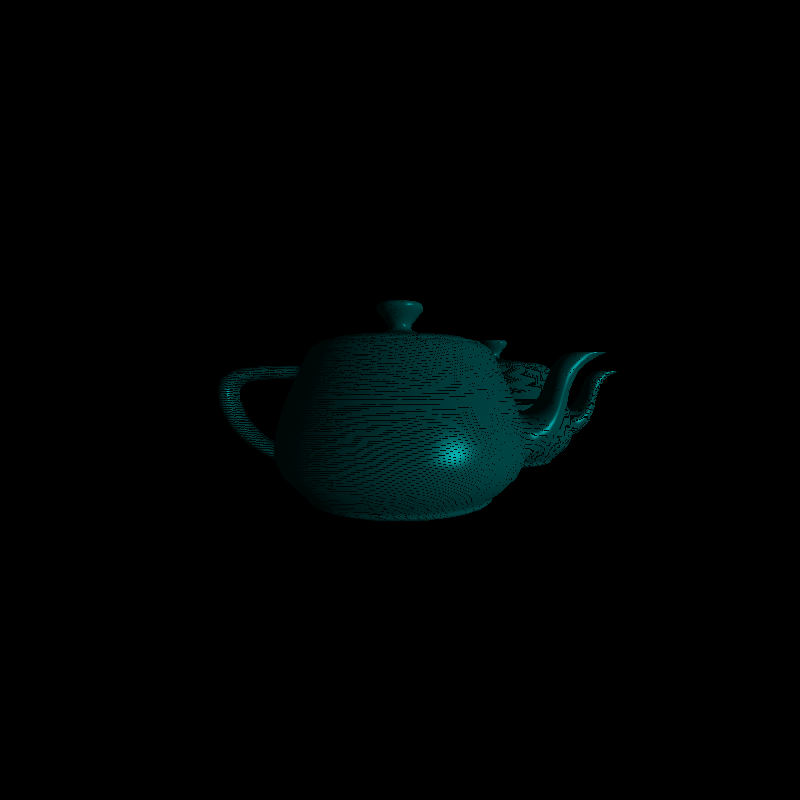

Firstly, I think that my actual shadow map is correct. I store it as a big array of floats that represent depth values from -1 (closest to camera) to 1 (away from camera). Here is the shadow map outputted as an image: (The scale has been inverted so bright pixels indicate geometry that's closer to the camera)

For each triangle, I do the standard transformations on each vertex (model, view, projection, perspective divide, viewport). For each fragment, I calculate the barycentric coordinates using vertex coordinates in "device space". Device space means that they have been transformed by the model view projection matrix, have had the perspective divide and have been mapped from 0-800 (output image size). I then use these barycentric coordinates in all subsequent calculations.

For lighting, all calculations are done in eye space. I interpolate the eye space vertices and eye space normals and do calculations based on the phong reflectance model. The depth of each fragment (for z buffering) is calculated by interpolating over the vertices in clip space using the barycentric coordinates:

float depth =

((vertex_clip[0].z / vertex_clip[0].w) * bary.x) +

((vertex_clip[1].z / vertex_clip[1].w) * bary.y) +

((vertex_clip[2].z / vertex_clip[2].w) * bary.z);For shadow mapping, as far as I understand I need two values: the depth of the current fragment in light space, and the depth of the current fragment as sampled from the shadow map. If the current fragment in light space is "deeper" than the sampled value, then the point is in shadow. In addition to transforming the vertices by the camera's transformations, I also transform them by the light's matrices too. I store the vertices in "light clip space" and "light device space". Light clip space is achieved by transforming the vertices by the model, view_light and projection matrices. Light device space is achieved with the full chain (model, view_light, projection, perspective divide, viewport). This is exactly how I transform them to generate the shadow map. I then use the previously calculated barycentric coordinates to interpolate over the vertices in these two spaces. The depth of the current fragment in light space is calculated like this:

float depth_light =

((vertex_clip_light[0].z / vertex_clip_light[0].w) * bary.x) +

((vertex_clip_light[1].z / vertex_clip_light[1].w) * bary.y) +

((vertex_clip_light[2].z / vertex_clip_light[2].w) * bary.z);And the position to sample in the shadow map is calculated by interpolating the vertices in "light device space":

V4f pixel_position_device_light =

vertex_device_light[0] * bary.x +

vertex_device_light[1] * bary.y +

vertex_device_light[2] * bary.z;Is there any part of that whole process that I am fundamentally getting wrong or misunderstanding? Or does my theory check out, implying that my problem probably lies somewhere else?