Hello,

I'm currently working on a visualisation program that should render a low-poly car model in real time and highlight some elements based on data I get from a database. No difficulty there. So far I have managed to set up my UWP project so that I have a working DirectX 11 device and real-time swapchain-based output.

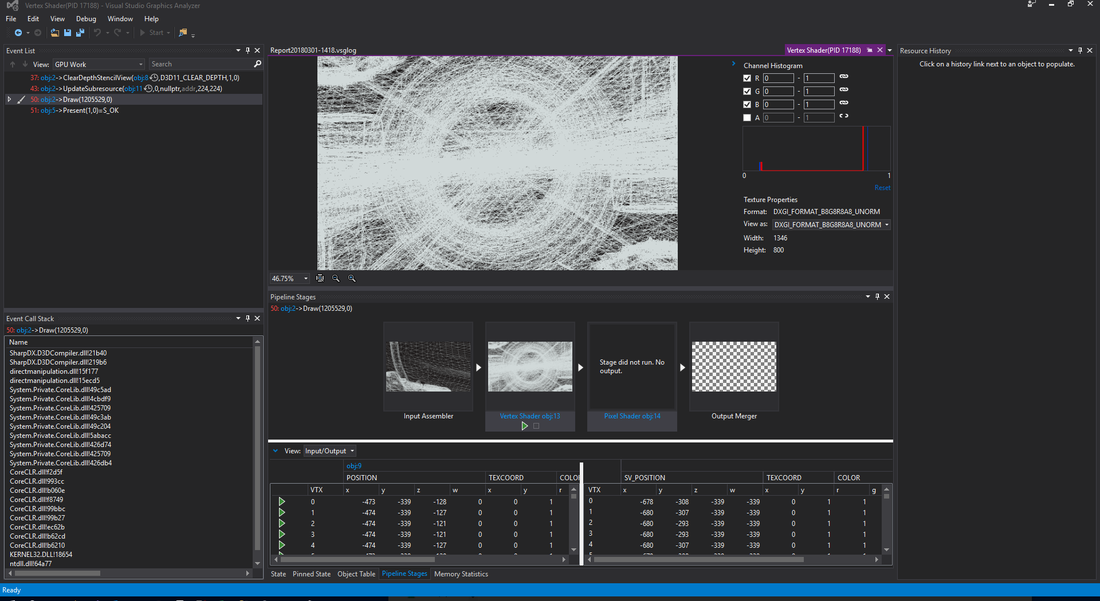

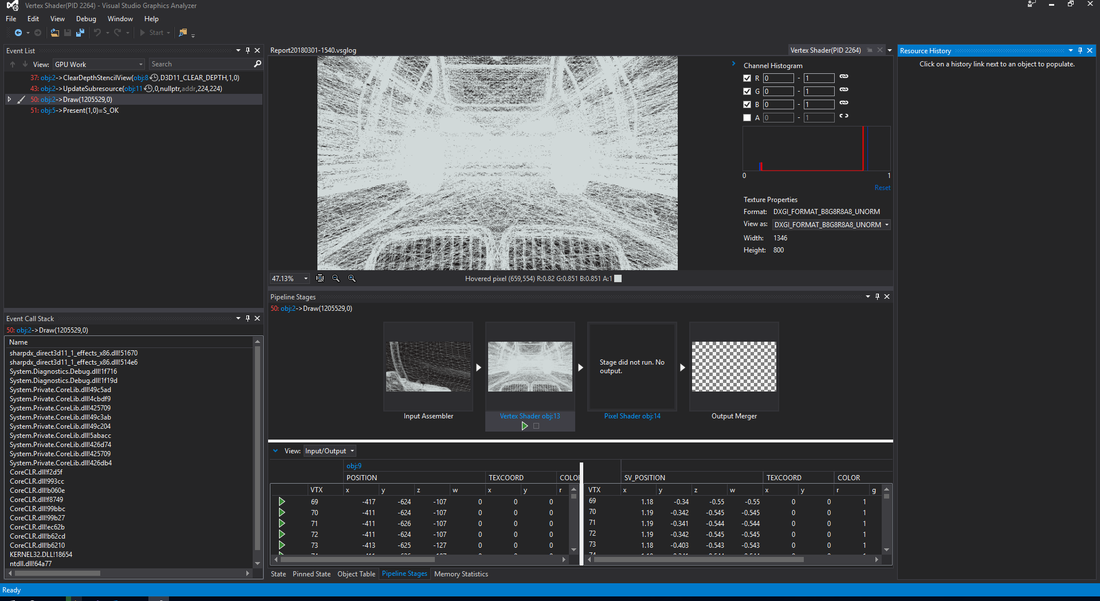

When I debug, the Input Assembler stage shows a valid model: the whole thing is correctly rendered in the VS Graphics Analyzer. The Vertex Shader output seems okay too. It's likely that the camera view matrix is a bit wrong (too close, and maybe the world matrix is rotated wrong as well), but the VS-GA does show some output there. The Pixel Shader does not run, though. My assumption is that the coordinates I get from the Vertex Shader are bad (high values, which I guess should actually be between -1.0f and 1.0f). So presumably the Rasterizer clips all vertices and nothing ends up rendered.
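For reference, this is my understanding of the clip test, assuming the usual Direct3D conventions. The position the Vertex Shader writes is in homogeneous clip space, and a vertex survives clipping when

$$
-w_c \le x_c \le w_c, \qquad -w_c \le y_c \le w_c, \qquad 0 \le z_c \le w_c .
$$

Only after the divide by $w_c$ do the coordinates land in the normalized range:

$$
(x_n,\, y_n,\, z_n) = \left(\frac{x_c}{w_c},\ \frac{y_c}{w_c},\ \frac{z_c}{w_c}\right), \qquad x_n, y_n \in [-1, 1], \quad z_n \in [0, 1].
$$

So large raw values in the Vertex Shader output are not necessarily wrong by themselves. What matters is how $x_c$, $y_c$ and $z_c$ compare to the $w_c$ of the same vertex.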

I've been struggling with this for days now, and since I really need to get this working soon, I hope someone here has the knowledge to help me fix this.

Here's a screenshot of the debugger:

I'm currently just using a simple ambient light shader (see here).

And here is my rendering code. The model class I'm using simply loads the vertices from an STL file and creates the vertex buffer for them. It sets the buffer and renders the model either indexed or non-indexed (right now I don't have an index buffer, since I haven't figured out how to calculate the indices for the model, but that'll do for now).

```csharp
public override void Render()
{
    Device.Clear(Colors.CornflowerBlue, DepthStencilClearFlags.Depth, 1, 0);
    _camera.Update();

    ViewportF[] viewports = Device.Device.ImmediateContext.Rasterizer.GetViewports<ViewportF>();
    _projection = Matrix.PerspectiveFovRH((float) Math.PI / 4.0f, viewports[0].Width / viewports[0].Height,
        0.1f, 5000f);

    _worldMatrix.SetMatrix(Matrix.RotationX(MathUtil.DegreesToRadians(-90f)));
    _viewMatrix.SetMatrix(_camera.View);
    _projectionMatrix.SetMatrix(_projection);

    Device.Context.InputAssembler.InputLayout = _inputLayout;
    Device.Context.InputAssembler.PrimitiveTopology = PrimitiveTopology.TriangleList;

    EffectTechnique tech = _shadingEffect.GetTechniqueByName("Ambient");
    for (int i = 0; i < tech.Description.PassCount; i++)
    {
        EffectPass pass = tech.GetPassByIndex(i);
        pass.Apply(Device.Context);
        _model.Render();
    }
}
```

The world is rotated around the X-axis because the model's coordinate system is right-handed, with X+ as depth, Y as the horizontal and Z as the vertical coordinate. I couldn't verify that this rotation is correct, but in theory it should be.
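Worked out by hand, and assuming SharpDX uses the row-vector convention ($v' = v\,M$, as D3DX does, as far as I know), the rotation used above is

$$
R_x(\theta) =
\begin{pmatrix}
1 & 0 & 0 \\
0 & \cos\theta & \sin\theta \\
0 & -\sin\theta & \cos\theta
\end{pmatrix},
\qquad
(x,\ y,\ z)\, R_x(-90^\circ) = (x,\ z,\ -y).
$$

Under that assumption the model's vertical axis (Z) ends up on world +Y, the model's Y ends up on world -Z, and X is unchanged. That would make the world Y-up, which is worth comparing against the up-vector the camera is given.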

```csharp
public void Update()
{
    _rotation = Quaternion.RotationYawPitchRoll(Yaw, Pitch, Roll);

    Vector3.Transform(ref _target, ref _rotation, out _target);

    Vector3 up = _up;
    Vector3.Transform(ref up, ref _rotation, out up);

    _view = Matrix.LookAtRH(Position, _target, up);
}
```

The whole camera setup (static since no input has been implemented) is for now:

```csharp
_camera = new Camera(Vector3.UnitZ);
_camera.SetView(new Vector3(0, 0, 5000f), new Vector3(0, 0, 0), MathUtil.DegreesToRadians(-90f));
```

So I tried to place the camera above the origin, looking down (thus the UnitZ as up-vector).
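To check these numbers outside the renderer, here is a minimal sketch that uses `System.Numerics` instead of the SharpDX types (as far as I know both use row vectors, and `CreateLookAt` / `CreatePerspectiveFieldOfView` are right-handed). The names `ClipCheck`, `ToClip` and `IsInsideClipVolume`, the 16:9 aspect ratio, the sample points and the `UnitY` up-vector are assumptions for illustration only. The rotation my real camera applies in `Update()` is not reproduced here.

```csharp
using System;
using System.Numerics;

static class ClipCheck
{
    // Direct3D clip test on a homogeneous clip-space position:
    // -w <= x <= w, -w <= y <= w, 0 <= z <= w.
    public static bool IsInsideClipVolume(Vector4 c) =>
        c.W > 0f &&
        Math.Abs(c.X) <= c.W &&
        Math.Abs(c.Y) <= c.W &&
        c.Z >= 0f && c.Z <= c.W;

    // Row-vector order: model -> world -> view -> projection.
    public static Vector4 ToClip(Vector3 p, Matrix4x4 world, Matrix4x4 view, Matrix4x4 proj) =>
        Vector4.Transform(new Vector4(p, 1f), world * view * proj);

    static void Main()
    {
        // World rotation from the post: -90 degrees around X.
        Matrix4x4 world = Matrix4x4.CreateRotationX(-(float)Math.PI / 2f);

        // Camera position and target from the post; the up-vector is an assumption.
        Matrix4x4 view = Matrix4x4.CreateLookAt(
            new Vector3(0f, 0f, 5000f), Vector3.Zero, Vector3.UnitY);

        // FOV, near and far from the post; the 16:9 aspect ratio is an assumption.
        Matrix4x4 proj = Matrix4x4.CreatePerspectiveFieldOfView(
            (float)Math.PI / 4f, 16f / 9f, 0.1f, 5000f);

        // Hypothetical model-space sample points, not taken from the real STL file.
        Vector3[] samples =
        {
            new Vector3(0f, -1000f, 0f),
            Vector3.Zero,
            new Vector3(0f, 1000f, 0f),
        };

        foreach (Vector3 p in samples)
        {
            Vector4 clip = ToClip(p, world, view, proj);
            Console.WriteLine($"{p} -> clip {clip}, inside: {IsInsideClipVolume(clip)}");
        }
    }
}
```

One thing this makes visible: the camera sits 5000 units away from the origin and the far plane is also at 5000, so the origin lies right on the far plane and anything farther from the camera falls outside the clip volume.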

So can anybody explain to me why the vertex shader output is so wrong and why all vertices apparently get clipped?

Thank you very much!