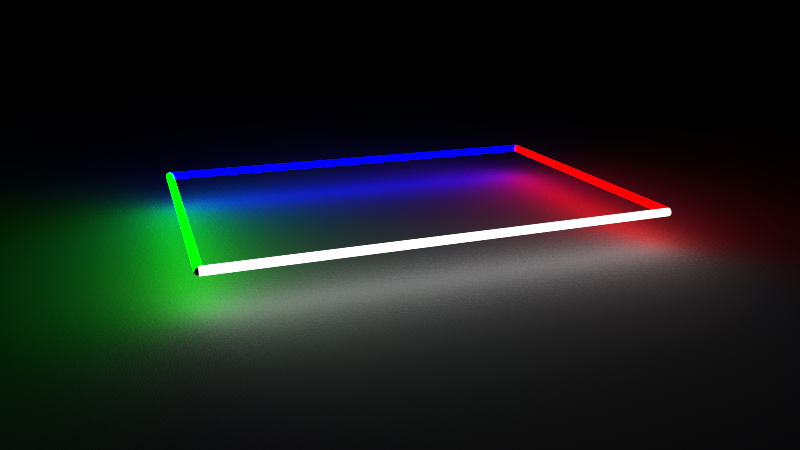

Hi, first post here. I'm making a simple augmented reality (AR) game based on the well-known 2D puzzle game Slitherlink (also called Loopy). This is the first time I'm using shaders, so I'm on a bit of a steep learning curve.

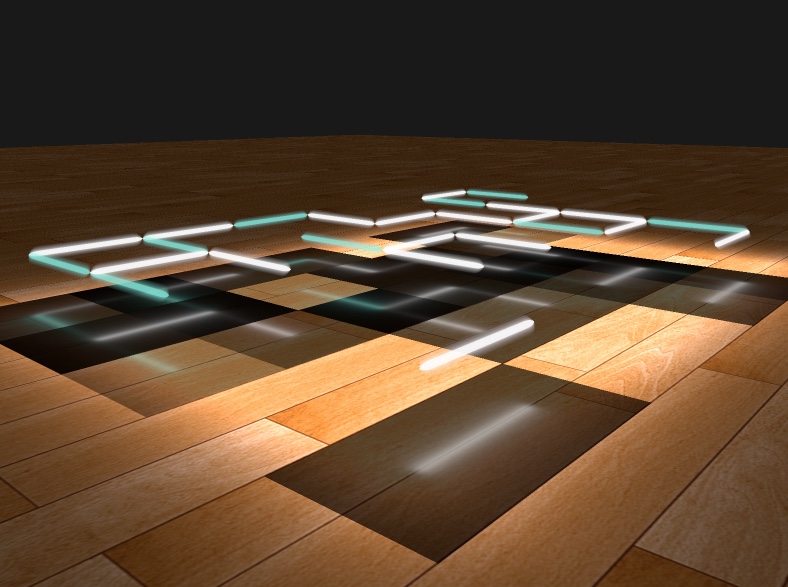

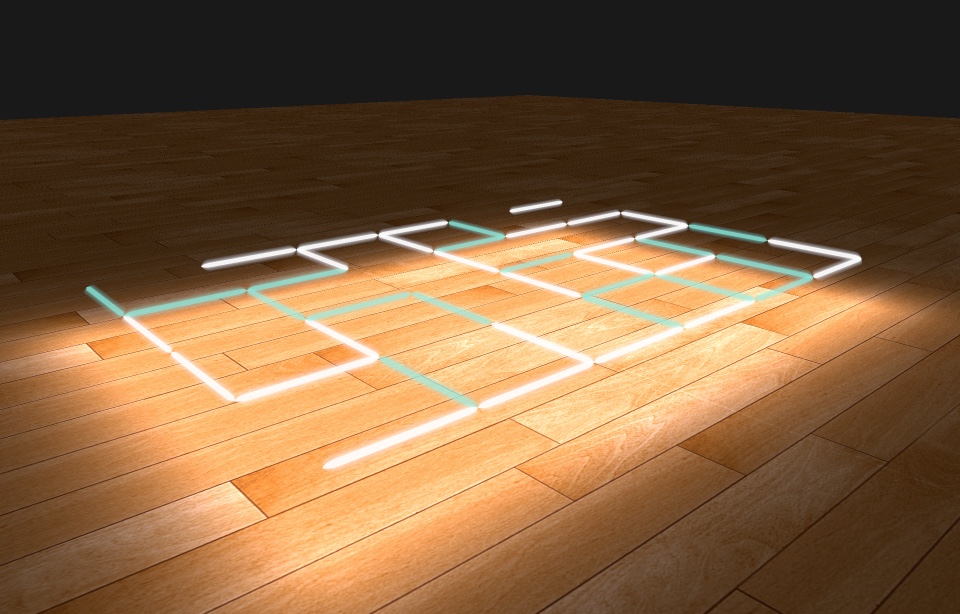

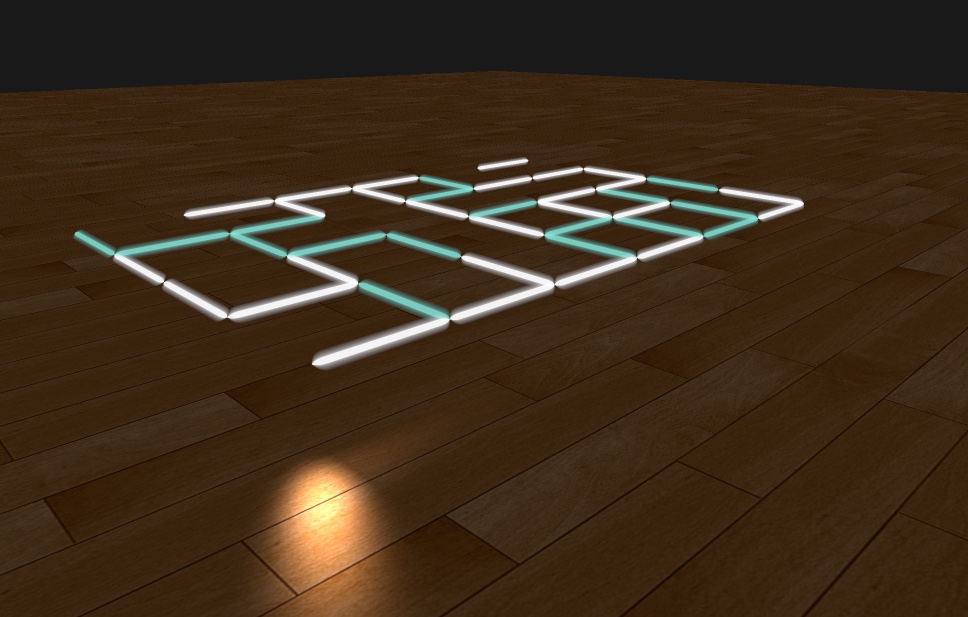

My idea is that self-illuminating objects will look much better in AR than ordinary lit materials. With ordinary materials the shadows would be missing or wrong, which is very noticeable once the render is composited onto the camera feed.

So I'd like the game to look like "laser beams" floating above the table, which technically means drawing the lines as tube lights that also illuminate the table. This is where I'm stuck.

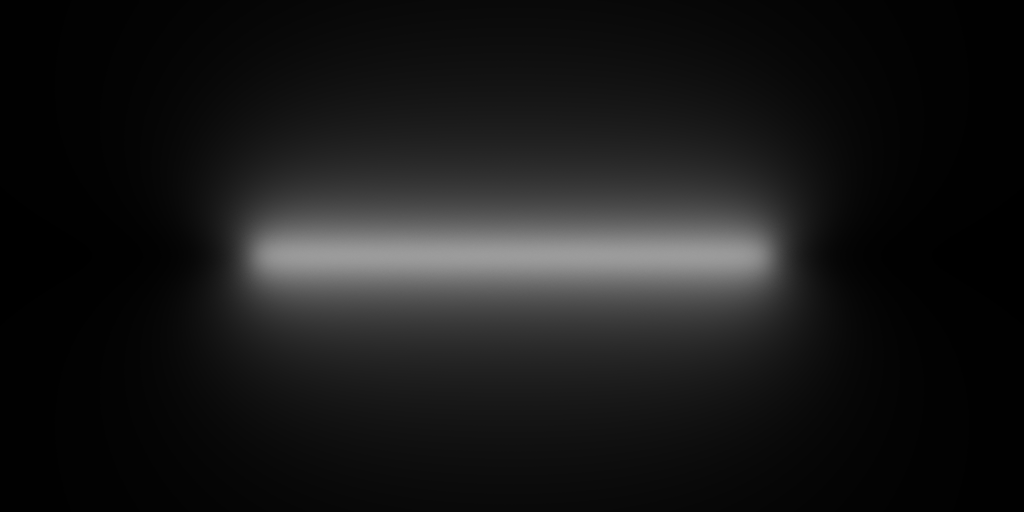

I've implemented smooth 2D line segment rendering by drawing camera-facing rectangles and shading them in the fragment shader.
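One common way to shade such a quad is to compute each fragment's distance to the segment and turn it into a soft alpha. The C++ below is a sketch of that math under that assumption (it is not necessarily what the shader in this post does); `Vec2`, `distanceToSegment`, `lineCoverage` and the `smoothstep` falloff are illustrative choices, and the code ports almost directly to Metal.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal 2D vector (stand-in for Metal's float2).
struct Vec2 {
    float x, y;
};

static Vec2 sub(Vec2 a, Vec2 b) { return {a.x - b.x, a.y - b.y}; }
static float dot(Vec2 a, Vec2 b) { return a.x * b.x + a.y * b.y; }
static float clamp01(float t) { return std::min(std::max(t, 0.0f), 1.0f); }

// Same definition as smoothstep() in GLSL and Metal.
static float smoothstepf(float edge0, float edge1, float x) {
    float t = clamp01((x - edge0) / (edge1 - edge0));
    return t * t * (3.0f - 2.0f * t);
}

// Distance from p to the segment [a, b] in the quad's 2D space.
float distanceToSegment(Vec2 p, Vec2 a, Vec2 b) {
    Vec2 ab = sub(b, a);
    float len2 = dot(ab, ab);
    // Project p onto the segment and clamp to its endpoints
    // (a degenerate segment falls back to the point a).
    float t = len2 > 0.0f ? clamp01(dot(sub(p, a), ab) / len2) : 0.0f;
    Vec2 closest = {a.x + t * ab.x, a.y + t * ab.y};
    Vec2 d = sub(p, closest);
    return std::sqrt(dot(d, d));
}

// Alpha for an anti-aliased line: 1 within halfWidth of the segment,
// fading smoothly to 0 over the next `feather` units.
float lineCoverage(Vec2 p, Vec2 a, Vec2 b, float halfWidth, float feather) {
    assert(halfWidth >= 0.0f && feather > 0.0f);
    return 1.0f - smoothstepf(halfWidth, halfWidth + feather, distanceToSegment(p, a, b));
}
```

In a fragment shader, `p` would be the interpolated quad-local position and the coverage would become the output alpha or a glow multiplier.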

I also looked into area lights, but all I could come up with was the "nearest point on the rectangle" approach, which:

- looks nice for diffuse lighting, as long as the light has a uniform color

- is completely wrong for Blinn and Phong specular
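For a tube light, the nearest-point idea becomes "nearest point on a line segment" instead of clamping to a rectangle. Below is a C++ sketch of that variant; `Vec3`, `closestPointOnSegment` and `tubeDiffuse` are hypothetical names. Like the rectangle version, it is still an approximation. It does not integrate over the tube, so it is only plausible for diffuse.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal 3D vector (stand-in for Metal's float3).
struct Vec3 {
    float x, y, z;
};

static Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Point on the segment [a, b] closest to p: the tube-light counterpart of
// clamping to a rectangle, done by projecting onto the line and clamping t.
Vec3 closestPointOnSegment(Vec3 p, Vec3 a, Vec3 b) {
    Vec3 ab = sub(b, a);
    float len2 = dot(ab, ab);
    float t = len2 > 0.0f ? dot(sub(p, a), ab) / len2 : 0.0f;
    t = std::min(std::max(t, 0.0f), 1.0f);
    return add(a, scale(ab, t));
}

// Lambert diffuse from a tube light, treating its nearest point as a point
// light with inverse-square falloff (as the shader does). n must be unit length.
float tubeDiffuse(Vec3 p, Vec3 n, Vec3 a, Vec3 b, float intensity) {
    Vec3 toLight = sub(closestPointOnSegment(p, a, b), p);
    float dist2 = dot(toLight, toLight);
    assert(dist2 > 0.0f);  // p must not lie on the tube itself
    float nDotL = std::max(dot(n, toLight) / std::sqrt(dist2), 0.0f);
    return intensity * nDotL / dist2;
}
```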

My biggest problem is getting the tube light illumination effect. Instead of the uniform white area in the screenshot below, I'd like colored, grid-like illumination on the ground. There can be up to 200 tube lights.
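One way to get colored, grid-like floor lighting directly is to loop over every tube per floor fragment and add each tube's nearest-point contribution in its own color. The C++ below sketches that under the assumptions of this scene (flat floor, all tubes horizontal at one height). `TubeLight`, `FloorPoint` and `floorLighting` are hypothetical names, and in Metal the light array would typically come from a `constant` buffer. With 200 tubes this is 200 iterations per floor fragment; whether that is fast enough on the target device would need measuring.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Position on the floor plane (world x and z).
struct FloorPoint {
    float x, z;
};

struct Rgb {
    float r, g, b;
};

// Hypothetical per-light record: a horizontal tube from a to b. All tubes sit
// at the same height; `color` is assumed to be premultiplied by intensity.
struct TubeLight {
    FloorPoint a, b;
    Rgb color;
};

// Squared horizontal distance from p to the tube's footprint [a, b].
static float horizontalDist2(FloorPoint p, FloorPoint a, FloorPoint b) {
    float abx = b.x - a.x, abz = b.z - a.z;
    float len2 = abx * abx + abz * abz;
    float t = len2 > 0.0f ? ((p.x - a.x) * abx + (p.z - a.z) * abz) / len2 : 0.0f;
    t = std::min(std::max(t, 0.0f), 1.0f);
    float dx = p.x - (a.x + t * abx);
    float dz = p.z - (a.z + t * abz);
    return dx * dx + dz * dz;
}

// Diffuse light at floor point p summed over all tubes at height h, using
// each tube's nearest point. With floor normal (0, 1, 0), N.L = h / d and the
// falloff is 1 / d^2, so each tube contributes color * h / d^3.
Rgb floorLighting(FloorPoint p, const std::vector<TubeLight>& lights, float h) {
    assert(h > 0.0f);
    Rgb sum = {0.0f, 0.0f, 0.0f};
    for (const TubeLight& light : lights) {
        float d2 = horizontalDist2(p, light.a, light.b) + h * h;
        float w = h / (d2 * std::sqrt(d2));
        sum.r += light.color.r * w;
        sum.g += light.color.g * w;
        sum.b += light.color.b * w;
    }
    return sum;
}
```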

My only idea is to render the lines into an offscreen buffer using a top-down orthographic projection, apply a Gaussian blur, and use the result as the diffuse lighting on the floor. Does this sound reasonable?
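The blur step of that plan is usually done as a separable Gaussian: one horizontal and one vertical 1D pass with the same normalized weights. This gives the same result as the 2D kernel with 2(2r+1) taps per pixel instead of (2r+1)². Below is a CPU-side C++ sketch on a single-channel image. On the GPU each pass would be a render or compute pass over the top-down texture (Metal Performance Shaders also provides `MPSImageGaussianBlur`). The function names and the clamp-to-edge handling are illustrative choices.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Normalized 1D Gaussian weights for offsets -radius..radius.
std::vector<float> gaussianWeights(int radius, float sigma) {
    assert(radius >= 0 && sigma > 0.0f);
    std::vector<float> w(2 * radius + 1);
    float sum = 0.0f;
    for (int i = -radius; i <= radius; ++i) {
        w[i + radius] = std::exp(-(i * i) / (2.0f * sigma * sigma));
        sum += w[i + radius];
    }
    for (float& x : w) x /= sum;  // weights sum to 1, so brightness is preserved
    return w;
}

// One 1D pass over a width x height image, along x or along y.
// Samples outside the image are clamped to the nearest edge pixel.
std::vector<float> blurPass(const std::vector<float>& src, int width, int height,
                            const std::vector<float>& w, bool horizontal) {
    int radius = static_cast<int>(w.size()) / 2;
    std::vector<float> dst(src.size(), 0.0f);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            float acc = 0.0f;
            for (int k = -radius; k <= radius; ++k) {
                int sx = horizontal ? std::min(std::max(x + k, 0), width - 1) : x;
                int sy = horizontal ? y : std::min(std::max(y + k, 0), height - 1);
                acc += w[k + radius] * src[sy * width + sx];
            }
            dst[y * width + x] = acc;
        }
    }
    return dst;
}

// Full 2D Gaussian blur = horizontal pass followed by vertical pass.
std::vector<float> gaussianBlur(const std::vector<float>& img, int width, int height,
                                int radius, float sigma) {
    std::vector<float> w = gaussianWeights(radius, sigma);
    return blurPass(blurPass(img, width, height, w, true), width, height, w, false);
}
```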

Also, does anyone know how to get specular reflections right with an area light? Nothing PBR; plain Blinn would be fine.
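For specular from a tube, a widely used trick is the "representative point" method described in Brian Karis's "Real Shading in Unreal Engine 4" course notes (SIGGRAPH 2013). Instead of the tube point nearest the surface, it uses the tube point nearest the reflection ray, which is where the mirror image of the tube would appear, and then evaluates ordinary Blinn-Phong with that light direction. The C++ below is a sketch of that idea. The names are hypothetical, the energy-normalization tweak from those notes is omitted, and the closest point is measured against the infinite reflection line rather than only the forward half of the ray.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal 3D vector (stand-in for Metal's float3).
struct Vec3 {
    float x, y, z;
};

static Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 operator-(Vec3 a) { return {-a.x, -a.y, -a.z}; }
static Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 normalize(Vec3 a) { return a * (1.0f / std::sqrt(dot(a, a))); }
// Same semantics as GLSL/Metal reflect(): i - 2 * dot(n, i) * n.
static Vec3 reflect(Vec3 i, Vec3 n) { return i - n * (2.0f * dot(n, i)); }

// Point on tube [a, b] closest to the line through p along unit direction r,
// returned relative to p (minimizes the distance from the tube point to that line).
Vec3 tubeRepresentativePoint(Vec3 p, Vec3 r, Vec3 a, Vec3 b) {
    Vec3 l0 = a - p, ld = b - a;
    float rd = dot(r, ld);
    float denom = dot(ld, ld) - rd * rd;  // 0 when the tube is parallel to r
    float t = denom > 1e-8f ? (dot(r, l0) * rd - dot(l0, ld)) / denom : 0.0f;
    t = std::min(std::max(t, 0.0f), 1.0f);
    return l0 + ld * t;
}

// Blinn-Phong specular from a tube light with inverse-square falloff.
// n and v are unit vectors; v points from the surface toward the eye.
float tubeBlinnSpecular(Vec3 p, Vec3 n, Vec3 v, Vec3 a, Vec3 b, float shininess) {
    assert(shininess > 0.0f);
    Vec3 r = reflect(-v, n);
    Vec3 toLight = tubeRepresentativePoint(p, r, a, b);
    float dist2 = dot(toLight, toLight);
    Vec3 h = normalize(normalize(toLight) + v);
    return std::pow(std::max(dot(n, h), 0.0f), shininess) / dist2;
}
```

Seen from directly above, every floor point under any part of the tube gets the full highlight, so the reflection becomes a streak along the tube instead of the single round spot a point light at the tube's center would give.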

The scene is very simple: the floor is at y = 0, all lights are at the same height, and only the floor needs to be lit.
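With the floor at $y = 0$ (normal $\mathbf{n} = (0, 1, 0)$) and every light at the same height $h$, the nearest-point diffuse term depends only on the floor point's world position. For a floor point $P = (x, 0, z)$ and a light point $Q = (q_x, h, q_z)$ with intensity $I$ (symbols introduced here for illustration), the algebra works out as follows:

$$
d = \lVert Q - P \rVert = \sqrt{(q_x - x)^2 + h^2 + (q_z - z)^2},
\qquad
\mathbf{n} \cdot \mathbf{l} = \frac{(Q - P)_y}{d} = \frac{h}{d},
$$

$$
E \approx I \, \frac{\mathbf{n} \cdot \mathbf{l}}{d^2} = \frac{I \, h}{d^3}.
$$

So the diffuse part needs neither the interpolated normal nor an eye-space transform; $Q$ is simply the nearest point on the light.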

My floor shader (Metal, but it maps almost 1:1 to GLSL):

```cpp
#include <metal_stdlib>
using namespace metal;

// FloorVertexOut and Uniforms are defined elsewhere in the project.
fragment float4 floorFragmentShader(FloorVertexOut in [[stage_in]],
                                    constant Uniforms& uniforms [[buffer(2)]],
                                    texture2d<float> tex2D [[texture(0)]],
                                    sampler sampler2D [[sampler(0)]]) {
    float3 n = normalize(in.normalEye);

    float lightIntensity = 0.05;
    float3 lightColor = float3(0.7, 0.7, 1) * lightIntensity;

    // Area light using the nearest point: clamp the fragment's world x/z to a
    // 0.6 x 0.4 rectangle centered at the origin, at height 0.05.
    float limitX = clamp(in.posWorld.x, -0.3, 0.3);
    float limitZ = clamp(in.posWorld.z, -0.2, 0.2);
    float3 lightPosWorld = float3(limitX, 0.05, limitZ);
    float3 lightPosEye = (uniforms.viewMatrix * float4(lightPosWorld, 1)).xyz;

    // Diffuse (Lambert)
    float3 s = normalize(lightPosEye - in.posEye);
    float diff = max(dot(s, n), 0.0);
    float3 diffuse = diff * lightColor * 0.2 * 0;  // "* 0" switches diffuse off

    // Specular
    float3 v = normalize(-in.posEye);

    // Blinn
    float3 halfwayDir = normalize(v + s);
    float specB = pow(max(dot(halfwayDir, n), 0.0), 64.0);

    // Phong (computed for comparison; not used below)
    float3 reflectDir = reflect(-s, n);
    float specR = pow(max(dot(reflectDir, v), 0.0), 8.0);

    float3 specular = specB * lightColor;

    // Inverse-square attenuation from the nearest point
    float distance = length(lightPosEye - in.posEye);
    float attenuation = 1.0 / (distance * distance);
    diffuse *= attenuation;
    specular *= attenuation;

    float3 lighting = diffuse + specular;

    float3 color = tex2D.sample(sampler2D, in.texCoords).xyz;
    color *= lighting + 0.1;  // + 0.1 acts as a constant ambient term

    return float4(color, 1);
}
```