This is the first of at least two posts evaluating the addition of an in-memory (RAM) key-value database to my server architecture. If you're unfamiliar with the concept, https://en.wikipedia.org/wiki/Key-value_database gives a broad overview.

I'm beginning with Redis. It runs on Linux, which is my scale-up server platform of choice, and this database will be the key to the scalability of my server architecture. It's also open source and has been around for a while now.

Download: https://redis.io/download

Documentation: https://redis.io/documentation

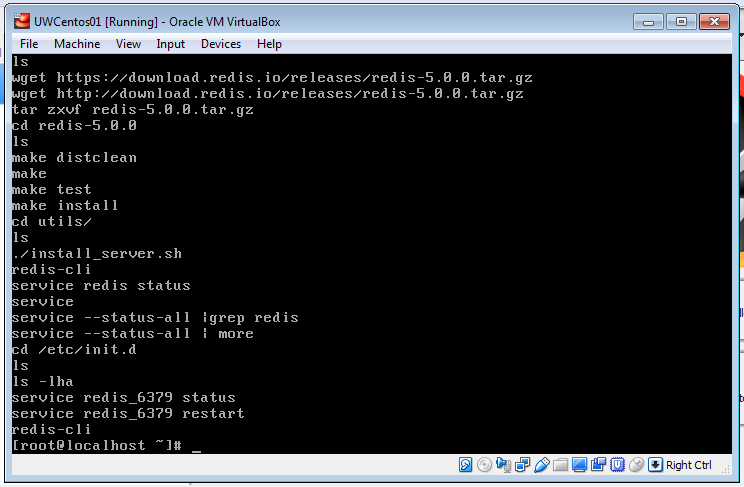

Installation was pretty straightforward. I created a CentOS 7 VM using Oracle's VirtualBox (https://www.virtualbox.org/), one of the easiest ways I've found to run VMs locally on Windows.

It has been a couple years since I worked on a Linux machine, but I still managed to figure it out:

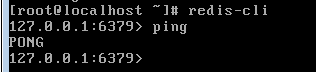

So, that's a working installation. Easy as 1, 2, 3.

Okay, a simple benchmark. The VM is using a single core and 4 GB of RAM, and I'm using the StackExchange.Redis client from NuGet:

    using System;
    using System.Collections.Generic;
    using System.Diagnostics;
    using System.Linq;
    using System.Text;
    using System.Threading.Tasks;
    using StackExchange.Redis;

    namespace TestRedis
    {
        class Program
        {
            static Dictionary<string, string> TestDataSet = new Dictionary<string, string>();
            static ConnectionMultiplexer redis = ConnectionMultiplexer.Connect("192.168.56.101");

            static void Main(string[] args)
            {
                //Build Test Dataset
                Console.Write("Building Test Data Set.");
                int cnt = 0;
                for (int i = 0; i < 1000000; i++)
                {
                    cnt++;
                    TestDataSet.Add(Guid.NewGuid().ToString(), Guid.NewGuid().ToString());
                    // Print a progress marker every 1000 records.
                    if (cnt > 999)
                    {
                        cnt = 0;
                        Console.Write(i.ToString() + "_");
                    }
                }
                Console.WriteLine("Done");

                IDatabase db = redis.GetDatabase();
                Stopwatch sw = new Stopwatch();
                sw.Start();

                //Console.WriteLine("Starting 'write' Benchmark");
                //foreach (KeyValuePair<string, string> kv in TestDataSet)
                //{
                //    db.StringSet(kv.Key, kv.Value);
                //}

                Console.WriteLine("Starting Parallel 'write' Benchmark.");
                Parallel.ForEach(TestDataSet, td =>
                {
                    db.StringSet(td.Key, td.Value);
                });
                // Integer division: elapsed time is reported in whole seconds.
                Console.WriteLine("TIME: " + (sw.ElapsedMilliseconds / 1000).ToString());
                sw.Restart();

                Console.WriteLine("Testing Read Verify.");
                //foreach (KeyValuePair<string, string> kv in TestDataSet)
                //{
                //    if (db.StringGet(kv.Key) != kv.Value)
                //    {
                //        Console.WriteLine("Error Getting Value for Key: " + kv.Key);
                //    }
                //}

                Console.WriteLine("Testing Parallel Read Verify");
                Parallel.ForEach(TestDataSet, td =>
                {
                    // Read each key back and compare it to the value held locally.
                    if (db.StringGet(td.Key) != td.Value)
                    {
                        Console.WriteLine("Error Getting Value for Key: " + td.Key);
                    }
                });
                Console.WriteLine("TIME: " + (sw.ElapsedMilliseconds / 1000).ToString());
                sw.Stop();

                Console.WriteLine("Press any key..");
                Console.ReadKey();
            }
        }
    }

Basically, I'm creating a 1-million-record Dictionary filled with GUIDs (keys and values), then testing both a standard foreach loop and a Parallel.ForEach loop to write to Redis, and then reading each value back from Redis and comparing it to the dictionary value.

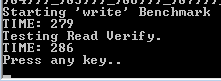

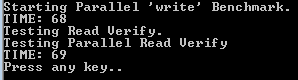

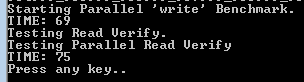

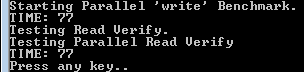

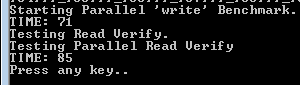

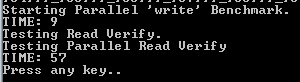

Result times are all in seconds.

Standard foreach loop (iterating through the dictionary to insert into Redis):

Almost 5 minutes to write and almost another 5 minutes to verify.

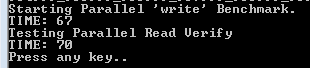

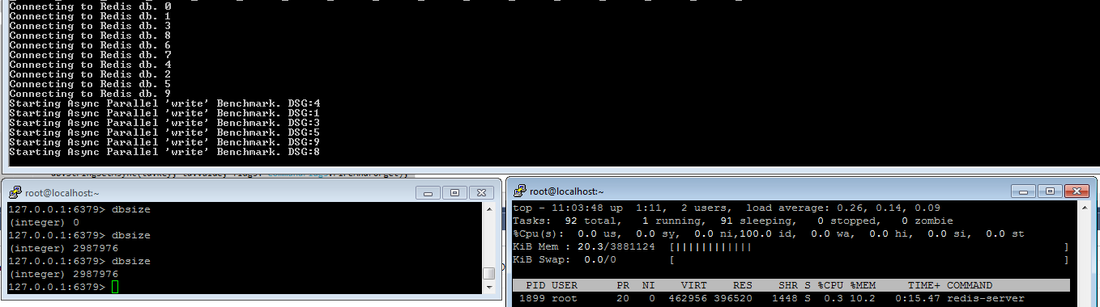

Using the Parallel.ForEach method:

And then again with 1M records already present in the DB:

And then with 2M records present in the DB:

And then with 3M:

And 4M:

Well, you get the picture.

So far, I think I really like Redis.

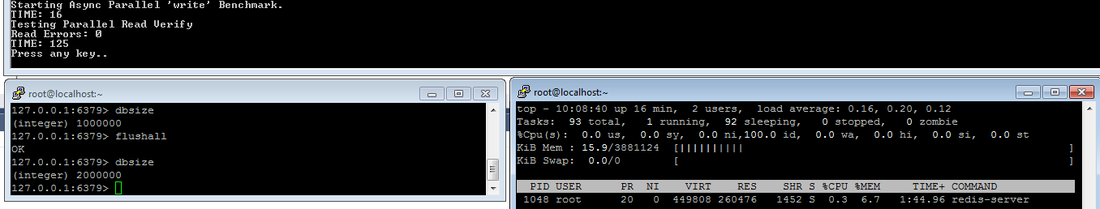

And now with the async "fire and forget" set method (a DB write that doesn't wait for a response), on an empty DB:

    Console.WriteLine("Starting Parallel 'write' Benchmark.");
    Parallel.ForEach(TestDataSet, td =>
    {
        //db.StringSet(td.Key, td.Value);
        db.StringSetAsync(td.Key, td.Value, flags: CommandFlags.FireAndForget);
    });

Easy enough code change.

Well, that's pretty fancy. 1 million records added in under 10 seconds, and not a single error.

So, even though I think this is my winning candidate, I should at least perform the same benchmarks on NCache to see if it somehow blows Redis out of the water.

So, stay tuned, as NCache will be my next victim. It may be somewhat unfair, though, since I'll be installing NCache directly on my Windows machine while Redis was sequestered in a tiny VM with very limited resources. If I need to (if NCache is ridiculously faster out of the box), I'll re-run these benchmarks on a bigger VM.

A few more benchmark results (11/1/18):

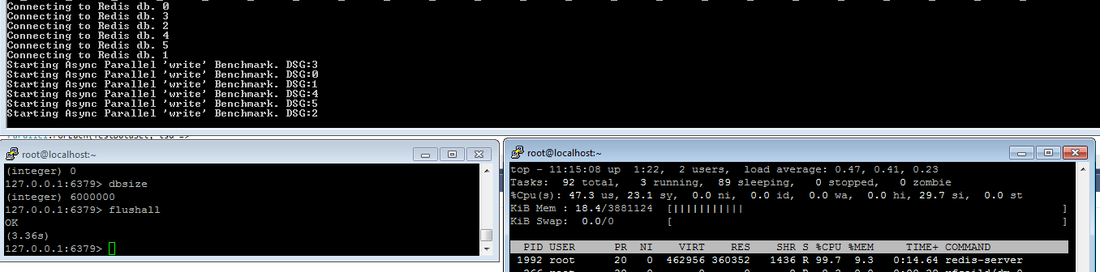

I've repeated the final "fire and forget" test with a couple of bigger data sets. Same VM, so a single core and 4 GB of RAM.

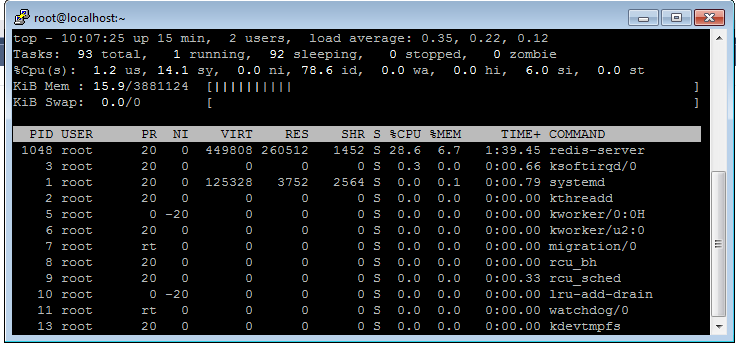

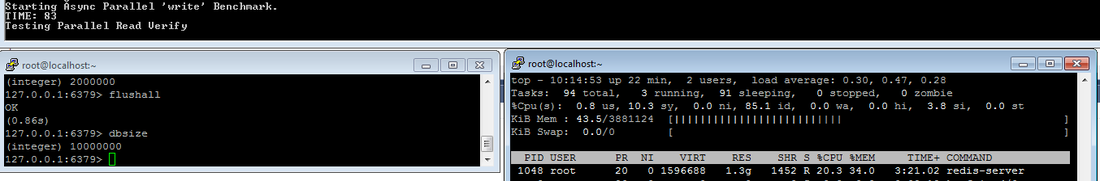

2 million records:

This shows the top output snapped somewhere in the middle of the "write" test.

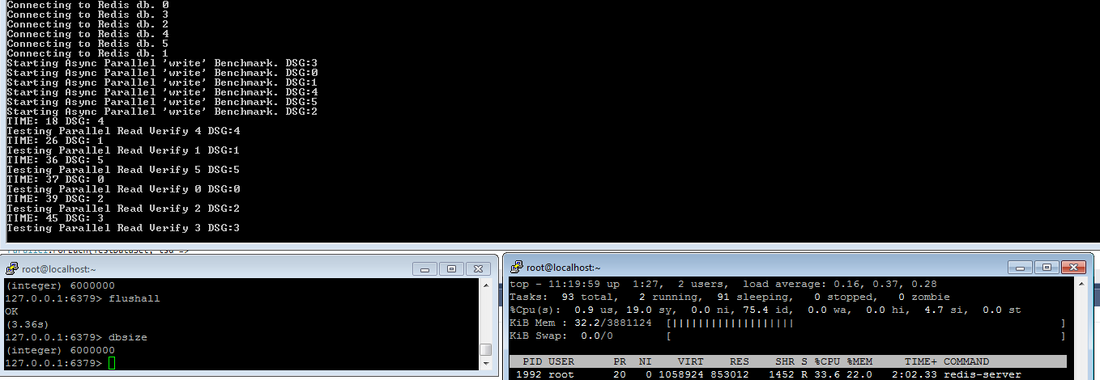

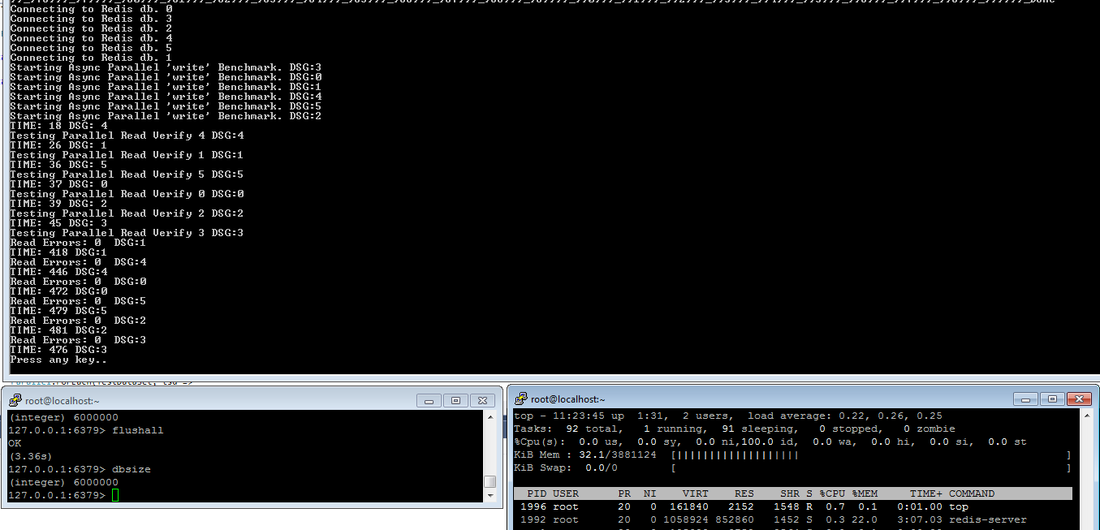

This shows the benchmark output, the Redis CLI console, and top for the Redis server, post-benchmark.

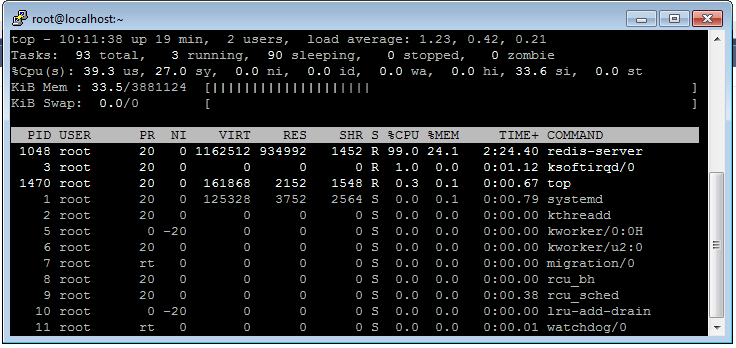

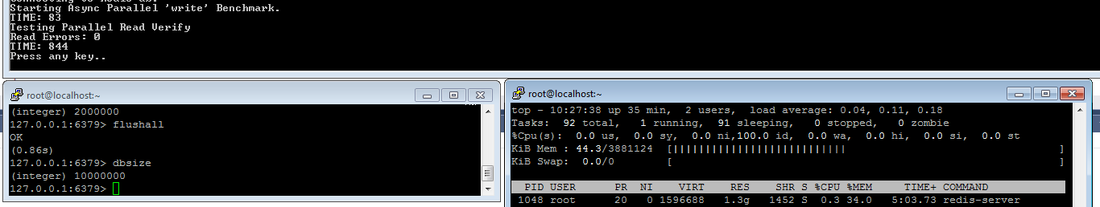

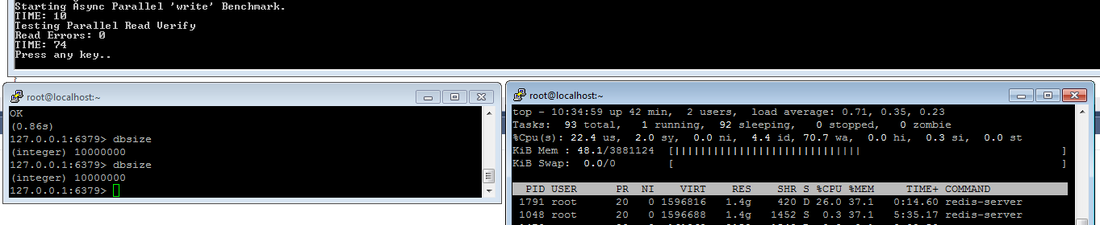

Now 10 million records:

Redis server top output from somewhere in the middle of the "write" test. We're hitting that single-core ceiling here, but it's trucking along anyhow.

Here's a snap from somewhere in the middle of the read/verify test. Working hard, but not taxing that single core. Up to 2 GB of RAM now for 10M records. Not too bad.

And the completed test. Frankly, I'm impressed. It pegged the single core for a fraction of that 83 seconds, but it didn't lose a single record in the process.

And the final test result: a 1-million-record write, with 10 million records already in the DB. Boom, still no errors.

So, let us really break it good.

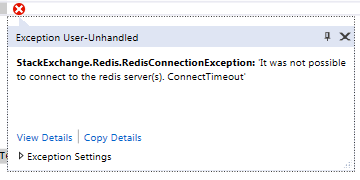

I rewrote the benchmark script to Parallel.ForEach through 10 individual 1-million-record benchmark tests, roughly simulating separate concurrent clients connecting to the Redis server.
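The rewritten script isn't reproduced here, so the following is only a rough sketch of how such a multi-client run could look, not the exact code behind the screenshots. It assumes each simulated client gets its own ConnectionMultiplexer and uses plain synchronous writes; the class name, the constants and the RunClient helper are made up for illustration. Note that ten 1-million-record dictionaries held at once need a fair amount of memory on the client side.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;
using StackExchange.Redis;

namespace TestRedis
{
    class MultiClientBenchmark
    {
        // Host taken from the benchmark above; the other values are illustrative.
        const string Host = "192.168.56.101";
        const int Clients = 10;
        const int RecordsPerClient = 1000000;

        static void Main(string[] args)
        {
            Stopwatch total = Stopwatch.StartNew();

            // Allow up to one worker per simulated client. This is an upper bound:
            // the scheduler may still run fewer clients at the same time.
            var options = new ParallelOptions { MaxDegreeOfParallelism = Clients };
            Parallel.ForEach(Enumerable.Range(1, Clients), options, clientId => RunClient(clientId));

            Console.WriteLine("TOTAL TIME: " + (total.ElapsedMilliseconds / 1000) + "s");
        }

        // Hypothetical helper: one full write + read/verify pass on its own connection.
        static void RunClient(int clientId)
        {
            var data = new Dictionary<string, string>(RecordsPerClient);
            for (int i = 0; i < RecordsPerClient; i++)
            {
                data.Add(Guid.NewGuid().ToString(), Guid.NewGuid().ToString());
            }

            // A separate multiplexer per client, so each one opens its own connection.
            using (ConnectionMultiplexer redis = ConnectionMultiplexer.Connect(Host))
            {
                IDatabase db = redis.GetDatabase();

                Stopwatch sw = Stopwatch.StartNew();
                foreach (KeyValuePair<string, string> kv in data)
                {
                    db.StringSet(kv.Key, kv.Value);
                }
                long writeSeconds = sw.ElapsedMilliseconds / 1000;

                sw.Restart();
                int errors = 0;
                foreach (KeyValuePair<string, string> kv in data)
                {
                    if (db.StringGet(kv.Key) != kv.Value)
                    {
                        errors++;
                    }
                }
                long readSeconds = sw.ElapsedMilliseconds / 1000;

                Console.WriteLine("Client " + clientId + ": write " + writeSeconds +
                    "s, read/verify " + readSeconds + "s, errors " + errors);
            }
        }
    }
}
```

Lowering Clients to 6 in a sketch like this would correspond to the 6-connection run further down.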

And here is where we start finding limits in (this) installation/configuration.

So, it got to about 3 million records inserted from 6 different connections before the load on the server caused the additional connections to time out (more or less).

Okay, let's try it with 6 connections. Hmm, I have 6 servers... hmm.
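If the timeouts are coming from the client side, one thing that could be tried is giving StackExchange.Redis more generous timeouts through ConfigurationOptions. This is a sketch under that assumption only: the 15-second values are arbitrary, and I haven't confirmed that this is what limits this particular setup (the single-core server may simply be saturated).

```csharp
using System;
using StackExchange.Redis;

class TimeoutConfigSketch
{
    static void Main()
    {
        // Assumed values for illustration; tune them for your own environment.
        var options = new ConfigurationOptions
        {
            EndPoints = { "192.168.56.101:6379" }, // host from the post, default Redis port
            ConnectTimeout = 15000,                // ms allowed for establishing the connection
            SyncTimeout = 15000,                   // ms allowed for each synchronous operation
            AbortOnConnectFail = false             // keep retrying instead of throwing on first failure
        };

        using (ConnectionMultiplexer redis = ConnectionMultiplexer.Connect(options))
        {
            IDatabase db = redis.GetDatabase();
            // Quick round trip to confirm the connection works.
            Console.WriteLine("Ping: " + db.Ping().TotalMilliseconds + " ms");
        }
    }
}
```

Longer timeouts only hide the symptom if the server itself is the bottleneck, so this is a diagnostic aid more than a fix.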

Mid write, good to go.

Mid read/verify, good to go.

And would you look at that: no exceptions, no read errors.

A general observation on your use of Parallel.ForEach and StringSetAsync: the test you're performing there is actually a measure of how fast you can put work onto the task scheduler. You're not awaiting the completion of the tasks, which could itself be problematic in the event of an exception.
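To make that observation concrete, here is a minimal sketch of a write benchmark that does wait for every write to complete before stopping the clock. It is not the code from the post: the class name, the DrainAsync helper, the smaller record count and the batch size of 10,000 are all assumptions for illustration, and it needs C# 7.1 or later for async Main.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;
using StackExchange.Redis;

class AwaitedWriteBenchmark
{
    const int Records = 100000;   // illustrative; smaller than the post's 1M
    const int BatchSize = 10000;  // illustrative; bounds the number of in-flight tasks

    static async Task Main()
    {
        // Same host as in the post.
        ConnectionMultiplexer redis = await ConnectionMultiplexer.ConnectAsync("192.168.56.101");
        IDatabase db = redis.GetDatabase();

        var data = new Dictionary<string, string>(Records);
        for (int i = 0; i < Records; i++)
        {
            data.Add(Guid.NewGuid().ToString(), Guid.NewGuid().ToString());
        }

        Stopwatch sw = Stopwatch.StartNew();
        var pending = new List<Task<bool>>(BatchSize);
        int failed = 0;

        foreach (KeyValuePair<string, string> kv in data)
        {
            // No FireAndForget flag: each call returns a task that completes
            // when the server has answered.
            pending.Add(db.StringSetAsync(kv.Key, kv.Value));
            if (pending.Count == BatchSize)
            {
                failed += await DrainAsync(pending);
            }
        }
        failed += await DrainAsync(pending); // wait for the last partial batch

        Console.WriteLine("TIME: " + sw.Elapsed.TotalSeconds.ToString("F1") + "s, failed writes: " + failed);
        redis.Dispose();
    }

    // Hypothetical helper: awaits every pending write, so a faulted task
    // surfaces its exception here, and counts writes that reported false.
    static async Task<int> DrainAsync(List<Task<bool>> pending)
    {
        bool[] results = await Task.WhenAll(pending);
        pending.Clear();
        return results.Count(ok => !ok);
    }
}
```

Because the stopwatch is read only after the final batch has been awaited, the reported time covers the server actually acknowledging the writes, not just the cost of queuing them.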