In the last few weeks I've been focusing on getting the Android build of my jungle game working and tested. Last time I did this I was working from Windows, but now that I've totally migrated to Linux I wasn't sure how easily everything would go. In the end, it turns out that support for Linux is great. In fact it was easier than getting things up and running on Windows, with no special drivers needed.

Android Studio, and particularly the emulators, definitely seem better than last time, with x86 emulators running at near native speed and much quicker APK uploads to the emulators. Uploads to the devices are still slow. I gather I could speed this up by updating them to a newer Android version, but then they would be less useful for testing.

The devices I have at home are an old Cat B15 phone (800x480, with a GPU that seems to date from 2006!), a Nexus 7 2012 tablet, and finally an Amlogic S905X TV media player (2017). Funnily enough the TV box has been the most involved to get working.

CPU issues

The first issue I had to contend with was a 'SIGBUS illegal alignment' error when running on the phone. After tracking it down, it turns out that this particular ARM CPU is very picky about the alignment of data. It is usually good practice to keep structures well aligned, but x86 is very forgiving, and I use quite a few structs packed to 1 byte with #pragma pack, particularly in serialization. Adding some padding to the structures sorted this.
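A minimal sketch of the kind of problem and fix described above, assuming the crash comes from reading a multi-byte field through a pointer that isn't suitably aligned. The struct and function names here are hypothetical, not taken from the game's code. It shows a packed layout, a padded layout, and a `memcpy`-based read that is safe at any byte offset.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical serialized record packed to 1 byte: 'value' lands at offset 1,
// which is not a multiple of 4.
#pragma pack(push, 1)
struct PackedRecord {
    std::uint8_t  tag;
    std::uint32_t value;
};
#pragma pack(pop)

// Same fields with explicit padding so 'value' sits on a 4-byte boundary.
struct PaddedRecord {
    std::uint8_t  tag;
    std::uint8_t  pad[3];
    std::uint32_t value;
};

static_assert(sizeof(PackedRecord) == 5, "packed layout has no padding");
static_assert(offsetof(PackedRecord, value) == 1, "value is misaligned");
static_assert(sizeof(PaddedRecord) == 8, "padded layout is 8 bytes");
static_assert(offsetof(PaddedRecord, value) == 4, "value is 4-byte aligned");

// Reads a 32-bit value from any byte offset in a buffer.
// memcpy makes no alignment assumption, unlike casting 'bytes' to uint32_t*.
inline std::uint32_t read_u32_unaligned(const unsigned char* bytes) {
    std::uint32_t out;
    std::memcpy(&out, bytes, sizeof(out));
    return out;
}
```

The pattern to avoid is casting a pointer into a byte buffer straight to `std::uint32_t*` and dereferencing it. x86 tolerates that, but stricter CPUs can fault on it.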

Next I spent many hours trying to figure out a strange bug whereby the object lighting worked fine on the emulators but looked wrong on the device. I had a suspicion it was a signed / unsigned issue in the values for diffuse light in a shader input, but I couldn't see anything wrong with the code. Almost unbelievably, when I tracked it down, it turned out there wasn't anything wrong with the code. The problem was that with the x86 compiler a 'char' defaults to signed, but with the ARM compiler 'char' defaults to unsigned!!

This is an interesting choice (apparently unsigned may be faster on the ARM chip) but it goes against the usual convention for short, int etc., which are signed by default. It was easy enough to fix by flipping a compiler switch, though I guess I should really be using explicit signed / unsigned types. It has always struck me as somewhat weird that C is so vague about the built-in types, both in number of bits and in sign, given that changing these usually causes bugs.
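To illustrate the difference, here is a small sketch with hypothetical helper names, not the game's code. The same raw byte is read three ways: as an explicitly signed value, as an explicitly unsigned value, and as a plain `char`. Only the plain `char` result depends on the compiler target. The post doesn't name the switch used; on GCC and Clang, `-fsigned-char` is one flag that forces plain `char` to be signed.

```cpp
#include <cstring>
#include <limits>

// True where plain 'char' is signed (typical for x86 targets),
// false where it is unsigned (typical for ARM targets).
constexpr bool kPlainCharIsSigned = std::numeric_limits<char>::is_signed;

// Interprets a raw byte as a signed 8-bit value: always in [-128, 127].
inline int as_signed(unsigned char raw) {
    return raw >= 128 ? static_cast<int>(raw) - 256 : static_cast<int>(raw);
}

// Interprets a raw byte as an unsigned 8-bit value: always in [0, 255].
inline int as_unsigned(unsigned char raw) {
    return static_cast<int>(raw);
}

// Interprets a raw byte as plain 'char': the result depends on the target.
inline int as_plain_char(unsigned char raw) {
    char c;
    std::memcpy(&c, &raw, 1);
    return static_cast<int>(c);
}
```

For the byte `0xF0`, `as_signed` gives -16 and `as_unsigned` gives 240 on every target, while `as_plain_char` gives one or the other depending on `kPlainCharIsSigned`. Storing such values as `std::int8_t` or `std::uint8_t` removes the ambiguity.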

GPU issues

The biggest problem I tend to have with OpenGL ES devices is the 'precision' specifiers in shaders. You can set them however you want on the desktop, but the desktop driver just ignores them and uses high precision. Real devices, however, have different capabilities for lowp, mediump and highp, in both vertex and fragment shaders.

What would be really helpful is if the guys making the emulators / OpenGL ES on the desktop could let it emulate the lower precision, allowing us to debug precision issues on the desktop. Alas, I couldn't figure out a way to get this to work. It may be impossible using the hardware OpenGL ES, but the emulator can also use SwiftShader, so maybe they could implement it there?

My biggest problem was that my worst performing device for precision was actually my newest, the TV box. It is built for super fast decoding of high resolution video, but the fragment shaders are a minimal 10 bit precision affair, and the fill rate is poor for a 1080p device. This was coupled with the problem that I couldn't connect it to the desktop over USB for debugging. I was literally compiling an APK, putting it on a USB stick (or Dropbox), taking it to the bedroom, installing it and running it. This is not ideal, and I will look into either running ADB over my LAN or getting another low precision device for testing.

I won't go into detail on the precision issues here, as I wrote more about them in this post:

https://www.gamedev.net/forums/topic/694188-debugging-precision-issues-in-opengl-es-2

As a quick summary, 10 bits of precision in the fragment shader can lead to sampling errors in any maths done there, especially texture coordinate maths. I was able to fix some of my problems by moving the texture coordinate calculations to the vertex shader, which has more precision. Then it turned out that my TV box (and presumably many such chipsets) supports an extra high precision path in the fragment shader, *as long as you don't touch the input data*. This allows accurate uv coords on large texture maps, because that path doesn't go through the 10 bit precision.
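To get a feel for the numbers, here is a rough worked example. It uses a deliberately simplified model that treats "10 bits of precision" as a uv coordinate snapped to multiples of 1/1024. Real hardware stores floating point values, so the actual error varies with magnitude, and the function names are hypothetical.

```cpp
#include <cmath>

// Simplified model: snap a value to the nearest multiple of 1 / 2^bits.
inline double quantize(double value, int bits) {
    const double steps = std::ldexp(1.0, bits);  // 2^bits
    return std::round(value * steps) / steps;
}

// How many texels one quantization step covers on a texture of this width.
inline double texels_per_step(int bits, int texture_width) {
    return texture_width / std::ldexp(1.0, bits);
}

// Sampling error, in texels, for coordinate u after quantization.
inline double texel_error(double u, int bits, int texture_width) {
    return std::fabs(quantize(u, bits) - u) * texture_width;
}
```

Under this model, `texels_per_step(10, 2048)` is 2: on a 2048 wide texture the coordinate can only land on every second texel, whereas on a 256 wide texture each step is a quarter of a texel and the error is invisible.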

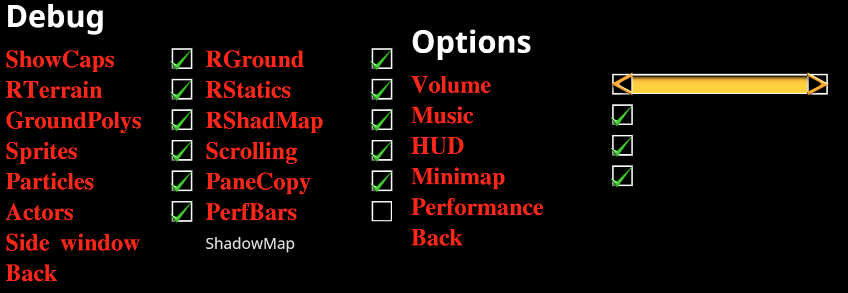

Menus

I've written a rudimentary menu system for the game, with tickboxes, sliders and listboxes. This has enabled me to put in a bunch of debugging features I can turn on and off on devices, to try and find out what affects performance without recompiling. Another trick from my console days is to put in some simple graphical performance bars. I record the last 60 frames into a circular buffer and store things like the frame duration and when certain game tasks took place. In my case the big issue is when a 'scroll' event takes place, as I render horizontal and vertical tiles of the landscape as you move about it.

In the diagram the blue bar is where a scroll happens, a green bar is where the ground scroll happens, and the red is the frame duration. It doesn't show much on the desktop as the GPU is fast, but on the slow devices I often get a dropped frame on the scrolls, so I am trying to reduce this.
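The 60 frame history behind those bars can be sketched roughly like this. It is a minimal illustration with hypothetical type and field names, not the game's actual code. Once the buffer is full, each new sample overwrites the oldest one.

```cpp
#include <array>
#include <cstddef>

// One entry per rendered frame (fields are illustrative).
struct FrameSample {
    float duration_ms = 0.0f;  // how long the frame took
    bool  scrolled    = false; // whether a scroll event happened this frame
};

// Fixed-size circular buffer holding the most recent 60 frames.
class FrameHistory {
public:
    static constexpr std::size_t kCapacity = 60;

    // Stores a sample, overwriting the oldest one once the buffer is full.
    void push(const FrameSample& sample) {
        samples_[next_] = sample;
        next_ = (next_ + 1) % kCapacity;
        if (count_ < kCapacity) ++count_;
    }

    std::size_t size() const { return count_; }

    // Index 0 is the oldest stored sample, size() - 1 the newest.
    const FrameSample& at(std::size_t i) const {
        const std::size_t oldest = (next_ + kCapacity - count_) % kCapacity;
        return samples_[(oldest + i) % kCapacity];
    }

private:
    std::array<FrameSample, kCapacity> samples_{};
    std::size_t next_  = 0;  // slot the next push will write to
    std::size_t count_ = 0;  // number of valid samples, at most kCapacity
};
```

Drawing the bars is then a loop from `0` to `size() - 1`, with one bar per sample coloured by which events were flagged in that frame.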

I can turn on and off various aspects of the scrolling / rendering to track down what causes performance issues. Certainly PCF shadows are a big ask on mobiles, as is the ground (terrain) shader.

On my first incarnation of the game I pre-rendered everything (graphics + shadows) out to a massive texture at loadup and just scrolled through it as you moved. This is great for performance, but unfortunately uses a shedload of memory if you want big maps. And phones don't have lots of memory.

So a lot of technical effort has gone into writing the scrolling system which redraws the background in horizontal and vertical tiles as you move about. This is much more tricky with an angled landscape than with a top-down 90 degree view, and even more tricky when you have to render shadow maps as you move.

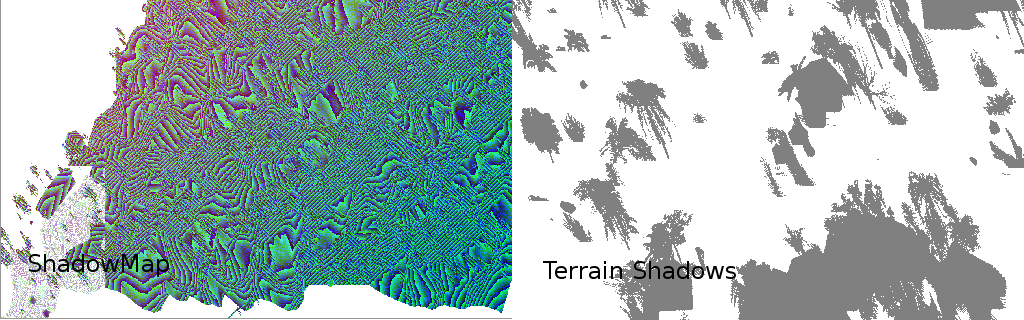

Having identified the shadow map pass as a bottleneck, I did some quick calculations for my max map size (approx 16384x16384) and decided that I could probably get away with pre-rendering the shadow map to a 2048x2048 texture. Alright, it isn't very high resolution, but it beats turning shadows off completely.

This is working fine, and avoids a lot of ugly issues from scrolling the shadow map. To render out the shadow map I render a bunch of 256x256 tiles and copy them to the final shadow map.
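The quick calculation works out roughly as follows. The three sizes come from the text above; the row-major tile ordering and the names are assumptions made for illustration.

```cpp
// Sizes quoted in the post.
constexpr int kMapSize       = 16384; // approximate maximum map size per side
constexpr int kShadowMapSize = 2048;  // pre-rendered shadow map per side
constexpr int kTileSize      = 256;   // size of each rendered tile per side

// Each shadow map texel covers this many map units per side.
constexpr int kMapUnitsPerTexel = kMapSize / kShadowMapSize;

// Number of tiles needed to fill the shadow map.
constexpr int kTilesPerSide = kShadowMapSize / kTileSize;
constexpr int kTileCount    = kTilesPerSide * kTilesPerSide;

struct TileRect {
    int x, y, width, height;
};

// Destination rectangle in the final shadow map for a given tile,
// assuming tiles are laid out in row-major order (an assumption).
constexpr TileRect tile_rect(int index) {
    return TileRect{(index % kTilesPerSide) * kTileSize,
                    (index / kTilesPerSide) * kTileSize,
                    kTileSize, kTileSize};
}

static_assert(kMapUnitsPerTexel == 8, "one texel covers 8x8 map units");
static_assert(kTileCount == 64, "8x8 tiles fill the 2048x2048 shadow map");
```

So each shadow texel covers an 8x8 patch of the map at the largest map size, and the whole shadow map takes 64 tile renders at load time.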

This fixed some of the slowness, and then I realised I could go a step further. Much of the PCF shadow slowdown came from rendering the landscape shadows. The buildings and objects are much rarer, so I figured I could pre-render a low-res landscape shadow texture and use this when scrolling, so that I only need to do expensive PCF / simple shadows on the static and dynamic objects.

This worked a treat, and incidentally solves at a stroke precision issues I was having with the shadow shader on the 10 bit hardware.

Joysticks

As well as supporting touchscreens and keyboards, I want to support gamepads, so I bought a Bluetooth / wireless gamepad for Christmas. It works great with the TV box using the wireless dongle. Unfortunately, the Bluetooth doesn't seem to work with my old phone and tablet, or with my desktop, so it has been very difficult, if not impossible, to debug and get the analog joystick working.

And, in an oversight(?) for the emulator, there doesn't seem to be an option for emulating a gamepad. I can get a D-pad but I don't think it is analog. After some stabs in the dark with the docs I am still facing gamepad focus issues, so this will have to wait until I have a suitable device to debug on.

That's all for now folks!

A D-pad is most definitely not analog. Also, you mentioned needing special drivers on Windows but not on Linux. You need to keep drivers updated on both OSes for testing purposes. I'm using OpenGL too and this is done automatically for me on Windows, so I'm not sure what you mean by that. Nice update and keep up the good work.