Newgamemodder said:

I also understand that a rule of graphics is 1 triangle per pixel.

Not sure what you mean, but if we use the GPU hardware to render one triangle per pixel, that's inefficient.

GPUs assume that triangles are larger than that: pixel shader threads always work in groups of 4 on a quad of 2x2 pixels. Often some of those pixels are not inside the triangle, but the threads do the calculations anyway, which is a waste. So if we render one-pixel-sized triangles, only 25% of the pixel shader threads contribute to the visible image.

That's the primary reason why Nanite uses a software rasterizer implemented in compute for small triangles, which is faster than hardware acceleration in this case.
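To put a number on the quad overhead, here is a toy sketch of my own, not how any real GPU or Nanite is implemented. It rasterizes a triangle by testing pixel centers, then counts how many 2x2 quads are touched and how many shader lanes that would launch. The function names and the inclusive edge test are assumptions for illustration (real hardware uses a top-left fill rule).

```python
def edge(ax, ay, bx, by, px, py):
    """Edge function: its sign tells on which side of the edge a->b the point p lies."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def covered_pixels(tri, width, height):
    """Return the set of (x, y) pixels whose centers lie inside the triangle."""
    (ax, ay), (bx, by), (cx, cy) = tri
    area = edge(ax, ay, bx, by, cx, cy)
    if area == 0:
        return set()  # degenerate triangle covers nothing
    sign = 1.0 if area > 0 else -1.0  # accept either winding order
    pixels = set()
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = sign * edge(ax, ay, bx, by, px, py)
            w1 = sign * edge(bx, by, cx, cy, px, py)
            w2 = sign * edge(cx, cy, ax, ay, px, py)
            # Simplification: points exactly on an edge count as inside.
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                pixels.add((x, y))
    return pixels


def quad_utilization(tri, width, height):
    """Return (useful lanes, launched lanes), assuming 4 lanes per touched 2x2 quad."""
    pixels = covered_pixels(tri, width, height)
    quads = {(x // 2, y // 2) for (x, y) in pixels}
    return len(pixels), 4 * len(quads)


tiny = ((0.1, 0.1), (1.4, 0.1), (0.1, 1.4))      # covers a single pixel center
large = ((0.0, 0.0), (16.0, 0.0), (0.0, 16.0))   # covers many pixels
for name, tri in (("tiny", tiny), ("large", large)):
    useful, launched = quad_utilization(tri, 16, 16)
    print(name, useful, launched, useful / launched)
```

The tiny triangle hits one pixel center but still occupies a full quad, so 1 of 4 lanes is useful, which is the 25% from above. The large triangle only wastes lanes along its diagonal edge.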

Newgamemodder said:

Lets say i have X amount of triangles in my frustum. Will only 8,294,400 worth of triangles be in view?

We can exceed this limit in multiple ways:

Using MSAA, multiple triangles can contribute to a single pixel, e.g. at most 4 with 4x MSAA (2x2 samples), or 16 with 16x MSAA (4x4 samples).

Using TAA it can be even more, since it typically accumulates around 64 samples over time. Even if only one triangle can win the pixel in a single render, combining multiple frames lets multiple triangles contribute to one visible pixel.

Same for other anti-aliasing techniques. Any form of AA requires that multiple triangles can contribute to one pixel.
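As a quick sanity check on those numbers, here is a small sketch that computes the upper bound on triangles that could contribute to one frame: one per sample. The function name is made up, and I assume 3840x2160 for 4K, which matches the 8,294,400 from the question.

```python
def max_contributing_triangles(width, height, samples_per_pixel=1):
    """Upper bound: at most one triangle can win each sample."""
    return width * height * samples_per_pixel


# Assumed 4K resolution of 3840x2160; the sample counts are the ones discussed above.
for label, samples in (("no AA", 1), ("4 samples", 4), ("16 samples", 16), ("64 samples", 64)):
    print(label, max_contributing_triangles(3840, 2160, samples))
```

This is only a ceiling. In practice far fewer distinct triangles end up in the image, because neighboring samples are usually won by the same triangle.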

But of course this does not mean you could see subpixel details on your screen. The only reasonable goal here is a smooth image. Ideally our triangles are not smaller than a pixel. If they are, we create a new potential source of aliasing: when the screen resolution is not high enough for our details, the pixel starts to flicker, because just one triangle wins it out of many tiny subpixel triangles that all have different colors.

So it's not just a waste of resources, it also looks bad.
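The flicker can be shown with a toy model, which is purely my own illustration: one pixel is covered by 16 equally wide strips standing in for subpixel triangles, alternating black and white. Each frame the sample position lands somewhere else inside the pixel (as it would under slight camera motion). With one sample per frame the pixel jumps between 0 and 1; averaging many samples settles near the mean gray.

```python
import random
import statistics


def sample_pixel(strip_values, u):
    """Return the value of the strip that contains sample position u in [0, 1)."""
    index = min(int(u * len(strip_values)), len(strip_values) - 1)
    return strip_values[index]


def render_frames(strip_values, frames, samples_per_frame, rng):
    """Render one pixel for several frames, averaging random samples per frame."""
    result = []
    for _ in range(frames):
        total = 0.0
        for _ in range(samples_per_frame):
            total += sample_pixel(strip_values, rng.random())
        result.append(total / samples_per_frame)
    return result


strips = [0.0, 1.0] * 8          # hypothetical subpixel "triangles" with different colors
rng = random.Random(1)           # fixed seed so the run is repeatable
one_sample = render_frames(strips, 50, 1, rng)
many_samples = render_frames(strips, 50, 64, rng)

# Frame-to-frame variation: large with one sample, small with many.
print(statistics.pstdev(one_sample), statistics.pstdev(many_samples))
```

The random sample positions are an assumption to keep the sketch short. Real TAA uses a fixed jitter sequence and reprojection, but the averaging effect is the same idea.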

Newgamemodder said:

Now if that is the truth how the expletive is this company managing to render 1.8 billion triangles?

Newgamemodder said:

And how is it that this youtuber manages 800 million triangles at 12K? Shouldn't it be a maximum of 79,626,240?

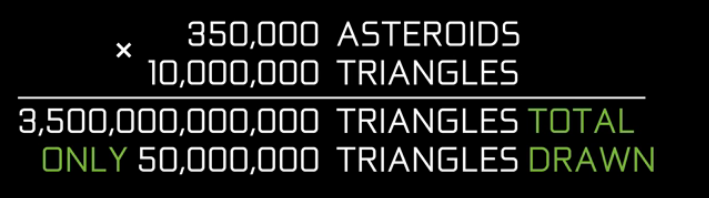

Having a scene of X triangles at the highest level of detail does not mean all of them are actually visible. Some may be off screen, some will face backwards, others will be too small to cover a pixel center, and most importantly: many of them will be occluded by other triangles in front of them, and many are replaced by a lower level of detail for the current perspective.

So those numbers describe 3D datasets and how much of them they can manage and process.

Notice this is independent of the final presentation on a flat 2D screen.
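Some of the filters mentioned above are easy to sketch for a single screen-space triangle. The following is a simplified illustration under my own assumptions: triangles with positive signed area count as front-facing (the winding convention varies between APIs), and occlusion and LOD selection are left out because they need the whole scene.

```python
import math
from collections import Counter


def edge(ax, ay, bx, by, px, py):
    """Edge function: positive when p is on the inner side of a->b for a front-facing triangle."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def classify(tri, width, height):
    """Classify a screen-space triangle by the first filter that rejects it."""
    xs = [p[0] for p in tri]
    ys = [p[1] for p in tri]
    # 1. Bounding box entirely outside the screen.
    if max(xs) <= 0 or min(xs) >= width or max(ys) <= 0 or min(ys) >= height:
        return "off-screen"
    (ax, ay), (bx, by), (cx, cy) = tri
    # 2. Assumed convention: non-positive signed area means back-facing (or degenerate).
    if edge(ax, ay, bx, by, cx, cy) <= 0:
        return "back-facing"
    # 3. Test only the pixel centers inside the clamped bounding box.
    x0, x1 = max(0, math.floor(min(xs))), min(width - 1, math.floor(max(xs)))
    y0, y1 = max(0, math.floor(min(ys))), min(height - 1, math.floor(max(ys)))
    for y in range(y0, y1 + 1):
        for x in range(x0, x1 + 1):
            px, py = x + 0.5, y + 0.5
            if (edge(ax, ay, bx, by, px, py) >= 0
                    and edge(bx, by, cx, cy, px, py) >= 0
                    and edge(cx, cy, ax, ay, px, py) >= 0):
                return "rasterized"
    return "no pixel center hit"


triangles = [
    ((-5.0, -5.0), (-1.0, -5.0), (-5.0, -1.0)),  # outside the screen
    ((0.1, 0.1), (0.1, 1.4), (1.4, 0.1)),        # reversed winding
    ((0.6, 0.6), (0.9, 0.6), (0.6, 0.9)),        # falls between pixel centers
    ((0.1, 0.1), (1.4, 0.1), (0.1, 1.4)),        # covers one pixel center
]
print(Counter(classify(t, 16, 16) for t in triangles))
```

Even in this tiny made-up set only one of four triangles produces a pixel, and that is before occlusion and LOD remove most of the rest in a real scene.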

If i want to impress, i'll make two related statements:

- My scene has 24 trillion triangles.

- But i only need to render 3 million of them per frame, showing all the glorious details.

The first is what they tell consumers, the second is what they tell devs at GDC.