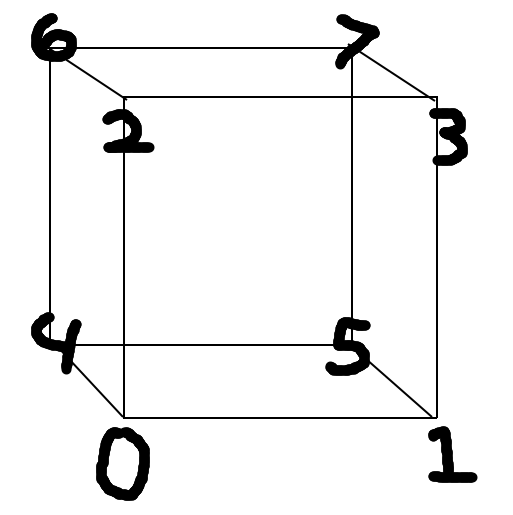

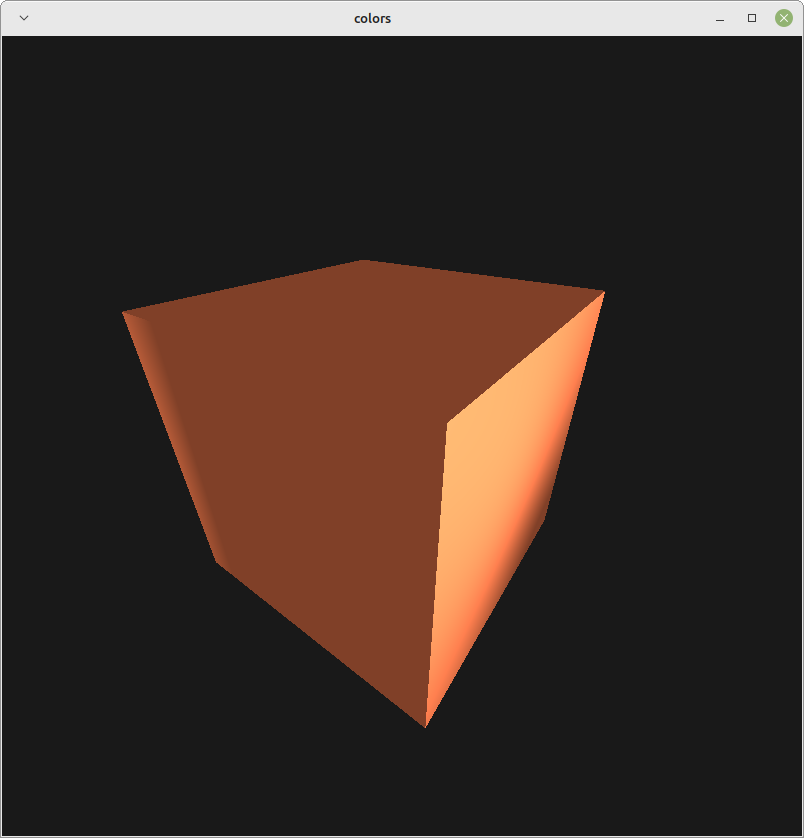

I am trying to properly generate normals for a cube that I originally specify with just 8 vertices, visualized like so:

I define the vertices and their indices in this order:

vec3 vertices[] = {

/* 0, 1, 2, 3 */

{ -0.5f, -0.5f, 0.5f },

{ 0.5f, -0.5f, 0.5f },

{ -0.5f, 0.5f, 0.5f },

{ 0.5f, 0.5f, 0.5f },

/* 4, 5, 6, 7 */

{ -0.5f, -0.5f, -0.5f },

{ 0.5f, -0.5f, -0.5f },

{ -0.5f, 0.5f, -0.5f },

{ 0.5f, 0.5f, -0.5f },

};

GLubyte indices[] = {

0, 1, 3, /* front */

0, 3, 2,

1, 5, 7, /* right */

1, 7, 3,

5, 4, 6, /* back */

5, 6, 7,

4, 0, 2, /* left */

4, 2, 6,

4, 5, 1, /* bottom */

4, 1, 0,

2, 3, 7, /* top */

2, 7, 6,

};

/* array to hold all vertex positions and normals */

vec3 vertAttribs[sizeof(indices)*2];

This loop is supposed to expand the original 8 vertices into 36 (plus 36 normal vectors), computing one normal per triangle so that the cube is flat shaded.

int i;

for (i = 0; i < sizeof(indices); i += 3) {

GLubyte vertA = indices[i];

GLubyte vertB = indices[i+1];

GLubyte vertC = indices[i+2];

vec3 edgeAB;

vec3 edgeAC;

/* AB = B - A

* AC = C - A */

glm_vec3_sub(vertices[vertB], vertices[vertA], edgeAB);

glm_vec3_sub(vertices[vertC], vertices[vertA], edgeAC);

/* normal = norm(cross(AB, AC)) */

vec3 normal;

glm_vec3_crossn(edgeAB, edgeAC, normal);

/* copy vertex positions into first half of array */

glm_vec3_copy(vertices[vertA], vertAttribs[i]);

glm_vec3_copy(vertices[vertB], vertAttribs[i+1]);

glm_vec3_copy(vertices[vertC], vertAttribs[i+2]);

/* vertex normals stored in second half of array */

glm_vec3_copy(normal, vertAttribs[i+36]);

glm_vec3_copy(normal, vertAttribs[i+1+36]);

glm_vec3_copy(normal, vertAttribs[i+2+36]);

}

Then I buffer the data:

glBufferData(GL_ARRAY_BUFFER, sizeof(vertAttribs), vertAttribs, GL_STATIC_DRAW);

/* positions */

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 0, 0);

glEnableVertexAttribArray(0);

/* normals */

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 0, (void*)(36 * sizeof(float)));

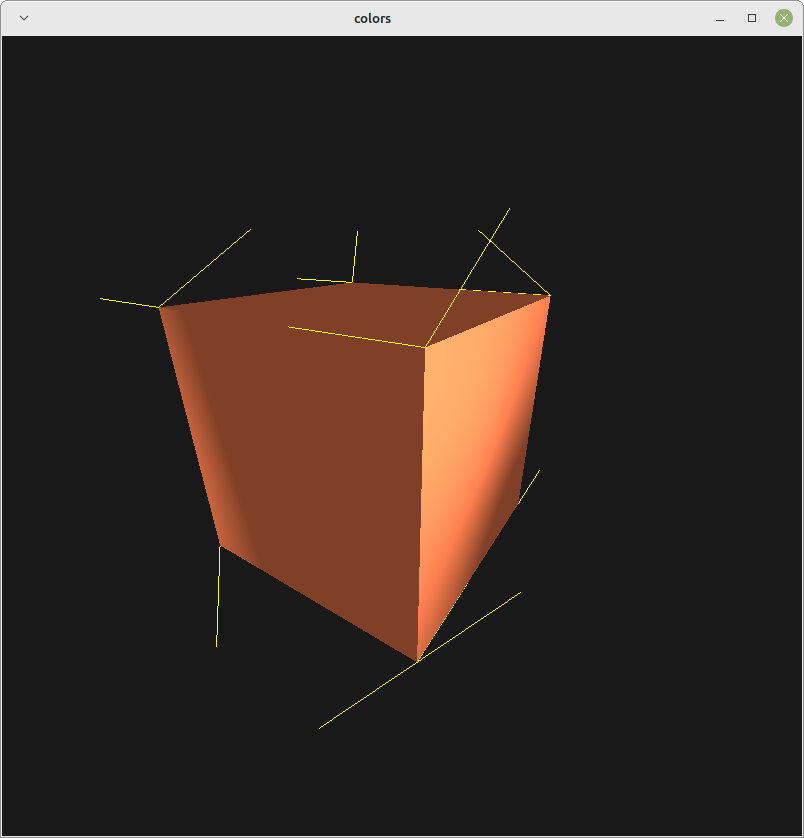

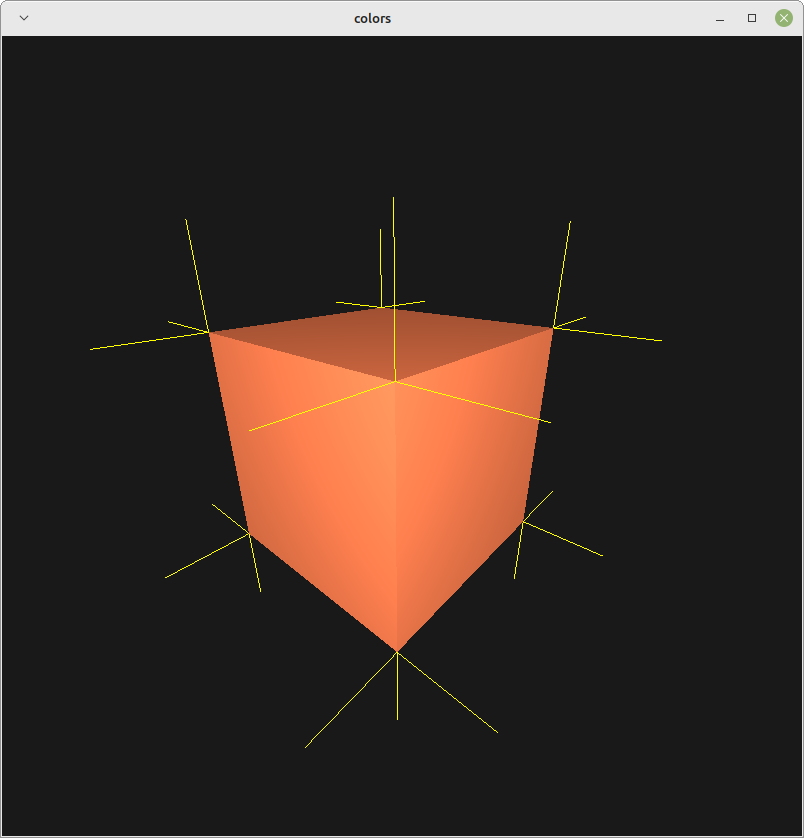

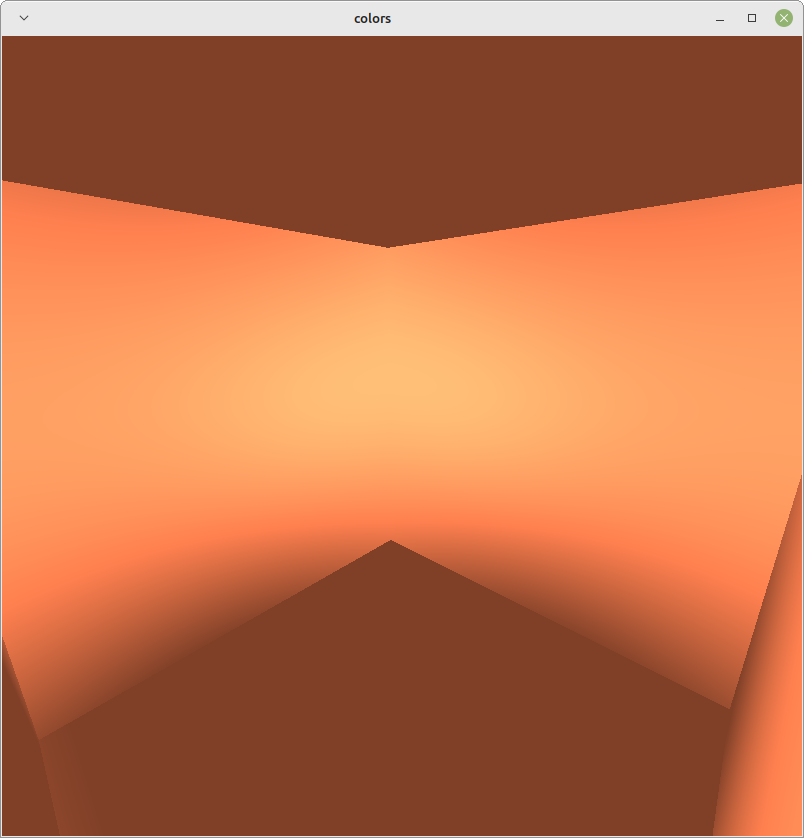

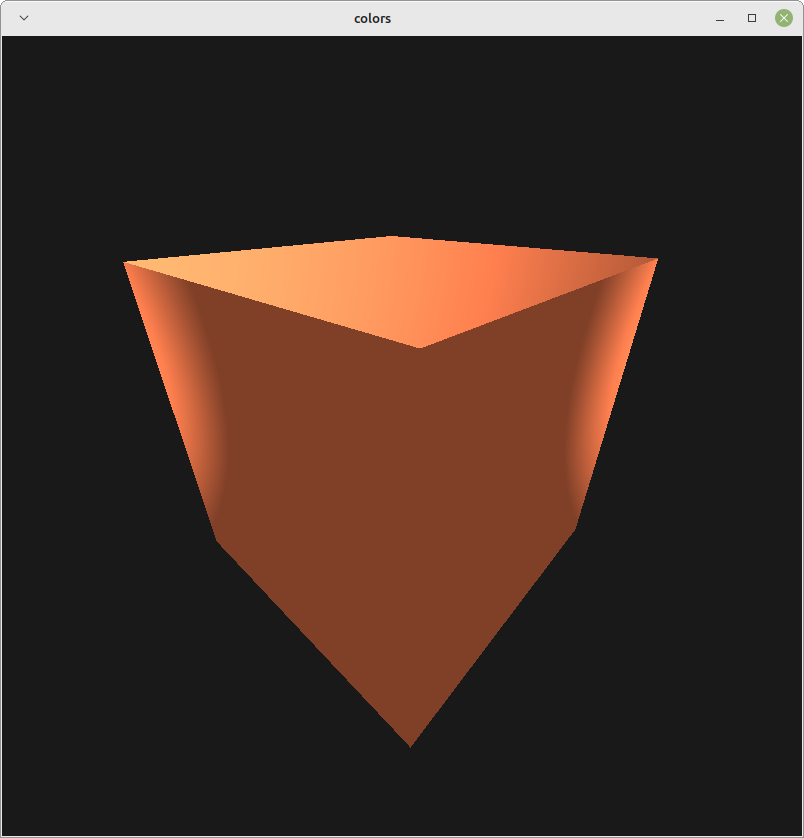

glEnableVertexAttribArray(1);Unfortunately, it does not seem to produce proper lighting:
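As a sanity check on the buffer layout, the following minimal sketch computes where the normals would begin when 36 positions are stored first and 36 normals second. It assumes each vector is three tightly packed floats, as cglm's `vec3` is; `Vec3f`, `VERTEX_COUNT`, and `normals_byte_offset` are hypothetical names used only for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for cglm's vec3: three tightly packed floats. */
typedef float Vec3f[3];

/* 12 triangles * 3 vertices each, as in the loop above. */
enum { VERTEX_COUNT = 36 };

static_assert(sizeof(Vec3f) == 3 * sizeof(float), "Vec3f must be tightly packed");

/* Byte offset of the first normal when all positions are stored first
 * and all normals follow them in the same buffer. */
static size_t normals_byte_offset(void) {
    return VERTEX_COUNT * sizeof(Vec3f);
}
```

With 4-byte floats, `normals_byte_offset()` evaluates to 432 bytes, whereas `36 * sizeof(float)` is 144 bytes, so the two are worth comparing against the offset passed to `glVertexAttribPointer`.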

Also, for reference, I am following the lighting tutorial on learnopengl.com (https://learnopengl.com/Lighting/Basic-Lighting), replicating only the diffuse section for now. My shaders are set up the same way as the site instructs, and the lighting does work correctly when I provide the normals manually. I understand that my setup is not exactly the same as on that page; I am trying an alternative method for practice.

Because the lighting works as intended when the normals are specified manually, the mistake is probably somewhere in my loop, but I've looked it over repeatedly and can't spot what's wrong. I'd appreciate a second set of eyes to help me see what I'm doing wrong. Thank you.
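To check the loop's normals independently of cglm, here is a minimal plain-C sketch that evaluates cross(B - A, C - A) for each of the 12 triangles, using the same vertex and index data as above. The `Vec3f` struct and the function names are hypothetical. The sketch skips the normalization step that `glm_vec3_crossn` performs, on the assumption that it is unnecessary here: for this unit cube each cross product already has length 1.

```c
#include <assert.h>

/* Hypothetical stand-in for cglm's vec3. */
typedef struct { float x, y, z; } Vec3f;

/* Same 8 corner positions as the vertices[] array in the question. */
static const Vec3f cube_vertices[8] = {
    { -0.5f, -0.5f,  0.5f }, {  0.5f, -0.5f,  0.5f },
    { -0.5f,  0.5f,  0.5f }, {  0.5f,  0.5f,  0.5f },
    { -0.5f, -0.5f, -0.5f }, {  0.5f, -0.5f, -0.5f },
    { -0.5f,  0.5f, -0.5f }, {  0.5f,  0.5f, -0.5f },
};

/* Same 12 triangles as the indices[] array in the question. */
static const unsigned char cube_indices[36] = {
    0, 1, 3,  0, 3, 2,   /* front  */
    1, 5, 7,  1, 7, 3,   /* right  */
    5, 4, 6,  5, 6, 7,   /* back   */
    4, 0, 2,  4, 2, 6,   /* left   */
    4, 5, 1,  4, 1, 0,   /* bottom */
    2, 3, 7,  2, 7, 6,   /* top    */
};

/* cross(b - a, c - a): perpendicular to the triangle (a, b, c),
 * pointing toward the viewer when a, b, c appear counter-clockwise. */
static Vec3f face_cross(Vec3f a, Vec3f b, Vec3f c) {
    Vec3f ab = { b.x - a.x, b.y - a.y, b.z - a.z };
    Vec3f ac = { c.x - a.x, c.y - a.y, c.z - a.z };
    Vec3f n = {
        ab.y * ac.z - ab.z * ac.y,
        ab.z * ac.x - ab.x * ac.z,
        ab.x * ac.y - ab.y * ac.x,
    };
    return n;
}

/* Cross product for the t-th triangle (0..11) of the index list. */
static Vec3f triangle_cross(int t) {
    assert(t >= 0 && t < 12);
    const unsigned char *tri = &cube_indices[3 * t];
    return face_cross(cube_vertices[tri[0]],
                      cube_vertices[tri[1]],
                      cube_vertices[tri[2]]);
}
```

Calling `triangle_cross(t)` for `t` from 0 to 11 and comparing each result with the outward axis of the face named in the index comments (for example +Z for front, -Y for bottom) shows whether the winding order and the cross-product step are behaving as intended.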