Josh Klint said:

Any general tips on how to reduce light leakage and errors?

This was the reason I gave up on my voxel GI experiments.

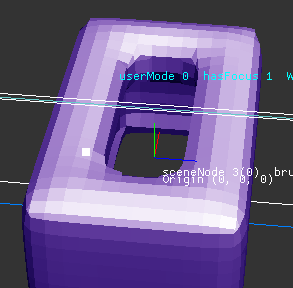

Surfels can prevent leakage robustly: I have a hierarchy of surfels on the surface, which looks like a quadtree. Going higher up the hierarchy, multiple disjoint surfaces merge and issues creep in.

But sticking with the flat-surface case, 4 surfels usually merge into one larger parent surfel. So it's similar to mip maps and allows the same prefiltering.
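A minimal sketch of that merge in Python, assuming each surfel stores a position, a unit normal, an area and an RGB radiance (the `Surfel` layout and `merge_children` are hypothetical, not taken from the post). Merging four children is then an area-weighted box filter, just like building a mip level:

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]

@dataclass
class Surfel:
    # Hypothetical surfel record: a flat patch with position, unit normal,
    # area in m^2 and outgoing radiance (RGB).
    position: Vec3
    normal: Vec3
    area: float
    radiance: Vec3

def _scale(v: Vec3, s: float) -> Vec3:
    return (v[0] * s, v[1] * s, v[2] * s)

def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def merge_children(children: list[Surfel]) -> Surfel:
    """Area-weighted merge of child surfels (assumed to have positive area) into one parent."""
    total_area = sum(c.area for c in children)
    pos: Vec3 = (0.0, 0.0, 0.0)
    nrm: Vec3 = (0.0, 0.0, 0.0)
    rad: Vec3 = (0.0, 0.0, 0.0)
    for c in children:
        w = c.area / total_area
        pos = _add(pos, _scale(c.position, w))
        nrm = _add(nrm, _scale(c.normal, c.area))
        rad = _add(rad, _scale(c.radiance, w))
    length = math.sqrt(sum(x * x for x in nrm))
    # For coplanar children the summed normal has length == total_area; it
    # shrinks as the children bend away from each other, which is where the
    # flat-patch approximation starts to break down higher up the hierarchy.
    nrm = _scale(nrm, 1.0 / length) if length > 0.0 else (0.0, 0.0, 1.0)
    return Surfel(pos, nrm, total_area, rad)

# Four 1 x 1 m surfels tiling a 2 x 2 m wall in the z = 0 plane.
kids = [Surfel((x, y, 0.0), (0.0, 0.0, 1.0), 1.0, (1.0, 0.5, 0.25))
        for x in (0.5, 1.5) for y in (0.5, 1.5)]
parent = merge_children(kids)
```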

Imagine a wall of 2 x 2 meters: the whole wall may be just one surfel. But because the surfel is flat, it can still approximate the thin wall and prevent leakage.
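To make the "flat surfel still blocks" point concrete, here is a minimal Python sketch that models the parent surfel as an oriented disk of radius 1 m centred on the wall (an assumption; a quad matching the 2 x 2 m wall would behave the same way). A ray is either stopped by it or not, from either side, so there is no partial density to leak through:

```python
def ray_disk_hit(origin, direction, center, normal, radius, eps=1e-9):
    """Return the hit distance t along the ray, or None if the ray misses the disk."""
    denom = sum(d * n for d, n in zip(direction, normal))
    if abs(denom) < eps:            # ray runs parallel to the disk plane
        return None
    t = sum((c - o) * n for c, o, n in zip(center, origin, normal)) / denom
    if t < 0.0:                     # disk plane lies behind the ray origin
        return None
    hit = [o + t * d for o, d in zip(origin, direction)]
    dist2 = sum((h - c) ** 2 for h, c in zip(hit, center))
    return t if dist2 <= radius * radius else None

# One surfel standing in for the whole wall: centre (1, 1, 0), facing +z.
wall = dict(center=(1.0, 1.0, 0.0), normal=(0.0, 0.0, 1.0), radius=1.0)
front = ray_disk_hit((1.0, 1.0, -1.0), (0.0, 0.0, 1.0), **wall)
back = ray_disk_hit((1.0, 1.0, 1.0), (0.0, 0.0, -1.0), **wall)
```

Both rays stop at t = 1, whichever side they come from, because the test only depends on the plane and the disk extent, not on a density value.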

In contrast, with voxels we may have a 16^3 volume with one slice of solid voxels representing the same wall. A single voxel at the top of the mip chain will have low density (only 1/16 with averaged mips), and there is no way to know from which direction light should not pass through.
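A minimal Python sketch of the voxel case, assuming the mip chain is built by plain 2x2x2 averaging of occupancy (the post doesn't say which filter was used; `wall_grid`, `downsample` and `build_mip_chain` are hypothetical helpers). A one-voxel-thick wall in a 16^3 grid ends up as a top-level voxel with density 1/16, and a wall along a different axis gives exactly the same value:

```python
def wall_grid(size, axis, index):
    """Occupancy grid [x][y][z] with one solid slice perpendicular to `axis` (0=x, 1=y, 2=z)."""
    return [[[1.0 if (x, y, z)[axis] == index else 0.0
              for z in range(size)] for y in range(size)] for x in range(size)]

def downsample(grid):
    """One mip step: average each 2x2x2 block of a cubic grid."""
    n = len(grid) // 2
    return [[[sum(grid[2 * x + i][2 * y + j][2 * z + k]
                  for i in (0, 1) for j in (0, 1) for k in (0, 1)) / 8.0
              for z in range(n)] for y in range(n)] for x in range(n)]

def build_mip_chain(grid):
    """Return [level0, level1, ...] down to a single voxel."""
    chain = [grid]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain

chain = build_mip_chain(wall_grid(16, axis=0, index=8))   # wall facing +/-x
top = chain[-1][0][0][0]                                   # one voxel for the whole volume
other = build_mip_chain(wall_grid(16, axis=1, index=3))[-1][0][0][0]  # wall facing +/-y
```

Read as opacity, that top voxel lets 15/16 of a cone's light through, and the scalar carries no hint of the wall's orientation or which side the light comes from.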

I tried to improve this by including directional information: I had a mip chain of normals multiplied by surface area, encoded in SH2.

But this did not work either, because SH2 cannot represent both the front and back sides of a wall, which may both fall into a single voxel.

To encode front and back, we would need SH3, which already has 9 coefficients, but still fails on complex geometry, e.g. a corner where two walls meet at right angles.
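One way to see both failure modes, as a minimal Python sketch. It assumes "SH2" means bands 0 to 1 (4 coefficients), "SH3" means bands 0 to 2 (9 coefficients), the standard real SH basis, and that each surface patch is projected as an area-weighted delta in its normal direction. Band 1 of a two-sided wall sums to zero, band 2 keeps it, but a right-angle corner of two-sided walls then produces the same 9 coefficients as a crude round pillar (eight normals evenly spaced around the vertical axis):

```python
import math

# Real SH normalisation constants for bands 0..2.
C0 = 0.5 * math.sqrt(1.0 / math.pi)
C1 = math.sqrt(3.0 / (4.0 * math.pi))
C2A = 0.5 * math.sqrt(15.0 / math.pi)    # xy, yz, xz terms
C2B = 0.25 * math.sqrt(5.0 / math.pi)    # 3z^2 - 1 term
C2C = 0.25 * math.sqrt(15.0 / math.pi)   # x^2 - y^2 term

def project_l2(normal, area):
    """Project an area-weighted unit normal into 9 SH coefficients.

    Indices 0: band 0, 1-3: band 1 (y, z, x), 4-8: band 2.
    """
    x, y, z = normal
    basis = [C0,
             C1 * y, C1 * z, C1 * x,
             C2A * x * y, C2A * y * z, C2B * (3.0 * z * z - 1.0),
             C2A * x * z, C2C * (x * x - y * y)]
    return [area * b for b in basis]

def accumulate(samples):
    """Sum projections of (normal, area) pairs, as a voxel mip would."""
    total = [0.0] * 9
    for normal, area in samples:
        total = [t + c for t, c in zip(total, project_l2(normal, area))]
    return total

# A two-sided wall: front and back faces, 1 m^2 each.
wall = accumulate([((1.0, 0.0, 0.0), 1.0), ((-1.0, 0.0, 0.0), 1.0)])
# Two two-sided walls meeting at a right angle (normals +-x and +-y).
corner = accumulate([((1.0, 0.0, 0.0), 1.0), ((-1.0, 0.0, 0.0), 1.0),
                     ((0.0, 1.0, 0.0), 1.0), ((0.0, -1.0, 0.0), 1.0)])
# Crude round pillar: 8 normals around the z axis, same total area.
pillar = accumulate([((math.cos(k * math.pi / 4), math.sin(k * math.pi / 4), 0.0), 0.5)
                     for k in range(8)])
```

Band 2 is even, so n and -n add instead of cancelling, which is why the wall survives there; the same symmetry is what makes the corner and the pillar collapse onto each other.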

So I gave up on it. The volumetric approach was much slower than the surface approach using surfels anyway, and its accuracy was much worse too, so the only advantage of volumes would have been simplicity.

But I wouldn't recommend surfels either. It took me years to make a preprocessing tool that builds the surfel hierarchy.

I had to work on the very hard geometry problem of quadrangulation to get a nice quadtree-like topology on arbitrary models.

Tracing is also harder, since it is the same as classical ray tracing using a BVH. It's not as simple as sphere tracing in a grid.
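For contrast, a minimal sphere-tracing loop in Python, assuming the grid stores a signed distance field. The hypothetical `sdf_slab` is an analytic stand-in for what would be a trilinear fetch from the distance grid; the point is that the whole traversal is one loop with one lookup per step, with no BVH nodes, stack or child ordering:

```python
import math

def sdf_slab(p):
    """Hypothetical stand-in for a grid lookup: a 2 x 2 x 0.1 m slab centred at the origin."""
    half = (1.0, 1.0, 0.05)
    q = [abs(c) - h for c, h in zip(p, half)]
    outside = math.sqrt(sum(max(v, 0.0) ** 2 for v in q))
    inside = min(max(q), 0.0)
    return outside + inside

def sphere_trace(origin, direction, sdf, max_dist=100.0, eps=1e-4, max_steps=128):
    """March along the ray by the distance bound until we hit a surface or give up."""
    t = 0.0
    for _ in range(max_steps):
        p = [o + t * d for o, d in zip(origin, direction)]
        dist = sdf(p)
        if dist < eps:          # close enough to the surface: report a hit
            return t
        t += dist               # safe step: nothing is closer than `dist`
        if t > max_dist:
            break
    return None

hit_t = sphere_trace((0.0, 0.0, -2.0), (0.0, 0.0, 1.0), sdf_slab)
```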

Maybe we could use surfels with a world-aligned grid as a compromise. It would be simple, but a bad approximation of geometry, causing poor accuracy and the usual quantization issues.

Maybe it would be an idea to replace my spherical harmonics approach with some quantized format that lacks directional accuracy but reliably prevents leakage. I could imagine something like Valve's Ambient Cube, distributing a patch of surface to the 3 normal-aligned faces of a voxel cube. A higher mip of such a format could cause over-occlusion but would prevent leakage, which would be a success.
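A minimal Python sketch of that idea, assuming each voxel stores six face values holding projected surface area (this splatting rule and the names `splat_patch`, `lookup` and `merge` are hypothetical; only the d_i^2 blend in `lookup` follows the way Valve's ambient cube is evaluated). Unlike the SH sum, the front and back of a wall land on opposite faces and survive merging:

```python
# Face layout: 2*axis for the positive face, 2*axis + 1 for the negative one,
# i.e. +x, -x, +y, -y, +z, -z.

def face_index(axis, component):
    return 2 * axis + (1 if component < 0.0 else 0)

def splat_patch(cube, normal, area):
    """Give each of the 3 faces on the normal's side a share n_i^2 of the patch area.

    For a unit normal the shares sum to 1, so the full area is stored.
    """
    for axis, n in enumerate(normal):
        cube[face_index(axis, n)] += area * n * n
    return cube

def lookup(cube, direction):
    """Ambient-cube style evaluation: blend the 3 faces on the direction's side by d_i^2."""
    return sum(d * d * cube[face_index(axis, d)] for axis, d in enumerate(direction))

def merge(cubes):
    """A coarser mip cell: faces only add, so opposite faces never cancel."""
    return [sum(faces) for faces in zip(*cubes)]

wall = [0.0] * 6
splat_patch(wall, (1.0, 0.0, 0.0), 1.0)    # front side of a 1 m^2 wall
splat_patch(wall, (-1.0, 0.0, 0.0), 1.0)   # back side
```

Because faces only ever add, a coarse cell can report more blocking surface than a ray really meets (the over-occlusion), but it never loses a surface to cancellation.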

Otherwise, the only option I see would be to use many rays instead of a single cone, and to have high-resolution voxelization even for distant objects. But I guess that's still not practical today, and DXR would be better in every way.