SVOGI Implementation Details

Josh Klint said:

In scattering problems you can track the energy loss of a packet of photons, but you can't really do that in gather problems, so it is possible for light to bounce back and forth, increasing in energy, unless you use heavy damping which darkens the scene excessively. For this reason you need to augment the formula for the indirect light to better approximate infinite bounces.
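Here is a minimal sketch of the feedback problem Josh describes. It is not Josh's formula. It is a two-patch gather loop where each pass reads only the previous pass's radiosity. The patch setup, albedo, and form-factor values are hypothetical. With plausible form factors the loop settles to a fixed point. If approximation errors inflate the effective form factors so that albedo times form factor exceeds 1, every pass adds energy and the result grows without bound.

```python
def gather_passes(emission, albedo, form_factors, passes):
    """Jacobi-style gather: every pass reads only the previous pass's radiosity."""
    n = len(emission)
    radiosity = list(emission)
    totals = []
    for _ in range(passes):
        # Each patch gathers light from all others, scaled by form factor and its own albedo.
        radiosity = [
            emission[i] + albedo[i] * sum(form_factors[i][j] * radiosity[j] for j in range(n))
            for i in range(n)
        ]
        totals.append(sum(radiosity))
    return radiosity, totals


# Two patches facing each other; patch 0 emits, patch 1 only reflects (hypothetical values).
emission = [1.0, 0.0]
albedo = [0.8, 0.8]
plausible = [[0.0, 0.9], [0.9, 0.0]]  # form-factor rows sum to <= 1
inflated = [[0.0, 1.4], [1.4, 0.0]]   # e.g. leaking or double counting pushes rows above 1

_, stable_totals = gather_passes(emission, albedo, plausible, 200)
_, runaway_totals = gather_passes(emission, albedo, inflated, 50)
print(round(stable_totals[-1], 3))  # 3.571, the fixed point 25/7
print(runaway_totals[-1] > 100.0)   # True: energy keeps growing every pass
```

The stable case converges because each round trip between the patches scales the light by 0.72 * 0.72 < 1. The inflated case scales it by 1.12 per pass. Heavy damping (lowering the effective albedo) stops the growth, but it also darkens the stable case.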

Hmm, my surfel stuff is gathering too, but I have no such problems: no energy gain or loss, and it matches path tracing pretty closely. Probably the approximations I make cause much less error than VCT. My primary sources of error are binary visibility (a surfel is either 100% or 0% visible) and a small error from the form factor calculation ignoring perspective projection. With VCT that's much worse: mapping surfaces to and from the voxel representation isn't robust, and mips cause all those blending and leaking errors. So it's no wonder energy conservation fails.
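For reference, here is a sketch of a surfel-to-point form factor with binary visibility. It uses a common disk approximation, A cos(theta_r) cos(theta_s) / (pi r^2 + A). This is an assumption for illustration, not necessarily the formula used in the surfel system above. The function name and parameters are hypothetical, and normals are assumed to be unit length.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def surfel_form_factor(recv_pos, recv_normal, src_pos, src_normal, src_area, visible):
    """Approximate form factor from a source surfel (disk) to a receiving point."""
    if not visible:  # binary visibility: the surfel is either fully seen or not at all
        return 0.0
    d = sub(src_pos, recv_pos)
    dist2 = dot(d, d)
    if dist2 == 0.0:
        return 0.0
    dist = math.sqrt(dist2)
    w = tuple(x / dist for x in d)  # unit direction from receiver to source
    cos_r = max(0.0, dot(recv_normal, w))   # receiver must face the source
    cos_s = max(0.0, -dot(src_normal, w))   # source must face the receiver
    # Adding the area to the denominator keeps the result bounded as dist -> 0.
    return src_area * cos_r * cos_s / (math.pi * dist2 + src_area)


f = surfel_form_factor((0, 0, 0), (0, 0, 1), (0, 0, 1), (0, 0, -1), 0.01, True)
print(f)  # roughly area / (pi * r^2) for a small, distant surfel
```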

Interesting: from the videos I assumed you used one bounce, not infinite bounces. Likely the inaccuracy is just too large to represent multiple bounces. You get some washed-out extra light at very low frequencies, but that's it.

Can you do a reference render with a really large number of cones? This would show some of the VCT error directly, and it would show which kind of error can't be fixed.
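One way to set up such a reference render is sketched below, under stated assumptions. It generates N cone directions spread evenly over the hemisphere with a Fibonacci spiral, and it picks a cone half-angle so that the N cones together cover the hemisphere's 2*pi steradians. The directions are uniform in solid angle, so the cosine weight would be applied when accumulating each cone's result. The function name is hypothetical, and real circular cones can only approximately tile the hemisphere.

```python
import math


def hemisphere_cones(n):
    """Return n unit directions around +Z and a half-angle giving each cone 2*pi/n steradians."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    # Solid angle of a cone is 2*pi*(1 - cos(alpha)); set it to 2*pi / n.
    half_angle = math.acos(1.0 - 1.0 / n)
    dirs = []
    for i in range(n):
        z = 1.0 - (i + 0.5) / n  # uniform in cos(theta) -> uniform in solid angle
        r = math.sqrt(max(0.0, 1.0 - z * z))
        phi = i * golden_angle
        dirs.append((r * math.cos(phi), r * math.sin(phi), z))
    return dirs, half_angle


dirs, half = hemisphere_cones(256)
print(len(dirs), math.degrees(half))
```

Raising N until the image stops changing gives a reference to compare against the usual handful of cones.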

Btw, I remember I had oscillation issues at some point. Those went away after switching to stochastic updates (updating only a random, smaller set of surfels each frame rather than all of them at once). Though that's really another problem, of course.

Still, do you do this as well? If not, maybe you can, and this way you'd free up enough performance to trace more cones for higher accuracy. Updating only 10% of the scene per frame looked fine to me. It increases lag, but I do more updates where lighting changes and fewer where it doesn't.
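The stochastic update scheme described above can be sketched like this. Each frame picks a 10% budget of surfels at random, with selection probability biased toward surfels whose lighting recently changed. The per-surfel `change` estimate and the `base_weight` floor are hypothetical parameters. The floor keeps every surfel occasionally refreshed. The weighted pick uses Efraimidis-Spirakis sampling without replacement: each item gets the key u^(1/w), and the largest keys are kept.

```python
import heapq
import random


def pick_surfels_to_update(change, fraction=0.1, base_weight=0.1, rng=random):
    """Choose which surfels to relight this frame, favouring those whose lighting changed."""
    budget = max(1, int(len(change) * fraction))

    def key(i):
        w = base_weight + change[i]  # every surfel keeps a nonzero chance
        return rng.random() ** (1.0 / w)

    # Keeping the `budget` largest keys is weighted sampling without replacement.
    return heapq.nlargest(budget, range(len(change)), key=key)


rng = random.Random(1)
change = [0.0] * 90 + [100.0] * 10  # the last 10 surfels saw a big lighting change
picked = pick_surfels_to_update(change, rng=rng)
print(sorted(picked))  # mostly indices >= 90
```

Surfels that were not picked simply keep last frame's value. This is where the extra lag comes from, and it also breaks the frame-to-frame lockstep that can cause oscillation.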

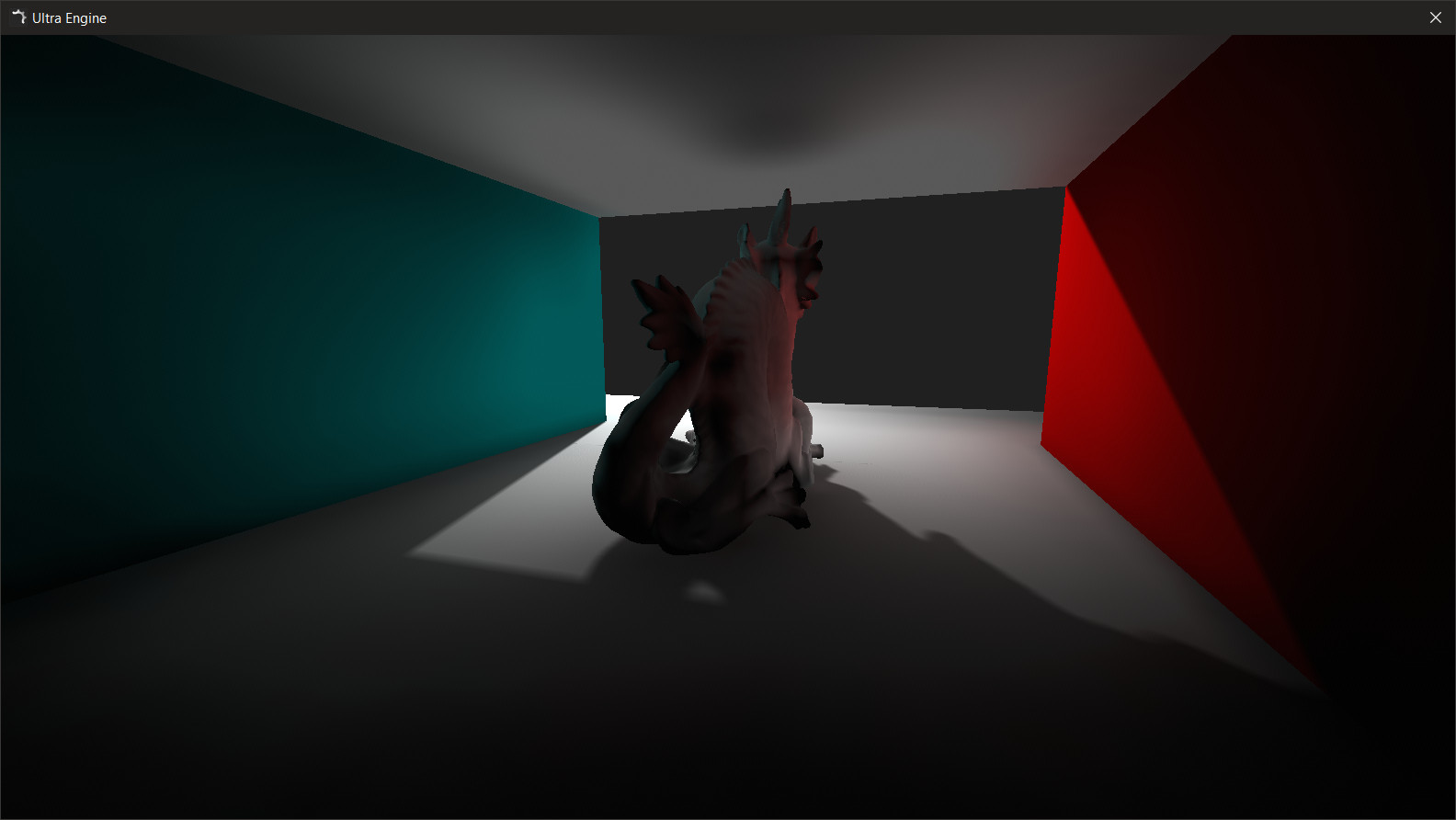

It's doing a much better job now of filling the room with light.

10x Faster Performance for VR: www.ultraengine.com

Just saw this, a hobby project from industry experts on a realtime GI renderer.

They mention using voxels, so it may be a useful reference.

I ended up combining this with screen-space reflections. The transition between the two techniques is much more seamless than I expected.

This is a good example of it in use:

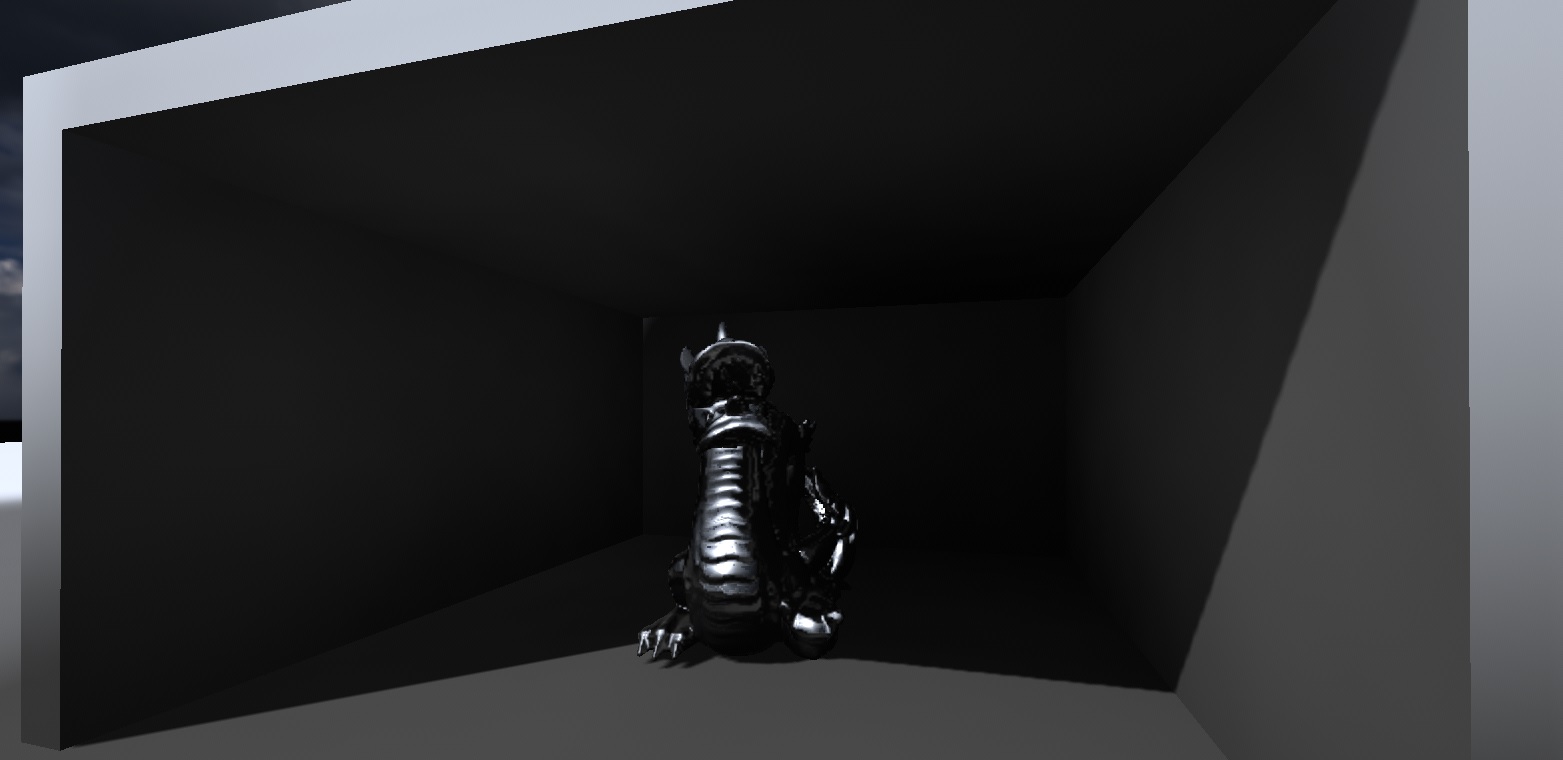
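A common way to combine screen-space reflections with a voxel fallback is to blend per pixel by an SSR confidence value. The sketch below shows that idea. It is not necessarily how the renderer here does it. Confidence is zero when the SSR ray misses, and it fades toward the screen edges, where screen-space data runs out. The function name, `hit_uv`, and `edge_fade` are hypothetical.

```python
def blend_reflection(ssr_color, ssr_hit, hit_uv, vct_color, edge_fade=0.1):
    """Blend an SSR result over a voxel-cone-traced reflection using a simple confidence."""
    if not ssr_hit:
        return tuple(vct_color)  # ray left the screen or missed: use the voxel result
    u, v = hit_uv
    edge = min(u, v, 1.0 - u, 1.0 - v)  # distance of the hit to the nearest screen edge (UV)
    confidence = max(0.0, min(1.0, edge / edge_fade))
    return tuple(s * confidence + c * (1.0 - confidence) for s, c in zip(ssr_color, vct_color))


print(blend_reflection((1.0, 1.0, 1.0), True, (0.5, 0.5), (0.0, 0.0, 0.0)))  # SSR fully trusted
print(blend_reflection((1.0, 1.0, 1.0), False, (0.5, 0.5), (0.0, 0.0, 0.0)))  # voxel fallback
```

Because both sources describe the same scene, a soft fade like this tends to hide the seam, as long as the voxel result is not wildly different from the screen-space one.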

The latency here is a pretty good tradeoff for maintaining speed.

I'm very pleased with my final results, after A LOT of work. Without the volumetric data of the surrounding scene, PBR looks terrible because the sky reflection penetrates into enclosed spaces.
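The sky-leak problem comes down to how the sky is composited into a traced cone. The sketch below accumulates voxel samples front to back and adds the sky only through whatever transmittance is left at the end. An enclosed room therefore gets little or no sky reflection. The samples here are given as hypothetical (radiance, opacity) pairs. In a real tracer they would come from voxel mip lookups at increasing distance along the cone.

```python
def trace_cone_with_sky(samples, sky_color, min_transmittance=0.01):
    """Front-to-back compositing of (rgb, alpha) voxel samples, with the sky behind them."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for sample_color, alpha in samples:
        for k in range(3):
            color[k] += transmittance * alpha * sample_color[k]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # nearly opaque; the small leftover still lets a sliver of sky through
    # Sky contributes only where the cone was not blocked by scene geometry.
    return tuple(c + transmittance * s for c, s in zip(color, sky_color))


sky = (0.0, 0.0, 1.0)
print(trace_cone_with_sky([], sky))                           # open sky: (0.0, 0.0, 1.0)
print(trace_cone_with_sky([((0.2, 0.2, 0.2), 1.0)], sky))     # wall blocks the sky entirely
print(trace_cone_with_sky([((1.0, 0.0, 0.0), 0.5)], sky))     # half occluded: (0.5, 0.0, 0.5)
```

Without the volumetric data, the loop has no samples to block the cone, so the sky term always arrives at full strength.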

A little tuning to fix some errors…
