First off, here's a video of what I'm about to explain:

I've managed to get a PBR pipeline set up that uses image-based lighting. However, I have a question regarding models that are locked to the camera, such as the arms and weapons in a first-person shooter. In my particular case, I have a separate camera setup that does not translate via a view matrix, so all elements of the first-person “Stage” have transforms relative to screen space.

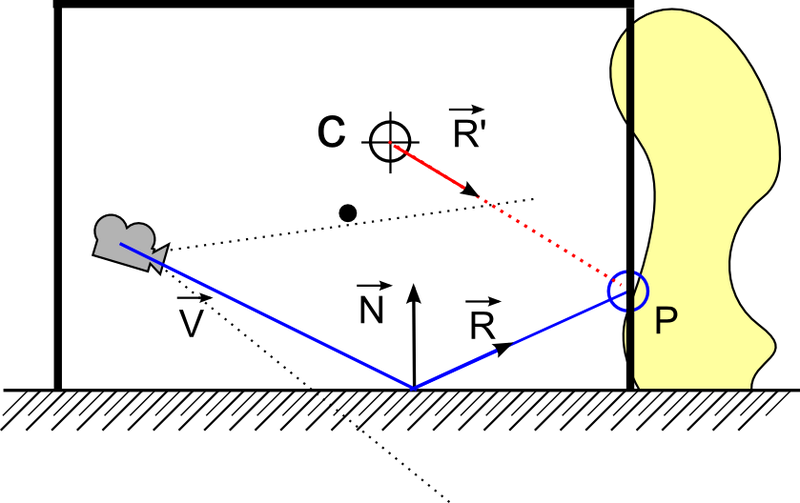

In order to apply the correct IBL information to the assets within this first-person stage, I pass an offset matrix, which is the inverse of the view matrix of the world-stage camera, to be used in the fragment shader calculations. This appears to work as expected. However, since the IBL is calculated relative to the camera position, the reflectance stays the same while moving around (when in reality it would be changing) and only changes when rotating, as you can see in the video above.
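To make the offset-matrix step concrete, here is a minimal sketch of one way it could feed the `WorldPos` and `Normal` inputs of the fragment shader. It is an illustration only, not the vertex shader that was removed from this post. The uniform names (`stageProjection`, `stageModel`, `worldViewInverse`) and the attribute locations are assumptions, and the normal transform assumes the model matrix has no non-uniform scale.

```glsl
#version 450 core

// Attribute locations are assumed for this sketch.
layout (location = 0) in vec3 aPos;
layout (location = 1) in vec2 aTexCoords;
layout (location = 2) in vec3 aNormal;

out vec2 TexCoords;
out vec3 WorldPos;
out vec3 Normal;

// Hypothetical uniform names; the real ones are not shown in this post.
uniform mat4 stageProjection;  // projection of the first-person stage camera
uniform mat4 stageModel;       // model transform, relative to the stage camera
uniform mat4 worldViewInverse; // the "offset matrix": inverse view matrix of the world camera

void main()
{
    // Position relative to the stage camera, which applies no view transform.
    vec4 stagePos = stageModel * vec4(aPos, 1.0);

    // Treat that position as if it were in the world camera's view space and
    // bring it into world space, so lighting is evaluated in the same space as
    // the cubemaps.
    WorldPos = (worldViewInverse * stagePos).xyz;

    // Rotate the normal into world space. Assumes stageModel has no
    // non-uniform scale; otherwise a normal matrix would be needed.
    Normal = mat3(worldViewInverse) * mat3(stageModel) * aNormal;

    TexCoords = aTexCoords;

    // Rasterization still uses only the stage camera.
    gl_Position = stageProjection * stagePos;
}
```

Under these assumptions `camPos` is the translation column of `worldViewInverse`, so `camPos - WorldPos` works out to the stage-space position rotated by the camera orientation and negated. The camera translation cancels, which is consistent with the reflection changing only when the camera rotates.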

Therefore, I suppose my question becomes: since an IBL cubemap remains fixed, and IBL/reflectance is relative to camera position, how does one accurately calculate the light value for a model that is fixed to the camera?

I will paste my shader code below. I can post additional code on request.

(I removed the vertex shader, as it wasn't really necessary and was just making the post longer.)

Fragment shader (essentially a stripped-down version of the learnopengl.com PBR shader):

    #version 450 core

    out vec4 FragColor;

    in vec2 TexCoords;
    in vec3 WorldPos;
    in vec3 Normal;

    // material parameters
    uniform sampler2D albedoMap;
    uniform sampler2D normalMap;
    uniform sampler2D metallicMap;
    uniform sampler2D roughnessMap;
    uniform sampler2D aoMap;

    // IBL
    uniform samplerCube irradianceMap;
    uniform samplerCube prefilterMap;
    uniform sampler2D brdfLUT;

    uniform vec3 camPos;

    const float PI = 3.14159265359;

    // ----------------------------------------------------------------------------
    // Easy trick to get tangent-normals to world-space to keep PBR code simplified.
    // Don't worry if you don't get what's going on; you generally want to do normal
    // mapping the usual way for performance anyway; I do plan to make a note of this
    // technique somewhere later in the normal mapping tutorial.
    vec3 getNormalFromMap()
    {
        vec3 tangentNormal = texture(normalMap, TexCoords).xyz * 2.0 - 1.0;

        vec3 Q1 = dFdx(WorldPos);
        vec3 Q2 = dFdy(WorldPos);
        vec2 st1 = dFdx(TexCoords);
        vec2 st2 = dFdy(TexCoords);

        vec3 N = normalize(Normal);
        vec3 T = normalize(Q1*st2.t - Q2*st1.t);
        vec3 B = -normalize(cross(N, T));
        mat3 TBN = mat3(T, B, N);

        return normalize(TBN * tangentNormal);
    }

    // ----------------------------------------------------------------------------
    vec3 fresnelSchlickRoughness(float cosTheta, vec3 F0, float roughness)
    {
        return F0 + (max(vec3(1.0 - roughness), F0) - F0) * pow(max(1.0 - cosTheta, 0.0), 5.0);
    }

    // ----------------------------------------------------------------------------
    void main()
    {
        // material properties
        vec3 albedo = pow(texture(albedoMap, TexCoords).rgb, vec3(2.2));
        float alpha = texture(albedoMap, TexCoords).a;
        float metallic = texture(metallicMap, TexCoords).r;
        float roughness = texture(roughnessMap, TexCoords).r;
        float ao = texture(aoMap, TexCoords).r;

        // input lighting data
        vec3 N = getNormalFromMap();
        vec3 V = normalize(camPos - WorldPos);
        vec3 R = reflect(-V, N);

        // calculate reflectance at normal incidence; if dielectric (like plastic) use F0
        // of 0.04 and if it's a metal, use the albedo color as F0 (metallic workflow)
        vec3 F0 = vec3(0.04);
        F0 = mix(F0, albedo, metallic);

        // ambient lighting (we now use IBL as the ambient term)
        vec3 F = fresnelSchlickRoughness(max(dot(N, V), 0.0), F0, roughness);

        vec3 kS = F;
        vec3 kD = 1.0 - kS;
        kD *= 1.0 - metallic;

        vec3 irradiance = texture(irradianceMap, N).rgb;
        vec3 diffuse = irradiance * albedo;

        // sample both the pre-filter map and the BRDF lut and combine them together as per the
        // Split-Sum approximation to get the IBL specular part.
        const float MAX_REFLECTION_LOD = 4.0;
        vec3 prefilteredColor = textureLod(prefilterMap, R, roughness * MAX_REFLECTION_LOD).rgb;
        vec2 brdf = texture(brdfLUT, vec2(max(dot(N, V), 0.0), roughness)).rg;
        vec3 specular = prefilteredColor * (F * brdf.x + brdf.y);

        vec3 ambient = (kD * diffuse + specular) * ao;

        vec3 color = ambient;

        // HDR tonemapping
        color = color / (color + vec3(1.0));

        // gamma correct
        color = pow(color, vec3(1.0/2.2));

        //FragColor = vec4(color, 1.0);
        FragColor = vec4(color, alpha);
    }