Hi everyone, how is it going?

I'm working on a shader that uses Equirectangular reflection mapping to add reflections to a material.

The shader is for an image compositing application, not a game, so it doesn't need to run anywhere near real-time speeds.

The shader uses an Equirectangular HDRI map to get the reflections by converting the reflection vector to UV coordinates and sampling the texture.

The platform I'm using can sample textures, but it can't select LODs (mip levels). I also can't use cubemaps, pre-filtering, multiple passes, uint data types, or bitwise operators, such as the ones in the radical inverse function that is used everywhere.
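As an aside, the base-2 radical inverse itself does not strictly need uint or bitwise operators. The sketch below is only an illustration under that constraint: the name `radicalInverseBase2` is hypothetical, it assumes signed `int` division and `break` are available on the platform, and it has not been tested there.

```glsl
// Base-2 radical inverse (Van der Corput sequence) using only int and
// float arithmetic. Hypothetical helper, not part of the code in this post.
// Mirrors the binary digits of n around the binary point:
// 1 -> 0.5, 2 -> 0.25, 3 -> 0.75, 4 -> 0.125, ...
float radicalInverseBase2(int n) {
    float digitValue = 0.5; // weight of the current mirrored bit
    float result = 0.0;
    for (int k = 0; k < 31; k++) {
        if (n <= 0) break;             // all remaining bits are zero
        int bit = n - (n / 2) * 2;     // n mod 2 without '%' or '&'
        result += float(bit) * digitValue;
        n /= 2;                        // shift right by one bit
        digitValue *= 0.5;
    }
    return result;
}
```

Paired with `(float(i) + 0.5) / float(samples)` as the second coordinate, this would give a Hammersley-style 2D point set, but whether the loop cost is acceptable depends on the platform.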

So I had to use a different method to blur the reflections (code attached below).

It works something like this: for each sample (I use 32 to 256), I create a random vec3 with each component in the range -0.5 to 0.5, multiply it by the roughness value, and add it to the reflection vector. I then renormalize the result, convert the new reflection vector to UVs, sample the texture, and add the sample to an accumulator. After the loop, I divide the accumulator by the number of samples.

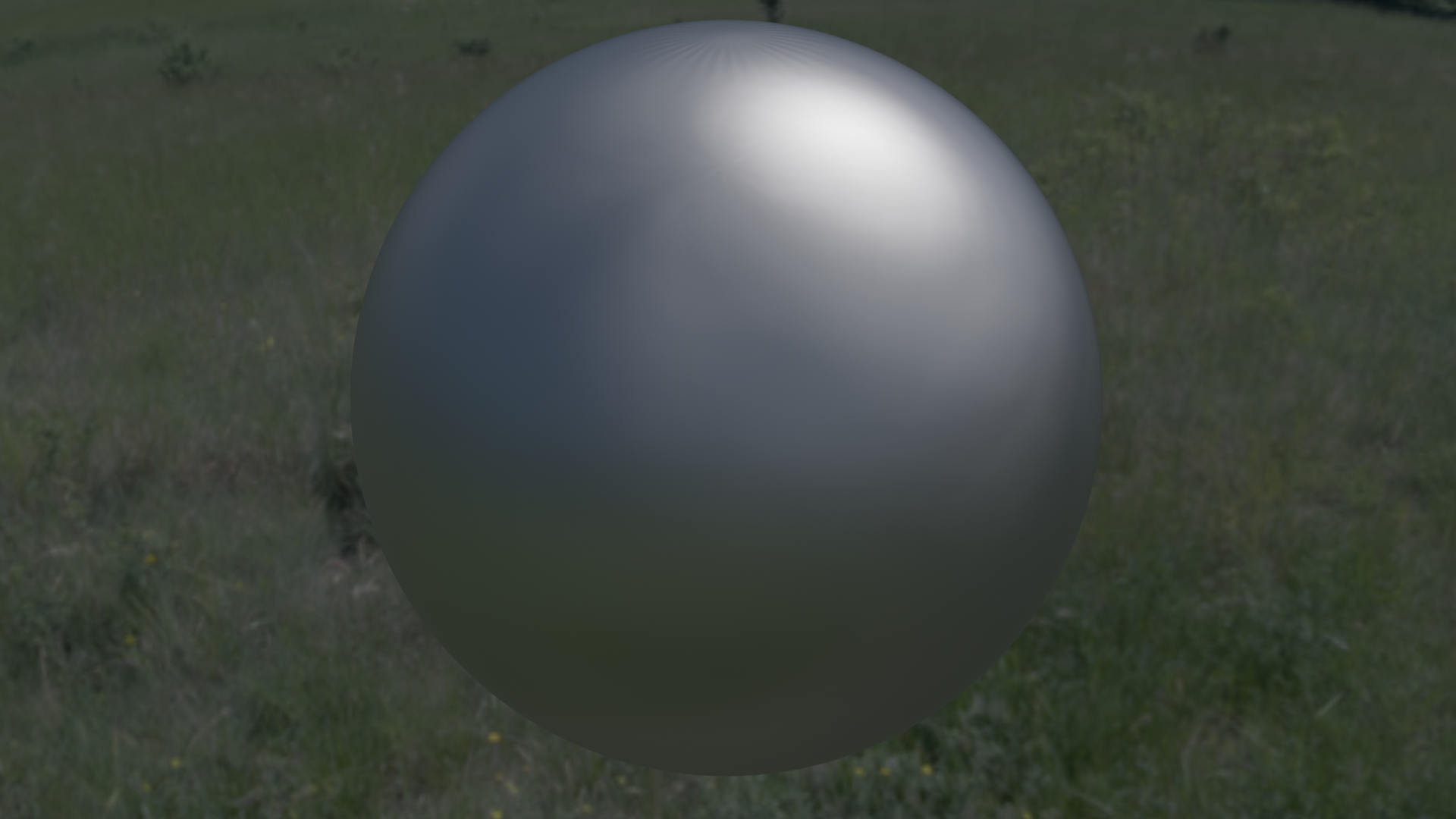

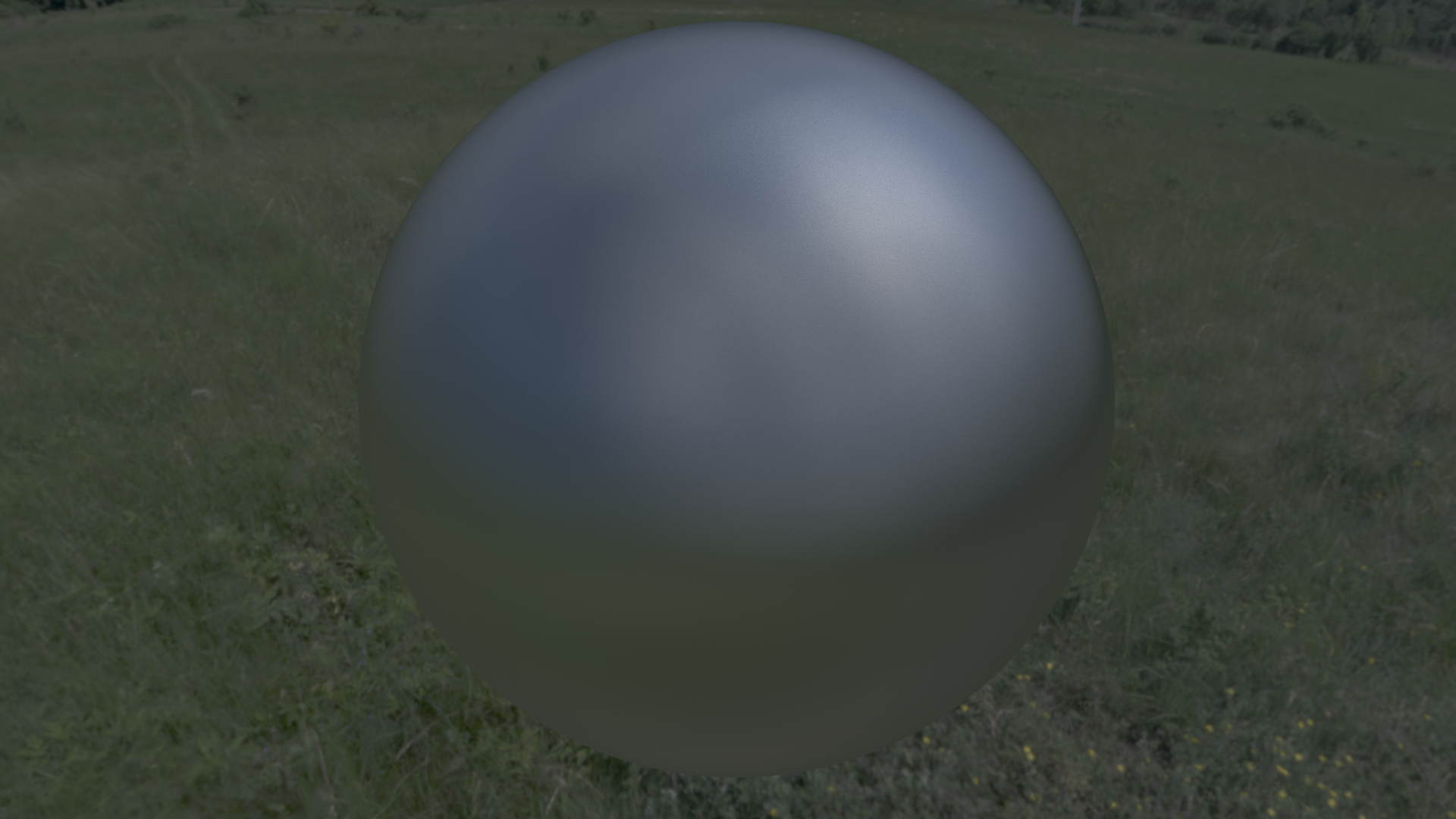

This works very well, since there are no seams and no distortion, but the result is still a little lackluster, especially when the material is set to metallic and all you see is the specular reflection.

Even though the image I'm using is an HDR and the textures are set to support HDR, the result is missing that nice hotspot falloff that you get with specular reflection convolutions.

Is there any way I can incorporate a nice falloff into this method? Attached are the code I use for the reflection blur, the result I get, and the result I would like to get.

Could someone please point me in the right direction?

    float random3(vec3 co) {
        return fract(sin(dot(co, vec3(12.9898, 78.233, 94.785))) * 43758.5453);
    }

    vec3 randomize3(vec3 r) {
        vec3 newR = fract(r * vec3(12.9898, 78.233, 56.458));
        return fract(sqrt(newR.x + .001) * vec3(sin(newR.y * 6.283185), cos(newR.y * 6.283185), tan(newR.z * 6.283185)));
    }

    vec2 VectorToUV(vec3 vector) {
        vector = (RotationMatrix * vec4(vector, 1.0)).xyz;
        vector = normalize(vector);
        vec2 uv = vec2(0.0, 0.0);
        uv.x = atan(vector.x, -vector.z) * 0.159154 + 0.5;
        uv.y = asin(vector.y) * 0.318308 + 0.5;
        return uv;
    }

    vec3 convolve(vec3 vector, float radius, int samples)
    {
        vec3 rnd = vec3(random3(vector));
        vec3 acc = vec3(0.0);
        for (int i = 0; i < samples; i++) {
            vec3 R = normalize(vector + ((randomize3(rnd + vec3(i)) - vec3(0.5)) * vec3(radius)));
            vec2 uv = VectorToUV(R);
            acc += texture(EnvMap, uv).xyz;
        }
        vec3 final = acc / vec3(samples);
        return final;
    }
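For reference, one possible direction is sketched below. In `convolve` every sample counts equally, so the blur behaves like a flat kernel; a possible contributing factor to the missing falloff is that nothing favors samples near the mirror direction. The variant weights each sample by a cosine-power (Phong-style) lobe around the reflection vector and normalizes by the sum of the weights. This is an untested sketch, not a reference specular convolution: `convolveWeighted` and its `exponent` parameter are hypothetical, and it assumes `random3`, `randomize3`, `VectorToUV`, and `EnvMap` behave as in the code above.

```glsl
// Assumed to exist as in the post; drop these declarations if the
// originals are already defined in the same shader.
uniform sampler2D EnvMap;
float random3(vec3 co);
vec3 randomize3(vec3 r);
vec2 VectorToUV(vec3 vector);

// Hypothetical variant of convolve(): same jittered directions, but each
// sample is weighted by pow(cos(angle to the reflection vector), exponent).
// exponent must be > 0; larger values give a tighter, brighter core.
vec3 convolveWeighted(vec3 vector, float radius, int samples, float exponent)
{
    vec3 axis = normalize(vector);
    vec3 rnd = vec3(random3(vector));
    vec3 acc = vec3(0.0);
    float weightSum = 0.0;
    for (int i = 0; i < samples; i++) {
        vec3 R = normalize(axis + (randomize3(rnd + vec3(i)) - vec3(0.5)) * radius);
        // Lobe weight: 1 along the reflection vector, falling toward 0 away from it.
        float w = pow(max(dot(R, axis), 0.0), exponent);
        acc += texture(EnvMap, VectorToUV(R)).xyz * w;
        weightSum += w;
    }
    // Normalize by the total weight so overall brightness is preserved.
    return acc / max(weightSum, 1e-5);
}
```

Because the jittered directions stay close to the reflection vector for small `radius`, the exponent would probably need to grow as the radius shrinks for the weighting to be visible; the right mapping from roughness to exponent is left as a tuning question.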

Thanks in advance,

Shem Namo