JoeJ said:

JoeJ said:

So it's VXGI with SDF objects and screen-space tracing to increase accuracy.

I'm no longer sure about this.

I'm convinced they use a hierarchy (e.g. SVO) to store geometry: Leaf nodes store triangles, internal nodes store points for splatting.

Streaming then only requires loading the top levels for distant objects. It all makes sense.
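To make the idea concrete, here is a rough sketch of such a hierarchy and how a renderer could pick what to draw. Everything in it (`Node`, `select_cut`, the `pixel_angle` threshold) is a made-up illustration of the guess above, not anything Epic has described.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    size: float                                     # edge length of the node's cube
    center: tuple                                   # (x, y, z)
    point: tuple = None                             # representative splat point (internal nodes)
    triangles: list = field(default_factory=list)   # only filled in leaves
    children: list = field(default_factory=list)

def select_cut(node, eye, pixel_angle, out):
    """Collect what to draw: one splat for small/far nodes, triangles for reached leaves."""
    dist = max(1e-6, sum((c - e) ** 2 for c, e in zip(node.center, eye)) ** 0.5)
    projected = node.size / dist                    # approximate angular size of the node
    if not node.children:
        out.append(("triangles", node.triangles))
    elif projected <= pixel_angle:
        # Node covers at most about a pixel: its children never need to be streamed in.
        out.append(("splat", node.point))
    else:
        for child in node.children:
            select_cut(child, eye, pixel_angle, out)
    return out
```

With this layout a distant object costs one point per visible internal node, and only nearby objects ever touch the leaf triangle data.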

But if they have this, then why use crappy VXGI with poor voxelization and tracing performance? Why not something like Many LoDs or imperfect shadow maps?

Maybe they do. And they store probes in a regular grid (so the DF video is still correct) because they lack surface parametrization, and with no support for dynamic stuff like characters they need volume lighting anyway.

On the other hand, can SVO help with faster voxelization? Probably yes, but the GI geometry would remain quantized, which I did not notice in the video. Though the opening ceiling seems to be the only really dynamic object at all, and a temporal filter could hide its blocky movement.

In all my tests, SVO has been slower than using a 3D texture directly or cascaded VXGI, and the quality gain isn't worth it unless your scene is built heavily with this in mind. Cone tracing an SVO and building the SVO are just way more expensive than a simple, stupid 3D texture.
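For reference, this is roughly what I mean by the "simple stupid" variant: a dense grid with a mip chain and a cone marched through it. It is a CPU-side Python sketch for illustration only, with nearest-neighbour fetches instead of hardware filtering, and the names `build_mips`, `sample` and `cone_trace_occlusion` are my own, not from any engine.

```python
import math

def build_mips(grid):
    """grid: cubic nested list [z][y][x] of opacity in [0, 1], side a power of two."""
    mips = [grid]
    while len(mips[-1]) > 1:
        src = mips[-1]
        n = len(src) // 2
        # Each coarser voxel is the average of its 2x2x2 children.
        mips.append([[[sum(src[2 * z + dz][2 * y + dy][2 * x + dx]
                           for dz in (0, 1) for dy in (0, 1) for dx in (0, 1)) / 8.0
                       for x in range(n)] for y in range(n)] for z in range(n)])
    return mips

def sample(mips, pos, level):
    """Nearest-neighbour fetch at mip `level`; pos is in level-0 voxel units."""
    level = min(level, len(mips) - 1)
    n = len(mips[level])
    x, y, z = (math.floor(p) >> level for p in pos)
    if not (0 <= x < n and 0 <= y < n and 0 <= z < n):
        return 0.0                               # outside the volume counts as empty
    return mips[level][z][y][x]

def cone_trace_occlusion(mips, origin, direction, aperture, max_dist):
    """March one cone, accumulating opacity front to back; returns occlusion in [0, 1]."""
    occlusion, t = 0.0, 1.0                      # start one voxel out to avoid self-occlusion
    while t < max_dist and occlusion < 0.99:
        diameter = max(1.0, 2.0 * aperture * t)  # cone footprint in voxels
        level = int(math.log2(diameter))         # wider cone -> coarser mip
        pos = [o + d * t for o, d in zip(origin, direction)]
        occlusion += (1.0 - occlusion) * sample(mips, pos, level)
        t += diameter * 0.5
    return occlusion
```

The whole lookup is an index computation plus one fetch per step, which is the part an SVO replaces with a pointer-chasing tree descent.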

Now from here:

https://www.eurogamer.net/articles/digitalfoundry-2020-unreal-engine-5-playstation-5-tech-demo-analysis

"Lumen uses ray tracing to solve indirect lighting, but not triangle ray tracing," explains Daniel Wright, technical director of graphics at Epic. "Lumen traces rays against a scene representation consisting of signed distance fields, voxels and height fields. As a result, it requires no special ray tracing hardware."

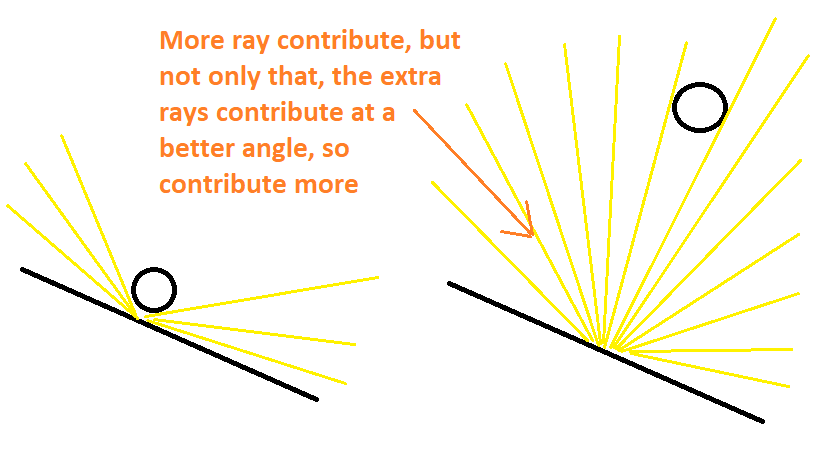

To achieve fully dynamic real-time GI, Lumen has a specific hierarchy. "Lumen uses a combination of different techniques to efficiently trace rays," continues Wright. "Screen-space traces handle tiny details, mesh signed distance field traces handle medium-scale light transfer and voxel traces handle large scale light transfer."

Lumen uses a combination of techniques then: to cover bounce lighting from larger objects and surfaces, it does not trace triangles, but uses voxels instead, which are boxy representations of the scene's geometry. For medium-sized objects Lumen then traces against signed distance fields which are best described as another slightly simplified version of the scene geometry. And finally, the smallest details in the scene are traced in screen-space, much like the screen-space global illumination we saw demoed in Gears of War 5 on Xbox Series X. By utilising varying levels of detail for object size and utilising screen-space information for the most complex smaller detail, Lumen saves on GPU time when compared to hardware triangle ray tracing.
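The article doesn't say how the distance field traces are done. The usual way to trace a ray against a signed distance field is sphere tracing, so here is a minimal sketch of that, assuming a toy analytic scene of two spheres (`scene_sdf` is hypothetical; a real engine would sample stored per-mesh distance fields instead).

```python
import math

def sphere_sdf(p, center, radius):
    """Signed distance from point p to a sphere's surface (negative inside)."""
    return math.dist(p, center) - radius

def scene_sdf(p):
    # Hypothetical scene: the union of two spheres is the min of their distances.
    return min(sphere_sdf(p, (0.0, 0.0, 5.0), 1.0),
               sphere_sdf(p, (3.0, 0.0, 8.0), 2.0))

def sphere_trace(origin, direction, sdf, max_dist=100.0, eps=1e-4, max_steps=128):
    """Return the hit distance along the ray, or None on a miss.

    `direction` must be normalized. Each step advances by the distance to the
    nearest surface, which is the largest step guaranteed not to skip geometry.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + k * t for o, k in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t
        t += dist
        if t > max_dist:
            return None
    return None
```

No triangles are touched at any point, which fits the quote about not needing ray tracing hardware.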

Thinking about it - it might be sort of similar to cone tracing. If you remember what cone tracing looks like (quick naive example from one of my HLSL files - https://pastebin.com/k1kaGa0n ), they might be doing exactly this, but for the first few steps (before your cone radius gets big enough to cover the size of your voxels) they use SSGI information and the SDF hierarchy. This might give them higher precision in small details.
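A sketch of that guess, in Python rather than HLSL to keep it short: pick the scene representation per cone step from the current cone radius. The thresholds and names (`pick_source`, `march_plan`, `sdf_cell_size`, `screen_range`) are assumptions of mine for illustration, not how Lumen is known to work.

```python
def pick_source(cone_radius, on_screen, sdf_cell_size, voxel_size):
    """Choose which representation to sample for a cone of the given radius."""
    if on_screen and cone_radius < sdf_cell_size:
        return "screen"      # finest detail, only valid while the sample is on screen
    if cone_radius < voxel_size:
        return "sdf"         # cone still narrower than a voxel: use distance fields
    return "voxels"          # cone footprint covers whole voxels

def march_plan(aperture, max_dist, sdf_cell_size, voxel_size, screen_range):
    """List (distance, source) pairs for one cone; purely illustrative."""
    plan, t = [], 0.05
    while t < max_dist:
        radius = aperture * t
        plan.append((t, pick_source(radius, t < screen_range, sdf_cell_size, voxel_size)))
        t += max(radius, 0.05)   # step grows with the cone, as in ordinary cone tracing
    return plan
```

Since the radius only grows along the cone, each cone goes through the sources in order (screen, then SDF, then voxels) and never switches back.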

I'll need to see the demo to play with it. But the GI solution just doesn't look that good and robust to me (I might be wrong, although … you can't beat unbiased path tracing, no matter how hard you try).

The whole video running at 30 fps doesn't convince me either (60 fps is the minimum these days, especially with VR on the market, where you need more than that).

…

Don't get me wrong, the demo is nice - but it doesn't seem to be some major breakthrough. Also, my 50c: an engine is mainly about tools, and they haven't shown them yet.