I am doing terrain tessellation and I have two ways of approaching normals:

1) Compute the normal in the domain shader using a Sobel filter

2) Precompute the normals in a compute shader with the same Sobel filter, then sample them in the domain shader. The texture format is R10G10B10A2_UNORM.

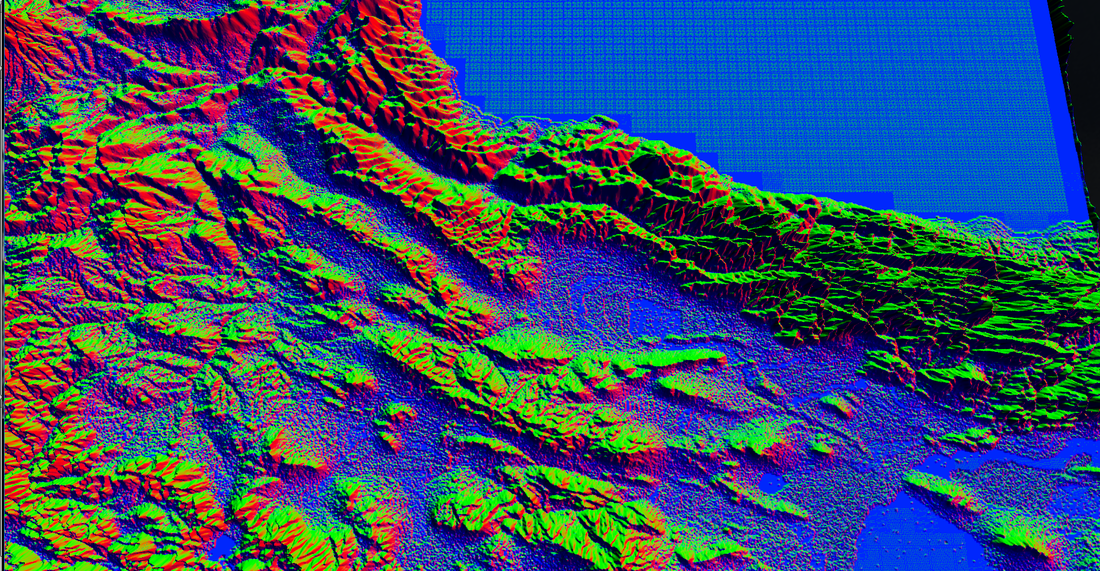

These are the normals (in view space) from 1), which look correct.

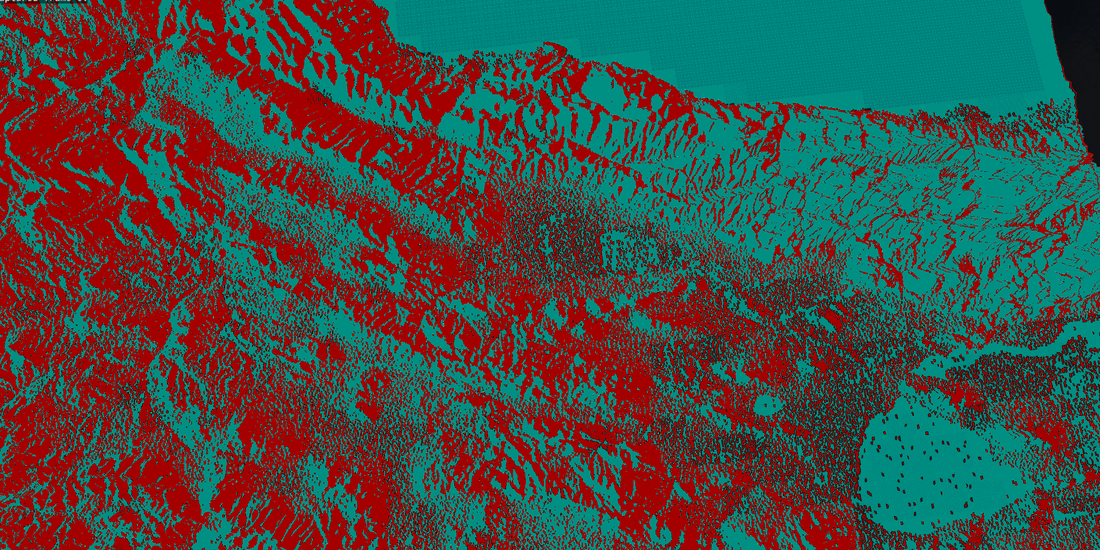

These are the normals when sampled from the precomputed normal map:

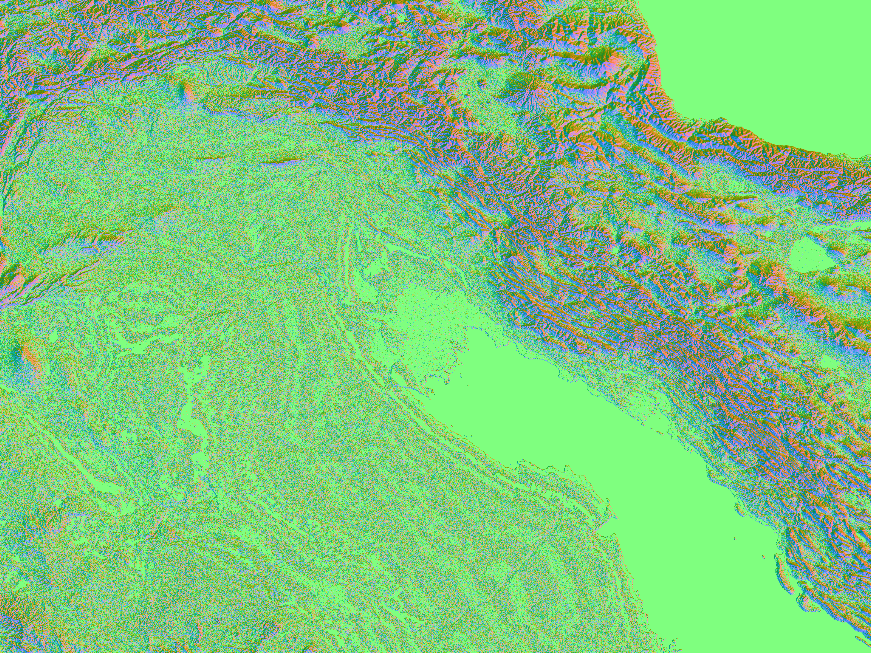

This is what the computed normal map looks like.

This is the Sobel filter I use in the compute shader:

    float3 SobelFilter( int3 texCoord )
    {
        float h00 = gHeightmap.Load( texCoord, int2( -1, -1 ) ).r;
        float h10 = gHeightmap.Load( texCoord, int2( 0, -1 ) ).r;
        float h20 = gHeightmap.Load( texCoord, int2( 1, -1 ) ).r;
        float h01 = gHeightmap.Load( texCoord, int2( -1, 0 ) ).r;
        float h21 = gHeightmap.Load( texCoord, int2( 1, 0 ) ).r;
        float h02 = gHeightmap.Load( texCoord, int2( -1, 1 ) ).r;
        float h12 = gHeightmap.Load( texCoord, int2( 0, 1 ) ).r;
        float h22 = gHeightmap.Load( texCoord, int2( 1, 1 ) ).r;

        float Gx = h00 - h20 + 2.0f * h01 - 2.0f * h21 + h02 - h22;
        float Gy = h00 + 2.0f * h10 + h20 - h02 - 2.0f * h12 - h22;

        // generate missing Z
        float Gz = 0.01f * sqrt( max( 0.0f, 1.0f - Gx * Gx - Gy * Gy ) );

        return normalize( float3( 2.0f * Gx, Gz, 2.0f * Gy ) );
    }

The simple compute shader itself:

    [numthreads(TERRAIN_NORMAL_THREADS_AXIS, TERRAIN_NORMAL_THREADS_AXIS, 1)]
    void cs_main(uint3 groupID : SV_GroupID, uint3 dispatchTID : SV_DispatchThreadID, uint3 groupTID : SV_GroupThreadID, uint groupIndex : SV_GroupIndex)
    {
        float3 normal = SobelFilter( int3( dispatchTID.xy, 0) );

        normal += 1.0f;
        normal *= 0.5f;

        gNormalTexture[ dispatchTID.xy ] = normal;
    }

The snippet in the domain shader that samples the normal map:

    const int2 offset = 0;
    const int mipmap = 0;

    ret.mNormal = gNormalMap.SampleLevel( gLinearSampler, midPointTexcoord, mipmap, offset ).r;
    ret.mNormal *= 2.0;
    ret.mNormal -= 1.0;
    ret.mNormal = normalize( ret.mNormal );
    ret.mNormal.y = -ret.mNormal.y;

    ret.mNormal = mul( ( float3x3 )gFrameView, ( float3 )ret.mNormal );
    ret.mNormal = normalize( ret.mNormal );
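
For reference, the encode step in the compute shader and the decode step in the domain shader are meant to form a round trip, which can be sketched as two small helpers. This is only a sketch: the names EncodeNormal and DecodeNormal are hypothetical and not part of my shaders, and it assumes the decode receives all three stored channels as a float3.

```hlsl
// Hypothetical helpers for illustration; not part of the original shaders.

// Map a unit normal from [-1, 1] to [0, 1] so it fits a UNORM render target.
float3 EncodeNormal( float3 n )
{
    return n * 0.5f + 0.5f;
}

// Inverse mapping. Assumes 'encoded' holds all three stored channels.
// Renormalizes because quantization and filtering shorten the vector slightly.
float3 DecodeNormal( float3 encoded )
{
    return normalize( encoded * 2.0f - 1.0f );
}
```

With 10 bits per channel, each component can only be stored in steps of 1/1023 in the [0, 1] range, so the decoded normal should match the original up to a small quantization error.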

-----------------------------------------------

Now, if I compute the normals directly in the domain shader instead, the Sobel filter uses a different sampling method (SampleLevel with a point sampler instead of Load):

    float3 SobelFilter( float2 uv )
    {
        const int2 offset = 0;
        const int mipmap = 0;

        float h00 = gHeightmap.SampleLevel( gPointSampler, uv, mipmap, int2( -1, -1 ) ).r;
        float h10 = gHeightmap.SampleLevel( gPointSampler, uv, mipmap, int2( 0, -1 ) ).r;
        float h20 = gHeightmap.SampleLevel( gPointSampler, uv, mipmap, int2( 1, -1 ) ).r;
        float h01 = gHeightmap.SampleLevel( gPointSampler, uv, mipmap, int2( -1, 0 ) ).r;
        float h21 = gHeightmap.SampleLevel( gPointSampler, uv, mipmap, int2( 1, 0 ) ).r;
        float h02 = gHeightmap.SampleLevel( gPointSampler, uv, mipmap, int2( -1, 1 ) ).r;
        float h12 = gHeightmap.SampleLevel( gPointSampler, uv, mipmap, int2( 0, 1 ) ).r;
        float h22 = gHeightmap.SampleLevel( gPointSampler, uv, mipmap, int2( 1, 1 ) ).r;

        float Gx = h00 - h20 + 2.0f * h01 - 2.0f * h21 + h02 - h22;
        float Gy = h00 + 2.0f * h10 + h20 - h02 - 2.0f * h12 - h22;

        // generate missing Z
        float Gz = 0.01f * sqrt( max( 0.0f, 1.0f - Gx * Gx - Gy * Gy ) );

        return normalize( float3( 2.0f * Gx, Gz, 2.0f * Gy ) );
    }

And then I just compute it in the domain shader:

    ret.mNormal = SobelFilter( midPointTexcoord );
    ret.mNormal = mul( ( float3x3 )gFrameView, ( float3 )ret.mNormal );
    ret.mNormal = normalize( ret.mNormal );

I am sure there is a simple answer to this and I am missing something... but what? Whether I sample a precomputed value or compute it in the shader, the result should be the same, shouldn't it?