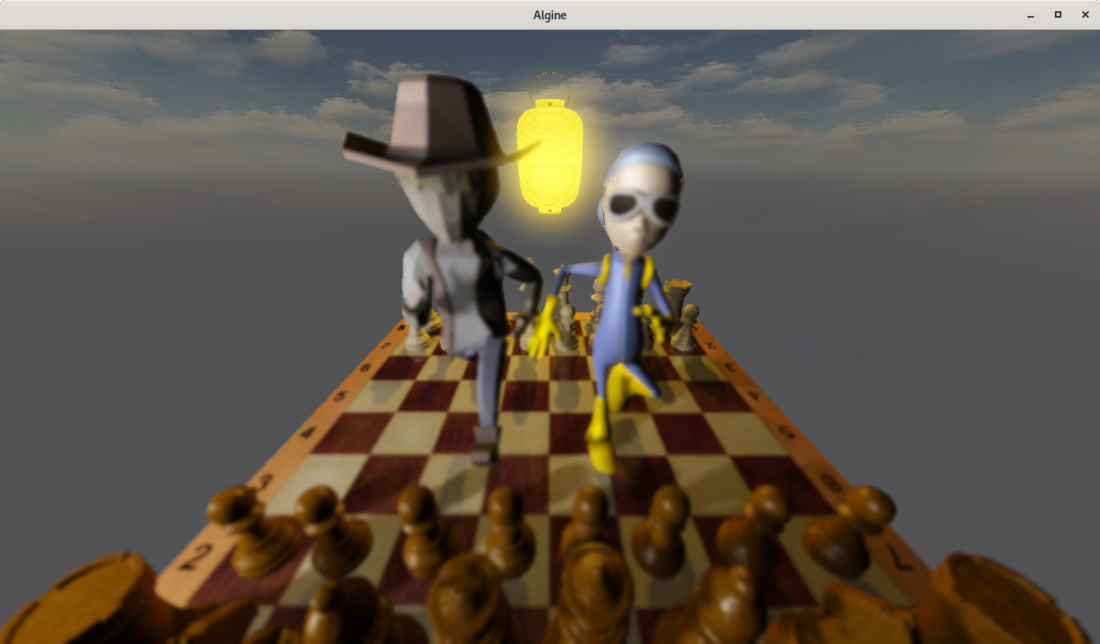

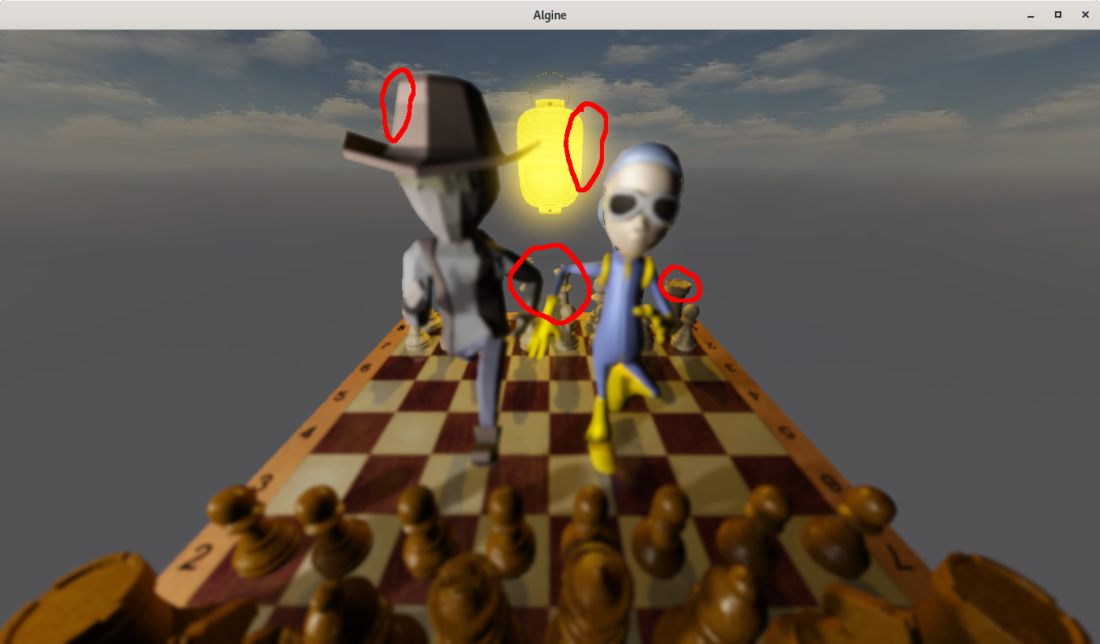

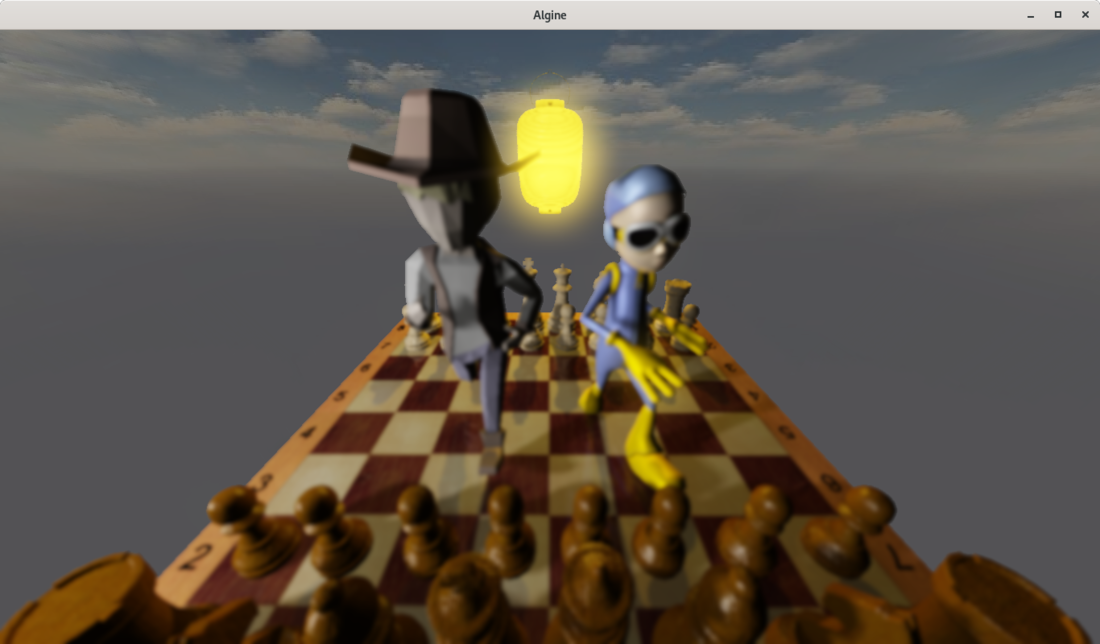

Hello, while testing depth of field (DOF), I noticed the artifacts shown in the pictures above.

Shader code:

```glsl
#version 330

#algdef

in vec2 texCoord;

layout (location = 1) out vec3 dofFragColor;

uniform sampler2D scene; // dof
uniform sampler2D dofBuffer;

float dof_kernel[DOF_KERNEL_SIZE];

const int DOF_LCR_SIZE = DOF_KERNEL_SIZE * 2 - 1; // left-center-right (lllcrrr)
const int DOF_MEAN = DOF_LCR_SIZE / 2;

void makeDofKernel(float sigma) {
    float sum = 0; // For accumulating the kernel values
    for (int x = DOF_MEAN; x < DOF_LCR_SIZE; x++) {
        dof_kernel[x - DOF_MEAN] = exp(-0.5 * pow((x - DOF_MEAN) / sigma, 2.0));
        // Accumulate the kernel values
        sum += dof_kernel[x - DOF_MEAN];
    }
    sum += sum - dof_kernel[0];
    // Normalize the kernel
    for (int x = 0; x < DOF_KERNEL_SIZE; x++) dof_kernel[x] /= sum;
}

void main() {
    makeDofKernel(texture(dofBuffer, texCoord).r);
    dofFragColor = texture(scene, texCoord).rgb * dof_kernel[0];

#ifdef HORIZONTAL
    for(int i = 1; i < DOF_KERNEL_SIZE; i++) {
        dofFragColor +=
            dof_kernel[i] * (
                texture(scene, texCoord + vec2(texOffset.x * i, 0.0)).rgb +
                texture(scene, texCoord - vec2(texOffset.x * i, 0.0)).rgb
            );
    }
#else
    for(int i = 1; i < DOF_KERNEL_SIZE; i++) {
        dofFragColor +=
            dof_kernel[i] * (
                texture(scene, texCoord + vec2(0.0, texOffset.y * i)).rgb +
                texture(scene, texCoord - vec2(0.0, texOffset.y * i)).rgb
            );
    }
#endif
}
```

How can I fix this problem? I would be grateful for any help.