Hi all, I was changing my instance input from a D3DXVECTOR3 to a D3DXMATRIX, and I'm running into trouble.

I chose DXGI_FORMAT_R16G16B16A16_FLOAT as the format for my matrix input. I don't know if this is right, but it's not complaining; it's a 64-bit format, and I assumed my matrix is 64 bits.
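
For reference, the size arithmetic can be checked with a small sketch. `Matrix4x4` is a stand-in that is assumed to have the same layout as D3DXMATRIX (16 tightly packed floats), and the per-element byte counts are written out by hand from the format names rather than taken from the D3D headers. Under those assumptions the matrix is 64 bytes (not 64 bits), so it takes four 16-byte float4 elements to cover it:

```cpp
#include <cstddef>

// Stand-in for D3DXMATRIX (assumed layout: 4x4 floats, tightly packed).
struct Matrix4x4 { float m[4][4]; };

// Bytes per element, derived by hand from the format names:
// R16G16B16A16_FLOAT = 4 channels * 16 bits, R32G32B32A32_FLOAT = 4 channels * 32 bits.
constexpr std::size_t kBytesR16G16B16A16 = 4 * 16 / 8;  // 8 bytes (64 bits)
constexpr std::size_t kBytesR32G32B32A32 = 4 * 32 / 8;  // 16 bytes (128 bits)

// Number of elements of a given size needed to cover the whole matrix.
constexpr std::size_t elementsNeeded(std::size_t bytesPerElement) {
    return sizeof(Matrix4x4) / bytesPerElement;
}

static_assert(sizeof(Matrix4x4) == 64, "the matrix is 64 bytes, i.e. 512 bits");
static_assert(elementsNeeded(kBytesR32G32B32A32) == 4, "one float4 element per matrix row");
```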

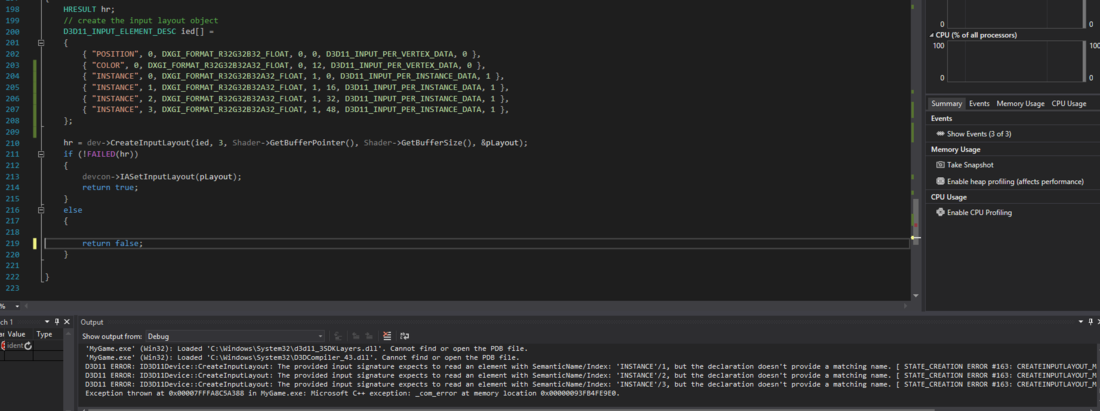

So I am running into this error:

D3D11 ERROR: ID3D11Device::CreateInputLayout: The provided input signature expects to read an element with SemanticName/Index: 'INSTANCE'/1, but the declaration doesn't provide a matching name. [ STATE_CREATION ERROR #163: CREATEINPUTLAYOUT_MISSINGELEMENT]

Actually, there are three of them: 'INSTANCE'/1, 'INSTANCE'/2, and 'INSTANCE'/3.

It's saying the declaration doesn't provide a matching name. I'm assuming it's talking about this?

VOut VShader(float4 position : POSITION, float4 color : COLOR, float4x4 instancePosition : INSTANCE)

But it's there?

Here is all the code; this is probably a really silly oversight:

My instance struct in my C++ code:

    struct Instance
    {
        D3DXMATRIX position;
    };

The input layout creation:

    bool Renderer::createInputLayout(ID3D11Device * dev, ID3D11DeviceContext * devcon, ID3D10Blob * Shader)
    {
        HRESULT hr;

        // create the input layout object
        D3D11_INPUT_ELEMENT_DESC ied[] =
        {
            { "POSITION", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, 0, D3D11_INPUT_PER_VERTEX_DATA, 0 },
            { "COLOR", 0, DXGI_FORMAT_R32G32B32A32_FLOAT, 0, 12, D3D11_INPUT_PER_VERTEX_DATA, 0 },
            { "INSTANCE", 0, DXGI_FORMAT_R16G16B16A16_FLOAT, 1, 0, D3D11_INPUT_PER_INSTANCE_DATA, 1 },
        };

        hr = dev->CreateInputLayout(ied, 3, Shader->GetBufferPointer(), Shader->GetBufferSize(), &pLayout);

        if (SUCCEEDED(hr))
        {
            devcon->IASetInputLayout(pLayout);
            return true;
        }
        else
        {
            return false;
        }
    }

Finally, the shader code (note: I also still need to figure out what to do with my new float4x4, as opposed to the float3 I was previously using):

    cbuffer ProjectionConstantBuffer : register(b0)
    {
        float4x4 projection;
    }

    cbuffer WorldConstantBuffer : register(b1)
    {
        float4x4 world;
    }

    struct VOut
    {
        float4 position : SV_POSITION;
        float4 color : COLOR;
    };

    VOut VShader(float4 position : POSITION, float4 color : COLOR, float4x4 instancePosition : INSTANCE)
    {
        VOut output;

        // Apply all instance position translations
        //position.x += instancePosition.x;
        //position.y += instancePosition.y;
        //position.z += instancePosition.z;

        // Each step transforms the result of the previous one, so no transform is overwritten
        output.position = mul(position, world); // Apply world translation matrix
        output.position = mul(output.position, instancePosition); // Apply instance translation matrix
        output.position = mul(output.position, projection); // Apply ortho projection matrix

        output.color = color; // Apply color from vertex input

        return output;
    }

Thanks for your help!

EDIT:

I learned that 'INSTANCE'/1, 'INSTANCE'/2, and 'INSTANCE'/3 could possibly be referring to something like this:

    D3D11_INPUT_ELEMENT_DESC ied[] =
    {
        { "POSITION", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, 0, D3D11_INPUT_PER_VERTEX_DATA, 0 },
        { "COLOR", 0, DXGI_FORMAT_R32G32B32A32_FLOAT, 0, 12, D3D11_INPUT_PER_VERTEX_DATA, 0 },
        { "INSTANCE", 0, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, 0, D3D11_INPUT_PER_INSTANCE_DATA, 1 },
        { "INSTANCE", 1, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, 0, D3D11_INPUT_PER_INSTANCE_DATA, 1 },
        { "INSTANCE", 2, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, 0, D3D11_INPUT_PER_INSTANCE_DATA, 1 },
        { "INSTANCE", 3, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, 0, D3D11_INPUT_PER_INSTANCE_DATA, 1 },
    };

But unfortunately, I'm still missing something, as I am getting the same error. Is there something I need to do with the aligned byte offset? There is some science here I'm definitely not picking up on.
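
On the aligned byte offset question, here is a minimal sketch of how the four matrix rows would typically be placed inside the instance buffer. `ElementSketch` and `instanceMatrixRows` are hypothetical names; the struct mirrors only the semantic name, semantic index, input slot, and aligned byte offset fields of D3D11_INPUT_ELEMENT_DESC so that the snippet compiles without the D3D headers. Each float4 row is 16 bytes, so the rows would start at offsets 0, 16, 32, and 48 rather than all at 0. Separately, if the CreateInputLayout call still passes 3 as the element count (an assumption, since the updated call isn't shown), only the first three entries of the array would be read, which would be consistent with 'INSTANCE'/1 through 'INSTANCE'/3 still being reported as missing.

```cpp
#include <array>
#include <cstddef>

// Hypothetical stand-in for the relevant fields of D3D11_INPUT_ELEMENT_DESC.
struct ElementSketch {
    const char* semanticName;
    unsigned    semanticIndex;
    unsigned    inputSlot;
    unsigned    alignedByteOffset;
};

// One float4 row of the matrix: 4 floats.
constexpr unsigned kRowBytes = 4 * sizeof(float);

// Builds the four per-instance entries for a float4x4 bound to INSTANCE0..INSTANCE3.
std::array<ElementSketch, 4> instanceMatrixRows() {
    std::array<ElementSketch, 4> rows{};
    for (unsigned i = 0; i < rows.size(); ++i) {
        // Row i uses semantic index i, lives in input slot 1,
        // and starts i * 16 bytes into the per-instance data.
        rows[i] = ElementSketch{"INSTANCE", i, 1, i * kRowBytes};
    }
    return rows;
}

// Two per-vertex elements (POSITION, COLOR) plus the four instance rows.
constexpr std::size_t kTotalElements = 2 + 4;
```

In the real layout these values would go into the AlignedByteOffset field (D3D11_APPEND_ALIGNED_ELEMENT is another option for elements that directly follow the previous one in the same slot), and the count passed to CreateInputLayout could be computed as `sizeof(ied) / sizeof(ied[0])` instead of being hard-coded.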