Hi all,

I know that most inverted-texture issues are caused by an inverted coordinate system, but this case may be different.

So here are the facts.

1. I am rendering a 2D sprite using a quad and a texture in orthographic projection.

I was just following the code from learnopengl.com here:

https://learnopengl.com/In-Practice/2D-Game/Rendering-Sprites

Have a look at initRenderData():

void SpriteRenderer::initRenderData()

{

// Configure VAO/VBO

GLuint VBO;

GLfloat vertices[] = {

// Pos // Tex

0.0f, 1.0f, 0.0f, 1.0f,

1.0f, 0.0f, 1.0f, 0.0f,

0.0f, 0.0f, 0.0f, 0.0f,

0.0f, 1.0f, 0.0f, 1.0f,

1.0f, 1.0f, 1.0f, 1.0f,

1.0f, 0.0f, 1.0f, 0.0f

};

...

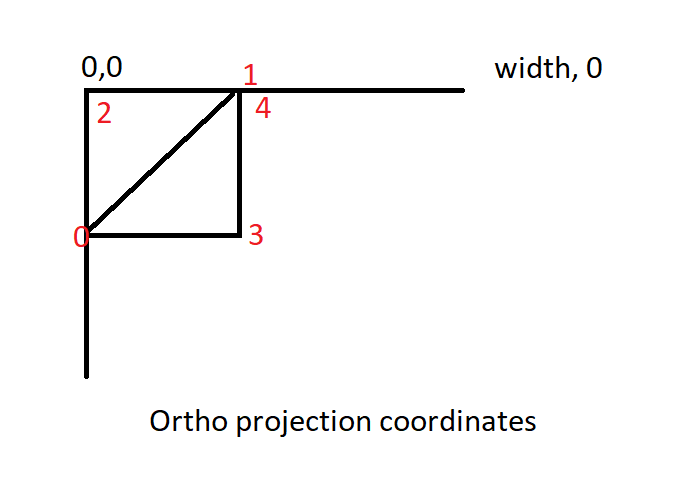

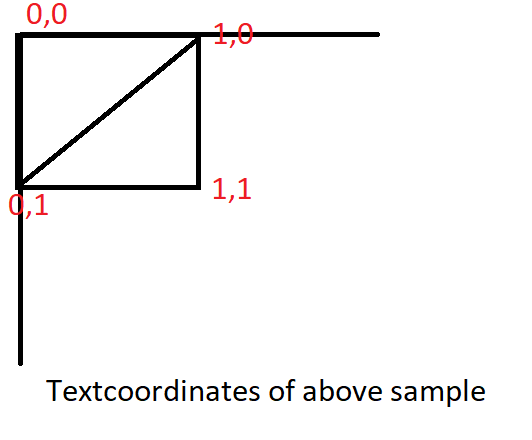

}

Please note that this was rendered with an orthographic projection, which means the coordinates of this quad are in screen space (top left is 0,0 and bottom right is width,height).

Please also observe the texture coordinates: instead of 0,0 at the bottom left and 1,1 at the top right, they are inverted.

So if we are using an orthographic projection, does this mean the texture coordinates are inverted as well?

Is this assumption correct?

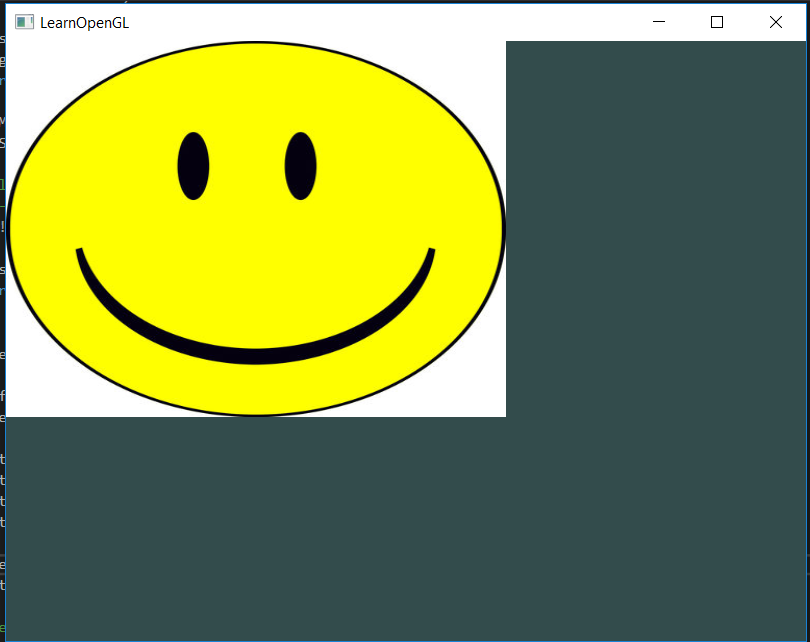
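To make the screen-space claim concrete, here is a minimal sketch of the y mapping that an orthographic projection performs. It assumes the projection is built as in the learnopengl.com tutorial, `glm::ortho(0.0f, width, height, 0.0f, -1.0f, 1.0f)`; the post does not show that call, so treat it as an assumption. The helper name `orthoNdcY` is hypothetical, and the formula is the standard orthographic mapping written out by hand rather than a call into glm.

```cpp
// Y component of an orthographic projection: maps y in [bottom, top]
// to normalized device coordinates in [-1, 1].
// Assumption: this mirrors what glm::ortho(left, right, bottom, top, ...)
// does to the y coordinate; it is written by hand, not taken from glm.
float orthoNdcY(float y, float bottom, float top) {
    return 2.0f * (y - bottom) / (top - bottom) - 1.0f;
}
```

With a hypothetical height of 600, passing bottom = 600 and top = 0, `orthoNdcY(0.0f, 600.0f, 0.0f)` evaluates to 1 (top of the viewport) and `orthoNdcY(600.0f, 600.0f, 0.0f)` evaluates to -1 (bottom). Under that assumption the projection flips y for the vertex positions only; it does not change the texture coordinates themselves.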

2. When rendering this quad directly to the screen, everything is OK and it is rendered with the proper orientation.

void Sprite2D_Draw(int x, int y, int width, int height, GLuint texture)

{

// Prepare transformations

glm::mat4 model = glm::mat4(1.0f); //initialize to identity

// First translate (transformations are: scale happens first,

// then rotation and then finally translation happens; reversed order)

model = glm::translate(model, glm::vec3(x, y, 0.0f));

//Resize to current scale

model = glm::scale(model, glm::vec3(width, height, 1.0f));

spriteShader.Use();

int projmtx = spriteShader.GetUniformLocation("projection");

spriteShader.SetUniformMatrix(projmtx, projection);

int modelmtx = spriteShader.GetUniformLocation("model");

spriteShader.SetUniformMatrix(modelmtx, model);

int textureID = spriteShader.GetUniformLocation("texture");

glUniform1i(textureID, 0);

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, texture);

glBindVertexArray(_quadVAO);

glBindBuffer(GL_ARRAY_BUFFER, _VBO);

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 4, GL_FLOAT, GL_FALSE, 4 * sizeof(GLfloat), (GLvoid*)0);

glDrawArrays(GL_TRIANGLES, 0, 6);

glBindBuffer(GL_ARRAY_BUFFER, 0);

}

Draw call:

glClearColor(0.2f, 0.3f, 0.3f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT);

//display to main screen

Sprite2D_Draw(0, 0, 500, 376, _spriteTexture);

Result:

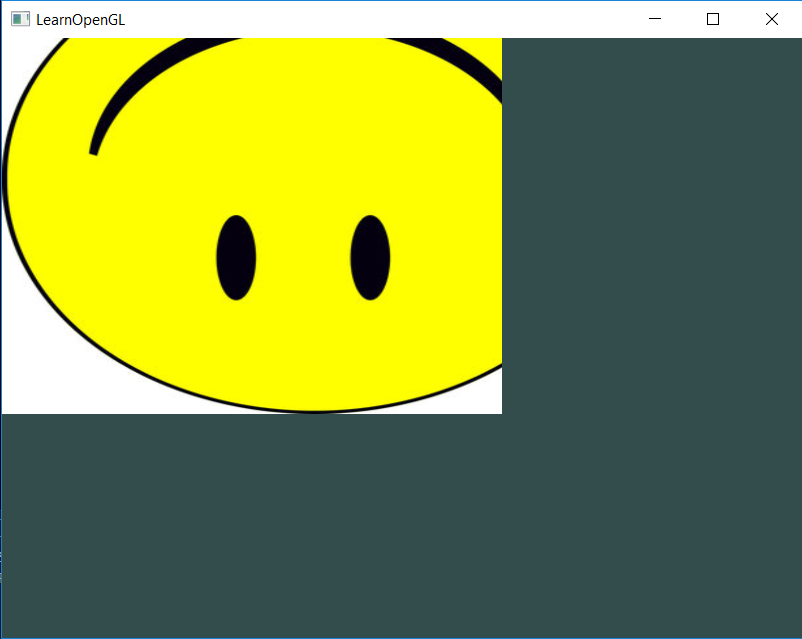
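As a small illustration of the "scale first, then translate" comment in `Sprite2D_Draw`, the sketch below applies both orders to a corner of the unit quad by hand. `Vec2`, `scaleThenTranslate`, and `translateThenScale` are hypothetical names used only here, and the arithmetic stands in for the matrix product rather than calling glm.

```cpp
struct Vec2 {
    float x;
    float y;
};

// Effect of model = translate(x, y) * scale(width, height) on a vertex:
// the vertex is scaled first and translated afterwards.
Vec2 scaleThenTranslate(Vec2 v, float x, float y, float width, float height) {
    return Vec2{v.x * width + x, v.y * height + y};
}

// The opposite order, for comparison: the translation gets scaled too.
Vec2 translateThenScale(Vec2 v, float x, float y, float width, float height) {
    return Vec2{(v.x + x) * width, (v.y + y) * height};
}
```

For the unit-quad corner (1, 1) with a position of (10, 20) and a size of 500 x 376, the first function returns (510, 396), which is the expected bottom-right corner of the sprite, while the second returns (5500, 7896). In the draw call above the position is (0, 0), so both orders would give the same result there.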

BUT when rendering that image into a frame buffer, the result is inverted!

Here is the code:

#if 1

frameBuffer.Bind();

glClearColor(0.2f, 0.3f, 0.3f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT);

//... display backbuffer contents here

//draw sprite at backbuffer 0,0

Sprite2D_Draw(0, 0, 1000, 750, _spriteTexture);

//-----------------------------------------------------------------------------

// Restore frame buffer

frameBuffer.Unbind(SCR_WIDTH, SCR_HEIGHT);

#endif

glClearColor(0.2f, 0.3f, 0.3f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT);

//display to main screen

Sprite2D_Draw(0, 0, 500, 376, frameBuffer.GetColorTexture());

The result is inverted. Why?
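One way to reason about the symptom is to track which image row ends up at texture coordinate t = 0. The sketch below is a CPU-only model, not OpenGL code, and it rests on two assumptions the post does not confirm: that `LoadTexture` uploads the file's rows top-first without flipping, and that the projection puts screen y = 0 at the top. `Texture`, `drawWithPostQuad`, and `storeAsFramebufferTexture` are hypothetical names.

```cpp
#include <string>
#include <vector>

// A texture modelled as rows; index 0 is the row sampled at t = 0.
using Texture = std::vector<std::string>;

// Draws a texture with the quad from the post, where the top edge of the
// quad uses t = 0 and the bottom edge uses t = 1. Returns the rows as they
// appear on the render target, listed from visual top to visual bottom.
std::vector<std::string> drawWithPostQuad(const Texture& tex) {
    std::vector<std::string> topToBottom;
    for (const std::string& row : tex) {
        topToBottom.push_back(row); // the t = 0 row lands on top
    }
    return topToBottom;
}

// Stores a rendered picture as a framebuffer texture. OpenGL places the
// bottom of the render target at t = 0, so the rows are kept bottom-first.
Texture storeAsFramebufferTexture(const std::vector<std::string>& topToBottom) {
    return Texture(topToBottom.rbegin(), topToBottom.rend());
}
```

In this model a file texture `{"top", "bottom"}` drawn directly stays upright, but after a round trip through `storeAsFramebufferTexture` the same quad shows `{"bottom", "top"}`. If the assumptions hold, that would match the inverted result seen here. A common remedy is to flip the t coordinate when sampling the framebuffer texture, or to render into the frame buffer with a projection whose origin is at the bottom left.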

For reference, here is the FBO code:

int FBO::Initialize(int width, int height, bool createDepthStencil)

{

int error = 0;

_width = width;

_height = height;

// 1. create frame buffer

glGenFramebuffers(1, &_frameBuffer);

glBindFramebuffer(GL_FRAMEBUFFER, _frameBuffer);

// 2. create a blank texture which will contain the RGB output of our shader.

// data is set to NULL

glGenTextures(1, &_texColorBuffer);

glBindTexture(GL_TEXTURE_2D, _texColorBuffer);

glTexImage2D(

GL_TEXTURE_2D, 0, GL_RGB, _width, _height, 0, GL_RGB, GL_UNSIGNED_BYTE, NULL

);

error = glGetError();

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

// 3. attach our texture to the frame buffer, note that our custom frame buffer is already active

// by glBindFramebuffer

glFramebufferTexture2D(

GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, _texColorBuffer, 0

);

error = glGetError();

// 4. we create the depth and stencil buffer also, (this is optional)

if (createDepthStencil) {

GLuint rboDepthStencil;

glGenRenderbuffers(1, &rboDepthStencil);

glBindRenderbuffer(GL_RENDERBUFFER, rboDepthStencil);

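glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH24_STENCIL8, _width, _height);

// attach the renderbuffer; without this call the frame buffer never uses it

glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_DEPTH_STENCIL_ATTACHMENT, GL_RENDERBUFFER, rboDepthStencil);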

}

error = glGetError();

// Set the list of draw buffers. this is not needed?

//GLenum DrawBuffers[1] = { GL_COLOR_ATTACHMENT0 };

//glDrawBuffers(1, DrawBuffers); // "1" is the size of DrawBuffers

error = glGetError();

if (glCheckFramebufferStatus(GL_FRAMEBUFFER) != GL_FRAMEBUFFER_COMPLETE)

{

return -1;

}

// Restore frame buffer

glBindFramebuffer(GL_FRAMEBUFFER, 0);

return glGetError();

}

void FBO::Bind()

{

// Render to our frame buffer

glBindFramebuffer(GL_FRAMEBUFFER, _frameBuffer);

glViewport(0, 0, _width, _height); // use the entire texture,

// i.e. the texture's dimensions are used as our total

// display area

}

void FBO::Unbind(int width, int height)

{

glBindFramebuffer(GL_FRAMEBUFFER, 0);

glViewport(0, 0, width, height);

}

int FBO::GetColorTexture()

{

return _texColorBuffer;

}

This is how it was initialized:

FBO frameBuffer;

frameBuffer.Initialize(1024, 1080);

spriteShader.Init();

spriteShader.LoadVertexShaderFromFile("./shaders/basic_vshader.txt");

spriteShader.LoadFragmentShaderFromFile("./shaders/basic_fshader.txt");

spriteShader.Build();

hayleyTex = LoadTexture("./textures/smiley.jpg");

Sprite2D_InitData();

Does anyone know what is going on here?