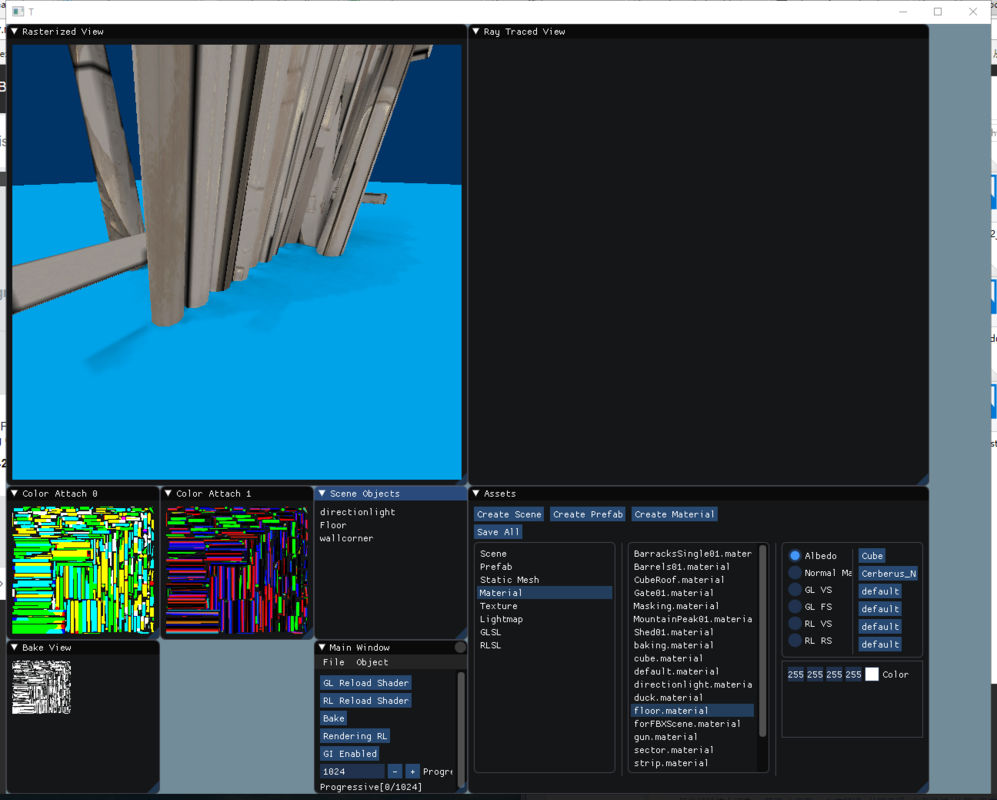

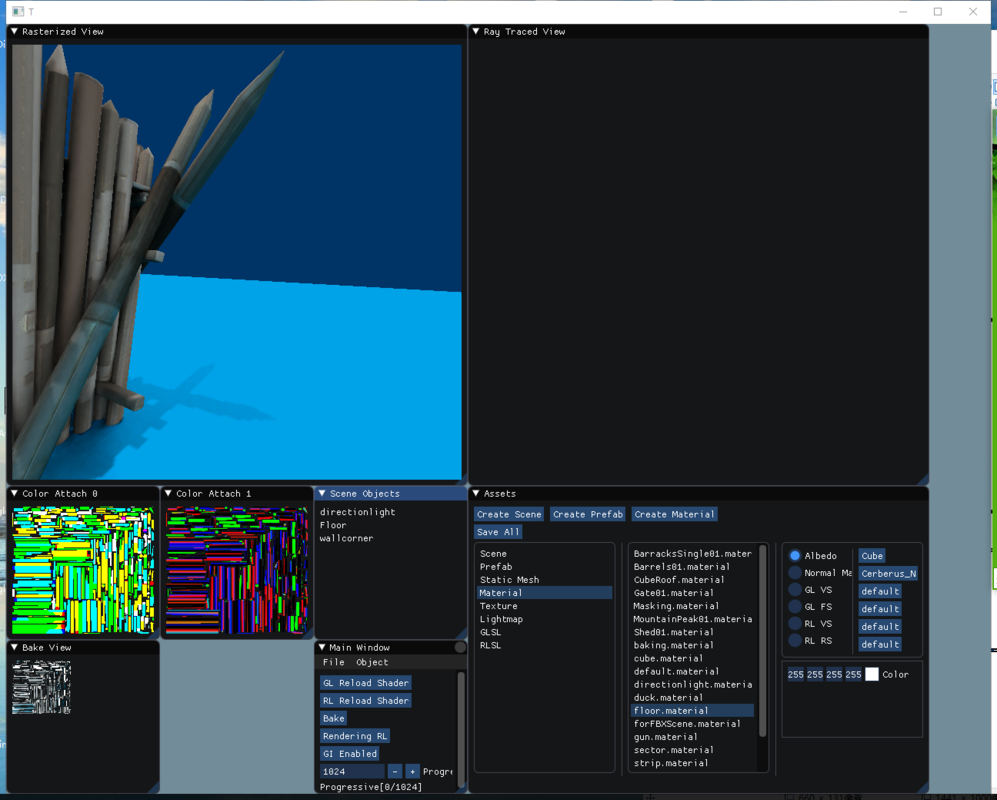

The two images above are the results of a bake that does not take albedo into account, so there is no color bleeding.

Data in light map when using Patch Tracing

I see. It's a minor issue that is easy to fix. My own tool is broken right now, so I can't make a screenshot showing only the irradiance vs. the final visible result.

But imagine a grey wall and a green wall on a red floor. The irradiance maps of both walls would contain red color bleeding from the floor, but in the final image only the grey wall would show it, because of the multiplication with albedo (the green wall reflects no red).

So while tracing, you account for the emitting objects' albedo as you did before. When saving the data, however, you ignore the receiving surface's albedo and store only the irradiance.
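The wall example can be made concrete with a minimal sketch. It assumes a hypothetical `Rgb` type and a hypothetical `Reflect()` helper, neither of which comes from the code in this thread, and uses made-up irradiance values. The lightmap texel keeps irradiance only, and albedo is applied only when shading.

```cpp
#include <cassert>

// Minimal RGB type for this sketch (hypothetical; the thread's code uses the engine's sVec3).
struct Rgb { float r, g, b; };

// What a lightmap texel stores: incoming light (irradiance) only, no albedo.
struct LightmapTexel { Rgb irradiance; };

// Hypothetical helper: combine albedo and irradiance only when the outgoing
// light is needed (the 'reflect' term in the thread's code), e.g. at runtime.
Rgb Reflect(const Rgb& albedo, const Rgb& irradiance) {
    // A physically plausible diffuse albedo lies in [0, 1] per channel.
    assert(albedo.r >= 0.0f && albedo.r <= 1.0f);
    assert(albedo.g >= 0.0f && albedo.g <= 1.0f);
    assert(albedo.b >= 0.0f && albedo.b <= 1.0f);
    return {albedo.r * irradiance.r, albedo.g * irradiance.g, albedo.b * irradiance.b};
}
```

Suppose both walls store the same red-tinted irradiance `{0.8, 0.2, 0.2}`. `Reflect` with a grey albedo `{0.7, 0.7, 0.7}` gives roughly `{0.56, 0.14, 0.14}`, which is visibly reddish. A green albedo `{0, 1, 0}` gives `{0, 0.2, 0}`, so the red stored in the green wall's lightmap never shows up on screen.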

I've copied the code I mentioned into this post. You can do the same thing in your baker, even though you use a path tracer.

The Visualize() function corresponds to runtime shading, and it combines albedo ('sample.color') and irradiance ('sample.received') as described.

The SimulateOneBounce() function plays the role of the path tracer. Notice that the same combination happens here for the emitter samples, but the receiving sample only accumulates the light it receives, ignoring all surface properties of its own.

If your path tracer combines those things internally for some reason, you'd need to change this so the information does not get lost.

Edit: In short, you keep albedo and irradiance separate everywhere, and only combine them temporarily when you want to know the outgoing light (= radiance, or 'reflect' in my code).

```cpp
#include <cmath> // sin, cos, sqrt

// Relies on the author's engine: sVec3 (with operator[], Dot() and the usual
// arithmetic operators), PI, FP_EPSILON, FP_TINY and Vis::RenderDisk are
// not defined in this snippet.
struct Radiosity
{
    typedef sVec3 vec3;

    // Component-wise multiply, used for albedo * irradiance.
    inline vec3 cmul (const vec3 &a, const vec3 &b)
    {
        return vec3 (a[0]*b[0], a[1]*b[1], a[2]*b[2]);
    }

    struct AreaSample
    {
        vec3 pos;
        vec3 dir;        // surface normal
        float area;
        vec3 color;      // albedo
        vec3 received;   // irradiance only, never multiplied by this sample's own albedo
        float emission;  // using just color * emission to save memory
    };

    AreaSample *samples;
    int sampleCount;

    void InitScene ()
    {
        // simple cylinder, lit and viewed from the inside
        int nU = 144;
        int nV = int( float(nU) / float(PI) );
        float scale = 2.0f;
        float area = (2 * scale / float(nU) * float(PI)) * (scale / float(nV) * 2);

        sampleCount = nU*nV;
        samples = new AreaSample[sampleCount];
        AreaSample *sample = samples;

        for (int v=0; v<nV; v++)
        {
            float tV = float(v) / float(nV);
            for (int u=0; u<nU; u++)
            {
                float tU = float(u) / float(nU);
                float angle = tU * 2.0f*float(PI);
                vec3 d (sin(angle), 0, cos(angle));
                vec3 p = (vec3(0,tV*2,0) + d) * scale;

                sample->pos = p;
                sample->dir = -d; // normal points toward the cylinder axis
                sample->area = area;
                // one half of the cylinder is grey, the other half green
                sample->color = ( d[0] < 0 ? vec3(0.7f, 0.7f, 0.7f) : vec3(0.0f, 1.0f, 0.0f) );
                sample->received = vec3(0,0,0);
                // a narrow emitting strip near the top of the grey half
                sample->emission = ( (d[0] < -0.97f && tV > 0.87f) ? 35.0f : 0 );
                sample++;
            }
        }
    }

    // Each call gathers light for every receiver from all other samples;
    // calling it repeatedly adds further bounces.
    void SimulateOneBounce ()
    {
        for (int rI=0; rI<sampleCount; rI++)
        {
            vec3 rP = samples[rI].pos;
            vec3 rD = samples[rI].dir;
            vec3 accum (0,0,0);

            for (int eI=0; eI<sampleCount; eI++)
            {
                vec3 diff = samples[eI].pos - rP;
                float cosR = rD.Dot(diff);
                if (cosR > FP_EPSILON)
                {
                    float cosE = -samples[eI].dir.Dot(diff);
                    if (cosE > FP_EPSILON)
                    {
                        // todo: In this example we know each surface sees any other surface,
                        // but in practice: trace a ray from receiver to emitter and set to zero
                        // if anything is hit (or use multiple rays for accuracy).
                        float visibility = 1.0f;
                        if (visibility > 0)
                        {
                            float area = samples[eI].area;
                            float d2 = diff.Dot(diff) + FP_TINY;
                            float formFactor = (cosR * cosE) / (d2 * (float(PI) * d2 + area)) * area;

                            // The emitter's albedo is applied to the light it sends out...
                            vec3 reflect = cmul (samples[eI].color, samples[eI].received);
                            vec3 emit = samples[eI].color * samples[eI].emission;
                            accum += (reflect + emit) * visibility * formFactor;
                        }
                    }
                }
            }
            // ...but the receiver stores irradiance only, without its own albedo.
            samples[rI].received = accum;
        }
    }

    // Runtime shading: the only place where this sample's albedo and irradiance are combined.
    void Visualize ()
    {
        for (int i=0; i<sampleCount; i++)
        {
            vec3 reflect = cmul (samples[i].color, samples[i].received);
            vec3 emit = samples[i].color * samples[i].emission;
            vec3 color = reflect + emit;

            //float radius = sqrt (samples[i].area / float(PI));
            //Vis::RenderCircle (radius, samples[i].pos, samples[i].dir, color[0],color[1],color[2]);
            float radius = sqrt(samples[i].area * 0.52f);
            Vis::RenderDisk (radius, samples[i].pos, samples[i].dir, color[0],color[1],color[2], 4); // this renders a quad
        }
    }
};
```
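The least obvious line in SimulateOneBounce() is the form factor. Below is a standalone sketch of that same expression. It uses a hypothetical minimal vector type (`V3`, `Sub`, `Dot`) in place of sVec3, and an assumed constant in place of FP_TINY. The emitter is treated as a small disk, which gives roughly area·cosR·cosE / (Pi·r² + area) with normalized cosines.

```cpp
#include <cassert>

// Tiny vector type for this sketch (hypothetical stand-in for the engine's sVec3).
struct V3 { float x, y, z; };
inline V3 Sub(V3 a, V3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline float Dot(V3 a, V3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Same expression as in SimulateOneBounce(). cosR and cosE are dot products with the
// *unnormalized* offset, so their product carries a factor r^2 that the extra d2
// in the denominator cancels.
float FormFactor(V3 recvPos, V3 recvDir, V3 emitPos, V3 emitDir, float area) {
    assert(area > 0.0f);
    const float kPi = 3.14159265358979f;
    const float kTiny = 1e-12f; // assumed stand-in for FP_TINY
    V3 diff = Sub(emitPos, recvPos);
    float cosR = Dot(recvDir, diff);
    float cosE = -Dot(emitDir, diff);
    if (cosR <= 0.0f || cosE <= 0.0f) return 0.0f; // patches face away from each other
    float d2 = Dot(diff, diff) + kTiny;
    return (cosR * cosE) / (d2 * (kPi * d2 + area)) * area;
}
```

Consider a patch of area Pi facing the receiver head-on at distance 1. This gives 0.5. For a small patch far away, the result approaches area / (Pi·r²), the usual point-to-point term. The `+ area` in the denominator keeps the value bounded when patches get very close.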

Thanks for sharing, JoeJ.

After studying your post for a while, I added another ray attribute to my RLSL ray shader that contains only albedo. I multiply the albedo by rl_InRay.albedo at each bounce. Finally, at the accumulation stage, I accumulate rl_InRay.color * rl_InRay.albedo for bounced rays and rl_InRay.color for non-bounced rays. It works well. :)
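Here is one possible CPU-side reading of that approach. It is a sketch, not the poster's RLSL shader. A throughput starts at 1 at the lightmap texel and is multiplied by the albedo of every surface the path hits after leaving it. The texel's own albedo never enters the result. `Rgb`, `Mul` and `PathContribution` are hypothetical names. The sketch assumes Lambertian surfaces and cosine-weighted bounce directions, where BRDF·cos/pdf reduces to exactly the albedo.

```cpp
#include <cassert>
#include <vector>

struct Rgb { float r, g, b; };

inline Rgb Mul(Rgb a, Rgb b) { return {a.r * b.r, a.g * b.g, a.b * b.b}; }

// Light carried back to a lightmap texel along one path.
//   albedos - albedo of each surface hit *after* leaving the texel, in order
//   emitted - radiance of the light source the path finally reaches
// An empty 'albedos' list is a ray that hit the light directly ("non-bounced"),
// so the emitted value is accumulated unchanged.
Rgb PathContribution(const std::vector<Rgb>& albedos, Rgb emitted) {
    Rgb throughput{1.0f, 1.0f, 1.0f};
    for (const Rgb& a : albedos) {
        // Energy conservation: albedo must not exceed 1 in any channel.
        assert(a.r <= 1.0f && a.g <= 1.0f && a.b <= 1.0f);
        // Lambertian + cosine-weighted sampling: per-bounce weight is just the albedo.
        throughput = Mul(throughput, a);
    }
    return Mul(throughput, emitted);
}
```

Averaging these contributions over many cosine-weighted paths estimates irradiance / Pi rather than irradiance, because the cosine pdf already contains a 1 / Pi. Multiply by Pi if you want to store pure irradiance.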

Here are two images.

On 8/16/2018 at 8:18 PM, orange451 said: @MJP Is there any information on why the final light color is written after dividing by PI? I'm not sure how to search for this, but I have seen it floating around a lot.

Does this remain true for things like deferred renderers?

I actually talked about it a bit in one of my blog posts: https://mynameismjp.wordpress.com/2016/10/09/sg-series-part-1-a-brief-and-incomplete-history-of-baked-lighting-representations/

The 1 / Pi factor comes from the diffuse Lambertian BRDF, which is equal to diffuseAlbedo / Pi. You need the 1 / Pi in the BRDF to ensure energy conservation: if you integrate cos(theta) (where cos(theta) == N dot L) over the entire hemisphere, you get a result of Pi. Therefore, if you want to ensure that you don't reflect more energy than is coming in from your lighting environment, the largest value a Lambertian BRDF can have is 1 / Pi (i.e. a diffuse albedo of 1).
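Here is the hemisphere integral above written out. θ is measured from the surface normal, ρ is the diffuse albedo, L is a uniform incoming radiance, and E is the irradiance.

```latex
\int_{\Omega} \cos\theta \, d\omega
  = \int_{0}^{2\pi} \int_{0}^{\pi/2} \cos\theta \, \sin\theta \, d\theta \, d\phi
  = 2\pi \cdot \tfrac{1}{2} = \pi

f_{\mathrm{Lambert}} = \frac{\rho}{\pi}
\quad\Longrightarrow\quad
L_{\mathrm{out}} = \int_{\Omega} \frac{\rho}{\pi} \, L \cos\theta \, d\omega = \rho \, L \le L
\quad \text{for } \rho \le 1

\text{in general:}\quad
L_{\mathrm{out}} = \frac{\rho}{\pi} \int_{\Omega} L_{\mathrm{in}}(\omega) \cos\theta \, d\omega = \frac{\rho}{\pi} \, E
```

So a white Lambertian surface (ρ = 1) under uniform lighting reflects exactly what it receives. Without the 1 / π it would reflect π times more.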

For lightmaps you don't necessarily need to bake the 1 / Pi into the lightmap. If you wanted to store just the pure irradiance value in the lightmap, you can do that and then compute the final diffuse lighting in your pixel/fragment shader by doing irradiance * diffuseAlbedo / Pi. Pre-dividing by Pi just saves you a divide at runtime, which makes it slightly cheaper. For older games before HDR lightmaps and rendering were a thing, pre-dividing by Pi would also make sense so that you could store [0, 1] values in your lightmap and directly output that to the screen.
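The two lightmap conventions described above give the same shaded result. The following is a minimal sketch with hypothetical helper names; none of them come from an engine API. Option A stores pure irradiance and divides by Pi in the shader. Option B divides by Pi at bake time.

```cpp
#include <cassert>

struct Rgb { float r, g, b; };

const float kPi = 3.14159265f;

// Option A: the lightmap stores pure irradiance E; the shader does E * albedo / Pi.
Rgb ShadeFromIrradiance(Rgb irradiance, Rgb diffuseAlbedo) {
    return {irradiance.r * diffuseAlbedo.r / kPi,
            irradiance.g * diffuseAlbedo.g / kPi,
            irradiance.b * diffuseAlbedo.b / kPi};
}

// Option B, bake side: store E / Pi in the lightmap.
Rgb BakePreDivided(Rgb irradiance) {
    return {irradiance.r / kPi, irradiance.g / kPi, irradiance.b / kPi};
}

// Option B, shader side: the divide is already done, so only the albedo multiply remains.
Rgb ShadeFromPreDivided(Rgb storedEOverPi, Rgb diffuseAlbedo) {
    return {storedEOverPi.r * diffuseAlbedo.r,
            storedEOverPi.g * diffuseAlbedo.g,
            storedEOverPi.b * diffuseAlbedo.b};
}
```

Up to float rounding, both paths produce the same color. With pre-division, an irradiance of Pi on a white surface is stored and shaded as exactly 1.0. This is why pre-divided values map naturally onto an LDR [0, 1] lightmap.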