Hello,

I have a custom binary image file format. It is essentially a custom version of DDS, made up of two important parts:

struct FileHeader

{

dword m_signature;

dword m_fileSize;

};

struct ImageFileInfo

{

dword m_width;

dword m_height;

dword m_depth;

dword m_mipCount; // at least 1

dword m_arraySize; // at least 1

SurfaceFormat m_surfaceFormat;

dword m_pitch; //length of scanline

dword m_byteCount;

byte* m_data;

};

It uses a custom BinaryIO class I wrote to read and write binary data. Most of the data is unsigned int, which is a dword, so I'll only show the dword functions:

bool BinaryIO::WriteDWord(dword value)

{

if (!m_file || (m_mode == BINARY_FILEMODE::READ))

{

//log: file null or you tried to write to a read only file!

return false;

}

byte bytes[4];

bytes[0] = (value & 0xFF);

bytes[1] = (value >> 8) & 0xFF;

bytes[2] = (value >> 16) & 0xFF;

bytes[3] = (value >> 24) & 0xFF;

m_file.write((char*)bytes, sizeof(bytes));

return true;

}
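
As a sanity check of the byte layout above, here is a minimal standalone sketch of the same little-endian split and its inverse. It uses free functions instead of my BinaryIO class; the names EncodeDWordLE, DecodeDWordLE and CheckRoundTrip are made up for illustration, and it assumes dword is a 32-bit unsigned int and byte is an 8-bit unsigned int.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

using dword = std::uint32_t; // assumption: dword is a 32-bit unsigned int
using byte  = std::uint8_t;  // assumption: byte is an 8-bit unsigned int

// Split a dword into 4 bytes, least significant byte first (little-endian).
std::array<byte, 4> EncodeDWordLE(dword value)
{
    return { static_cast<byte>(value & 0xFF),
             static_cast<byte>((value >> 8) & 0xFF),
             static_cast<byte>((value >> 16) & 0xFF),
             static_cast<byte>((value >> 24) & 0xFF) };
}

// Rebuild the dword. Each byte is widened to dword before shifting,
// so the << 24 is never performed on a signed int.
dword DecodeDWordLE(const std::array<byte, 4>& b)
{
    return  static_cast<dword>(b[0])
         | (static_cast<dword>(b[1]) << 8)
         | (static_cast<dword>(b[2]) << 16)
         | (static_cast<dword>(b[3]) << 24);
}

// Quick round-trip check of the two helpers.
void CheckRoundTrip()
{
    const dword value = 0x11223344u;
    const std::array<byte, 4> bytes = EncodeDWordLE(value);
    assert(bytes[0] == 0x44 && bytes[3] == 0x11);
    assert(DecodeDWordLE(bytes) == value);
}
```

Keeping the byte order explicit like this means the file reads back the same regardless of the host's endianness, which is why I did not just write the raw dword.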

//-----------------------------------------------------------------------------

dword BinaryIO::ReadDword()

{

if (!m_file || (m_mode == BINARY_FILEMODE::WRITE))

{

//log: file null or you tried to read from a write only file!

return 0;

}

dword value;

byte bytes[4];

m_file.read((char*)bytes, sizeof(bytes));

value = (dword)bytes[0] | ((dword)bytes[1] << 8) | ((dword)bytes[2] << 16) | ((dword)bytes[3] << 24);

return value;

}

So, as you can imagine, you end up with a loop like this for reading the image data:

byte* inBytesIterator = m_fileInfo.m_data;

for (unsigned int i = 0; i < m_fileInfo.m_byteCount; i++)

{

*inBytesIterator = binaryIO.ReadByte();

inBytesIterator++;

}

And finally, to get it into DX11 buffer memory, we have the following:

//Pass the Data to the GPU: Remembering Mips

D3D11_SUBRESOURCE_DATA* initData = new D3D11_SUBRESOURCE_DATA[m_mipCount * m_arraySize];

ZeroMemory(initData, sizeof(D3D11_SUBRESOURCE_DATA) * m_mipCount * m_arraySize);

//Used as an iterator

byte* source = texDesc.m_data;

byte* endBytes = source + m_totalBytes;

int index = 0;

for (int i = 0; i < m_arraySize; i++)

{

int w = m_width;

int h = m_height;

int numBytes = GetByteCount(w, h);

for (int j = 0; j < m_mipCount; j++)

{

if ((m_mipCount <= 1) || (w <= 16384 && h <= 16384))

{

initData[index].pSysMem = source;

initData[index].SysMemPitch = GetPitch(w);

initData[index].SysMemSlicePitch = numBytes;

index++;

}

if (source + numBytes > endBytes)

{

LogGraphics("Too many Bytes!");

return false;

}

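//Advance to the start of the next mip's data

source += numBytes;

//Divide by 2

w = w >> 1;

h = h >> 1;

if (w == 0) { w = 1; }

if (h == 0) { h = 1; }

//Byte count of the next (smaller) mip level

numBytes = GetByteCount(w, h);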

}

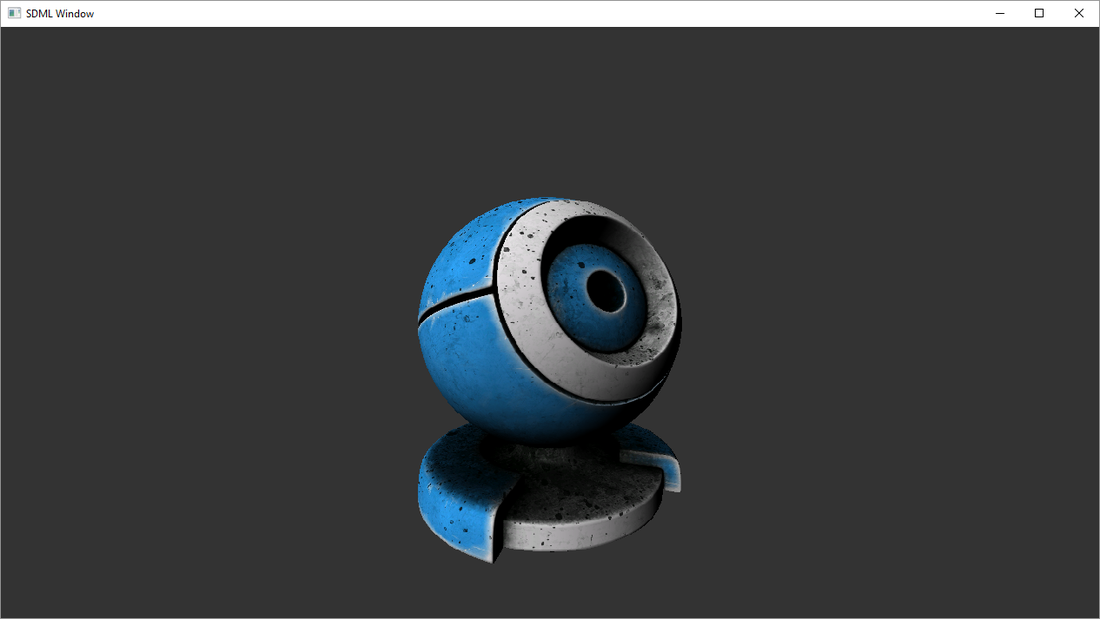

}It seems rather slow particularly for big textures, is there any way i could optimize this? as the render grows too rendering multiple textured objects the loading times may become problematic. At the moment it takes around 2 seconds to load a 4096x4096 texture, you can see the output in the attached images.
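
For comparison, here is a minimal standalone sketch of the two read patterns. ReadPerByte mirrors what my loop does (one stream call per byte), and ReadBulk is the single-call alternative that seems like the obvious thing to try. Both names are hypothetical, the sketch assumes a plain std::istream rather than my BinaryIO class, and I have not measured it against my real loader.

```cpp
#include <cstddef>
#include <cstdint>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

using byte = std::uint8_t; // assumption: byte is an 8-bit unsigned int

// Same pattern as my current loop: one stream call per byte.
// Note: no end-of-file handling here, to keep the sketch short.
std::vector<byte> ReadPerByte(std::istream& in, std::size_t byteCount)
{
    std::vector<byte> data(byteCount);
    for (std::size_t i = 0; i < byteCount; ++i)
    {
        data[i] = static_cast<byte>(in.get());
    }
    return data;
}

// Alternative: a single read() call for the whole block.
std::vector<byte> ReadBulk(std::istream& in, std::size_t byteCount)
{
    std::vector<byte> data(byteCount);
    in.read(reinterpret_cast<char*>(data.data()),
            static_cast<std::streamsize>(byteCount));
    // Shrink to what was actually read so the caller can detect a short read.
    data.resize(static_cast<std::size_t>(in.gcount()));
    return data;
}
```

Since the pixel data is just raw bytes, there is no byte order to fix up, so in principle the whole m_byteCount block could go through something like ReadBulk while the header fields keep using the dword functions.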

Thanks.