Hello fellow programmers,

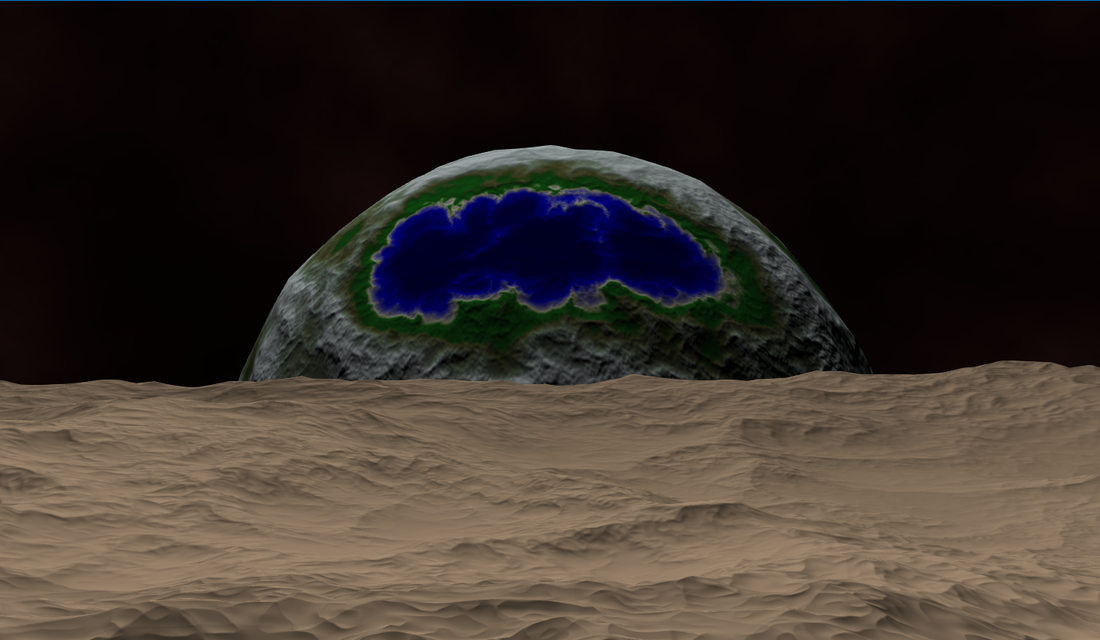

A couple of days ago I decided to build my own planet renderer, just to see how floating-point precision (FPP) issues can be tackled. As you can probably imagine, I quickly ran into FPP issues when trying to render absurdly large planets.

I have used the classical quadtree LOD approach.

I generate my grids with 33 vertices per edge (x: -1 to 1, y: -1 to 1, z = 0).
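For reference, a grid like this can be built as follows. This is a minimal sketch that assumes "33 vertices" means 33 per edge (1089 in total, 32 × 32 quads). It uses a plain `Vec3f` struct instead of glm, and `makeGrid` and `makeGridIndices` are hypothetical names, not part of the renderer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3f { float x, y, z; };

// Builds an n x n grid spanning [-1, 1] on x and y, with z = 0.
// Assumes "33 vertices" means 33 per edge, i.e. 32 x 32 quads.
std::vector<Vec3f> makeGrid(std::size_t n = 33) {
    std::vector<Vec3f> verts;
    verts.reserve(n * n);
    for (std::size_t j = 0; j < n; ++j) {
        for (std::size_t i = 0; i < n; ++i) {
            const float u = -1.0f + 2.0f * static_cast<float>(i) / static_cast<float>(n - 1);
            const float v = -1.0f + 2.0f * static_cast<float>(j) / static_cast<float>(n - 1);
            verts.push_back({u, v, 0.0f});
        }
    }
    return verts;
}

// Two triangles per quad, counter-clockwise when viewed from +z,
// matching the row-major vertex layout produced by makeGrid.
std::vector<unsigned> makeGridIndices(std::size_t n = 33) {
    std::vector<unsigned> idx;
    idx.reserve((n - 1) * (n - 1) * 6);
    for (std::size_t j = 0; j + 1 < n; ++j) {
        for (std::size_t i = 0; i + 1 < n; ++i) {
            const unsigned a = static_cast<unsigned>(j * n + i); // bottom-left
            const unsigned b = a + 1;                            // bottom-right
            const unsigned c = a + static_cast<unsigned>(n);     // top-left
            const unsigned d = c + 1;                            // top-right
            idx.insert(idx.end(), {a, b, c, b, d, c});
        }
    }
    return idx;
}
```

With 33 vertices per edge (a power of two plus one), a parent node's vertices line up with every other vertex of its four children after a split, which helps when stitching LOD levels.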

Each grid is managed by a TerrainNode class that, depending on the side it represents (top, bottom, left, right, front, back), creates a special rotation-translation matrix that rotates the grid and moves it away from the origin, so that when I finally normalize all the vertices in my vertex shader I get a perfect sphere.

```cpp
T = glm::translate(glm::dmat4(1.0), glm::dvec3(0.0, 0.0, 1.0));
R = glm::rotate(glm::dmat4(1.0), glm::radians(180.0), glm::dvec3(1.0, 0.0, 0.0));
sides[0] = new TerrainNode(1.0, radius, T * R, glm::dvec2(0.0, 0.0), new TerrainTile(1.0, SIDE_FRONT));

T = glm::translate(glm::dmat4(1.0), glm::dvec3(0.0, 0.0, -1.0));
R = glm::rotate(glm::dmat4(1.0), glm::radians(0.0), glm::dvec3(1.0, 0.0, 0.0));
sides[1] = new TerrainNode(1.0, radius, R * T, glm::dvec2(0.0, 0.0), new TerrainTile(1.0, SIDE_BACK));

// So on and so forth for the rest of the sides
```

As you can see, for the front side I rotate the grid 180 degrees to make it face the camera and push it towards the eye; the back side is handled almost the same way, except that I don't need to rotate it, only push it away from the eye.

The same technique is applied to the rest of the faces (with the proper rotations and translations, obviously).
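To make the face placement concrete, here is what the front and back matrices from the snippet above do to a grid vertex, followed by the normalization step that turns the cube into a sphere. This is a hedged CPU-side sketch in plain C++ (no glm): the rotation and translation are written out by hand, and `placeOnFrontFace`, `placeOnBackFace` and `spherify` are illustrative names.

```cpp
#include <cassert>
#include <cmath>

struct Vec3d { double x, y, z; };

// Front face: T * R with R = 180 degrees about X and T = translate(0, 0, +1).
// Applied to a column vector, R acts first: (x, y, z) -> (x, -y, -z),
// then T adds +1 to z.
Vec3d placeOnFrontFace(Vec3d p) {
    return {p.x, -p.y, -p.z + 1.0};
}

// Back face: R is the identity (0 degrees), so only T = translate(0, 0, -1) matters.
Vec3d placeOnBackFace(Vec3d p) {
    return {p.x, p.y, p.z - 1.0};
}

// Projects a point on the unit cube onto a sphere of the given radius.
Vec3d spherify(Vec3d p, double radius) {
    const double len = std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
    return {radius * p.x / len, radius * p.y / len, radius * p.z / len};
}
```

The glm version differs only at rounding level: `glm::rotate` with `radians(180.0)` uses a sine of about 1e-16 rather than exactly 0.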

The matrix that results from multiplying R and T (in that particular order) is sent to my vertex shader as `r_Grid`.

```glsl
// spherify
vec3 V = normalize((r_Grid * vec4(r_Vertex, 1.0)).xyz);
gl_Position = r_ModelViewProjection * vec4(V, 1.0);
```

The `r_ModelViewProjection` matrix is generated on the CPU like this:

```cpp
// Not the most efficient way, but it works.
glm::dmat4 Camera::getMatrix() {
    // Create the view matrix.
    // Roll, Yaw and Pitch are all quaternions.
    glm::dmat4 View = glm::toMat4(Roll) * glm::toMat4(Pitch) * glm::toMat4(Yaw);

    // The model matrix is generated by translating in the opposite direction of the camera.
    glm::dmat4 Model = glm::translate(glm::dmat4(1.0), -Position);

    // Projection = glm::perspective(fovY, aspect, zNear, zFar);
    // zNear = 0.1, zFar = 1.0995116e12
    return Projection * View * Model;
}
```

I managed to get rid of z-fighting by using a technique called Logarithmic Depth Buffer, described in this article; it works amazingly well, with no z-fighting at all (at least none that is visible).
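The post doesn't say which formulation the article uses. One widely cited variant (popularized on the Outerra blog) writes the depth as z_ndc = 2·log(C·w + 1) / log(C·far + 1) − 1, where w is the clip-space w (roughly the view distance) and C is a tuning constant. The sketch below evaluates that mapping on the CPU so its behaviour can be checked. The function name `logDepthNdc` and the default C = 1 are assumptions, and the article's version may differ.

```cpp
#include <cassert>
#include <cmath>

// One common logarithmic depth mapping (assumed; may differ from the article):
// maps clip-space w in [0, zFar] to NDC depth in [-1, 1].
// In a vertex shader this value would be multiplied by w before being written
// to gl_Position.z, so that the perspective divide yields exactly this result.
double logDepthNdc(double w, double zFar, double c = 1.0) {
    return 2.0 * std::log(c * w + 1.0) / std::log(c * zFar + 1.0) - 1.0;
}
```

Because the mapping is logarithmic in w, depth precision is spread roughly evenly across orders of magnitude of distance instead of being concentrated near zNear, which is why it copes with a zFar around 1e12.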

Each frame I render each node by sending the generated matrices like this:

```cpp
// Set the r_ModelViewProjection uniform.
// Sneak in mRadiusMatrix, a matrix that contains the radius of my planet.
Shader::setUniform(0, Camera::getInstance()->getMatrix() * mRadiusMatrix);

// Set the r_Grid matrix uniform I created earlier.
Shader::setUniform(1, r_Grid);

grid->render();
```

My planet's radius is around 6400000.0 units. That's absurdly large, but it's what I really want to achieve.

Everything works well: the nodes split and merge as you'd expect. However, whenever I get close to the surface of the planet, the rounding errors start to kick in, giving me that lovely staircase effect.
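To put numbers on the staircase: a 32-bit float has a 24-bit significand, so between 2^22 (about 4.19 million) and 2^23 (about 8.39 million) consecutive floats are 0.5 apart. Any coordinate around 6,400,000 units that reaches the GPU as a float (a vertex position, or a translation baked into a float matrix) is snapped to a 0.5-unit lattice, while a double has a spacing of about 1e-9 at the same magnitude. The helper name `spacing` below is illustrative.

```cpp
#include <cassert>
#include <cmath>

// Distance from x to the next representable value above it (one ULP).
float spacing(float x) { return std::nextafter(x, INFINITY) - x; }
double spacing(double x) { return std::nextafter(x, static_cast<double>(INFINITY)) - x; }
```

For example, `static_cast<float>(6400000.3)` comes out as `6400000.5f`. A normalized float vertex scaled by the radius fares similarly: components near 1 are about 6e-8 apart, which is roughly 0.4 units once multiplied by 6,400,000.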

I've read that if I render each grid relative to the camera, I can get better precision near the surface, effectively getting rid of those rounding errors.
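The idea behind relative-to-camera (often called relative-to-eye) rendering can be shown with a single coordinate. The numbers are illustrative, not taken from the renderer: a vertex 0.3 units above a 6,400,000-unit surface and a camera 1 unit above it. In the "absolute" path both positions are rounded to float before the subtraction (as happens when the camera translation lives inside a float MVP); in the relative path the subtraction happens in double and only the small difference is converted. Both function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3d { double x, y, z; };
struct Vec3f { float x, y, z; };

// Absolute path: both positions are rounded to float first, then subtracted.
Vec3f eyeOffsetAbsolute(Vec3d world, Vec3d camera) {
    const Vec3f w{static_cast<float>(world.x), static_cast<float>(world.y), static_cast<float>(world.z)};
    const Vec3f c{static_cast<float>(camera.x), static_cast<float>(camera.y), static_cast<float>(camera.z)};
    return {w.x - c.x, w.y - c.y, w.z - c.z};
}

// Relative-to-camera path: subtract in double on the CPU, then convert the
// small difference to float before it reaches the GPU.
Vec3f eyeOffsetRelative(Vec3d world, Vec3d camera) {
    return {static_cast<float>(world.x - camera.x),
            static_cast<float>(world.y - camera.y),
            static_cast<float>(world.z - camera.z)};
}
```

With these inputs the absolute path reports the vertex 0.5 units below the camera, while the relative path gives approximately 0.7, the true distance.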

My question is: how can I achieve this relative-to-camera rendering in my scenario?

I know that I have to do most of the work on the CPU in double precision, and that's exactly what I'm doing: I only use doubles on the CPU side, where I also do most of the matrix multiplications.

As you can see from my vertex shader, I only do the usual `r_ModelViewProjection * (some vertex coords)`.
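One commonly described way to apply this to a quadtree planet, sketched here under assumptions (the `NodeMesh` type, `buildNodeMesh`, `nodeOffset` and the uniform names are hypothetical, not from the post): move the spherify step to the CPU and do it in double when a node is created, store each vertex relative to the node's center as float, and each frame send the node-center-minus-camera offset (computed in double) as a separate uniform. The matrix sent to the GPU then contains only the projection and the camera rotation, with no large translation in it.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3d { double x, y, z; };
struct Vec3f { float x, y, z; };

static Vec3d spherify(Vec3d p, double radius) {
    const double len = std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
    return {radius * p.x / len, radius * p.y / len, radius * p.z / len};
}

// Hypothetical per-node mesh for camera-relative rendering.
struct NodeMesh {
    Vec3d center;               // node center on the planet surface, world units, double
    std::vector<Vec3f> local;   // vertex positions relative to center, float
};

// cubePoints: the node's grid vertices already placed on the unit cube
// (i.e. after the r_Grid transform), computed in double on the CPU.
// centerCubePoint: the node's center on the unit cube.
NodeMesh buildNodeMesh(const std::vector<Vec3d>& cubePoints, Vec3d centerCubePoint, double radius) {
    NodeMesh mesh;
    mesh.center = spherify(centerCubePoint, radius);
    mesh.local.reserve(cubePoints.size());
    for (const Vec3d& p : cubePoints) {
        const Vec3d world = spherify(p, radius);
        // The difference is at most about the node's size, so for small
        // (deep) nodes it fits comfortably in float precision.
        mesh.local.push_back({static_cast<float>(world.x - mesh.center.x),
                              static_cast<float>(world.y - mesh.center.y),
                              static_cast<float>(world.z - mesh.center.z)});
    }
    return mesh;
}

// Per frame: the camera translation is applied here, in double, instead of
// inside the float MVP. Only the small result is converted to float.
Vec3f nodeOffset(const NodeMesh& mesh, Vec3d cameraPosition) {
    return {static_cast<float>(mesh.center.x - cameraPosition.x),
            static_cast<float>(mesh.center.y - cameraPosition.y),
            static_cast<float>(mesh.center.z - cameraPosition.z)};
}

// Matching vertex shader (GLSL, hypothetical uniform names):
//   gl_Position = u_ProjectionRotation * vec4(r_Vertex + u_NodeOffset, 1.0);
```

The trade-off is that each node needs its own vertex buffer instead of sharing one grid. Root-level nodes still have large local offsets, but they are typically only drawn when the camera is far away, where half-unit errors are far below a pixel.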

Thank you for your suggestions!