Good day sir/maam. I am developing a game for my thesis and im done with multiplayer and plan to start the implementation of AI but i dont know how/where to start. Please give an advice. I am developing it in C# using UNITY.

Im am now collected all pieces that has possible moves but i am stuck on which best move to select. I hope you will help me. This is link explained the game https://en.wikipedia.org/wiki/Game_of_the_Generals

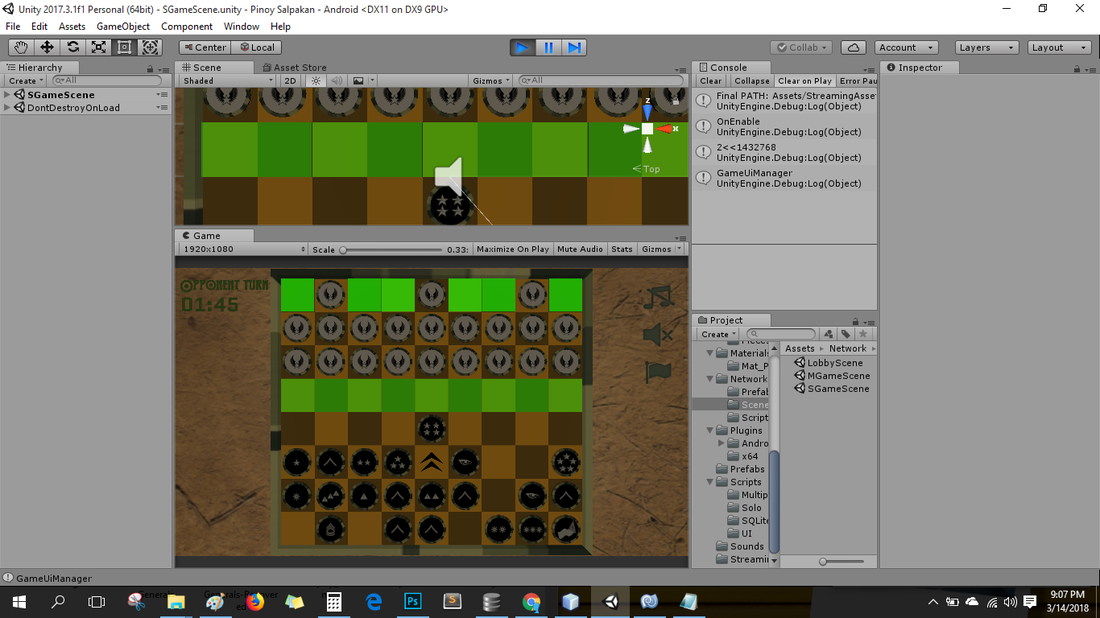

Game of the General AI Implementation

Are you familiar with Chess AI, and standard minimax approaches? It is more complex when you have hidden information, as in this game, but an AI can often do well by using estimated information (e.g. based on a heuristic) instead of the known values.

(Also, this is the wrong forum for technical questions, so I'll move it to a more relevant place.)

I haven't written AI for this game, but the last time I thought about it I could see a way to implement decent AI using MCTS. The hidden information part makes it trickier, but I think the difficulties are surmountable. I'll be happy to discuss some details, but I would like to know what you have thought of so far.

Now that we have DeepMind's AlphaZero approach, it can probably be applied here as well, but I have to think about it a bit, and it might take too many resources for a thesis.

I am trying to apply this one in my implementation https://medium.freecodecamp.org/simple-chess-ai-step-by-step-1d55a9266977. But im stuck in evaluation function i dont know how to evaluate the bestMove due to hidden pieces in the board. Forgive i am lack of knowledge in AI.

You can't use the traditional methods for chess AI because they only apply to full-information games. That's why I suggested Monte Carlo methods. Have you looked into that at all?

Here's my very brief recipe. The main ingredient is a "policy function". That is a [quick] function that, given a game situation, returns a probability distribution over the moves. If you had full information, you would try each of the moves available to you, run 1,000 "playouts" --where both players use the policy function to pick the next move for the rest of the game-- and pick the move with the best results. A few modifications of this very basic method would get you to Monte Carlo Tree Search (MCTS).

Since you don't have full information, you have to first "read" what the opponent may have. You can do that by generating random configurations, assigning them weights and then running the playouts from a variety of configurations. The configurations should be weighted by their probability, which can be computed as the product of the probabilities of all the moves by the opponent until this point in the game, using the policy function (this can be justified using Bayes' theorem). You also have to zero out the weight of any configuration that is incompatible with information that has been revealed already (like the identity of some pieces after they have been captured, if I remember the game correctly (sorry, I've never played)).

The tricky part is that once the game has gone on for a while and you have learned the identity of some of the opponent's pieces, the vast majority of random configurations will be incompatible with the game history. So you should generate only plausible configurations, and in doing so you should be careful not to bias the probability distribution in any way.

The fact that you will be running playouts on different configurations means that you can't reasonably accumulate statistics for future positions the way you do in MCTS, so you will probably have to use a basic scheme without tree search.

One more thing: It's possible to use a neural network to play the role of the policy function, and this might be feasible these days. If I were you, I would start with a hand-crafted policy function. Something that returns the uniform distribution over all legal moves would be a good place to start. You can then up the probability of obvious good moves. Eventually you can train a neural network to maximize the likelihood of the moves in some database of games (which could be generated with your own program playing itself) and use it as policy network.

7 hours ago, alvaro said:Here's my very brief recipe. The main ingredient is a "policy function". That is a [quick] function that, given a game situation, returns a probability distribution over the moves. If you had full information, you would try each of the moves available to you, run 1,000 "playouts" --where both players use the policy function to pick the next move for the rest of the game-- and pick the move with the best results. A few modifications of this very basic method would get you to Monte Carlo Tree Search (MCTS).

How can i implement the probability distribution in each pieces?. How to redistribute the probability if my enemy piece has been eliminated?. Sorry i cant figure it out.

Sorry for unclear question sir. These are all the pieces in this game. How can i get the probability of of each pieces?

You don't assign probabilities to pieces, but to moves. As I said before, you can start with a policy function that simply generates all the legal moves and assigns them equal probabilities. Later on you can refine this with some heuristics so good moves have higher probability, or you can train a neural network to produce the probability distribution for you.

The game is somewhat similar to Stratego, which is quite well studied, but quite complex. (e.g. the thread below).

If the focus of your thesis is not the AI, I'd be tempted just to stick to a simple tree search. Each AI player needs a function which can answer the question "What's the chance that Piece A defeats Piece B". This is where those probabilities come in - if you have a General, the result is 1.0 (it always wins, if I understand the rules correctly), if you have a Major, the result is 0.619 (because it can beat 61.9% of the pieces on the board), etc. That's based on the quantities of pieces at the start - you would improve the system by adjusting the probabilities as pieces are removed, and you can further improve the system by remembering things about specific pieces (so you can return 1.0 or 0.0 instead of an estimate) and weighting different attacks based on how useful they are (e.g. defeating a general is more useful than defeating a private).