I am still quite confused about what problem you are trying to solve here. To clear that up, please talk only about the image, and not about the systems that may display it.

An image has a size in pixels (in two directions), and each pixel has a colour, usually in 3 or 4 channels (normally RGB plus an A channel for opacity). Each channel has a bit depth (the number of bits available to express, e.g., the amount of red). Further limitations may be that only a limited number of unique RGB combinations may be used (e.g. GIF allows 256 colours chosen from the 24-bit RGB space), or that the set of available RGB combinations is fixed (e.g. the system has already fixed the palette).

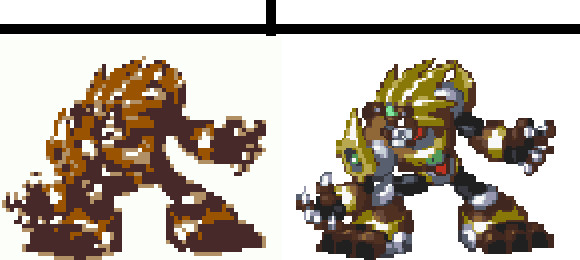

EDIT: For valid sizes, you may want to use the cropped image sizes, i.e. without the large white area that some of the examples have, as that area is irrelevant to the problem.

Since you mostly excluded reduction in colours from the question, I assume you are talking about a reduction in size. You can map several pixels from the source image onto one pixel in the destination, by blending their colours.

As an example, converting an image from 10x1 to 5x1 can be done by blending the colours of the first two pixels into the first pixel of the destination, the colours of the 3rd and 4th pixel into the 2nd pixel at the destination, and so on.
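A minimal sketch of that 10x1 to 5x1 case, assuming each pixel is a plain `(R, G, B)` tuple of 8-bit integers and that "blending" means a simple per-channel average. The function name `blend_pairs` is made up for illustration:

```python
def blend_pairs(row):
    """Halve a row of RGB pixels by averaging each adjacent pair."""
    if len(row) % 2 != 0:
        raise ValueError("row length must be even")
    out = []
    for i in range(0, len(row), 2):
        a, b = row[i], row[i + 1]
        # Average each channel of the two source pixels (integer division).
        out.append(tuple((ca + cb) // 2 for ca, cb in zip(a, b)))
    return out


# Ten pixels alternating between pure red and pure blue.
row = [(255, 0, 0), (0, 0, 255)] * 5
small = blend_pairs(row)
print(small)  # five pixels, each (127, 0, 127)
```

Every red/blue pair collapses into the same purple, which already shows how detail gets lost.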

The reduction doesn't have to be by a factor of 2 (or any whole number): if you go from 10 pixels to 6 pixels, each pixel in the destination covers 10/6 source pixels, which you can combine using a weighted average, for example. Enlarging is the same process, except that you'll have very little colour information available.
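One way to sketch the weighted average, assuming the weight of each source pixel is simply how much of it falls inside the span covered by the destination pixel. Pixels are again `(R, G, B)` tuples, and `resample_row` is a hypothetical name, not a library function:

```python
def resample_row(row, new_len):
    """Resize a row of RGB pixels to new_len by area-weighted averaging."""
    old_len = len(row)
    scale = old_len / new_len  # source pixels covered by one destination pixel
    out = []
    for j in range(new_len):
        # Span of the source row that destination pixel j covers.
        start = j * old_len / new_len
        end = (j + 1) * old_len / new_len
        totals = [0.0, 0.0, 0.0]
        i = int(start)
        while i < end and i < old_len:
            # Overlap between source pixel [i, i + 1) and the span [start, end).
            weight = min(end, i + 1) - max(start, i)
            for c in range(3):
                totals[c] += row[i][c] * weight
            i += 1
        out.append(tuple(round(t / scale) for t in totals))
    return out


# A 10-pixel grey ramp (0, 10, ..., 90) reduced to 6 pixels.
ramp = [(v, v, v) for v in range(0, 100, 10)]
print(resample_row(ramp, 6))
```

With `new_len` larger than `len(row)` the same loop enlarges the row, but each destination pixel then just repeats or mixes its nearest source pixels, so no new detail appears.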

None of these automated techniques will give you good-quality images. The computer doesn't understand that a line may be more important than a filled area next to it, for example. In any case, you'll need to touch up, or even redraw, the images if you want good quality.