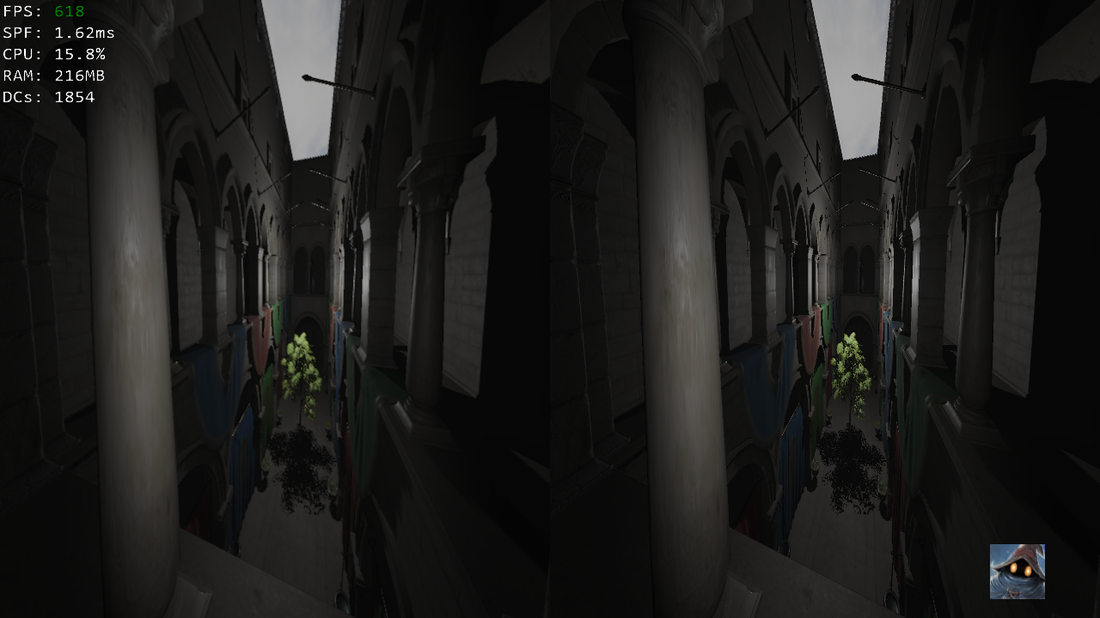

Looks nice.

But if my suggestion removes all blur, doesn't that imply that `blur_factor_i` is too small to have an effect in either case?

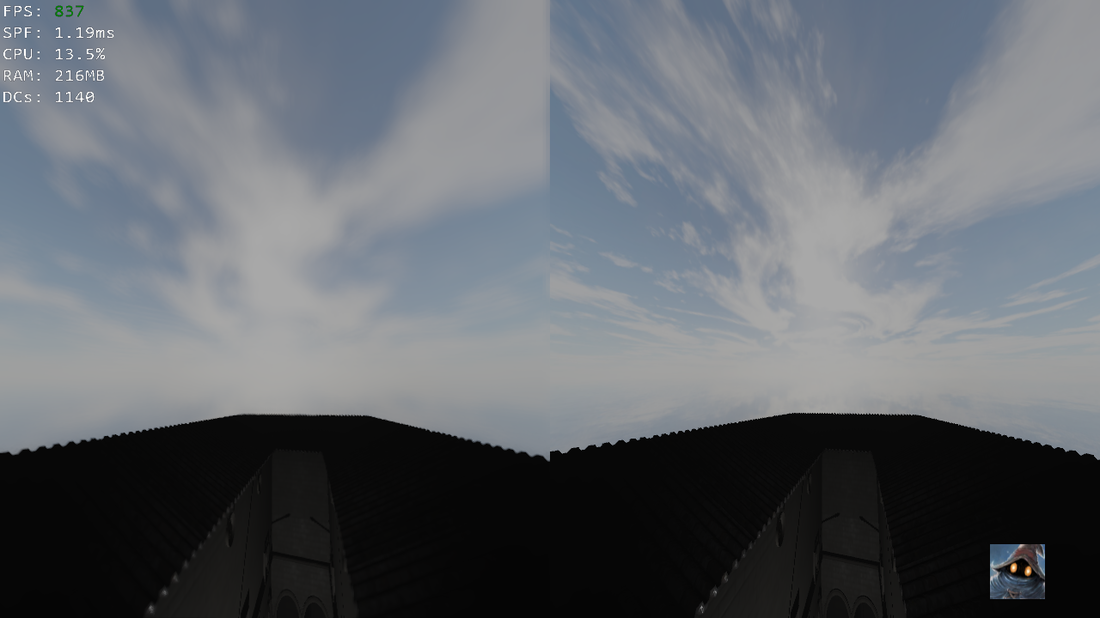

The artefact I'd want to avoid does not show up in your shots. (It was very noticeable in old games that did not read the depth buffer.) Maybe in the middle screenshot the sky should receive less darkness from the roof edge; not sampling from nearer pixels would avoid that.
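To make the "skip nearer pixels" idea concrete, here is a rough CPU-side sketch in Python rather than shader code. It is not your implementation: the function name `depth_aware_blur`, the 1D box kernel, and the `depth_bias` parameter are all made up for illustration, and I'm assuming larger depth values mean farther from the camera.

```python
def depth_aware_blur(color, depth, radius, depth_bias=0.0):
    """Box-blur a 1D row of pixels, skipping samples nearer than the center.

    Hypothetical helper for illustration only. Assumes larger depth means
    farther away and depth_bias >= 0.
    """
    n = len(color)
    out = []
    for i in range(n):
        total = 0.0
        weight = 0.0
        lo = max(0, i - radius)
        hi = min(n, i + radius + 1)
        for j in range(lo, hi):
            # Reject samples that are closer to the camera than the center
            # pixel, so a near roof edge cannot darken the far sky.
            if depth[j] < depth[i] - depth_bias:
                continue
            total += color[j]
            weight += 1.0
        # The center sample always passes the test, so weight >= 1.
        out.append(total / weight)
    return out


# Dark roof (near) next to bright sky (far).
color = [0.0, 0.0, 1.0, 1.0]
depth = [0.1, 0.1, 1.0, 1.0]
result = depth_aware_blur(color, depth, radius=1)
```

With this input the two sky pixels keep their full brightness, because the roof samples are rejected for them. The roof pixel next to the edge still picks up some sky, since farther samples are accepted; whether that is wanted depends on the effect.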

Another idea is to use the blurred mips from bloom: fewer samples, and less noise at the flag poles.