@frob I think he's asking about what integer type to use in general, not whether the overloading in the example is portable (and since the functions have different names, there is no issue with overloading there).

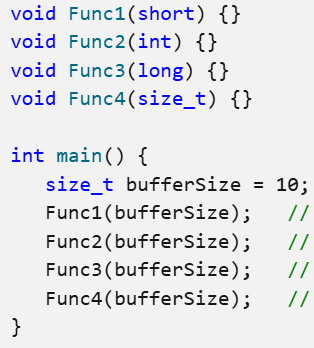

There are differing opinions on using size_t vs. int vs. other types. Some people say to always use int for uniformity, but I don't find those arguments convincing. Using int has a couple of advantages but several significant drawbacks:

- Pros:

  - Can represent negative values. However, a negative value is often a bug, since most integers represent a count or an index, which cannot be negative.

  - Some operations are faster with int than with unsigned types like size_t, at least on x86 CPUs. For example, converting a float to a 32-bit int is a single instruction, while converting to an unsigned 64-bit type (size_t/uint64_t) takes several instructions unless AVX-512 is available.

- Cons:

  - Only half the positive range of an unsigned type of the same width, because one bit encodes the sign.

  - The size varies by platform. It might be 16, 32 or 64 bits.

  - If used to represent an index or count, bounds checking requires checking both ≥0 and <N, rather than just <N as with unsigned types.

  - The standard library containers use size_t for sizes and indices. If you don't use size_t as well, you will get countless compiler warnings about type conversions and sign mismatches. Don't silence these warnings; they indicate potential bugs.
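A minimal C++ sketch of the bounds-checking and container points above. The function names `in_bounds_int`, `in_bounds_size` and `sum` are hypothetical, chosen only for illustration. One detail worth noting: a negative value converted to size_t wraps around to a very large number, so the single `< N` check still rejects it.

```cpp
#include <cstddef>
#include <vector>

// Signed index: both ends of the range must be tested.
// The cast to size_t is safe only because i < 0 was ruled out first.
bool in_bounds_int(int i, const std::vector<double>& v) {
    return i >= 0 && static_cast<std::size_t>(i) < v.size();
}

// Unsigned index: one comparison suffices. A negative value that was
// converted to size_t wraps to a huge number and fails this test too.
bool in_bounds_size(std::size_t i, const std::vector<double>& v) {
    return i < v.size();
}

// A size_t loop index has the same type as v.size(), so there is no
// signed/unsigned comparison for the compiler to warn about.
double sum(const std::vector<double>& v) {
    double total = 0.0;
    for (std::size_t i = 0; i < v.size(); ++i) {
        total += v[i];
    }
    return total;
}
```

Writing the loop as `for (int i = 0; i < v.size(); ++i)` instead is exactly what triggers sign-comparison warnings such as GCC/Clang's `-Wsign-compare`.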

I prefer to use size_t almost everywhere. Its benefits outweigh its drawbacks:

- Pros:

  - On mainstream platforms, size_t is as big as a pointer and unsigned, so you have access to the full range of values supported by the hardware.

  - The size varies by platform, but that's actually good for portability: you always get the largest type the hardware can manipulate efficiently.

  - No need to check for values <0 when doing bounds checking.

  - Some operations (e.g. load effective address) can be faster if the value size matches the pointer size. With 32-bit int on x64 platforms, I've seen an extra leaq instruction emitted that wasn't there with size_t; this seems to happen when loop counters are int or uint32_t, but not with size_t.

  - Good compatibility with standard library containers.

- Cons:

  - On 64-bit platforms, 8 bytes per value is overkill most of the time. This increases the size of data structures, which might cause more cache misses in some situations (though that's unlikely to be a real issue most of the time).

  - Potential for underflow (wraparound) when subtracting. However, this is avoidable in most cases: you should already have checked elsewhere that A ≥ B. If you want to saturate at 0, compute max(A,B) - B rather than max(A-B,0), because when A < B, A-B wraps around to a huge value and max(A-B,0) never clamps.
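To make the saturation trick concrete, here is a small sketch comparing the two formulas. `saturating_sub` and `saturating_sub_wrong` are hypothetical helper names, not a library API.

```cpp
#include <algorithm>
#include <cstddef>

// Broken: if a < b, a - b wraps modulo 2^N to a huge value,
// so std::max never sees anything "below zero" to clamp.
std::size_t saturating_sub_wrong(std::size_t a, std::size_t b) {
    return std::max(a - b, std::size_t{0});
}

// Correct: std::max(a, b) >= b always holds, so the subtraction cannot wrap.
// Yields a - b when a >= b, and 0 otherwise.
std::size_t saturating_sub(std::size_t a, std::size_t b) {
    return std::max(a, b) - b;
}
```

For example, `saturating_sub(3, 5)` yields 0, while `saturating_sub_wrong(3, 5)` yields `SIZE_MAX - 1`.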

My general advice is to use size_t most of the time. The only cases where I use fixed-width types (uint32_t, uint64_t) are when I need a specific integer size, such as for serialization. I almost never use signed integer types, except when the value represents something that can be negative (e.g. an int64_t offset from the current file position). There are only a handful of places in my codebase (over a million LOC) where I use plain “int”, mostly for faster float <-> int conversions where I don't mind the limited range. Even there I actually use int32_t instead of int, in case int is not 32 bits. So I can safely say there is little good reason to use int.
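A rough sketch of the type choices described above, under assumptions of my own: the serialized format is little-endian, float inputs are already known to fit in int32_t, and file positions fit in int64_t. All function names are hypothetical.

```cpp
#include <cstdint>
#include <vector>

// Serialization needs an exact width, so use uint32_t rather than size_t
// (whose width differs between 32- and 64-bit builds).
// Little-endian byte order is an assumption of this sketch.
void append_u32_le(std::vector<std::uint8_t>& out, std::uint32_t value) {
    for (unsigned shift = 0; shift < 32; shift += 8) {
        out.push_back(static_cast<std::uint8_t>(value >> shift));
    }
}

// Inverse of append_u32_le: reads 4 bytes starting at p.
std::uint32_t read_u32_le(const std::uint8_t* p) {
    return static_cast<std::uint32_t>(p[0]) |
           (static_cast<std::uint32_t>(p[1]) << 8) |
           (static_cast<std::uint32_t>(p[2]) << 16) |
           (static_cast<std::uint32_t>(p[3]) << 24);
}

// Float-to-integer conversion via int32_t, not int, so the width is
// 32 bits even where int is not. Truncates toward zero; the caller must
// ensure f is within int32_t's range, otherwise behavior is undefined.
std::int32_t truncate_to_i32(float f) {
    return static_cast<std::int32_t>(f);
}

// A value that can legitimately be negative, such as an offset relative
// to the current file position, gets a signed fixed-width type.
// Assumes both positions fit in int64_t.
std::int64_t relative_offset(std::uint64_t current, std::uint64_t target) {
    return static_cast<std::int64_t>(target) - static_cast<std::int64_t>(current);
}
```

Everything else in such a codebase (counts, indices, sizes) would stay size_t.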