Also understand that each level of a mipmap chain is downsampled from the one above it, so fidelity decreases with every level. A 1920x1080 texture of SAT samples contains more data than a texture half the size. Every algorithm is a trade-off between memory, speed and visual fidelity.

I saw this link. It's still an optional checkbox, so like I said making everything work for every situation isn't really ideal. If you are working on a demo make a demo. If you are working on a game make a game. An engine to support infinite games, is just not worth your time on your own.

MegaLights in Unreal Engine | Unreal Engine 5.5 Documentation | Epic Developer Community

Sampling shadow maps

NBA2K, Madden, Maneater, Killing Floor, Sims

dpadam450 said:

An engine to support infinite games, is just not worth your time on your own.

To be clear, i'm working on a realtime GI system, and i hope to sell it to the games industry.

I may be a bit late with that. If the plan fails, i'll make a custom engine and game eventually.

So currently i need to make a demo to show what it can do, how fast it is, open world support, tools, etc.

Problem is i lack gfx experience with Vulkan. I'm only good with compute.

It took me 4 days to figure out how to download the depth buffer of the shadow map to CPU. <: )

JoeJ said:

And assuming we still need to keep the original data as well, mips need less memory than SAT. (+33% vs +100%)
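The +33% figure follows from the geometric series 1/4 + 1/16 + … which approaches 1/3, because every mip level has a quarter of the texels of the level above it. A SAT has the same resolution as the source, so keeping both doubles the texel count (this assumes the same bytes per texel for both, which a real SAT may not satisfy). A minimal sketch with hypothetical helper names:

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Texels in all mip levels below level 0. Each level halves both
// dimensions (rounding down, never below 1).
std::uint64_t mipChainExtraTexels(std::uint32_t width, std::uint32_t height) {
    assert(width > 0 && height > 0);
    std::uint64_t extra = 0;
    while (width > 1 || height > 1) {
        width = std::max<std::uint32_t>(1, width / 2);
        height = std::max<std::uint32_t>(1, height / 2);
        extra += static_cast<std::uint64_t>(width) * height;
    }
    return extra;
}

// Extra storage of a full mip chain, relative to the base level.
double mipOverhead(std::uint32_t width, std::uint32_t height) {
    const double base = static_cast<double>(width) * height;
    return static_cast<double>(mipChainExtraTexels(width, height)) / base;
}

// Extra storage of a SAT kept next to the original: one full-size copy.
// Assumption: same texel size as the source.
double satOverhead() { return 1.0; }
```

For a 1024x1024 base level, `mipOverhead` comes out just under one third, against `satOverhead() == 1.0`.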

The general idea behind SAT was using it together with VSM, which originally required either an RG16F or, better, an RG32F format (16-bit for VSM wasn't that great tbh). Therefore, without any additional storage, you could avoid mipmaps and get large filter kernels (although just a box filter, so…).
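To illustrate the box filter mentioned here: a summed-area table returns the mean over any axis-aligned rectangle with four lookups, regardless of the rectangle's size. The sketch below is a CPU-side illustration with hypothetical names, not code from this thread. For VSM one would build one table for depth and one for depth squared. It accumulates in `double` to sidestep the precision loss that makes 16-bit formats problematic.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct SummedAreaTable {
    std::size_t width;
    std::size_t height;
    // (width + 1) x (height + 1) entries; the first row and column stay zero
    // so rectangle queries need no special case at the border.
    std::vector<double> sums;

    SummedAreaTable(const std::vector<float>& src, std::size_t w, std::size_t h)
        : width(w), height(h), sums((w + 1) * (h + 1), 0.0) {
        assert(src.size() == w * h);
        const std::size_t stride = w + 1;
        for (std::size_t y = 0; y < h; ++y) {
            for (std::size_t x = 0; x < w; ++x) {
                sums[(y + 1) * stride + (x + 1)] =
                    static_cast<double>(src[y * w + x])
                    + sums[y * stride + (x + 1)]
                    + sums[(y + 1) * stride + x]
                    - sums[y * stride + x];
            }
        }
    }

    // Mean over the inclusive texel rectangle [x0, x1] x [y0, y1].
    double boxMean(std::size_t x0, std::size_t y0,
                   std::size_t x1, std::size_t y1) const {
        assert(x0 <= x1 && x1 < width && y0 <= y1 && y1 < height);
        const std::size_t stride = width + 1;
        const double total = sums[(y1 + 1) * stride + (x1 + 1)]
                           - sums[y0 * stride + (x1 + 1)]
                           - sums[(y1 + 1) * stride + x0]
                           + sums[y0 * stride + x0];
        const double count = static_cast<double>((x1 - x0 + 1) * (y1 - y0 + 1));
        return total / count;
    }
};
```

The cost of `boxMean` is constant in the kernel size, which is the appeal over mipmaps; the price is that the kernel is always a box.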

VSM has problem with light leaks, and that's really non-solvable (because darkening ends up in worse quality shadows).
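A small worked case shows where the leak comes from. Assume (purely for illustration) a filter footprint that is half covered by an occluder at depth 1 and half by one at depth 5. The mean is 3, the mean of squares is 13, so the variance is 4. A receiver at depth 6 lies behind both occluders and should be fully shadowed, yet the Chebyshev bound still reports about 31% light. The function name below is hypothetical:

```cpp
#include <algorithm>
#include <cassert>

// Standard VSM visibility estimate: Chebyshev's one-sided upper bound on the
// fraction of the filter footprint that is not closer than the receiver.
double vsmLit(double mean, double meanSq, double receiverDepth) {
    assert(meanSq >= 0.0);
    if (receiverDepth <= mean) {
        return 1.0; // receiver is in front of the mean occluder depth
    }
    const double variance = std::max(meanSq - mean * mean, 0.0);
    const double d = receiverDepth - mean; // > 0 here, so no division by zero
    return variance / (variance + d * d);
}
```

With the numbers above, `vsmLit(3.0, 13.0, 6.0)` evaluates to 4 / 13, roughly 0.31, although the true visibility is 0.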

My current blog on programming, linux and stuff - http://gameprogrammerdiary.blogspot.com

Vilem Otte said:

VSM has problem with light leaks, and that's really non-solvable (because darkening ends up in worse quality shadows).

Does ‘worse quality’ mean that the solution does not work? It does.

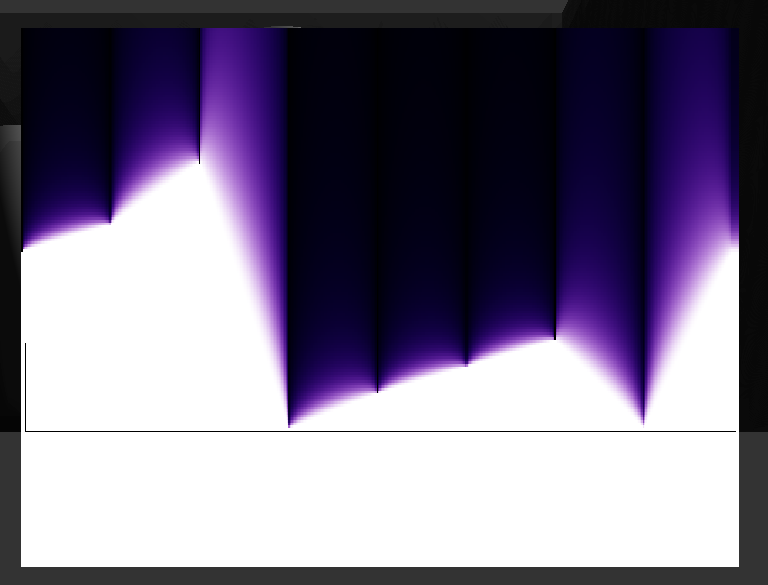

Here is a graph of original VSM:

Any slope causes a leak to infinity.

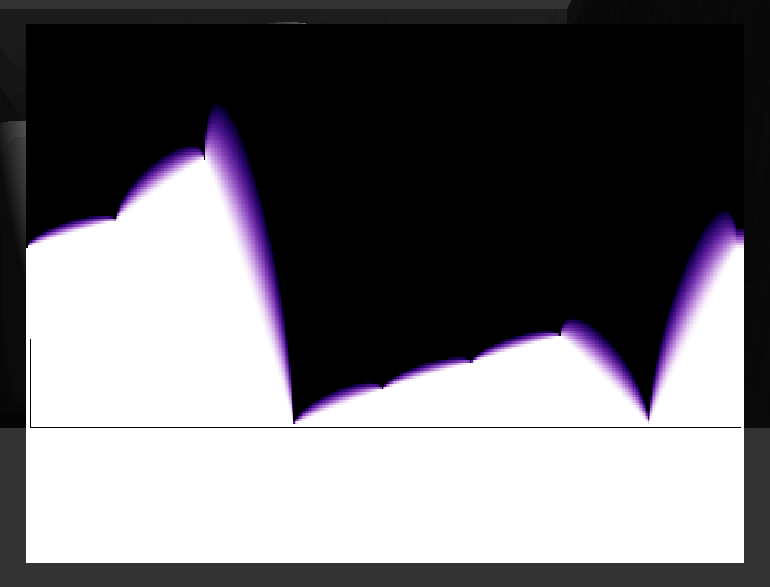

Applying a fix:

Now the signal still looks the same near the surface, and the leaks are clipped inside the occluders, ideally.

So the leaks are fixed. Here is the magic code:

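```cpp
// Prefiltered first and second depth moments at the chosen mip level.
float d1 = Sample(dist[mip], u);   // dist[i&7] * (1-f) + dist[(i+1)&7] * f;
float d2 = Sample(distSq[mip], u); // distSq[i&7] * (1-f) + distSq[(i+1)&7] * f;

float E_x2 = d2;      // E[x^2]
float Ex_2 = d1 * d1; // E[x]^2
float variance = E_x2 - Ex_2;
variance *= sclaVariance; // user-tweakable variance scale

float mD = d1 - dSample; // negative when the receiver is behind the mean depth
float mD_2 = mD * mD;

// Chebyshev upper bound, forced to 1 in front of the mean depth.
float p = variance / (variance + mD_2);
float lit = std::max(p, dSample <= d1 ? 1.f : -0.f);

float fixLeak = dbgF1; // 1.0
if (fixLeak > 0.f && mD < 0.f)
{
    // Fade the light out towards one (scaled) standard deviation behind the mean.
    // Note: lit becomes negative once |mD| > sv, so clamp before use if needed.
    float sv = sqrt(variance) * fixLeak;
    float k = mD / (sv);
    lit *= 1.f - k*k;
}
```

However, you can just multiply your shadow by 2 for the exact same result without my fancy math. :D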
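The "multiply by 2" remark can be checked algebraically. With `fixLeak = 1`, no clamping, and p = variance / (variance + mD²), the leak fix gives p · (1 − mD² / variance) = (variance − mD²) / (variance + mD²), and doubling the shadow term gives 1 − 2 · (1 − p) = 2p − 1 = (variance − mD²) / (variance + mD²). A minimal numeric check, with hypothetical function names and valid only for the `mD < 0` branch:

```cpp
#include <cassert>

// Chebyshev term p from the snippet above.
double chebyshev(double variance, double mD) {
    assert(variance > 0.0);
    return variance / (variance + mD * mD);
}

// Leak fix with fixLeak = 1: lit = p * (1 - k*k), k = mD / sqrt(variance).
double litWithLeakFix(double variance, double mD) {
    const double kSquared = (mD * mD) / variance;
    return chebyshev(variance, mD) * (1.0 - kSquared);
}

// Same result obtained by doubling the shadow term (1 - p).
double litWithDoubledShadow(double variance, double mD) {
    const double shadow = 1.0 - chebyshev(variance, mD);
    return 1.0 - 2.0 * shadow;
}
```

For variance 4 and mD = −1 both functions return 0.6; both reach 0 at exactly one standard deviation (mD² = variance) and go negative beyond it.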

But still, after that i think about it a bit differently: VSM leaks can be fixed pretty robustly, and for hard shadows the quality loss is a matter of personal taste.

But for me it's useless, since the loss cuts away one half of the filter in practice, so we can't composite soft shadows from multiple mips (or SAT). They only become less detailed, but not soft.

So in the end i agree. But at least i've learned some things from VSM. It's really cool how this works without a given slope.

The idea i currently have is this:

Prefiltered soft shadows are all about texture magnification. So we really want a higher order filter to hide the bilinear discontinuities.

And if we have so many samples ready, there should be a way to design a filter which does not leak but works for soft shadows.

But no luck so far…

How did you do your soft shadows? Saw the pot on YT.

"To be clear, i'm working on a realtime GI system, and i hope to sell it to the games industry.

I may be a bit late with that. If the plan fails, i'll make a custom engine and game eventually."

From my experiences and seeing other game engines out there, I believe there is mostly 0 chance of selling this. More and more people are using the engines that have huge backing rather than custom engines that need custom middleware. Even EA is on proprietary tech and they already had some middleware called Umbra or something for GI that has been iterated on and paid for. If you are doing it for the love of graphics programming and wanting to learn then 100% valid to pursue.

dpadam450 said:

From my experiences and seeing other game engines out there, I believe there is mostly 0 chance of selling this. More and more people are using the engines that have huge backing rather than custom engines that need custom middleware. Even EA is on proprietary tech and they already had some middleware called Umbra or something for GI that has been iterated on and paid for. If you are doing it for the love of graphics programming and wanting to learn then 100% valid to pursue.

I'd agree with this.

Unless you can create integrations for engines (either big or small, closed or open sourced - doesn't matter - but if you have GI solution integrated with one - there is a market (not a big one, but it exists)).

Offering integration to proprietary engines is … problematic at least. When given studio owns and builds their own engine - it might be worth a shot, but integration may be a bit hard to do … but other studios also use proprietary tech developed by someone else (which makes it a lot more complicated).

Middleware tech decreased a lot in recent years (large parts of it were simply bought by Epic, Unity Technologies, etc.) … along with that, use of proprietary engines decreased (it isn't down to 0, but a lot of studios went to UE/Unity due to pricing). Industrial customers already learned the hard way - that this was terribly bad move (giving monopoly to UE/Unity), as users got massively screwed in 2023 by Unity and in 2024 by Epic (who easily abused monopoly to extort money from them).

JoeJ said:

How did you do your soft shadows? Saw the pot on YT.

If I remember correctly it was just cleverly done PCSS + dithering (I did experiment with mipmaps a lot, and also with backprojection - neither of those 2 was good/cheap enough).
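For readers unfamiliar with PCSS: its core is a similar-triangles estimate of the penumbra width from the average blocker depth found in a first search pass. The sketch below shows only that standard estimate, with a hypothetical function name. It is not the implementation described in this post, and the dithering part is left out.

```cpp
#include <cassert>

// Standard PCSS penumbra estimate by similar triangles:
//   width = (receiverDepth - avgBlockerDepth) * lightSize / avgBlockerDepth
// All depths are measured from the light; lightSize is in the same units as
// the returned width (for example shadow map UV space).
double pcssPenumbraWidth(double receiverDepth, double avgBlockerDepth,
                         double lightSize) {
    assert(avgBlockerDepth > 0.0);
    assert(receiverDepth >= avgBlockerDepth);
    assert(lightSize >= 0.0);
    return (receiverDepth - avgBlockerDepth) * lightSize / avgBlockerDepth;
}
```

The returned width then sets the radius of the PCF kernel: contact shadows (receiver close to the blocker) stay sharp, and the kernel widens with distance.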

I'm currently playing a lot with RT/PT and clever (re)sampling, which has big potential to work with many lights for shadows (I think UE MegaLights does something like this). I'm even thinking about ditching shadow maps completely at some point…

I'm still mixed on shadows … I've played with many approaches so far.

- Shadow volumes

- Soft shadow volumes (Penumbra wedges)

- Shadow Maps

- Variance Shadow Maps

- Percentage-Closer-Soft-Shadows Shadow Maps

- Min-Max Shadow Maps

- Backprojection Shadow Maps

- Ray Tracing

And each has its own advantages/disadvantages. Practically only ray tracing allows for any light shape - and at the point I can do a lot of RT realtime, it is tempting to simplify the pipeline.

JoeJ said:

But still, after that i think about it a bit differently: VSM leaks can be fixed pretty robustly, and for hard shadows the quality loss is a matter of personal taste.

For hard shadows it works, the problem is, it degrades penumbra.

JoeJ said:

Prefiltered soft shadows are all about texture magnification. So we really want a higher order filter to hide the bilinear discontinuities. And if we have so many samples ready, there should be a way to design a filter which does not leak but works for soft shadows. But no luck so far…

I was trying this for a bit, but it ended up being either slow or meh. Back in the 2000s there was a presentation on Min-Max shadow maps: the shadow map can be considered to represent floating blockers, and at the various mip levels you store the min and max blocker distance.
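A minimal sketch of building one level of such a min/max pyramid, assuming even dimensions and hypothetical type and function names (the presentation's actual layout is not given here):

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct MinMax {
    float minDepth;
    float maxDepth;
};

// Builds the next coarser level: each output texel stores the min and max
// blocker depth over the 2x2 block of input texels it covers.
std::vector<MinMax> downsampleMinMax(const std::vector<MinMax>& src,
                                     std::size_t w, std::size_t h) {
    assert(w % 2 == 0 && h % 2 == 0 && src.size() == w * h);
    const std::size_t ow = w / 2;
    const std::size_t oh = h / 2;
    std::vector<MinMax> dst(ow * oh);
    for (std::size_t oy = 0; oy < oh; ++oy) {
        for (std::size_t ox = 0; ox < ow; ++ox) {
            MinMax r = src[(2 * oy) * w + 2 * ox];
            for (std::size_t dy = 0; dy < 2; ++dy) {
                for (std::size_t dx = 0; dx < 2; ++dx) {
                    const MinMax& s = src[(2 * oy + dy) * w + (2 * ox + dx)];
                    r.minDepth = std::min(r.minDepth, s.minDepth);
                    r.maxDepth = std::max(r.maxDepth, s.maxDepth);
                }
            }
            dst[oy * ow + ox] = r;
        }
    }
    return dst;
}
```

The base level is the shadow map itself with `minDepth == maxDepth`. A receiver closer than `minDepth` of a coarse texel is lit with respect to that whole region, one farther than `maxDepth` is fully shadowed, and only the in-between case needs finer levels.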

dpadam450 said:

From my experiences and seeing other game engines out there, I believe there is mostly 0 chance of selling this.

I know failure is quite likely. 10 years ago i was so far ahead, i was sure i can sell this. And right now, looking at things like Lumen or path tracing, that's still the case. But not by much anymore. Until i'm done, what i have is probably just state of the art and then i can't hope to sell it. Time will tell.

Vilem Otte said:

Middleware tech decreased a lot in recent years (large parts of it were simply bought by Epic, Unity Technologies, etc.) … along with that, use of proprietary engines decreased

Yeah, that's the other problem. The amount of potential customers is shrinking.

Vilem Otte said:

For hard shadows it works, the problem is, it degrades penumbra.

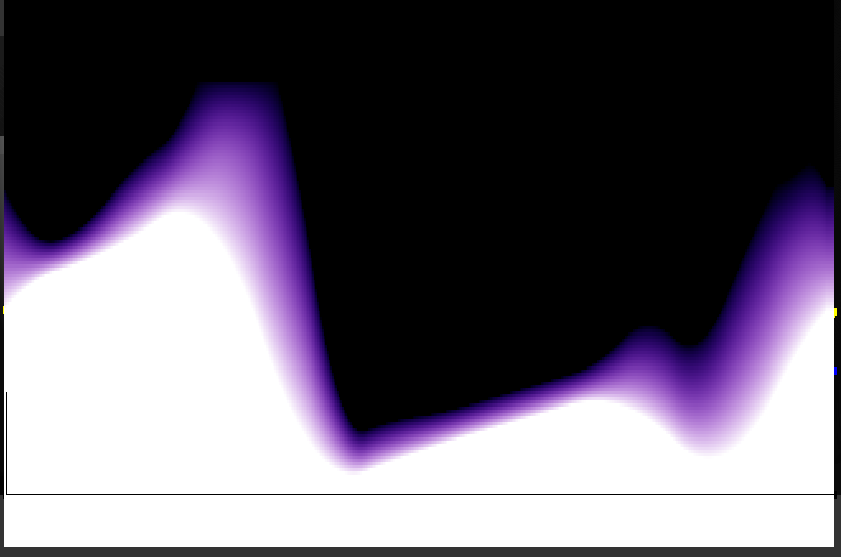

I think i have a solution which works with a 3x3 kernel, but still need to port it to 2D. Might be just too slow, though. But 3x3 is enough to calculate all required information from the given depth samples, so it's worth an attempt. Here's a plot:

Basically i get min/max from adjacency and use it to cut off the leak as seen above the peak.

To do this properly i would need 4x4 samples. Currently the cut exposes grid alignment artifacts, but ideally this will be a rare issue.

JoeJ said:

I know failure is quite likely. 10 years ago i was so far ahead, i was sure i can sell this. And right now, looking at things like Lumen or path tracing, that's still the case. But not by much anymore. Until i'm done, what i have is probably just state of the art and then i can't hope to sell it. Time will tell.

My 50c is:

- Making a game from it might be the easiest approach to sell it (even to some engine as middleware or such … you need a real world showcase and ideally a product using it; only at that point it is marketable)

- I don't want to complain here about Lumen too much … yet

- Along with pretty much every attempted approximation of GI it suffers from the same thing - not looking that good at the end of day … almost all realtime GI solutions suffer from this. Unless you do actual path tracing (too expensive) it will either fail on micro geometry, fail on macro geometry, fail on color bleeding, fail on reflections, fail on caustics, etc. Often multiple of these scenarios will look very bad compared to ground truth

- Speed is another factor - all realtime GI solutions are slow (with exception of (very high resolution or virtual) light maps … for obvious reasons, it is precomputed)

- Path tracing is feasible but to some extent … and this gets into complex answer very fast

- Speed is just not there: with A-SVGF, ReSTIR, etc. it can get usable, but it still has major problems (I've tried Cyberpunk 2077/Witcher 3 on a 7900 GRE with CD Projekt RED path tracing, and it is a slideshow … upscaling also makes the image look like sh** honestly, and it was just 1920x1080 for the test … 4K means even more problems)

- Dynamic geometry is still a massive problem (and hw rt throws a lot more problems into this) - not even talking about dynamic/cluster lod approaches, those are straight nightmare with hw rt (for compute I do have approach, but it has its problems)

The tech is still sell-able - especially if you manage to implement it into Unreal Engine or Unity and place to their stores (the market there is definitely bigger than proprietary engines). This being said, it needs to have somewhat solid marketing.

Question of the day generally is - how much of work is such integration.
