Repost from GMC:

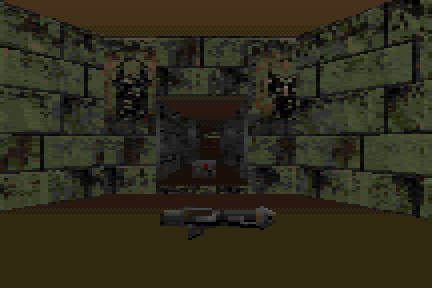

Fast, cheap, precise, and robust shadows for Doom-like 2.5D and 3D games.

I have been looking for a mathematically elegant solution to this for months, and have come up dry again and again. I was going to sell this on the marketplace for profit, but I am ready to throw in the towel at this point. We were supposed to have a robust lighting and shadow system by 2009... we are way overdue, and now we are in the dystopian timeline... GM was supposed to have this by now. So I think it's important that we find a new method rather than hoard discoveries to make some money on the marketplace.

Personally, I prefer shadow volumes, but they are extremely heavy on fillrate. Of course, more modern games just use shadowmaps instead. The problem with shadowmaps is that they only really suit geometry that is orthogonal (axis-aligned): they work for Minecraft but not for DOOM. The minute you place a wall at a diagonal angle, you get pixelation in the result. So people use cheap hacks such as blurring the shadows, but that causes a new problem: you lose the naturally sharp edges near creases, and you get light tunneling near creases.

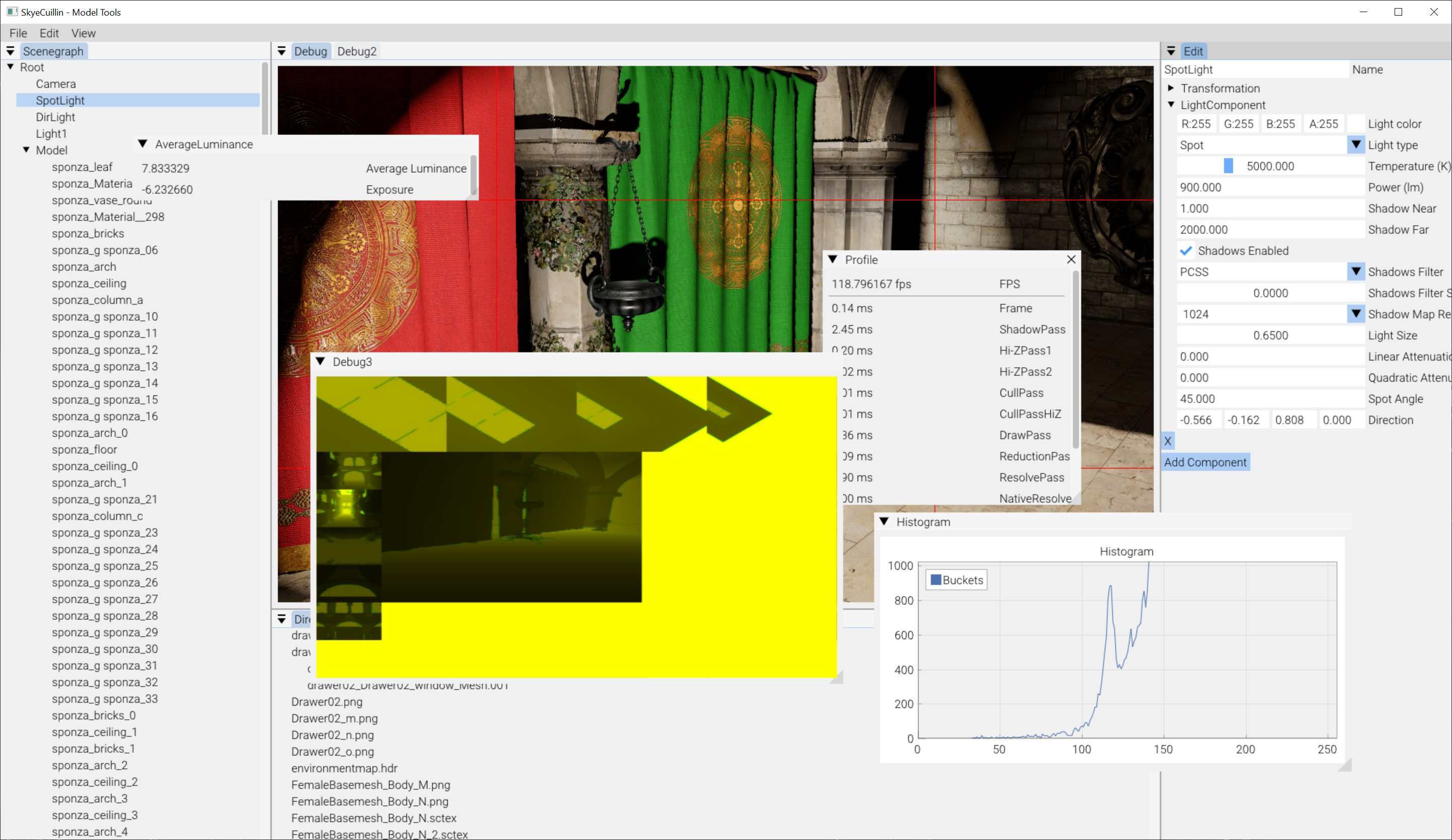

The pic on the left shows an ideal candidate for shadowmapping. The pic on the right would have pixelation due to the angled walls.
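To put a rough number on the pixelation, here is a minimal sketch of how big one shadowmap texel ends up on a wall. It assumes a point light rendered into a cubemap face with a 90-degree field of view and measures at the center of the face. The function name and the example numbers are made up for illustration, and texel stretching is only one contributor to the jagged edges, not the whole story.

```python
import math

def texel_footprint(distance, resolution, incidence_deg):
    """Approximate world-space width of one shadowmap texel on a wall.

    Assumes a cubemap face with a 90-degree field of view, measured at
    the face center. incidence_deg is the angle between the light
    direction and the wall normal (0 = light hits the wall head-on).
    """
    # A 90-degree face spans 2 * distance world units at that distance.
    head_on = 2.0 * distance / resolution
    # A tilted wall stretches the texel by 1 / cos(angle).
    return head_on / math.cos(math.radians(incidence_deg))

for angle in (0, 45, 75):
    size = texel_footprint(distance=10.0, resolution=1024, incidence_deg=angle)
    print(f"{angle:2d} degrees: {size:.4f} world units per texel")
```

The footprint grows as the light hits the wall at a more grazing angle, so a wall that is angled away from the light gets coarser shadow texels than one facing it at the same distance.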

Shadowmapping is not a robust method for indoor games. For every light you need six textures to make a cubemap, and unless the lights are very short range, you might need six 2048x2048 textures or larger. And perhaps even more perplexing, every pixel of the world mesh needs to somehow "know" which texture to sample from. So how could you have an optimized world mesh when you are limited to sampling 8 textures? You would either have to de-optimize the world mesh by dividing it into separate meshes, or create a megatexture (which would also make loading the shadowmap UVs more complicated). Then there is the problem of having to pack each shadowmap into the megatexture atlas, and creating an optimized texture atlas is difficult in and of itself.
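For a sense of scale, here is a back-of-the-envelope sketch of the memory cost and of the UV remapping an atlas would need. It assumes 4 bytes per texel (a 32-bit depth value) and a simple grid atlas with equal-sized tiles; both function names and the tile layout are hypothetical, not anything GM provides.

```python
def cubemap_bytes(face_size, bytes_per_texel=4, faces=6):
    """Memory for one cubemap shadowmap, ignoring mipmaps and padding."""
    return faces * face_size * face_size * bytes_per_texel

def atlas_uv(tile_index, tiles_per_row, u, v):
    """Map a face-local (u, v) in [0, 1] into a square grid atlas.

    Hypothetical layout: equal-sized tiles, numbered left to right,
    then top to bottom.
    """
    col = tile_index % tiles_per_row
    row = tile_index // tiles_per_row
    scale = 1.0 / tiles_per_row
    return ((col + u) * scale, (row + v) * scale)

MIB = 1024 * 1024
for lights in (1, 4, 16):
    total = lights * cubemap_bytes(2048)
    print(f"{lights:2d} lights: {total / MIB:.0f} MiB")

# Light 1, face 2 lands in tile 1 * 6 + 2 = 8 of an 8x8 atlas.
print(atlas_uv(tile_index=8, tiles_per_row=8, u=0.5, v=0.5))
```

Under those assumptions a single 2048x2048 cubemap is 96 MiB, so even a handful of lights gets expensive, and every lookup has to carry a tile index on top of the usual UVs.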

On top of that, shadowmaps often do not produce very good results. For instance, I played the new Mario Party game (it was either for Switch or Wii U). Even though it was a next-gen game, and top-down, the shadowmaps looked terrible, like a graphical downgrade from previous games. So some people immediately prefer ray-traced gfx, but ray-tracing is out of the question, since most people do not have ray-tracing GPUs anyway.

So I was thinking that if you had a game with an overall DOOM style, you could mathematically exploit this to somehow create optimized shadows, because DOOM's graphics are almost orthogonal in a way, only with angled walls. Maybe someone better at math than me could figure this out.
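As a starting point for discussion, here is one possible reading of "almost orthogonal": if every wall is a vertical, full-height extrusion of a 2D line segment, then whether a wall blocks a light can be tested in plan view with plain 2D segment intersection. This is only a sketch under that assumption (no floor height changes, no partial-height walls), not a finished method, and all the names in it are made up.

```python
def cross(ax, ay, bx, by):
    """2D cross product (z component of the 3D cross product)."""
    return ax * by - ay * bx

def segments_intersect(p, q, a, b):
    """True if segment p-q properly crosses segment a-b (2D, plan view)."""
    # Which side of the wall line a-b do p and q lie on?
    d1 = cross(b[0] - a[0], b[1] - a[1], p[0] - a[0], p[1] - a[1])
    d2 = cross(b[0] - a[0], b[1] - a[1], q[0] - a[0], q[1] - a[1])
    # Which side of the light ray p-q do a and b lie on?
    d3 = cross(q[0] - p[0], q[1] - p[1], a[0] - p[0], a[1] - p[1])
    d4 = cross(q[0] - p[0], q[1] - p[1], b[0] - p[0], b[1] - p[1])
    # Opposite signs on both tests means the segments cross.
    return d1 * d2 < 0 and d3 * d4 < 0

def in_shadow(light, point, walls):
    """True if any full-height wall blocks the line from light to point."""
    return any(segments_intersect(light, point, a, b) for a, b in walls)

walls = [((2.0, -1.0), (4.0, 1.0))]  # one diagonal wall, as (start, end)
light = (0.0, 0.0)
print(in_shadow(light, (6.0, 0.0), walls))  # point behind the wall
print(in_shadow(light, (0.0, 5.0), walls))  # point off to the side
```

Because the test works on the wall segments directly, a diagonal wall is no harder than an axis-aligned one and there is no texel grid to pixelate. The catch is that this version checks every wall for every point, so it would still need some way to cut down the number of walls tested per light.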