Hi,

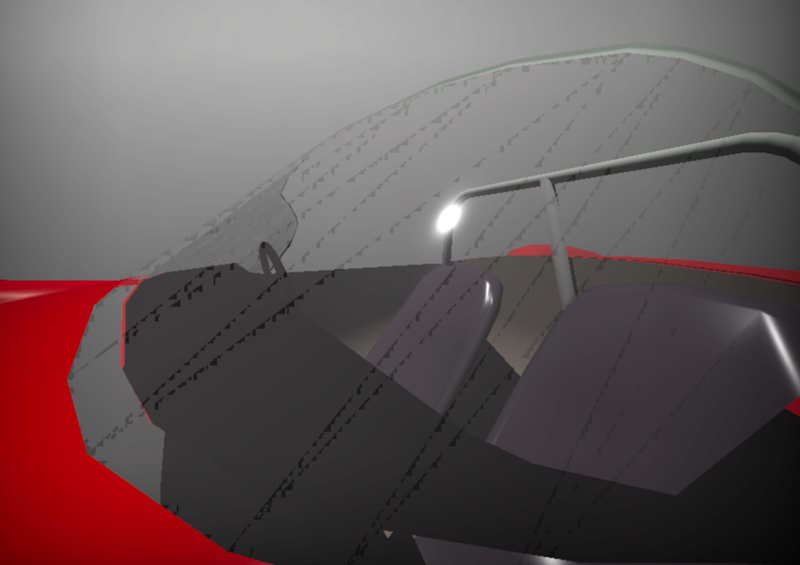

I'm not sure what I'm doing wrong. I'm trying to reduce the number of interlocked operations in a pixel shader by using wave operations. This is the classic per-pixel linked-list order-independent transparency (OIT) algorithm combined with the classic wave-intrinsic example of aggregating atomics: one `InterlockedAdd` per wave instead of one per pixel.

Here's the code:

```hlsl
void addOITSample(uint2 coord,
                  RWStructuredBuffer<uint> oit_rw_grid,
                  RWStructuredBuffer<uint> oit_rw_samples,
                  OITParameters oit_parameters,
                  float3 color,
                  float transmittance,
                  float depth)
{
    // Allocate sample
#if false
    // Wave-aggregated allocation: one InterlockedAdd per wave
    uint active_offset = WavePrefixCountBits(true);
    uint active_count = WaveActiveCountBits(true);
    uint sample_index;
    if (WaveIsFirstLane())
        InterlockedAdd(oit_rw_samples[0], active_count, sample_index);
    sample_index = WaveReadLaneFirst(sample_index);
    sample_index += active_offset;
#else
    // Per-lane allocation: one InterlockedAdd per pixel
    uint sample_index;
    InterlockedAdd(oit_rw_samples[0], 1, sample_index);
#endif

    // If allocation succeeded
    if (sample_index < oit_parameters.sample_count)
    {
        // Add sample to the head of this pixel's list
        uint list_index = coord.x + coord.y * oit_parameters.width;
        uint next_pointer;
        InterlockedExchange(oit_rw_grid[list_index], sample_index, next_pointer);

        // Output sample (3 uints per sample, after the counter at index 0)
        oit_rw_samples[1 + sample_index * 3 + 0] = packUFloat(depth, 24, 8) | packUFloat(transmittance, 8, 0);
        oit_rw_samples[1 + sample_index * 3 + 1] = float3_to_r11g11b10(color);
        oit_rw_samples[1 + sample_index * 3 + 2] = next_pointer;
    }
}
```

If I enable the wave-intrinsic version of the sample allocation, I get wrong visual results and eventually a GPU crash. Any idea why?
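For comparison, here is a sketch of the same wave-aggregated allocation factored into a helper. It is a hypothesis to rule out, not a confirmed diagnosis: in a pixel shader, UAV side effects from helper lanes are discarded, and depending on shader model and driver, helper lanes may take part in wave operations, so `WaveIsFirstLane()` could in principle elect a lane whose `InterlockedAdd` never happens. The sketch assumes Shader Model 6.6 or later for `IsHelperLane()`; the name `allocateSampleWaveAggregated` is made up for illustration.

```hlsl
// Sketch only: wave-aggregated sample allocation that never issues the
// atomic from a helper lane. Assumes Shader Model 6.6+ for IsHelperLane().
// allocateSampleWaveAggregated is a hypothetical name, not from the post.
// The counter lives at oit_rw_samples[0], as in addOITSample.
// Returns 0xFFFFFFFF for lanes that get no slot.
uint allocateSampleWaveAggregated(RWStructuredBuffer<uint> oit_rw_samples)
{
    // Only real (non-helper) lanes should consume a sample slot.
    bool wants_slot = !IsHelperLane();

    // Rank of this lane among the lanes that want a slot, and their total.
    uint lane_offset = WavePrefixCountBits(wants_slot);
    uint slot_count  = WaveActiveCountBits(wants_slot);

    // Wave-uniform early out: nothing to allocate.
    if (slot_count == 0)
        return 0xFFFFFFFFu;

    // Elect the lowest-indexed lane that wants a slot as the leader.
    uint leader = WaveActiveMin(wants_slot ? WaveGetLaneIndex() : 0xFFFFFFFFu);

    // One InterlockedAdd per wave, issued by the leader only.
    uint base = 0;
    if (WaveGetLaneIndex() == leader)
        InterlockedAdd(oit_rw_samples[0], slot_count, base);

    // Broadcast the leader's pre-add counter value to every lane.
    base = WaveReadLaneAt(base, leader);

    return wants_slot ? base + lane_offset : 0xFFFFFFFFu;
}
```

With this helper, the whole `#if` block in `addOITSample` would reduce to `uint sample_index = allocateSampleWaveAggregated(oit_rw_samples);`. Lanes that receive `0xFFFFFFFF` simply fail the existing `sample_index < oit_parameters.sample_count` check, so no list is touched for them.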