taby said:

I just stuff it all into a vector of vertices and draw those.

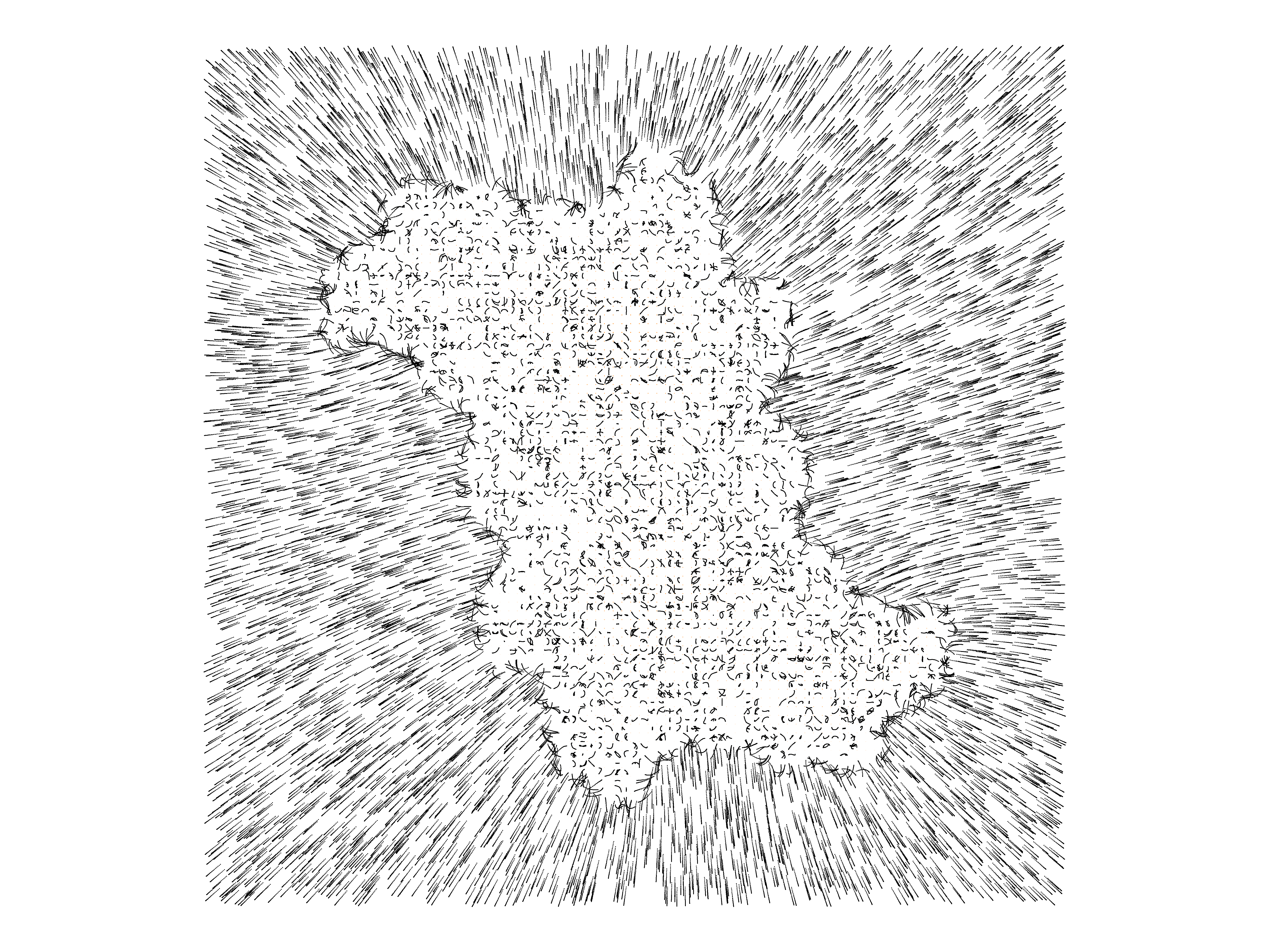

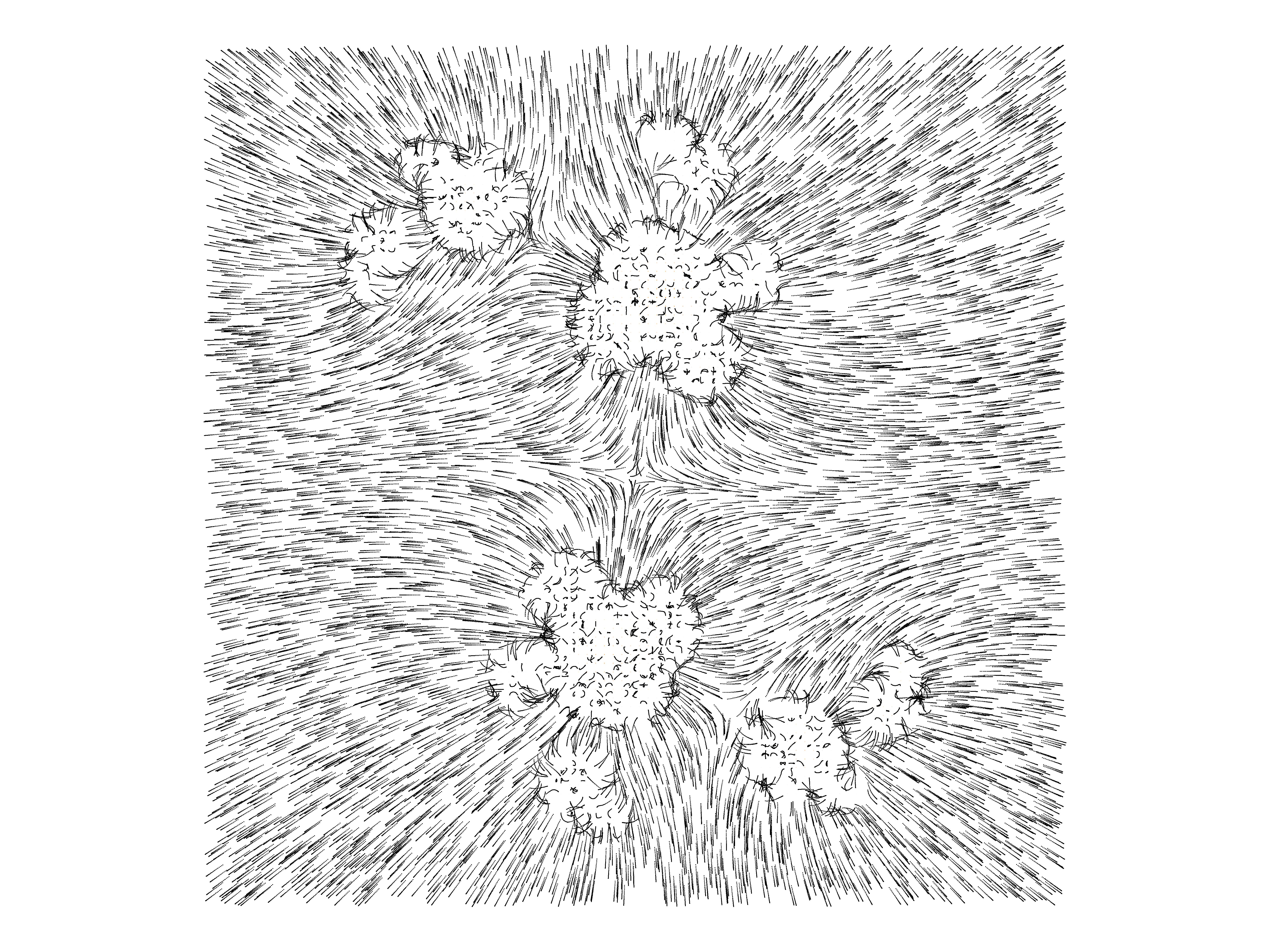

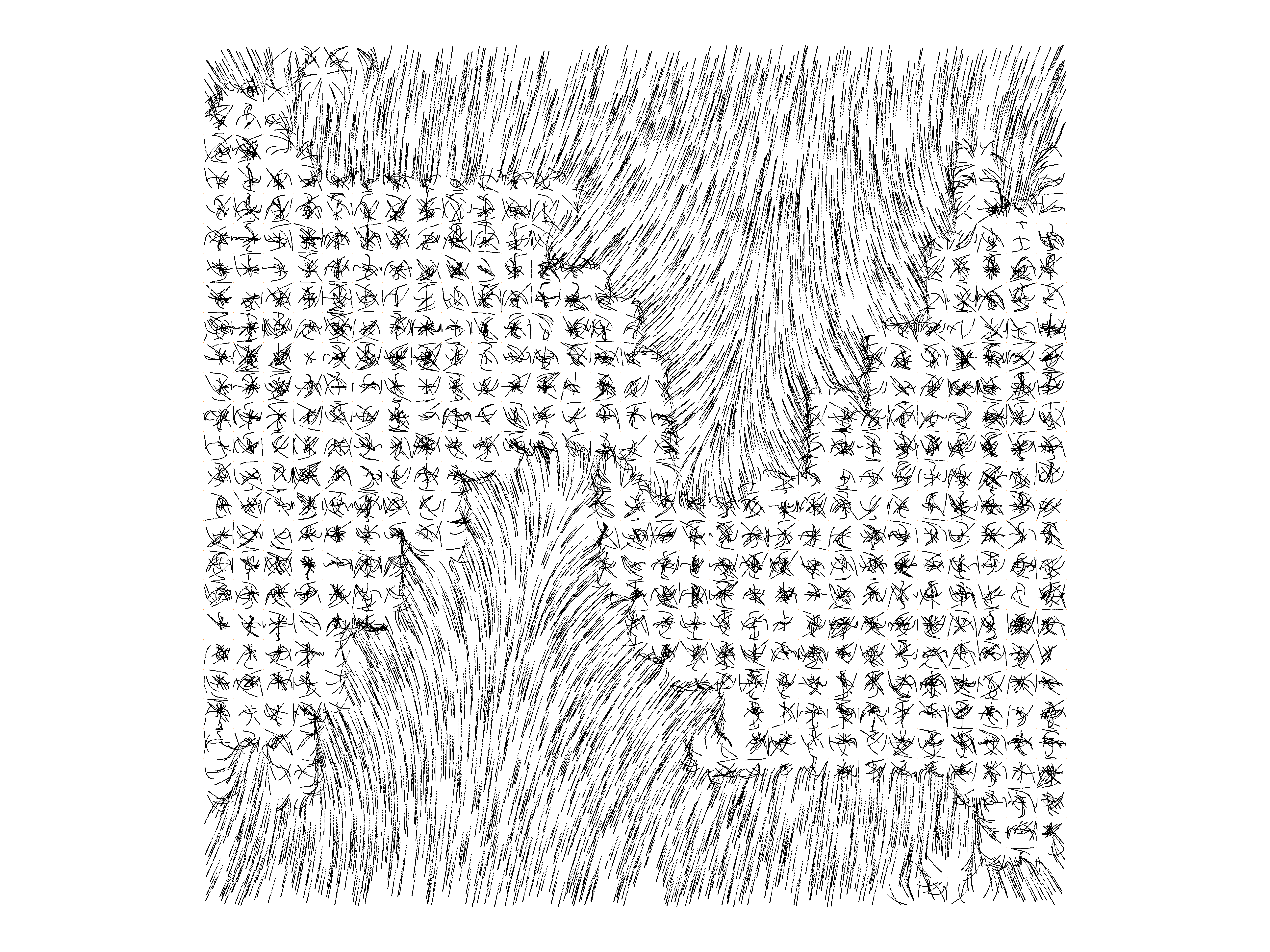

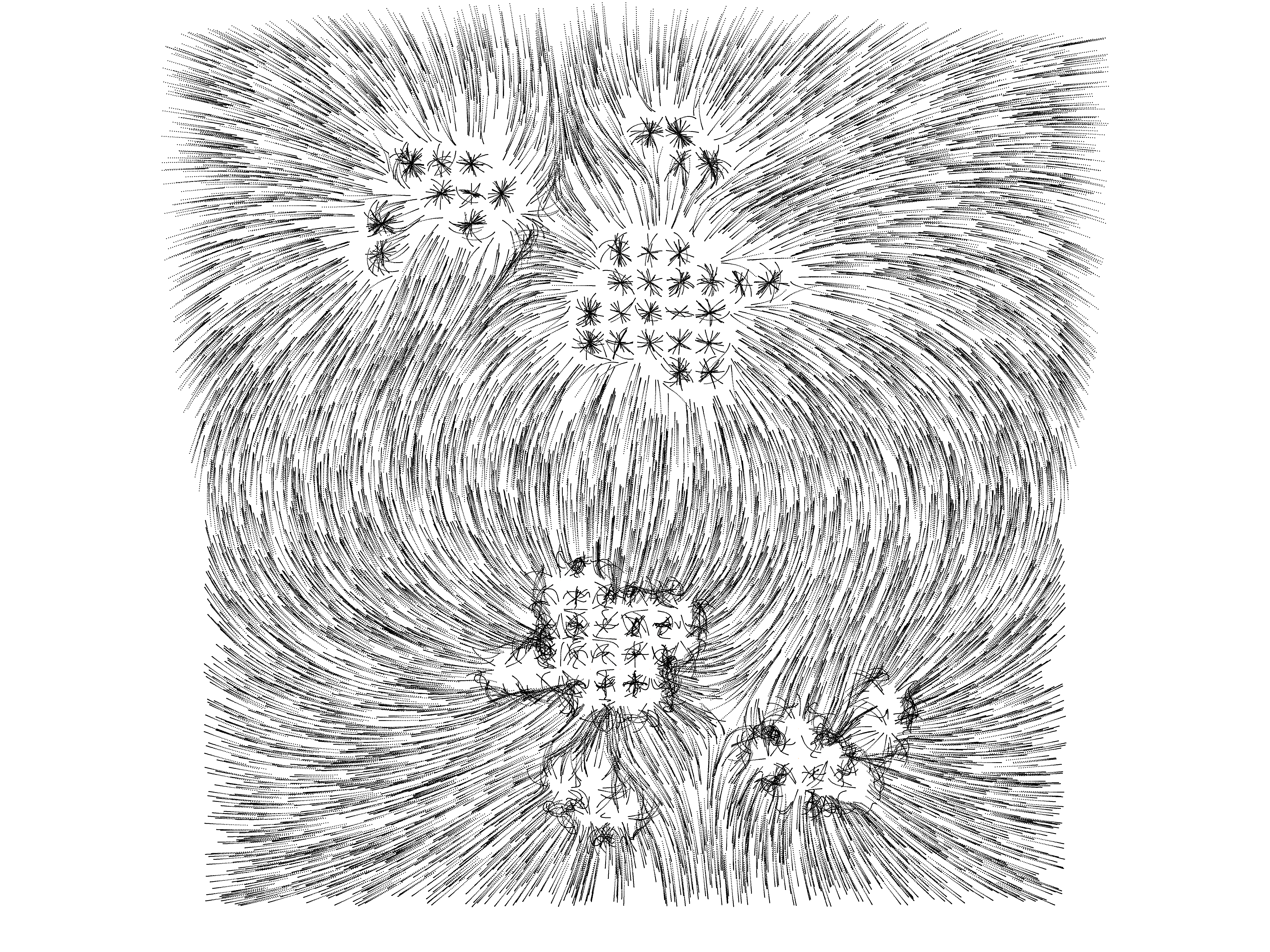

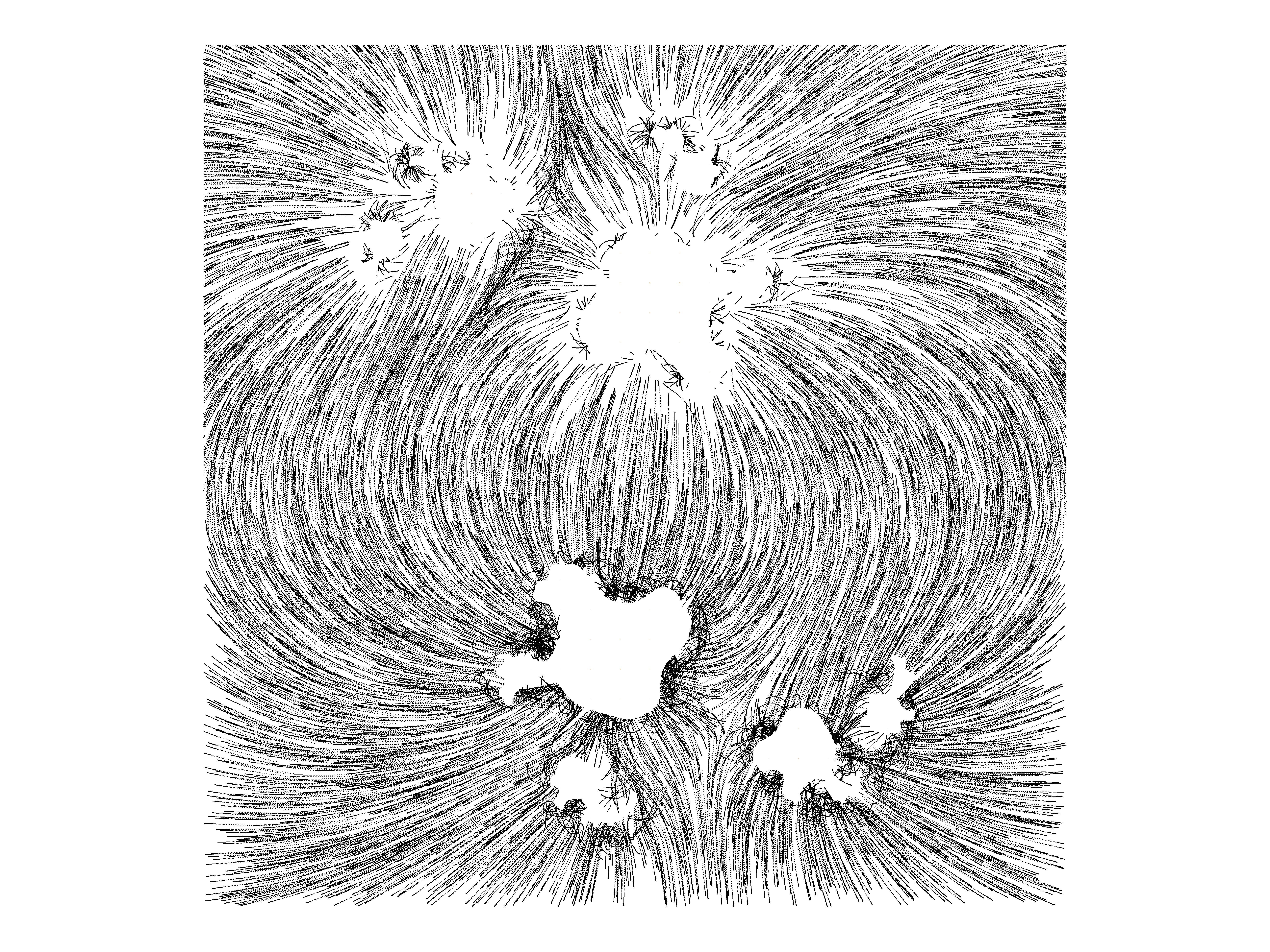

But I guess you have some regular grid and start the trajectories from regularly spaced grid cells / vertices.

Another option would be to generate Poisson disc samples to break the regularity and start from there.

Or just generate random points.
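The three seeding options above can be sketched roughly like this in Python. All function names are hypothetical and the trajectory tracing itself is left out. The Poisson disc variant uses Bridson's dart-throwing algorithm, which is one common way to get blue-noise seeds; it is not necessarily what anyone in this thread uses.

```python
import math
import random

def grid_seeds(width, height, spacing):
    """Regularly spaced seeds at the centres of a uniform grid's cells."""
    nx, ny = int(width // spacing), int(height // spacing)
    return [((i + 0.5) * spacing, (j + 0.5) * spacing)
            for j in range(ny) for i in range(nx)]

def random_seeds(width, height, count, rng):
    """White-noise seeds: no regularity, but random clumps and gaps."""
    return [(rng.uniform(0, width), rng.uniform(0, height)) for _ in range(count)]

def poisson_disc_seeds(width, height, r, rng, k=30):
    """Bridson-style Poisson disc sampling: no two seeds closer than r."""
    cell = r / math.sqrt(2)  # cell diagonal == r, so each cell holds at most one sample
    gw, gh = math.ceil(width / cell), math.ceil(height / cell)
    grid = [[None] * gw for _ in range(gh)]
    samples, active = [], []

    def cell_of(p):
        return min(int(p[0] / cell), gw - 1), min(int(p[1] / cell), gh - 1)

    def fits(p):
        gx, gy = cell_of(p)
        # Any sample within distance r lies at most 2 cells away on each axis.
        for y in range(max(gy - 2, 0), min(gy + 3, gh)):
            for x in range(max(gx - 2, 0), min(gx + 3, gw)):
                q = grid[y][x]
                if q is not None and (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2 < r * r:
                    return False
        return True

    def add(p):
        samples.append(p)
        active.append(p)
        gx, gy = cell_of(p)
        grid[gy][gx] = p

    add((rng.random() * width, rng.random() * height))
    while active:
        i = rng.randrange(len(active))
        base = active[i]
        for _ in range(k):
            # Try a candidate in the annulus [r, 2r) around an active sample.
            ang = rng.uniform(0.0, 2.0 * math.pi)
            rad = rng.uniform(r, 2.0 * r)
            p = (base[0] + rad * math.cos(ang), base[1] + rad * math.sin(ang))
            if 0 <= p[0] < width and 0 <= p[1] < height and fits(p):
                add(p)
                break
        else:
            active.pop(i)  # k failed attempts: this sample is surrounded
    return samples

rng = random.Random(42)
seeds = poisson_disc_seeds(100.0, 100.0, 5.0, rng)
```

The grid seeds are perfectly regular, the random seeds clump, and the Poisson disc seeds sit in between: irregular, but with a guaranteed minimum spacing, so trajectories started from them should not inherit a grid pattern from the seeding.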

The reason I asked is that I wonder about the ‘eyesore’ grid noise which had emerged before. I wonder why this grid shows a lower spacing frequency than the original high-frequency grid you probably used to start drawing the trajectories.

I mean, it did not look beautiful, but why did such a grid emerge at all, rather than just chaos?

I love your point renderer!

You mean my debug visualizations.

But I have been seriously considering compute-based point splatting instead of rasterizing triangles for the actual renderer for a long time.

Dreams on PS4 does that. Karis mentioned it was one of the failed approaches that eventually led to Nanite. Both said holes are the major problem; otherwise it would be super fast.
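For anyone unfamiliar with compute splatting, here is a minimal CPU sketch of the basic visibility pass, simulated in Python. It assumes points are already projected to screen space and uses a common pattern from compute-shader point renderers: depth in the high 32 bits and a payload in the low 32 bits of a 64-bit key, resolved with an atomic min per pixel. The function names are hypothetical, and this does not reproduce the hole fix mentioned in this thread, which isn't described.

```python
import math
import struct

EMPTY = 0xFFFFFFFFFFFFFFFF  # sentinel key: no point landed here (farther than any real depth)

def float_bits(f):
    """Reinterpret a float32 as uint32. For f >= 0 the integer order matches
    the float order, so depths can be compared as integers."""
    return struct.unpack("<I", struct.pack("<f", f))[0]

def splat_points(points, width, height):
    """points: iterable of (x, y, depth, payload) already in screen space.
    payload is a 32-bit value, e.g. packed RGBA8 or a point index."""
    buf = [EMPTY] * (width * height)
    for x, y, depth, payload in points:
        px, py = math.floor(x), math.floor(y)  # footprint: exactly one pixel per point
        if not (0 <= px < width and 0 <= py < height) or not depth >= 0.0:
            continue  # off-screen, negative depth, or NaN depth
        # Smallest key == nearest point; ties are broken by payload.
        key = (float_bits(depth) << 32) | (payload & 0xFFFFFFFF)
        i = py * width + px
        # On the GPU this compare-and-store would be a single 64-bit atomic min.
        if key < buf[i]:
            buf[i] = key
    return buf

def resolve_nearest(buf):
    """Payload of the nearest point per pixel; None marks a hole."""
    return [None if key == EMPTY else key & 0xFFFFFFFF for key in buf]

# Two points compete for pixel 1; the nearer one (depth 1.0) wins. Pixel 2 stays a hole.
frame = resolve_nearest(splat_points(
    [(1.2, 0.5, 2.0, 7), (1.7, 0.1, 1.0, 9), (0.0, 0.0, 5.0, 3)], 3, 1))
```

With one pixel per point, any pixel that no point projects to stays `EMPTY`. Those are exactly the holes: they show up whenever the point density on screen drops below one point per pixel, e.g. on close-up or grazing-angle surfaces.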

I use point splatting to render environment maps for my GI stuff, and I found a really simple way to solve this holes problem. Plus, analytical anti-aliasing is cheap with points, so this could avoid the need for artifact-prone TAA.

It's very interesting and attractive, but not for all sorts of geometry. Triangles remain better in many cases, so maybe we want to combine both.
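Why anti-aliasing can be cheap with points: a minimal sketch of one possible reading, assuming each point is treated as a pixel-sized box whose contribution is split among the (up to) four pixels it overlaps, weighted by exact overlap area. This is a hypothetical illustration, not the author's method, and it ignores occlusion entirely; in practice it would need to be combined with a visibility pass.

```python
import math

def splat_box_filtered(points, width, height):
    """points: iterable of (x, y, value) in pixel coordinates, where pixel (i, j)
    spans [i, i+1] x [j, j+1]. Each point is a 1x1 box centred at (x, y), and each
    overlapped pixel receives the exact overlap area as its weight."""
    acc = [0.0] * (width * height)   # sum of weight * value
    wsum = [0.0] * (width * height)  # sum of weights (covered area)
    for x, y, value in points:
        x0, y0 = x - 0.5, y - 0.5             # lower-left corner of the box
        i0, j0 = math.floor(x0), math.floor(y0)
        fx, fy = x0 - i0, y0 - j0             # how far the box reaches into column i0+1 / row j0+1
        for dj, wy in ((0, 1.0 - fy), (1, fy)):
            for di, wx in ((0, 1.0 - fx), (1, fx)):
                i, j, w = i0 + di, j0 + dj, wx * wy
                if w > 0.0 and 0 <= i < width and 0 <= j < height:
                    acc[j * width + i] += w * value
                    wsum[j * width + i] += w
    return acc, wsum

def resolve_coverage(acc, wsum, background=0.0):
    """Fully covered pixels are normalised; partially covered pixels are
    blended with the background by their uncovered area."""
    out = []
    for a, w in zip(acc, wsum):
        if w >= 1.0:
            out.append(a / w)
        else:
            out.append(a + (1.0 - w) * background)
    return out

# A point exactly between two pixel centres covers each pixel by half.
acc, wsum = splat_box_filtered([(1.0, 0.5, 1.0)], 2, 2)
image = resolve_coverage(acc, wsum)
```

For a point fully inside the image, the four overlap areas always sum to 1, so the point contributes its full value but spreads it across pixel boundaries; partially covered silhouette pixels fade into the background instead of stair-stepping.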

Anyway. Likely i'll die long before i ever get to implementing the final renderer… ;D