Hello guys, I have a quick question that I can't seem to find a concrete answer to, so I decided to ask here. First, let's suppose the following configuration in the later stages of the rendering pipeline:

- Face culling is disabled, so both sides of a triangle get rasterized

- Depth testing is enabled and configured to keep any fragment whose distance from the camera is less than or equal to what is already stored

- Depth writing is also enabled

- No stencil involved

- No blending
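
In Unity ShaderLab terms (an assumption, based on the `fixed4`/`VFACE` style of the shader further down), the render state listed above would look roughly like this sketch. The pass body is omitted and nothing here is specific to my project:

```shaderlab
Pass
{
    Cull Off       // face culling disabled: front and back faces are both rasterized
    ZTest LEqual   // a fragment passes if its depth is <= the stored depth
    ZWrite On      // passing fragments update the depth buffer
    Blend Off      // no blending: the output color overwrites the color buffer
    // No Stencil block, so the stencil test is not used

    // CGPROGRAM ... ENDCG with the vertex and fragment shaders would go here
}
```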

We render a triangle on the screen, with the camera looking straight at it. The triangle goes through the rasterizer and fragments get generated for each side (since face culling is disabled). All fragments pass the early depth test because of the depth test configuration mentioned above (right?). They go through the fragment shader and are later written into the color buffer. My question is: how is the order of these fragments decided when they are written?

I feel like I'm missing something in the theory. Say I have the following fragment shader (HLSL):

fixed4 frag (fixed facing : VFACE) : SV_Target

{

if(facing > 0)

{

return fixed4(1,0,0,1); // front green

}

else

{

return fixed4(0,1,0,1); // back red

}

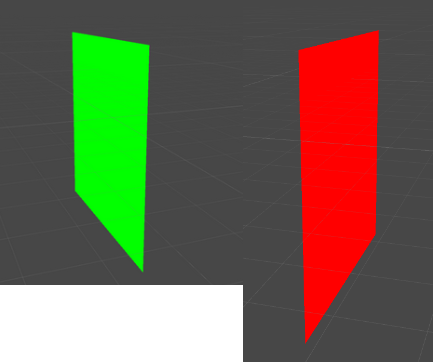

}The VFACE semantic is > 0 for front facing or <0 for back facing, and it yields the following result:

So I'm scratching my head here, thinking that the fragments of both sides get written to the color buffer, but that something makes sure that, when looking from the front side, all the green fragments get written last, or the red fragments get discarded before the green ones. Or I'm forgetting something important.

Is my reasoning ok? Thanks!