Changing the dimensions has some effect:

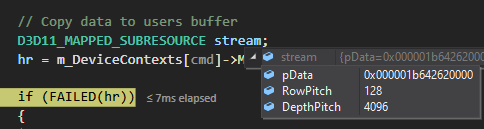

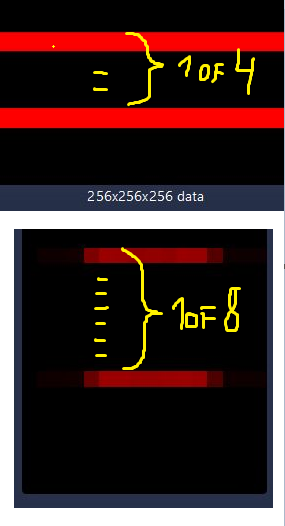

If your Texture3D is too big for the Nvidia card's VRAM, and DX11 lets you create resources that are too big, then reads and writes beyond the limits would be ignored or return zeros. An Intel GPU uses the computer's system RAM, which is often larger than the VRAM of a discrete GPU. This could be the origin of your problem. The easiest way to test this is to create a very small Texture3D, say 64x64x64, and run it on both GPUs, using 4,4,4 (2,2,2) again.

(A 256x256x256 Texture3D of FLOAT takes only 64 MB, but maybe your VRAM is already full of other resources.)
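The 64 MB figure can be checked with a quick calculation. This is only a sketch: it assumes one 32-bit float per texel (for example DXGI_FORMAT_R32_FLOAT), a single mip level, and no driver padding. The name `volumeBytes` is made up for illustration.

```cpp
#include <cstdint>

// Uncompressed size in bytes of a width x height x depth volume, one mip level.
// bytesPerTexel is 4 under the assumption of a single 32-bit float channel.
constexpr std::uint64_t volumeBytes(std::uint64_t width, std::uint64_t height,
                                    std::uint64_t depth, std::uint64_t bytesPerTexel) {
    return width * height * depth * bytesPerTexel;
}

constexpr std::uint64_t kMiB = 1024ull * 1024ull;

// 256^3 texels * 4 bytes = 64 MiB; the 64^3 test volume is only 1 MiB.
static_assert(volumeBytes(256, 256, 256, 4) == 64 * kMiB, "256^3 floats take 64 MiB");
static_assert(volumeBytes(64, 64, 64, 4) == 1 * kMiB, "64^3 floats take 1 MiB");
```

The real allocation may be larger if the driver pads or swizzles the data, so treat these numbers as a lower bound.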

Try the 64x64x64 (or 32x32x32?) test next, in case you declared more resources and think the VRAM could be running out.
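If you shrink the texture, remember to shrink the dispatch to match. A minimal sketch, assuming that 4,4,4 (or 2,2,2) is the thread-group size declared in `[numthreads]` and that each thread handles one texel; `groupCount` is a hypothetical helper, not a D3D11 function:

```cpp
#include <cstdint>

// Number of thread groups needed along one axis so that every texel is covered.
// Rounds up, so the last group may run past the edge if the size is not a multiple.
constexpr std::uint32_t groupCount(std::uint32_t texels, std::uint32_t threadsPerGroup) {
    return (texels + threadsPerGroup - 1) / threadsPerGroup;
}

// 64 texels per axis: 16 groups with 4 threads per axis, 32 groups with 2.
static_assert(groupCount(64, 4) == 16, "64 / 4 = 16 groups");
static_assert(groupCount(64, 2) == 32, "64 / 2 = 32 groups");
// A size that is not a multiple of the group size rounds up.
static_assert(groupCount(30, 4) == 8, "30 texels need 8 groups of 4");
```

The three per-axis results would be the arguments to `Dispatch(x, y, z)`. When the size is not a multiple of the group size, the shader should skip threads whose coordinates fall outside the texture.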

I don't know whether DX11 allows you to create more resources than fit in VRAM.

I also don't know whether you can reinterpret a resource in DX11 the way you can in DX12. If you are reinterpreting the contents of the resource, then differences in how the GPUs lay out data internally could give you different results.

You don't need a Texture3D at all if you are going to use Load() instead of Sample() to read from it.

Maybe you can get some speedup from better adjacency, but that depends on whether the driver did the extra work to lay out the data internally in a better way. This could require more VRAM than 256x256x256x4 bytes.
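If you drop the Texture3D and use a plain linear buffer instead, each (x, y, z) coordinate has to be mapped to a single index yourself. A sketch of the usual x-fastest mapping; `flatIndex` is an illustrative name, and the same arithmetic would go in the shader:

```cpp
#include <cstddef>

// Maps a 3D texel coordinate to an index into a linear buffer.
// x varies fastest, then y, then z. Depth is not needed for the mapping itself.
constexpr std::size_t flatIndex(std::size_t x, std::size_t y, std::size_t z,
                                std::size_t width, std::size_t height) {
    return x + width * (y + height * z);
}

// First and last texel of a 256^3 volume.
static_assert(flatIndex(0, 0, 0, 256, 256) == 0, "origin maps to index 0");
static_assert(flatIndex(255, 255, 255, 256, 256) == 256u * 256u * 256u - 1,
              "last texel maps to the last index");
// Stepping one texel in y moves by a full row; one texel in z moves by a full slice.
static_assert(flatIndex(0, 1, 0, 64, 64) == 64, "y stride is width");
static_assert(flatIndex(0, 0, 1, 64, 64) == 64u * 64u, "z stride is width * height");
```

With this layout neighbours in x are adjacent in memory, but neighbours in y and z are a full row or slice apart, which is where a driver-chosen texture layout might do better.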

Sorry, I can't suggest more. Differences that could produce a different result between Intel and NV are wavefront size, VRAM amount, and, in case of overflow or reinterpreting, the way each GPU lays out data internally (and slight floating-point math variations too, but those differences would look more random).