taby said:

I was hoping for like 10-100x speedup, but c'est la vie.

I usually get 50-70x, depending on the HW ofc.

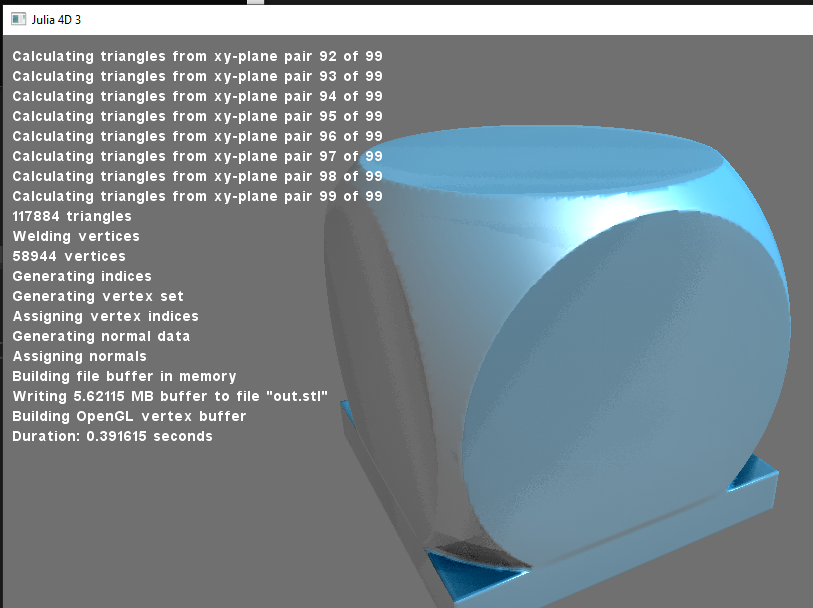

To get there, I think you would need to generate the mesh on the GPU as well, to get rid of the huge downloads. But this means increased complexity, e.g.:

One single CS generates a 16^3 block of the volume, with 1 cell of overlap on each side. You could store the density as half floats (or even as 8-bit values) so it fits into 2 kB of LDS; otherwise it's 4 kB. The workgroup would be 1024 threads large, but because you do not read much from memory there is no need to hide memory latency, so the low occupancy should not hurt. (A smaller workgroup would result in calculating more cells twice, because it causes more overlap.)
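A minimal sketch for sanity-checking the LDS budget and the overlap cost before committing to a block size. The helper names (`paddedSamples`, `ldsBytes`, `overlapOverhead`) are made up for illustration, and it assumes the overlap is added around the interior cells on every axis. Note that the 2 kB and 4 kB figures correspond to 1024 stored samples (one per thread); a full 16^3 grid is 4096 samples, so plug in the numbers you actually end up with.

```cpp
#include <cassert>
#include <cstddef>

// Samples stored per workgroup: interior cells plus `overlap` cells
// on each side of every axis (hypothetical helper, not from any API).
constexpr std::size_t paddedSamples(std::size_t interior, std::size_t overlap) {
    const std::size_t edge = interior + 2 * overlap;
    return edge * edge * edge;
}

// LDS bytes needed for the density field alone (the mesh needs extra space).
constexpr std::size_t ldsBytes(std::size_t samples, std::size_t bytesPerSample) {
    return samples * bytesPerSample;
}

// Ratio of samples computed to useful interior cells; values above 1.0
// are the share of work that neighbouring workgroups compute again.
inline double overlapOverhead(std::size_t interior, std::size_t overlap) {
    assert(interior > 0);
    return static_cast<double>(paddedSamples(interior, overlap)) /
           static_cast<double>(interior * interior * interior);
}
```

For example, `overlapOverhead(14, 1)` is about 1.5 while `overlapOverhead(6, 1)` is about 2.4, which is the "smaller workgroup means more duplicated cells" effect in numbers.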

Then the same CS generates the mesh. You should see if this can fit into the remaining amount of LDS. (IIRC a 1024 WG can reserve 32 kB.) When done, ‘allocate’ the necessary main memory with a single atomic add and write the mesh in one go, as a linear copy from LDS to main RAM. (This will hurt your performance the most, because with low occupancy the GPU cannot switch to another wavefront while waiting on the memory operations. That's why I propose creating the mesh in LDS.)
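The ‘allocate with a single atomic add, then copy linearly’ step can be sketched on the CPU like this. It is only an illustration under assumptions: `MeshBuffer`, `Vertex` and `flushLocalMesh` are hypothetical names, `std::atomic` stands in for the shader's atomic add on a global counter, and the `local` vector stands in for the mesh built in LDS. In a real shader one thread would do the atomic add and share the base offset with the rest of the workgroup, and all threads would then copy in parallel.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vertex { float x, y, z; };

// Stand-in for the global output buffer and its atomic vertex counter.
struct MeshBuffer {
    std::vector<Vertex> vertices;
    std::atomic<std::uint32_t> count{0};
    explicit MeshBuffer(std::size_t capacity) : vertices(capacity) {}
};

// Reserve space for the whole local mesh with ONE atomic add, then copy it
// as one contiguous range. Returns false if the buffer is too small; the
// counter stays advanced in that case, so the host has to clamp it.
inline bool flushLocalMesh(const std::vector<Vertex>& local,
                           MeshBuffer& out, std::uint32_t& baseOut) {
    const std::uint32_t n = static_cast<std::uint32_t>(local.size());
    const std::uint32_t base = out.count.fetch_add(n, std::memory_order_relaxed);
    if (static_cast<std::size_t>(base) + n > out.vertices.size()) {
        return false;
    }
    std::copy(local.begin(), local.end(), out.vertices.begin() + base);
    baseOut = base;
    return true;
}
```

Because every workgroup gets its own disjoint range from the counter, no further synchronization between workgroups is needed; the order of the ranges in the buffer is arbitrary though.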

To keep it simple, generate pure triangles with no edge connectivity. (If you need this, I would do it on the CPU after downloading the triangle mesh.)

Notice you can use a 1D workgroup (1024,1,1), and you do not have to use (16,16,16) just because you work on a volume. With the former it is often easier to implement the indexing logic.
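A rough sketch of what the indexing could look like with a 1D workgroup, written as plain C++ so it can be checked on the CPU. The names (`kEdge`, `kThreads`, `cellFromLinear`, `linearFromCell`, `cellsPerThread`) are assumptions for illustration. With 1024 threads and a 16^3 block, each thread would loop over cells `tid`, `tid + 1024`, `tid + 2048`, and so on.

```cpp
#include <cassert>
#include <cstdint>

struct Cell { std::uint32_t x, y, z; };

constexpr std::uint32_t kEdge = 16;       // cells per axis (assumed block size)
constexpr std::uint32_t kThreads = 1024;  // 1D workgroup size (1024,1,1)
constexpr std::uint32_t kCells = kEdge * kEdge * kEdge;

// Map a linear cell index to 3D coordinates, x running fastest.
inline Cell cellFromLinear(std::uint32_t i) {
    assert(i < kCells);
    return Cell{ i % kEdge, (i / kEdge) % kEdge, i / (kEdge * kEdge) };
}

// Inverse mapping, e.g. for addressing neighbours in the LDS array.
inline std::uint32_t linearFromCell(const Cell& c) {
    return c.x + kEdge * (c.y + kEdge * c.z);
}

// Loop iterations each thread needs to cover the whole block.
constexpr std::uint32_t cellsPerThread() {
    return (kCells + kThreads - 1) / kThreads;
}
```

Since `kEdge` is a power of two here, a compiler will typically turn the divisions and modulos into shifts and masks.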

I do not really know about the complexity of the Marching Cubes algo, but I have my own iso-surface algorithm which would fit into a single shader together with the fractal generation, so I guess this approach would work for you.