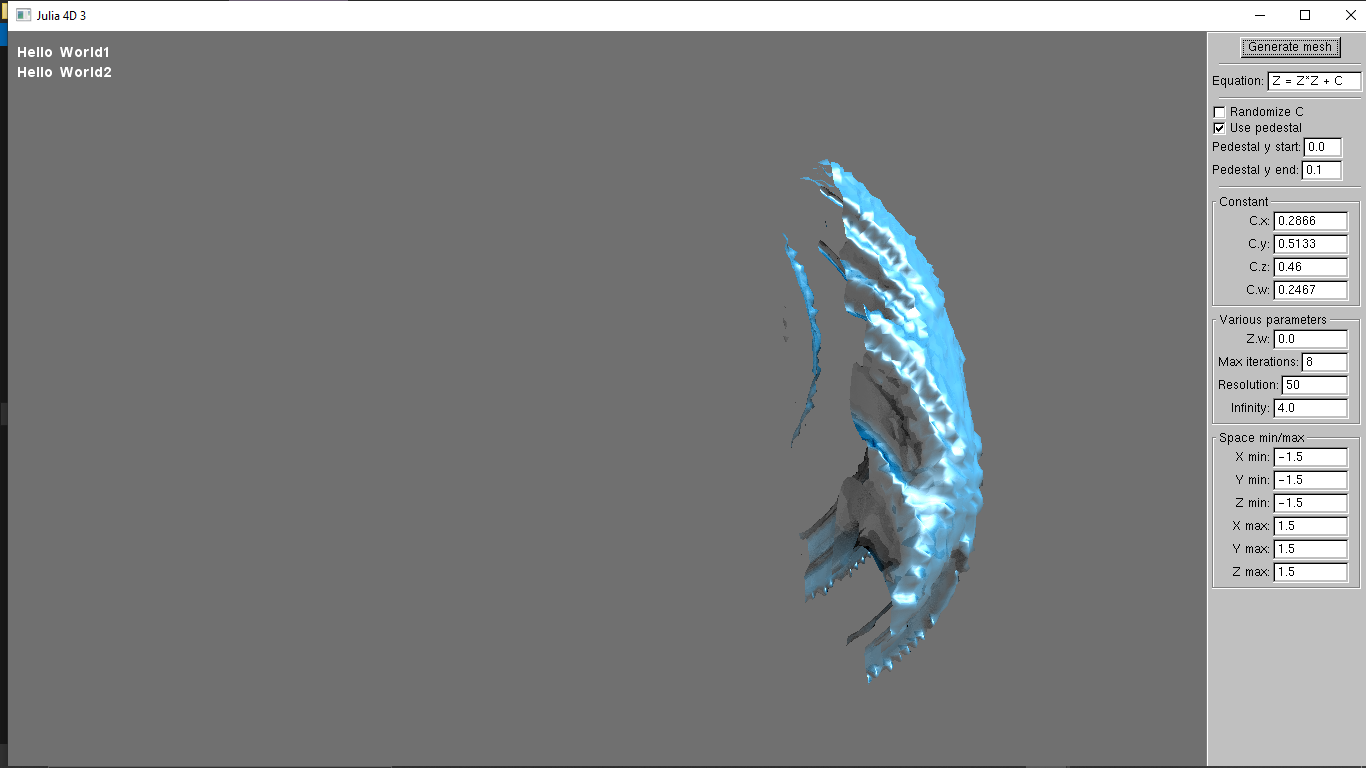

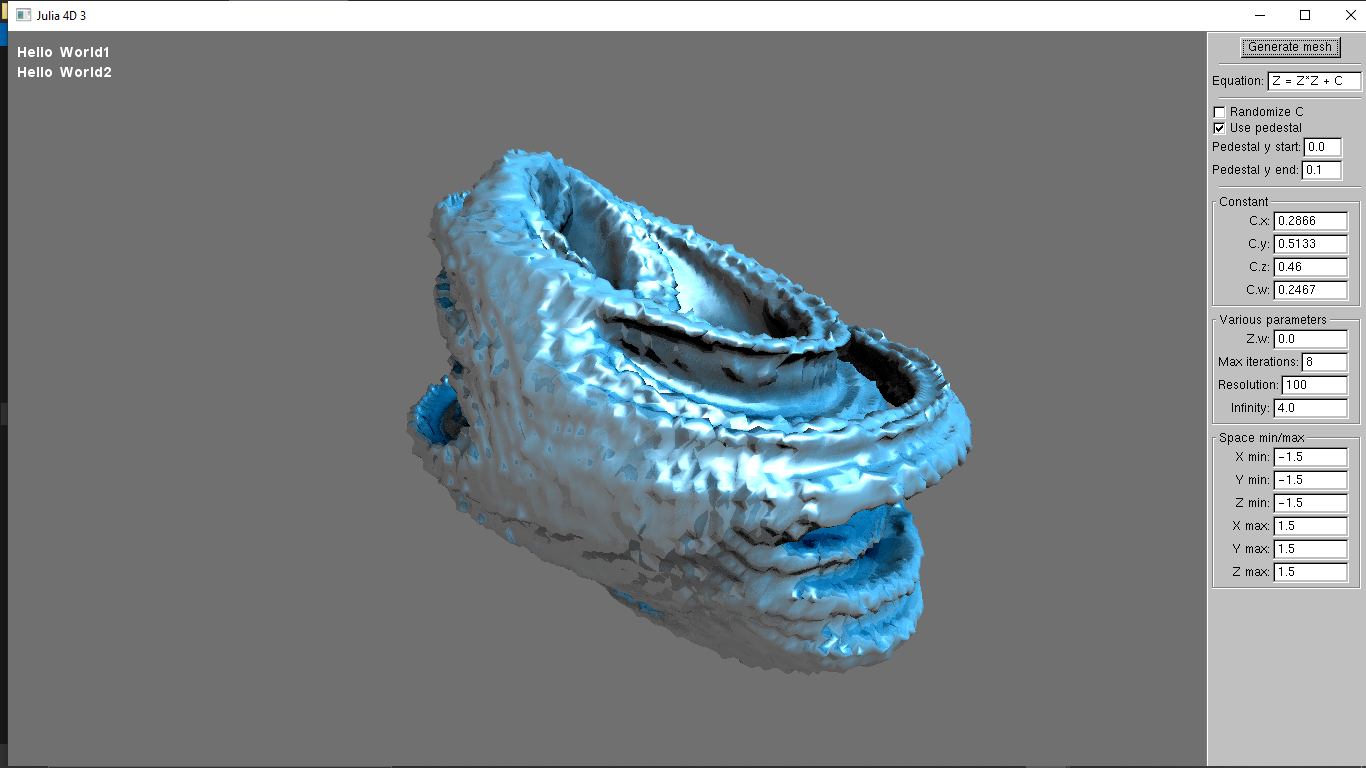

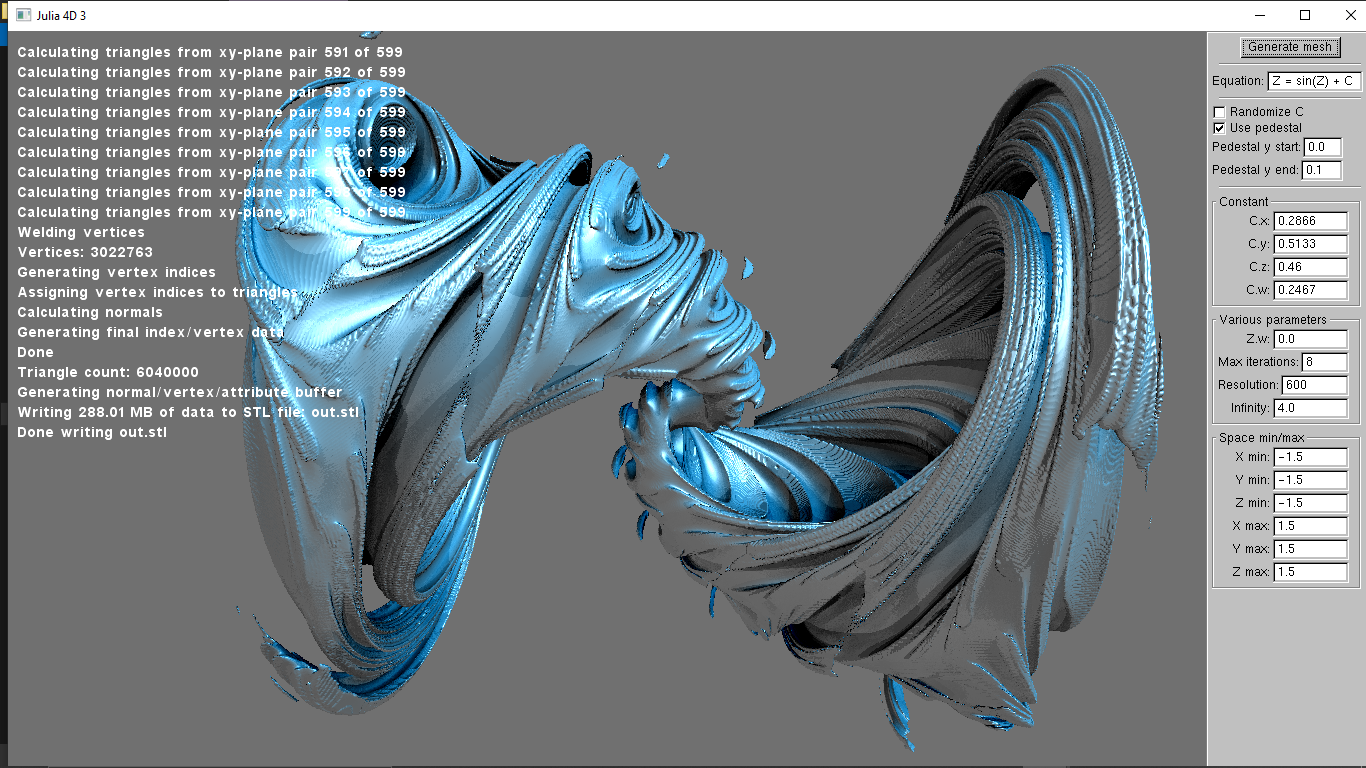

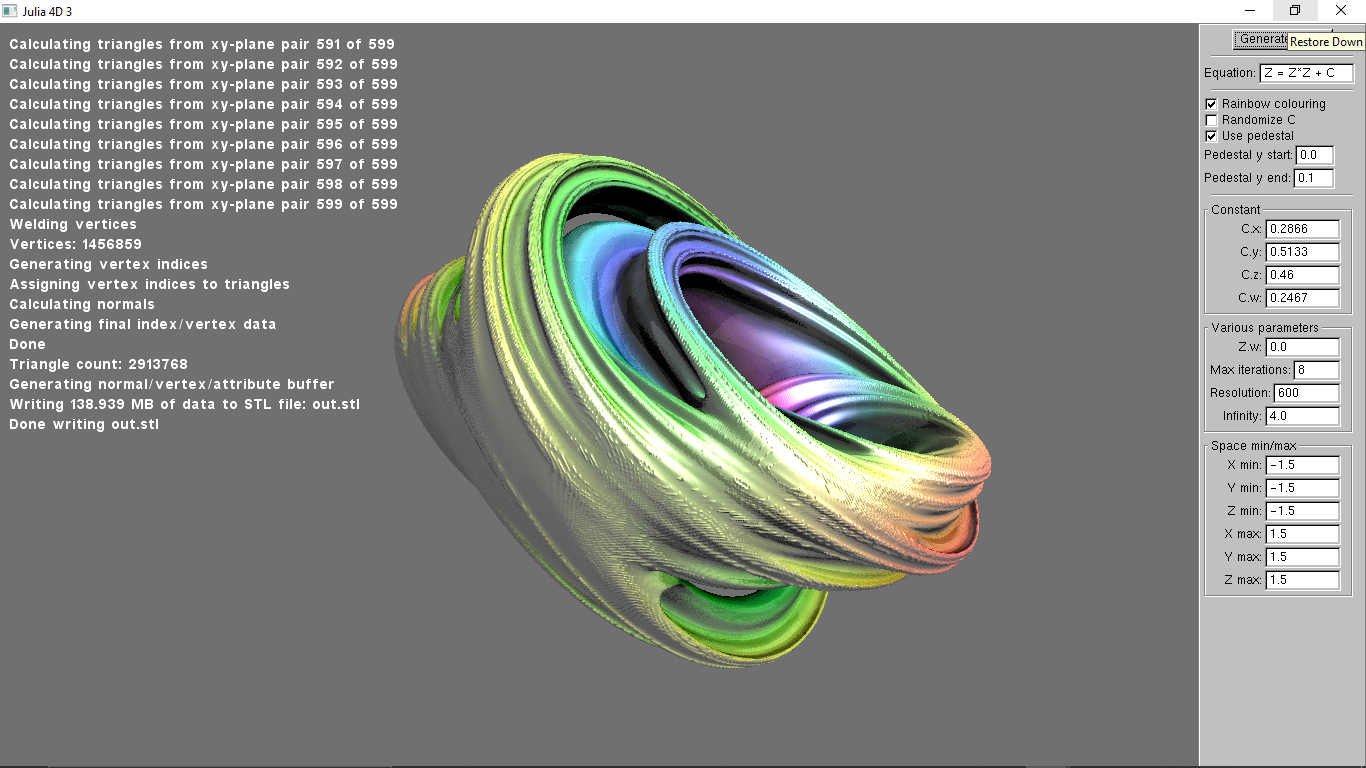

In my program, I generate a mesh, then generate another mesh and try to update the GPU with it. This works fine if the second mesh is bigger than the first. If the second mesh is smaller than the first, the update doesn't seem to work. It's as if `glBufferData` doesn't quite work. Any ideas?
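To make the expected behaviour concrete, here is a minimal CPU-only sketch of what I understand a re-upload should do. It is not the code from my upload function: `FakeBuffer`, `buffer_data`, `MeshGpuState`, `upload_mesh`, and the three-floats-per-vertex layout are hypothetical names and assumptions used only for illustration, and the real GL calls appear only in comments. As far as I know, `glBufferData` replaces the whole data store, so after uploading a smaller mesh both the buffer size and the vertex count used for drawing should shrink.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a GL buffer object's data store.
struct FakeBuffer {
    std::vector<float> store;
};

// Models glBufferData(GL_ARRAY_BUFFER, bytes, data, usage):
// the previous data store is discarded and replaced by the new one.
void buffer_data(FakeBuffer& buf, const std::vector<float>& data) {
    buf.store = data;
}

// Assumed layout for this sketch: tightly packed x, y, z positions.
constexpr std::size_t floats_per_vertex = 3;

// Hypothetical per-mesh state kept alongside the buffer.
struct MeshGpuState {
    FakeBuffer vbo;
    std::size_t draw_count = 0; // the count that would go to glDrawArrays
};

// Re-upload a mesh and refresh the draw count in the same place,
// so a smaller mesh cannot be drawn with a stale, larger count.
void upload_mesh(MeshGpuState& state, const std::vector<float>& vertices) {
    assert(vertices.size() % floats_per_vertex == 0);
    buffer_data(state.vbo, vertices);
    state.draw_count = vertices.size() / floats_per_vertex;
}
```

If the real code kept the old vertex count (or left an old buffer bound) after the second upload, that might explain why only the smaller-mesh case looks wrong, but that is a guess on my part.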

My GPU upload function can be found at: https://github.com/sjhalayka/julia4d3/blob/672d1b6042082a25cdb94477198e1cc3b84106e6/ssao.h#L843

Included are some screenshots. Thanks for any help that you can provide. I'm even willing to pay a small fee for the help. The project is open source, and in the public domain.