Hi,

The source code and documentation of the problem are available at https://bitbucket.org/justkarli/lookuptextureexample/src/master/.

The problem

When uploading bit (mask) information into a floating point texture, the bitmask value is either rounded to zero, clamped or not written at all.

The case

We want to encode additional material information into a dedicated lookup texture. The values that we want to encode are bitmasks or float values. For each material, its properties are stored in two 32-bit float pixels.

In the example provided, we encode the bitmask values either via reinterpret_cast or with glm::intBitsToFloat.
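For reference, a minimal sketch of a bit-preserving encoding on the CPU side, assuming a 32-bit IEEE 754 float. The helper names maskToFloat and floatToMask are hypothetical and not part of the example repository; std::memcpy is used here as a portable alternative to the reinterpret_cast variant:

```cpp
#include <cstdint>
#include <cstring>

// Hypothetical helpers, not taken from the example repository.

// Copy the 32 bits of an integer mask into a float without numeric conversion.
inline float maskToFloat(std::uint32_t mask) {
    static_assert(sizeof(float) == sizeof(std::uint32_t), "float must be 32 bits");
    float f;
    std::memcpy(&f, &mask, sizeof f);
    return f;
}

// Inverse operation: recover the original bit pattern from the float.
inline std::uint32_t floatToMask(float f) {
    std::uint32_t mask;
    std::memcpy(&mask, &f, sizeof mask);
    return mask;
}
```

On the CPU, floatToMask(maskToFloat(m)) should return m unchanged, so any loss of the mask has to happen after the data leaves this code.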

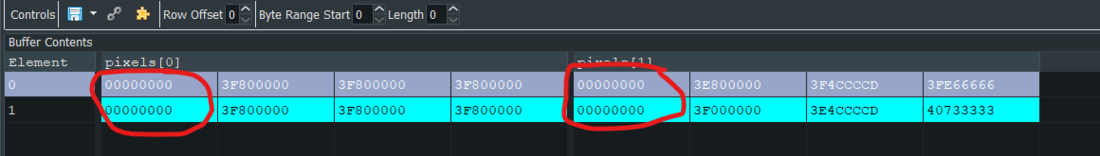

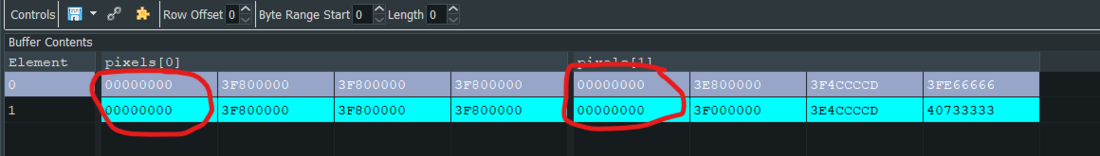

When we debug the provided example with RenderDoc and view the uploaded texture as a raw buffer, the bitmask values set on the CPU show up as 0.

Expected values for those 0 entries are:

main:252 lutInfos.push_back(reinterpret_cast<float&>(3));

main:258 lutInfos.push_back(glm::intBitsToFloat(11));

main:268 lutInfos.push_back(reinterpret_cast<float&>(0x1001));

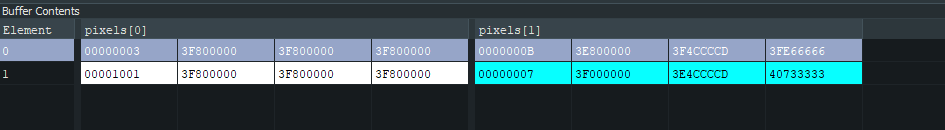

main:273 lutInfos.push_back(glm::intBitsToFloat(7)); When viewing the example with an installed nvidia card (gtx 1060, gtx 1080), we can not reproduce the issue:

The case with reading the bitmask

To avoid being deceived by RenderDoc's raw buffer and texture visualization, we added a shader branch that reads the bitmask via floatBitsToUint(sampledTexture.x) and chooses the output color depending on the value. Unfortunately, the result matches what RenderDoc shows, so this seems to be an AMD driver issue.
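One property of the affected values that may be worth checking: small integers such as 3, 7, 11 and 0x1001, when reinterpreted as 32-bit floats, have an all-zero exponent field and a non-zero mantissa, which makes them subnormal (denormal) numbers. Whether the driver or hardware flushes such values to zero on upload or on sampling is an assumption, not something we have verified. The sketch below only classifies the bit patterns; isSubnormalPattern is a hypothetical helper:

```cpp
#include <cstdint>

// Hypothetical helper: true if the 32-bit pattern is a subnormal IEEE 754
// binary32 value (exponent bits all zero, mantissa bits not all zero).
inline bool isSubnormalPattern(std::uint32_t bits) {
    const std::uint32_t exponent = bits & 0x7F800000u;
    const std::uint32_t mantissa = bits & 0x007FFFFFu;
    return exponent == 0u && mantissa != 0u;
}
```

All four bitmask values from the example fall into this class, while an ordinary float such as 1.0f (bit pattern 0x3F800000) does not.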

Tests

We tested & reproduced the error with the following AMD cards:

- AMD Radeon RX Vega 64: Radeon Software Adrenalin 2019 Edition 19.3

- AMD Radeon RX Vega 64: Radeon Software Adrenalin 2019 Edition 19.1.1 (25.20.15011.1004)

- AMD Radeon RX Vega 64: Default Windows driver

- AMD Radeon RX 480: 25.20.15027.9004

Am I missing something? Are you able to reproduce the issue?

Cheers, karli