Heyo!

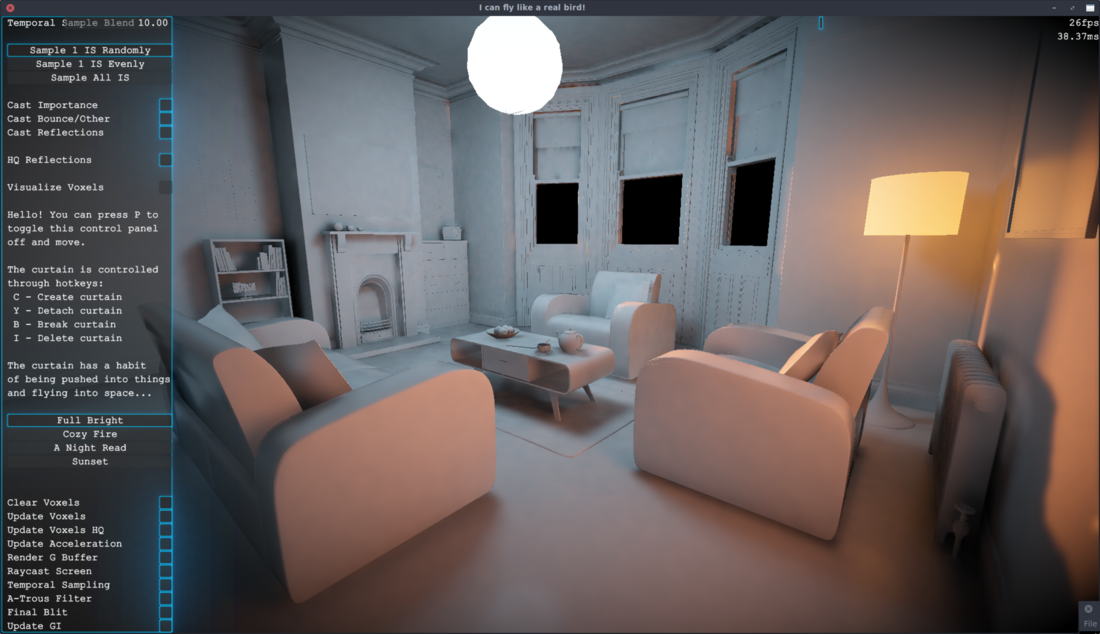

For the last few months I've been working on a realtime raytracer (like everyone currently), but I've been trying to make it work on my graphics card, an NVIDIA GTX 750 Ti - a good card but not an RTX or anything. So I figured I'd post my results since they're kinda cool, and I'm also interested to see if anyone has ideas on how to speed it up further.

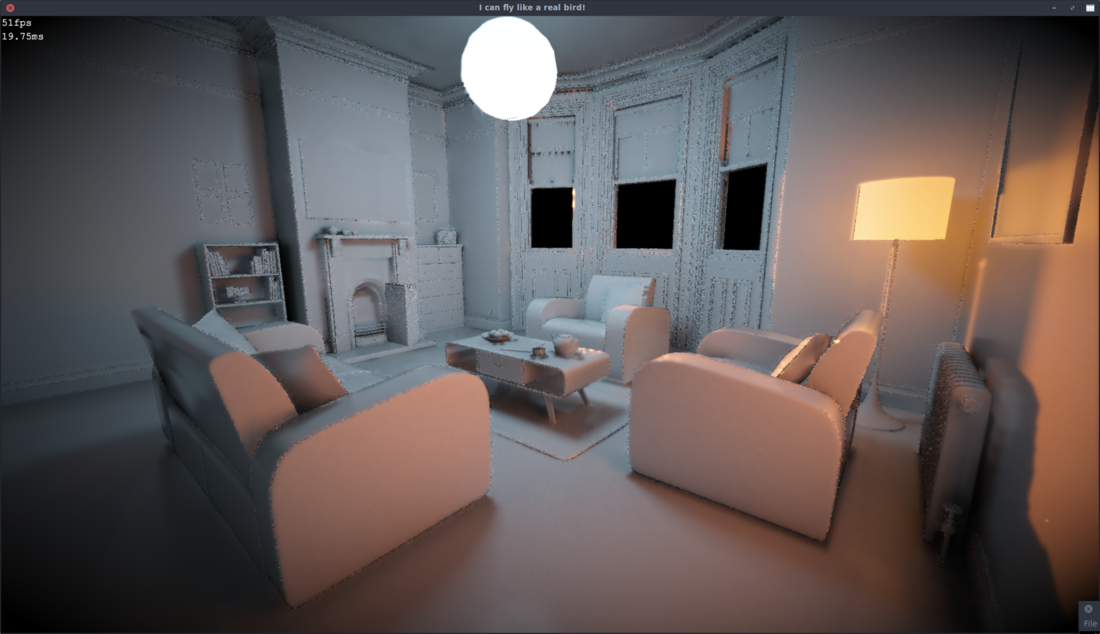

Here's a dreadful video showcasing some of what I have currently:

I've sped it up a tad and fixed reflections since then, but eh, it gets the gist across. If you're interested in trying out a demo or checking out the shader source code, I've attached a Windows build (FlipperRaytracer_2019_02_25.zip). I develop on Linux so it's not as well tested as I'd like, but it works on an iffy laptop I have so hopefully it'll be alright XD. You can change the resolution and whether it starts up in fullscreen in a config file next to the executable. In the demo you can fly around, change the lighting setup and adjust various parameters, like the frame blending (to increase samples) and toggling GI, reflections, etc. If anyone tests it out, I'd love to know what sort of timings you get on your GPU.

But yeah, so currently I can achieve about 330 million rays a second, enough to shoot 3 incoherent rays per pixel at 1080p at 50fps, so not too bad overall. I'm really hoping to bump this up a bit further to 5 incoherent rays at 60fps... but we'll see.
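As a rough sanity check on that budget, here's the arithmetic written out. It assumes 1080p means 1920x1080 and that every pixel gets the full ray count; the function name is just for illustration.

```python
def rays_per_second(width, height, rays_per_pixel, fps):
    """Rays needed per second to trace every pixel at the given rate."""
    return width * height * rays_per_pixel * fps

# 3 rays per pixel at 50fps (what I get now) vs 5 rays at 60fps (the goal).
current = rays_per_second(1920, 1080, 3, 50)
target = rays_per_second(1920, 1080, 5, 60)

print(f"{current / 1e6:.0f}M rays/s now, {target / 1e6:.0f}M rays/s for the goal")
print(f"that is {target / current:.1f}x the current load")
```

So the current setup sits just under the 330 million rays a second mark, and the goal needs roughly double the throughput.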

I'll briefly describe how it works now :). Each render loop it goes through these steps:

- Render the scene into a 3D texture (Voxelize it)

- Generate an acceleration structure akin to an octree from that

- Render GBuffer (I use a deferred renderer approach)

- Calculate lighting by raytracing a few rays per pixel

- Blend with previous frames to increase sample count

- Finally output with motion blur and some tonemapping

Pretty much the most obvious way to do it all.

So the main reason it's quick enough is the acceleration structure, which is kinda cool in how simple yet effective it is. At first I tried distance fields, which, while really efficient to step through, just can't be generated fast enough in real time (I could only get it down to 300ms for a 512x512x512 texture). Besides, I wanted voxel accurate casting for some reason anyway (blocky artifacts look so good...), so I figured I'd start there. Doing an unaccelerated raycast against a voxel texture is simple enough: just cast a ray and test against every voxel the ray intersects, stepping through the texture voxel by voxel using a line-stepping algorithm like DDA. The cool thing is, by voxelizing the scene at different mipmap levels it's possible to take differently sized steps, by checking which is the lowest resolution mipmap that still has empty space at the current position. This can be precomputed into a single texture, which gives you that information in 1 sample. I've found this gives pretty similar raytracing speed to the distance fields, but it can be generated in 1-2ms, ending up with a texture like this (a 2D slice):

It also has some nice properties, like if the ray is cast directly next to and parallel to a wall, instead of moving tiny amounts each step (due to the distance field saying it's super close to something) it'll move... an arbitrary amount depending on where the wall falls on the grid :P. Still, the worst case is the same as the distance field and its best case is much better, so it's pretty neat.
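To make the skip-level idea a bit more concrete, here's a minimal 2D sketch in Python (the real thing is a 3D texture built and traced in shaders, so treat this purely as an illustration). The names `build_skip_levels` and `trace`, the `-1` marker for solid voxels and the small epsilon nudge are all assumptions for the example, and the stepping jumps to the edge of the empty block rather than being a strict DDA.

```python
import math

def build_skip_levels(solid):
    """solid: square 2D list of bools whose side is a power of two.
    Returns a grid holding, per voxel, the coarsest mip level whose
    enclosing block is completely empty, or -1 if the voxel is solid."""
    n = len(solid)
    skip = [[-1 if solid[y][x] else 0 for x in range(n)] for y in range(n)]
    occ, level = solid, 0
    while len(occ) > 1:
        half = len(occ) // 2
        # Next mip: a coarse cell is occupied if any of its 4 children is.
        occ = [[occ[2*y][2*x] or occ[2*y][2*x+1] or
                occ[2*y+1][2*x] or occ[2*y+1][2*x+1]
                for x in range(half)] for y in range(half)]
        level += 1
        for y in range(n):
            for x in range(n):
                if not occ[y >> level][x >> level]:
                    skip[y][x] = level
    return skip

def exit_time(pos, d, lo, hi):
    """Ray parameter at which pos + t*d leaves the interval [lo, hi]."""
    if d > 0:
        return (hi - pos) / d
    if d < 0:
        return (lo - pos) / d
    return math.inf

def trace(skip, origin, direction, max_steps=256):
    """Returns (hit voxel or None, number of jumps taken)."""
    n = len(skip)
    px, py = origin
    dx, dy = direction
    eps = 1e-4  # nudge past the block edge so we land in the next block
    for step in range(max_steps):
        ix, iy = math.floor(px), math.floor(py)
        if not (0 <= ix < n and 0 <= iy < n):
            return None, step            # left the grid
        level = skip[iy][ix]             # the "1 sample" lookup
        if level < 0:
            return (ix, iy), step        # solid voxel
        size = 1 << level
        x0, y0 = (ix >> level) << level, (iy >> level) << level
        t = min(exit_time(px, dx, x0, x0 + size),
                exit_time(py, dy, y0, y0 + size))
        px += dx * (t + eps)
        py += dy * (t + eps)
    return None, max_steps

# 8x8 grid with a single solid voxel at x=7, y=4.
solid = [[False] * 8 for _ in range(8)]
solid[4][7] = True
skip = build_skip_levels(solid)
hit, steps = trace(skip, (0.5, 4.5), (1.0, 0.0))
print(hit, steps)
```

In this toy case the ray reaches the solid voxel in three jumps (a 4-wide block, a 2-wide block, then a single voxel) instead of seven single-voxel steps. How far a jump goes depends on how the empty space lines up with the grid, which is the "arbitrary amount" behaviour mentioned above.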

So then for the raytracing I use some importance sampling, directing the rays towards the lights. I find just picking a random importance sampler per pixel and shooting towards that looks good enough, and it allows as many lights as I need without changing the framerate (only the noise). Then I throw a random ray to calculate GI/other lights, and a ray for reflections. The global illumination works pretty simply too: when voxelizing the scene I throw some rays out from each voxel, and since they raycast against the voxel scene itself, each frame I get an additional bounce of light :D. That said, I found that a bit slow, so I have an intermediate step where I actually render the objects into a low resolution lightmap, which is where the raycasts take place, then when voxelizing I just sample the lightmap. This also theoretically gives me a fallback in case a computer can't handle raytracing every pixel or the voxel field isn't large enough to cover an entire scene (although currently the lightmap is... iffy... wouldn't use it for that yet XD).
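Here's a tiny sketch of why shooting at one random light per pixel costs the same no matter how many lights there are. It assumes the simplest possible version, picking a light uniformly and scaling by the light count so the average comes out right; `sample_one_light` and the `contribution` callback (which would include the shadow ray in a real renderer) are made-up names for the example, not what the shader actually does.

```python
import random

def sample_one_light(lights, contribution, rng):
    """One-sample estimate of the summed contribution of all lights.
    Picks a light uniformly, so the result is scaled by the light count."""
    light = rng.choice(lights)
    return contribution(light) * len(lights)

# Toy scene: three lights whose contributions at this pixel are 1, 2 and 3.
lights = [1.0, 2.0, 3.0]
rng = random.Random(0)
samples = [sample_one_light(lights, lambda l: l, rng) for _ in range(20000)]
mean = sum(samples) / len(samples)
print(mean)  # close to the true total of 6.0; any single sample is 3, 6 or 9
```

Each pixel only ever traces one light ray, so adding lights adds variance (noise) rather than frame time, and the frame blending averages that noise away over time.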

And yeah, then I use the usual temporal anti-aliasing technique to increase the sample count and anti-alias the image. I previously had a texture that would keep track of how many samples had been taken per pixel, resetting when viewing a previously unviewed region, and used this to properly average the samples (so it converged much faster/actually did converge...) rather than using the usual exponential blending. That said, I had some issues integrating any sort of discarding with anti-aliasing, so currently I just let everything smear like crazy XD. I think the idea there though is to just have separate temporal supersampling and temporal anti-aliasing, so I might try that out. That should improve the smearing and noise significantly... I think XD
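For anyone wondering what the difference between the two blending schemes looks like, here's a minimal per-pixel sketch. The function names and the blend factor of 0.1 are assumptions for the example; in the renderer the history and the sample count would live in textures.

```python
def accumulate_average(history, count, sample):
    """Running mean: every sample so far gets equal weight.
    Set count back to 0 when the pixel shows a previously unseen region."""
    count += 1
    history += (sample - history) / count
    return history, count

def accumulate_exponential(history, sample, alpha=0.1):
    """Usual exponential blend: old samples fade but are never weighted equally."""
    return history + (sample - history) * alpha

# A noisy pixel that flickers between 1 and 0 (true value 0.5).
samples = [1.0, 0.0, 1.0, 0.0]

avg, count = 0.0, 0
for s in samples:
    avg, count = accumulate_average(avg, count, s)

exp = samples[0]
for s in samples[1:]:
    exp = accumulate_exponential(exp, s)

print(avg, exp)
```

After four samples the running mean has already settled on the true value, while the exponential blend is still dominated by the first sample. The trade-off is that the running mean needs the per-pixel count and a reliable way to decide when to reset it.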

Hopefully some of that made sense and was interesting :), please ask any questions you have, I can probably explain it better haha. I'm curious to know what anyone thinks, and of course any ideas to speed it up/develop it further are very much encouraged.

Ooh, I'm also working on some physics simulations, so you can create a realtime cloth in it by pressing C - just the usual position based dynamics stuff. Anyway, questions on that are open too :P.
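In case "position based dynamics" doesn't ring a bell, the core of it is projecting constraints directly on particle positions. Below is a minimal sketch of the standard distance constraint between two particles, which is the usual building block for cloth edges. It's a generic textbook version with made-up names, not the code from the demo.

```python
import math

def project_distance(p1, p2, w1, w2, rest_length):
    """Move two particles so they end up rest_length apart.
    w1 and w2 are inverse masses; an inverse mass of 0 pins a particle."""
    d = [p2[i] - p1[i] for i in range(3)]
    dist = math.sqrt(sum(c * c for c in d))
    if dist == 0.0 or w1 + w2 == 0.0:
        return p1, p2                      # nothing sensible to correct
    # Signed stretch, shared between the particles by inverse mass.
    scale = (dist - rest_length) / (dist * (w1 + w2))
    new_p1 = tuple(p1[i] + w1 * scale * d[i] for i in range(3))
    new_p2 = tuple(p2[i] - w2 * scale * d[i] for i in range(3))
    return new_p1, new_p2

# Two equal-mass particles stretched to length 2 with a rest length of 1.
a, b = project_distance((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), 1.0, 1.0, 1.0)
print(a, b)
```

A cloth step would predict new positions from velocities and gravity, run a few passes of this projection over every edge of the cloth grid, then derive the new velocities from how far each particle actually moved.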