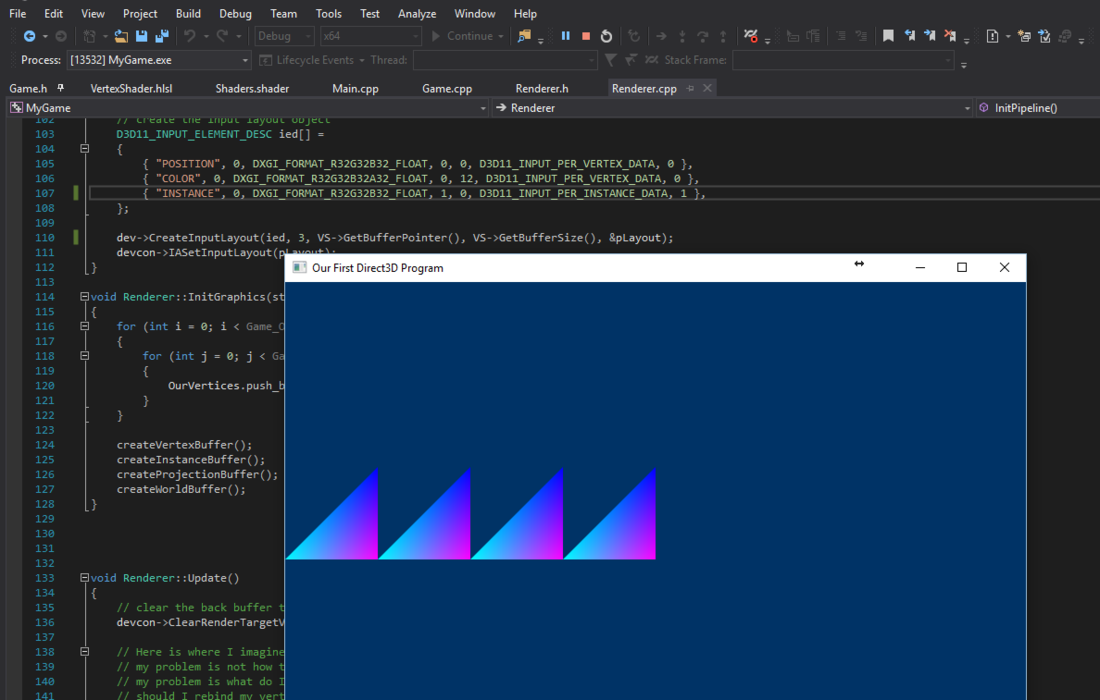

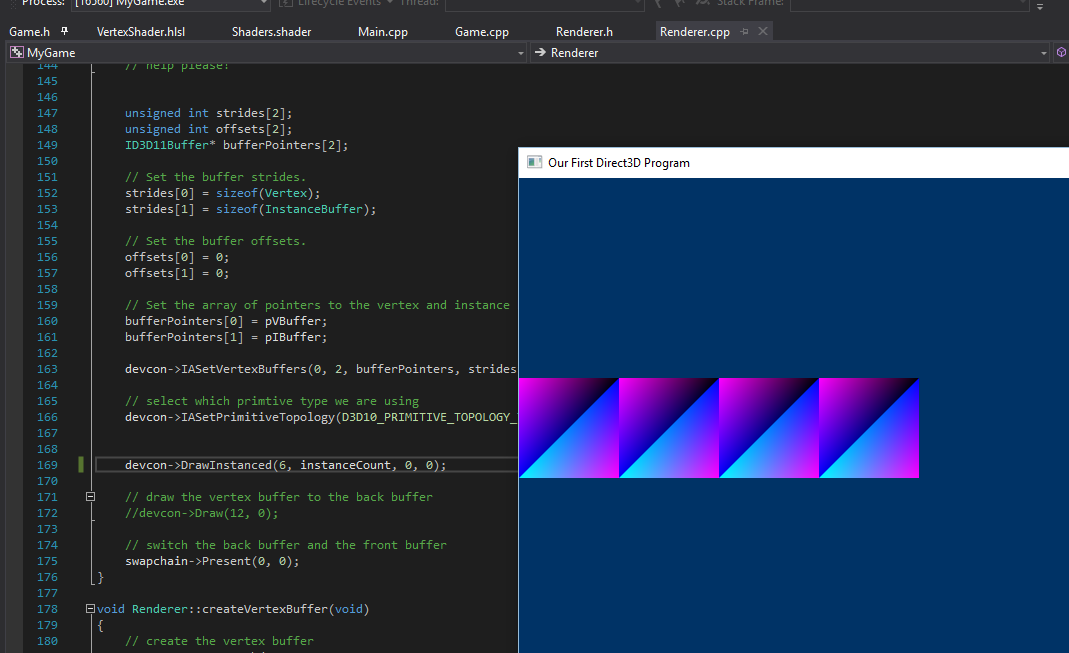

Hi all, I am attempting to follow the Rastertek tutorial: http://www.rastertek.com/dx11tut37.html

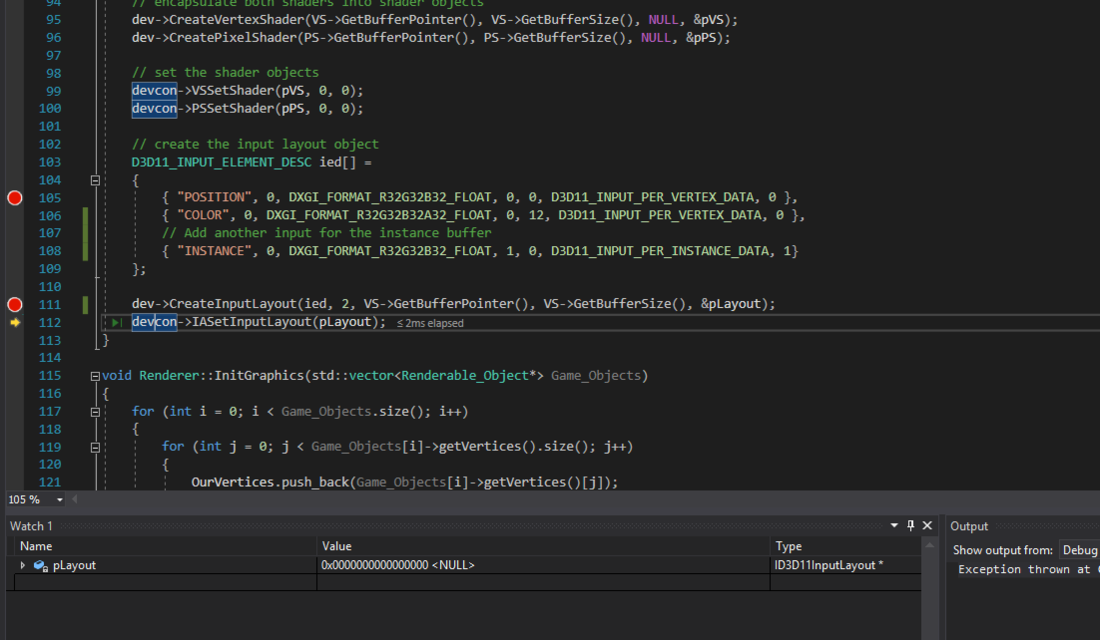

Right now I am having a problem: it appears that my input layout is not being initialized properly, and I'm not sure why. An exception is thrown when I call CreateInputLayout:

Exception thrown at 0x00007FFD9B8EA388 in MyGame.exe: Microsoft C++ exception: _com_error at memory location 0x0000000D4D18ED30.

Maybe you all can point out where I'm going wrong here?

    void Renderer::InitPipeline()
    {
        // load and compile the two shaders
        ID3D10Blob *VS, *PS;
        D3DX11CompileFromFile("Shaders.shader", 0, 0, "VShader", "vs_4_0", 0, 0, 0, &VS, 0, 0);
        D3DX11CompileFromFile("Shaders.shader", 0, 0, "PShader", "ps_4_0", 0, 0, 0, &PS, 0, 0);

        // encapsulate both shaders into shader objects
        dev->CreateVertexShader(VS->GetBufferPointer(), VS->GetBufferSize(), NULL, &pVS);
        dev->CreatePixelShader(PS->GetBufferPointer(), PS->GetBufferSize(), NULL, &pPS);

        // set the shader objects
        devcon->VSSetShader(pVS, 0, 0);
        devcon->PSSetShader(pPS, 0, 0);

        // create the input layout object
        D3D11_INPUT_ELEMENT_DESC ied[] =
        {
            { "POSITION", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, 0, D3D11_INPUT_PER_VERTEX_DATA, 0 },
            { "COLOR", 0, DXGI_FORMAT_R32G32B32A32_FLOAT, 0, 12, D3D11_INPUT_PER_VERTEX_DATA, 0 },
            // Add another input for the instance buffer
            { "INSTANCE", 0, DXGI_FORMAT_R32G32B32_FLOAT, 1, 0, D3D11_INPUT_PER_INSTANCE_DATA, 1}
        };

        dev->CreateInputLayout(ied, 2, VS->GetBufferPointer(), VS->GetBufferSize(), &pLayout);
        devcon->IASetInputLayout(pLayout);
    }

If I have not provided enough information, please help me understand what is needed so I can provide the info.
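
Two things in the code above may be worth checking, though neither is confirmed as the cause. First, the ied array has three entries but the call to CreateInputLayout passes 2 as the element count, so the INSTANCE entry is never seen; if the vertex shader reads that semantic, the call would be expected to fail. Second, none of the return values are checked, so the actual HRESULT is lost. Below is a minimal sketch of both ideas. It uses stand-in types so it compiles without the Windows SDK: ElementDesc, HResult, Failed, Check, kLayout and kLayoutCount are hypothetical names for illustration, not part of Direct3D.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>

// Stand-in for D3D11_INPUT_ELEMENT_DESC (hypothetical, trimmed to a few
// fields) so the sketch builds without the Windows SDK.
struct ElementDesc {
    const char* semanticName;
    unsigned    inputSlot;
    unsigned    alignedByteOffset;
    bool        perInstance;
};

// The same three entries as the ied[] array in the post.
constexpr ElementDesc kLayout[] = {
    { "POSITION", 0, 0,  false },
    { "COLOR",    0, 12, false },
    { "INSTANCE", 1, 0,  true  },
};

// Derive the element count from the array instead of hard-coding it,
// so adding an entry can never leave the count stale.
constexpr unsigned kLayoutCount =
    static_cast<unsigned>(sizeof(kLayout) / sizeof(kLayout[0]));

// Stand-in for HRESULT: a signed 32-bit code where negative means failure,
// which is the same test the FAILED() macro performs.
using HResult = std::int32_t;
constexpr bool Failed(HResult hr) { return hr < 0; }

// Hypothetical helper: log the failing call and its code, return success.
inline bool Check(HResult hr, const char* what) {
    assert(what != nullptr);
    if (Failed(hr)) {
        std::fprintf(stderr, "%s failed, HRESULT = 0x%08X\n",
                     what, static_cast<unsigned>(hr));
        return false;
    }
    return true;
}
```

In the real function this would amount to passing the computed count (3 here) instead of the literal 2, and wrapping each call, for example the result of CreateInputLayout, in a check like the one above before using VS, PS or pLayout. Knowing which call fails and with what HRESULT should make the problem much easier to narrow down.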