First, I apologize, especially to the moderators, for sending a post with large images (they are necessary to explain what is going on).

Since you mentioned using an image editor, I know where the devil is hiding. I'm using GIMP myself, and there is one problem: the alpha channel contains height.

This is what the image looks like in GIMP (I'm using Ceiling as an example):

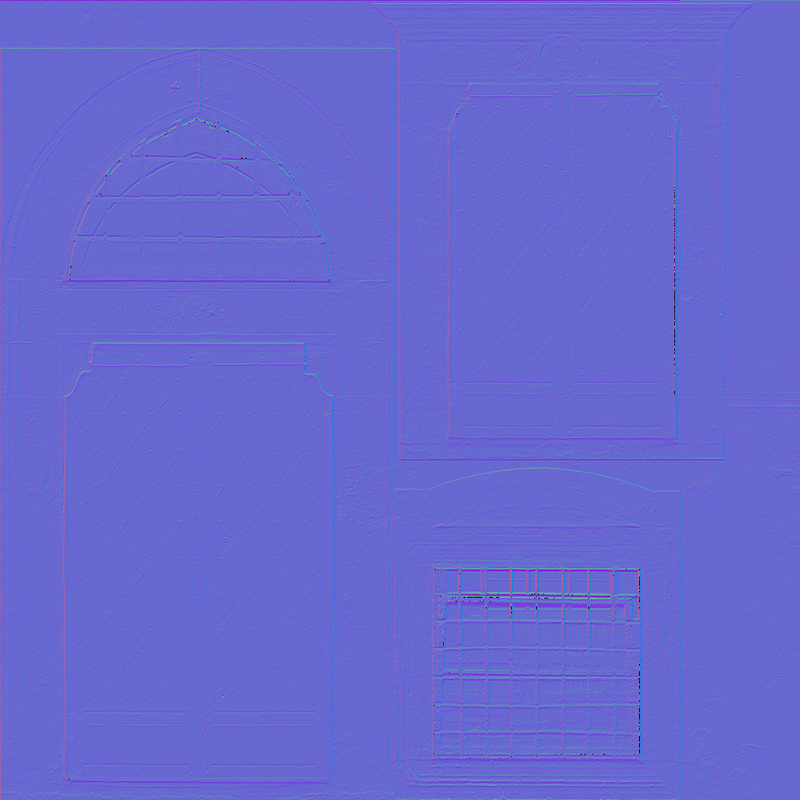

Decomposing this into channels gives you these four images (Red, Green, Blue and Alpha):

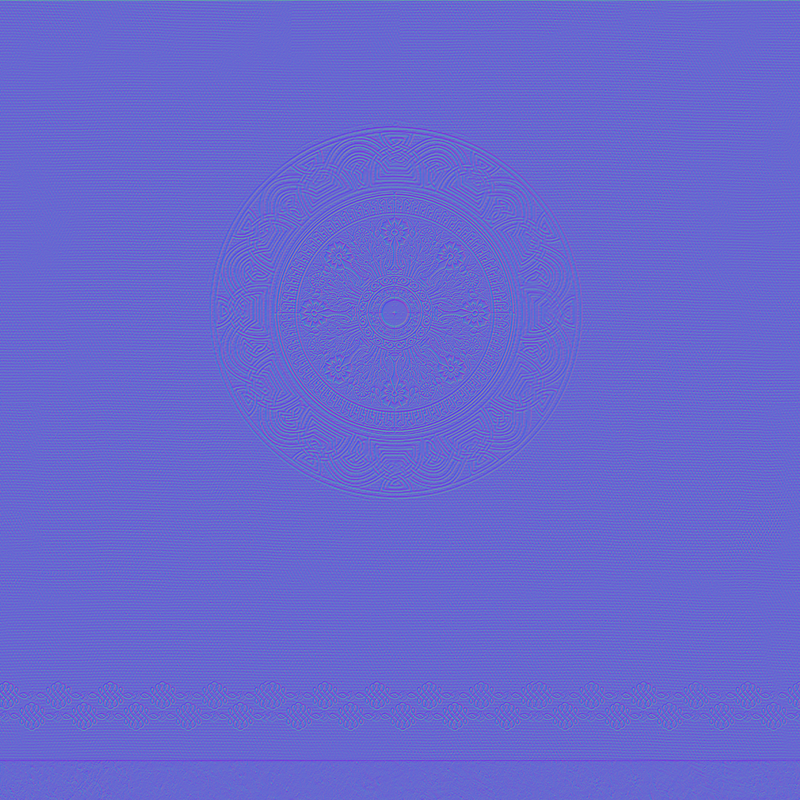

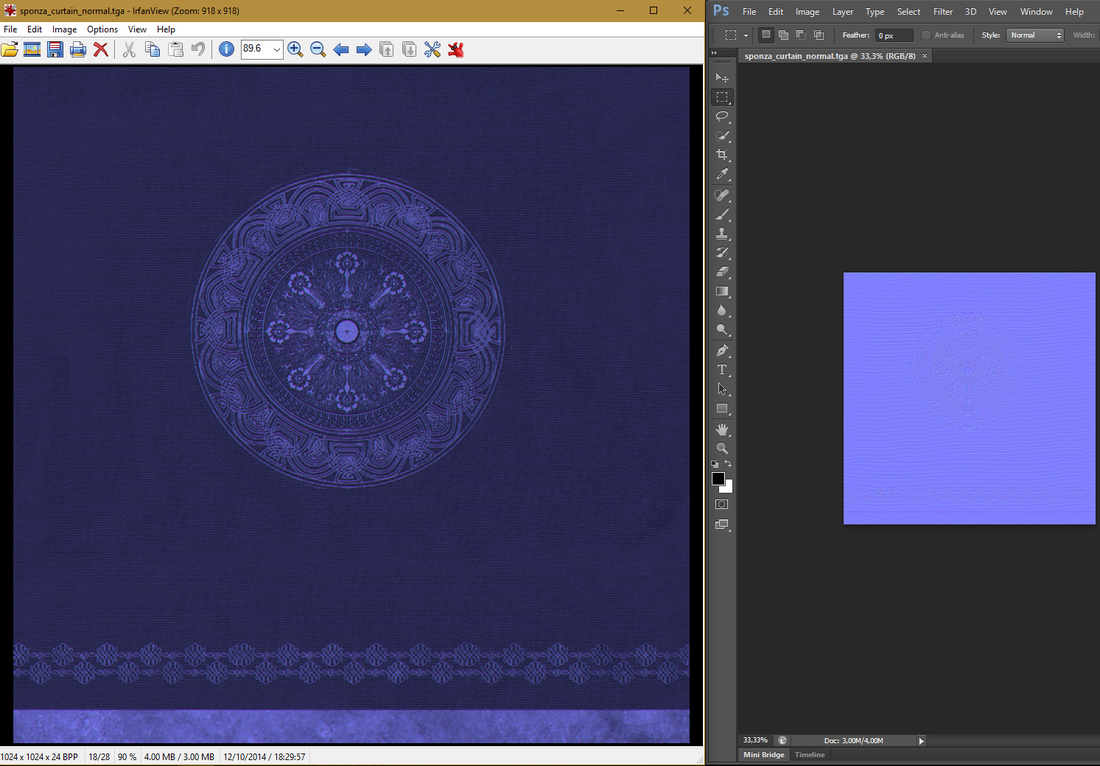

You need to decompose the image to RGBA and then compose it again from just RGB (i.e. set alpha to 255) to obtain a normal map. Look at two examples: in the first one I set alpha to 255 and recomposed the image; in the second one I just removed alpha:

I assume you recognize the second one. To explain what is going on, you need to look at how the software removes the alpha channel from the image.
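The decompose and recompose step described above can be sketched in plain Python. This is only an illustration on a list of `(r, g, b, a)` tuples; the helper names `split_channels` and `compose_opaque` are made up for this example and are not GIMP functions.

```python
def split_channels(pixels):
    """Decompose a list of (r, g, b, a) tuples into four channel lists."""
    reds = [p[0] for p in pixels]
    greens = [p[1] for p in pixels]
    blues = [p[2] for p in pixels]
    alphas = [p[3] for p in pixels]
    return reds, greens, blues, alphas


def compose_opaque(reds, greens, blues):
    """Recompose RGBA pixels from RGB only, forcing alpha to 255."""
    return [(r, g, b, 255) for r, g, b in zip(reds, greens, blues)]


# Two hypothetical pixels whose alpha channel stores height.
pixels = [(128, 128, 255, 40), (140, 120, 250, 200)]
r, g, b, height = split_channels(pixels)
normal_map = compose_opaque(r, g, b)
```

The RGB values pass through untouched, and the height stays available as a separate channel if you still need it.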

I will quote directly from the GIMP source code here (I had to dig a bit). The callback that removes alpha is this:

```c
void
layers_alpha_remove_cmd_callback (GtkAction *action,
                                  gpointer   data)
{
  GimpImage *image;
  GimpLayer *layer;
  return_if_no_layer (image, layer, data);

  if (gimp_drawable_has_alpha (GIMP_DRAWABLE (layer)))
    {
      gimp_layer_remove_alpha (layer, action_data_get_context (data));
      gimp_image_flush (image);
    }
}
```

So the procedure you're interested in is `gimp_layer_remove_alpha`, which is:

```c
void
gimp_layer_remove_alpha (GimpLayer   *layer,
                         GimpContext *context)
{
  GeglBuffer *new_buffer;
  GimpRGB     background;

  g_return_if_fail (GIMP_IS_LAYER (layer));
  g_return_if_fail (GIMP_IS_CONTEXT (context));

  if (! gimp_drawable_has_alpha (GIMP_DRAWABLE (layer)))
    return;

  new_buffer =
    gegl_buffer_new (GEGL_RECTANGLE (0, 0,
                                     gimp_item_get_width  (GIMP_ITEM (layer)),
                                     gimp_item_get_height (GIMP_ITEM (layer))),
                     gimp_drawable_get_format_without_alpha (GIMP_DRAWABLE (layer)));

  gimp_context_get_background (context, &background);
  gimp_pickable_srgb_to_image_color (GIMP_PICKABLE (layer),
                                     &background, &background);

  gimp_gegl_apply_flatten (gimp_drawable_get_buffer (GIMP_DRAWABLE (layer)),
                           NULL, NULL,
                           new_buffer, &background,
                           gimp_layer_get_real_composite_space (layer));

  gimp_drawable_set_buffer (GIMP_DRAWABLE (layer),
                            gimp_item_is_attached (GIMP_ITEM (layer)),
                            C_("undo-type", "Remove Alpha Channel"),
                            new_buffer);
  g_object_unref (new_buffer);
}
```

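There is no need to go any further into the code base. As you can see, the background color is taken from the context and converted to the image's color space by these two calls:

`gimp_context_get_background`

`gimp_pickable_srgb_to_image_color`

The layer is then flattened against that background by `gimp_gegl_apply_flatten`. If you dig a bit further (you would also need to dig into GEGL), you will find that, with a black background and A_in scaled to the 0..1 range, the operation actually performed when removing alpha is: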

R_out = R_in * A_in

G_out = G_in * A_in

B_out = B_in * A_in

The resulting image then replaces the layer's previous buffer (that is what the rest of the function does).
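To see why this ruins a normal map whose alpha holds height, here is a minimal per-pixel sketch of flattening against a background color. It assumes the usual compositing formula `out = in * A + background * (1 - A)` on 8-bit values; GIMP performs this in the layer's composite space, which may not match this simple arithmetic exactly. The function name `flatten_pixel` is hypothetical.

```python
def flatten_pixel(rgba, background=(0, 0, 0)):
    """Composite one (r, g, b, a) pixel over an opaque background color.

    Assumption: 8-bit channels and plain (non-gamma-aware) blending.
    With a black background this reduces to channel * alpha.
    """
    r, g, b, a = rgba
    alpha = a / 255.0
    return tuple(
        round(channel * alpha + bg * (1.0 - alpha))
        for channel, bg in zip((r, g, b), background)
    )


# A normal pointing straight up, stored with a low height in alpha.
darkened = flatten_pixel((128, 128, 255, 51))
# The same normal with full alpha survives unchanged.
intact = flatten_pixel((128, 128, 255, 255))
```

Every texel with a height below 255 gets pulled toward the background color, so the stored direction is scaled or shifted instead of being preserved.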

I can't speak for Photoshop (I've mostly worked with GIMP so far), but I'd assume it does a similar, if not the same, transformation. So you're out of luck using it for the conversion. The operation you actually need is:

R = R_in;

G = G_in;

B = B_in;

l = sqrt(R * R + G * G + B * B);

R_out = R / l;

G_out = G / l;

B_out = B / l;
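The pseudocode above translates almost line for line into Python. This is a sketch of the arithmetic only, not a GIMP plug-in; the function names are made up, and the guard against a zero-length vector is an addition that the pseudocode does not mention. Whether the channels should first be remapped from 0..255 to -1..1 depends on how the normal map is encoded and is left out here.

```python
import math


def normalize_rgb(r, g, b):
    """Scale (r, g, b) to unit length, as in the pseudocode above."""
    length = math.sqrt(r * r + g * g + b * b)
    if length == 0.0:
        # Added guard: a black pixel has no direction to normalize.
        return (0.0, 0.0, 0.0)
    return (r / length, g / length, b / length)


def drop_alpha_and_normalize(rgba):
    """Ignore the alpha (height) channel and normalize the RGB part."""
    r, g, b, _height = rgba
    return normalize_rgb(r, g, b)


result = drop_alpha_and_normalize((3, 4, 0, 77))
```

The key point is that alpha never touches the RGB values: it is read and discarded.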

Something as simple as this. It could be done, for example, as a Python plug-in for GIMP.