What does one normally use as the "second texture" to blend with the image, based on the depth buffer, to simulate depth-of-field? Is it just a downscaled version of the image? And if so, which factor does one normally use: 1/16, 1/8, etc.?

Depth-of-field

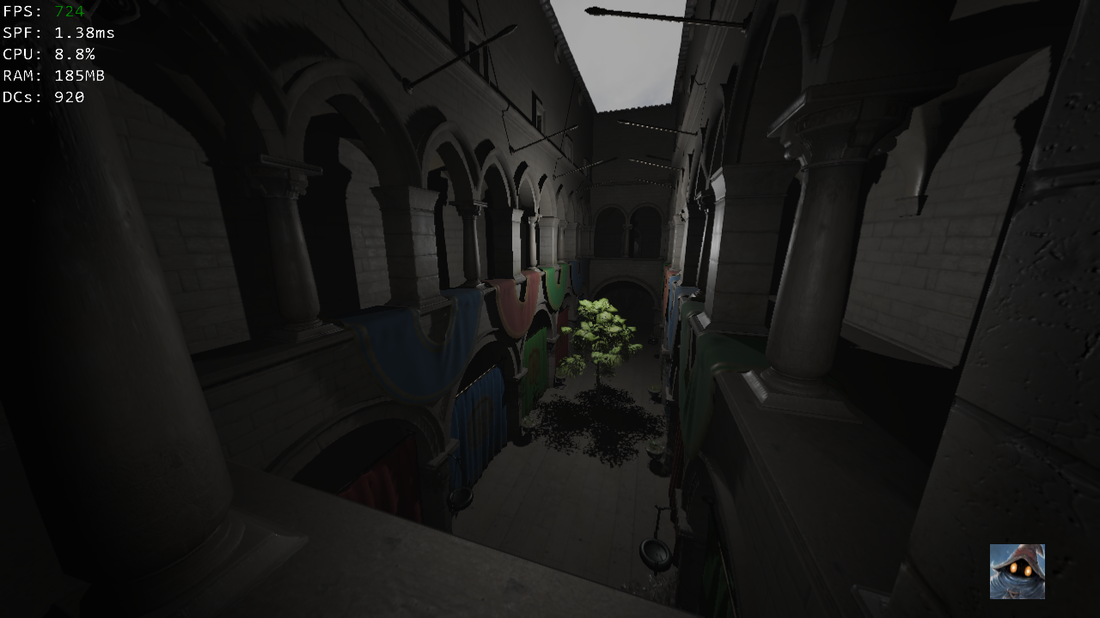

One doesn't "normally use" the blur + blend approach. It looks very unappealing, unrealistic, and doesn't really look like DOF.


A better approach, and still a pretty simple one to implement if it's your first time, is to perform a simple disk average at full resolution instead of blending with a blurred image. Scale the sample offsets by the blur amount that you would have used for the blending. It would look like:

```hlsl
float4 color = 0.0;

for (int i = 0; i < numSamples; ++i)
{
    // Offset this pixel's UV by one point of the kernel shape, scaled by the blur strength.
    float2 sampleOffset = screenUV + offsets[i] * blurScale;
    color += Texture.SampleLevel(Sampler, sampleOffset, 0);
}

// Plain (unweighted) average of all samples.
color /= numSamples;
```

Here `blurScale` is the blur strength you would have computed for the blend. The offsets are just coordinates forming a shape (circle, hexagon, whatever you want).
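A CPU-side sketch of the same disk average, written in C++ so it can be run and checked outside a shader. The names `Offset`, `Image`, `MakeDiskOffsets` and `DiskAverage` are hypothetical; the image is a single-channel float buffer read with nearest-neighbour, clamp-to-edge addressing (standing in for `Texture.SampleLevel`), and `blurScale` is measured in pixels rather than UV units. The offsets come from a golden-angle (Vogel) spiral, which is only one way to fill a disk evenly; a ring or hexagon plugs in the same way.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Offset { float x, y; };

// Hypothetical helper: numSamples points spread over the unit disk using a
// golden-angle (Vogel) spiral. Any other kernel shape could be used instead.
std::vector<Offset> MakeDiskOffsets(int numSamples) {
    const float kGoldenAngle = 2.39996323f;  // pi * (3 - sqrt(5)) radians
    std::vector<Offset> offsets;
    for (int i = 0; i < numSamples; ++i) {
        const float r = std::sqrt((i + 0.5f) / numSamples);  // radius <= 1
        const float theta = i * kGoldenAngle;
        offsets.push_back({r * std::cos(theta), r * std::sin(theta)});
    }
    return offsets;
}

// Hypothetical grayscale image with clamp-to-edge, nearest-neighbour reads.
struct Image {
    int width, height;
    std::vector<float> pixels;
    float At(float x, float y) const {
        const int xi = std::clamp(static_cast<int>(std::lround(x)), 0, width - 1);
        const int yi = std::clamp(static_cast<int>(std::lround(y)), 0, height - 1);
        return pixels[yi * width + xi];
    }
};

// Disk average at full resolution: blurScale (pixels) scales the unit-disk
// offsets, so blurScale == 0 returns the unblurred pixel.
float DiskAverage(const Image& img, int x, int y,
                  const std::vector<Offset>& offsets, float blurScale) {
    float color = 0.0f;
    for (const Offset& o : offsets) {
        color += img.At(x + o.x * blurScale, y + o.y * blurScale);
    }
    return color / static_cast<float>(offsets.size());
}
```

In a full pass, `blurScale` would differ per pixel, which is exactly where depth comes in.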

That said, if you need to stick to the blur approach, I'd say use whatever downscale factor looks good to you. There's no "right answer" when it comes to these things.
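For reference, the blur + blend approach from the question can be sketched as follows, so the downscale factor becomes a single tunable parameter. This is a minimal C++ sketch, assuming a single-channel image whose dimensions are divisible by the factor, and a per-pixel blur factor in [0, 1] that has already been derived from depth; `Image`, `Downscale` and `BlurBlend` are hypothetical names. It uses a box filter and nearest upsampling for brevity, whereas a GPU version would normally rely on bilinear filtering.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical grayscale image, row-major.
struct Image {
    int width, height;
    std::vector<float> pixels;
    float& At(int x, int y) { return pixels[y * width + x]; }
    float At(int x, int y) const { return pixels[y * width + x]; }
};

// Box-filter downscale by an integer factor (2, 4, 8, ...).
// Assumes width and height are divisible by factor.
Image Downscale(const Image& src, int factor) {
    Image dst{src.width / factor, src.height / factor, {}};
    dst.pixels.assign(dst.width * dst.height, 0.0f);
    for (int y = 0; y < dst.height; ++y)
        for (int x = 0; x < dst.width; ++x) {
            float sum = 0.0f;
            for (int j = 0; j < factor; ++j)
                for (int i = 0; i < factor; ++i)
                    sum += src.At(x * factor + i, y * factor + j);
            dst.At(x, y) = sum / static_cast<float>(factor * factor);
        }
    return dst;
}

// Blend step: lerp(sharp, blurred, blurFactor) per pixel. The blurred image
// is read back with nearest upsampling (x / factor, y / factor).
Image BlurBlend(const Image& sharp, const Image& blurred, int factor,
                const std::vector<float>& blurFactor) {
    Image out = sharp;
    for (int y = 0; y < sharp.height; ++y)
        for (int x = 0; x < sharp.width; ++x) {
            const float t = std::clamp(blurFactor[y * sharp.width + x], 0.0f, 1.0f);
            const float b = blurred.At(x / factor, y / factor);
            out.At(x, y) = sharp.At(x, y) + (b - sharp.At(x, y)) * t;
        }
    return out;
}
```

Comparing factors of 2, 4 or 8 is then a one-argument change to `Downscale` and `BlurBlend`.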

> Styves said: There's no "right answer" when it comes to these things.

Some guys seem pretty close: https://www.siliconstudio.co.jp/en/rd/presentations/


> Styves said: [...] perform a simple disk average at full resolution instead of blending with a blurred image. Scale the samples by the blur amount that you would have used for the blending. [...]

But how does this take depth into account?

🧙

> matt77hias said: But how does this take depth into account?

Depth controls the blurScale factor. You can do a linear scale of the depth to get the blur scale, like:

```hlsl
max((depth - focusDepth) * blurAmount, 0.0)
```

I haven't tested it, but I think it would give a nice result that you can tweak easily. `focusDepth` is the depth at which the image isn't blurred (the focus point), and `blurAmount` controls how quickly the blur increases and how blurry it gets. I'm clamping it to 0 to remove the near blur, which can be disturbing in games; you can replace the `max` with `abs` if you want near blur too.
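The two variants of this mapping, sketched in C++ so they can be checked numerically. This assumes `depth` is a linear (e.g. view-space) depth, since raw perspective depth-buffer values are nonlinear; the function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Far-only blur: anything in front of focusDepth stays sharp (the max clamp).
float BlurScaleFarOnly(float depth, float focusDepth, float blurAmount) {
    return std::max((depth - focusDepth) * blurAmount, 0.0f);
}

// Near and far blur: abs instead of max, so distance from the focus plane
// in either direction increases the blur.
float BlurScaleNearAndFar(float depth, float focusDepth, float blurAmount) {
    return std::fabs((depth - focusDepth) * blurAmount);
}
```

With `focusDepth = 3` and `blurAmount = 2`, a pixel at depth 5 gets a blur scale of 4 in both versions, while a pixel at depth 1 gets 0 (far-only) or 4 (near and far).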

> DarkDrifter said: Depth controls the blurScale factor.

Ah, OK, thanks. I was confusing the scale factor (the constant part of the blur scale) with the blur scale itself.


I looked at some examples and am currently going to use something like this:

```hlsl
float GetBlurFactor(float p_view_z) {
    return smoothstep(0.0f, g_lens_radius, abs(g_focal_length - p_view_z));
}

[numthreads(GROUP_SIZE, GROUP_SIZE, 1)]
void CS(uint3 thread_id : SV_DispatchThreadID) {
    const uint2 location = g_viewport_top_left + thread_id.xy;
    if (any(location >= g_display_resolution)) {
        return;
    }

    const float p_view_z = DepthToViewZ(g_depth_texture[location]);
    const float blur_factor = GetBlurFactor(p_view_z);
    if (blur_factor <= 0.0f) {
        return;
    }

    const float coc_radius = blur_factor * g_max_coc_radius;

    float4 hdr_sum = g_input_image_texture[location];
    float4 contribution_sum = 1.0f;

    [unroll]
    for (uint i = 0u; i < 12u; ++i) {
        const float2 location_i = location + g_disk_offsets[i] * coc_radius;
        const float4 hdr_i = g_input_image_texture[location_i];
        const float p_view_z_i = DepthToViewZ(g_depth_texture[location_i]);
        const float blur_factor_i = GetBlurFactor(p_view_z_i);
        const float contribution_i = (p_view_z_i > p_view_z) ? 1.0f : blur_factor_i;

        hdr_sum += hdr_i * contribution_i;
        contribution_sum += contribution_i;
    }

    const float4 inv_contribution_sum = 1.0f / contribution_sum;
    g_output_image_texture[location] = hdr_sum * inv_contribution_sum;
}
```
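To check what `GetBlurFactor` returns for a given depth, here is a CPU mirror in C++. `Smoothstep` reimplements HLSL's `smoothstep` (Hermite interpolation of the clamped parameter), and `CocRadius` is a hypothetical helper matching the `coc_radius` line in the shader. It assumes `lens_radius > 0`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Same definition as HLSL smoothstep: t = saturate((x - edge0) / (edge1 - edge0)),
// result = t * t * (3 - 2 * t).
float Smoothstep(float edge0, float edge1, float x) {
    const float t = std::clamp((x - edge0) / (edge1 - edge0), 0.0f, 1.0f);
    return t * t * (3.0f - 2.0f * t);
}

// Mirror of GetBlurFactor: 0 at the focal distance, rising smoothly to 1
// once |focal_length - view_z| reaches lens_radius.
float GetBlurFactor(float view_z, float focal_length, float lens_radius) {
    return Smoothstep(0.0f, lens_radius, std::fabs(focal_length - view_z));
}

// CoC radius in pixels, as in the shader: blur_factor * max CoC radius.
float CocRadius(float view_z, float focal_length, float lens_radius,
                float max_coc_radius) {
    return GetBlurFactor(view_z, focal_length, lens_radius) * max_coc_radius;
}
```

Halfway into the `lens_radius` band the factor is exactly 0.5, because smoothstep is symmetric around its midpoint.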


> matt77hias said: `const float contribution_i = (p_view_z_i > p_view_z) ? 1.0f : blur_factor_i;`

Would it look better if you used this?

```hlsl
contribution_i = (p_view_z_i > p_view_z) ? blur_factor_i : 0;
```

I assume this way only pixels behind the current one contribute. Does this avoid or cause artifacts?

Maybe using a range of z differences instead of a hard boolean transition would get the best of both worlds.
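One possible reading of the "range of z differences" idea, as a hypothetical C++ helper (not code from the thread): replace the hard `p_view_z_i > p_view_z` test with a smoothstep over the depth difference, using an assumed tuning parameter `z_range` in view-space units. With `front_weight = blur_factor_i` and `behind_weight = 1` it softens the original rule; with `front_weight = 0` and `behind_weight = blur_factor_i` it softens the variant suggested above.

```cpp
#include <algorithm>
#include <cassert>

// Same definition as HLSL smoothstep.
float Smoothstep(float edge0, float edge1, float x) {
    const float t = std::clamp((x - edge0) / (edge1 - edge0), 0.0f, 1.0f);
    return t * t * (3.0f - 2.0f * t);
}

// Soft version of the hard depth test in the gather loop.
// dz = p_view_z_i - p_view_z (positive: the sample lies behind the center).
// dz <= 0 gives front_weight, dz >= z_range gives behind_weight, and the
// weight blends smoothly in between. Assumes z_range > 0.
float SoftContribution(float p_view_z, float p_view_z_i, float z_range,
                       float front_weight, float behind_weight) {
    const float s = Smoothstep(0.0f, z_range, p_view_z_i - p_view_z);
    return front_weight + (behind_weight - front_weight) * s;
}
```

As `z_range` shrinks toward 0 this approaches the original hard test, so it can be tuned between the two behaviours.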

> matt77hias said: `const float4 inv_contribution_sum = 1.0f / contribution_sum; g_output_image_texture[location] = hdr_sum * inv_contribution_sum;`

Is it intentional to use float4 where a single float would do?
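On the float4 question: all four components of `contribution_sum` always hold the same value, so the output is unchanged and a scalar just avoids redundant work. A C++ sketch of the accumulation with one scalar weight per sample (`Float4` and `WeightedAverage` are hypothetical names standing in for the shader's types and loop):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical RGBA value, standing in for HLSL float4.
struct Float4 { float r, g, b, a; };

// Weighted average where each sample has one scalar weight. The weight scales
// all four channels, so the running weight total is a single float.
// Assumes weights.size() == samples.size() and a positive weight total
// (the shader ensures this by starting with the center pixel at weight 1).
Float4 WeightedAverage(const std::vector<Float4>& samples,
                       const std::vector<float>& weights) {
    Float4 sum{0.0f, 0.0f, 0.0f, 0.0f};
    float weight_sum = 0.0f;
    for (std::size_t i = 0; i < samples.size(); ++i) {
        sum.r += samples[i].r * weights[i];
        sum.g += samples[i].g * weights[i];
        sum.b += samples[i].b * weights[i];
        sum.a += samples[i].a * weights[i];
        weight_sum += weights[i];
    }
    const float inv_weight_sum = 1.0f / weight_sum;
    return {sum.r * inv_weight_sum, sum.g * inv_weight_sum,
            sum.b * inv_weight_sum, sum.a * inv_weight_sum};
}
```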

> JoeJ said: Is it intended to use float4 where you could use just one float?

Nice catch!

> JoeJ said: I assume this way only pixels behind the current one contribute. Does this avoid or cause artifacts?
>
> Maybe using a range of z differences instead of a hard boolean transition would get the best of both worlds.

I'll have some renderings in a few minutes ;p


> JoeJ said: I assume this way only pixels behind the current one contribute. Does this avoid or cause artifacts?
>
> Maybe using a range of z differences instead of a hard boolean transition would get the best of both worlds.

That kind of eliminates all blur?

Whereas the original:

I use:

```cpp
camera_buffer.m_lens_radius    = 0.10f;
camera_buffer.m_focal_length   = 3.0f;
camera_buffer.m_max_coc_radius = 10.0f;

camera_buffer.m_lens_radius    = 7.0f;
camera_buffer.m_focal_length   = 10.0f;
camera_buffer.m_max_coc_radius = 3.0f;
```
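Plugging these two parameter sets into `GetBlurFactor` makes the difference between them concrete. This assumes `m_lens_radius`, `m_focal_length` and `m_max_coc_radius` end up in `g_lens_radius`, `g_focal_length` and `g_max_coc_radius`; write $r$, $f$ and $R$ for them and $z$ for view-space depth:

$$
b(z) = \operatorname{smoothstep}\bigl(0, r, |f - z|\bigr) = t^2 (3 - 2t), \qquad t = \min\!\left(\frac{|f - z|}{r}, 1\right), \qquad \mathrm{CoC}(z) = b(z)\,R
$$

With $r = 0.1$, $f = 3$, $R = 10$, $b(z) = 1$ whenever $|3 - z| \ge 0.1$, so everything outside $2.9 < z < 3.1$ gets the full 10-pixel radius. With $r = 7$, $f = 10$, $R = 3$, a depth of $z = 5$ gives $t = 5/7 \approx 0.714$ and $b \approx 0.802$, i.e. a radius of about 2.4 pixels; only $|10 - z| \ge 7$ reaches the full 3 pixels.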
